Graph Theory - Graph Laplacian

Graph Laplacian

The graph Laplacian is a matrix used to study the structure and properties of graphs. It captures several aspects of a graph, such as connectivity, diffusion processes, and spectral properties.

The Graph Laplacian of a graph G = (V, E) is a matrix that contains information about the graph's structure, specifically its vertices and edges. It is defined as −

L = D - A

Where,

- L is the Laplacian matrix.

- D is the degree matrix, a diagonal matrix where each diagonal entry D(i, i) is the degree of vertex i (the number of edges incident to it).

- A is the adjacency matrix, where A(i, j) = 1 if there is an edge between vertices i and j, and 0 otherwise.

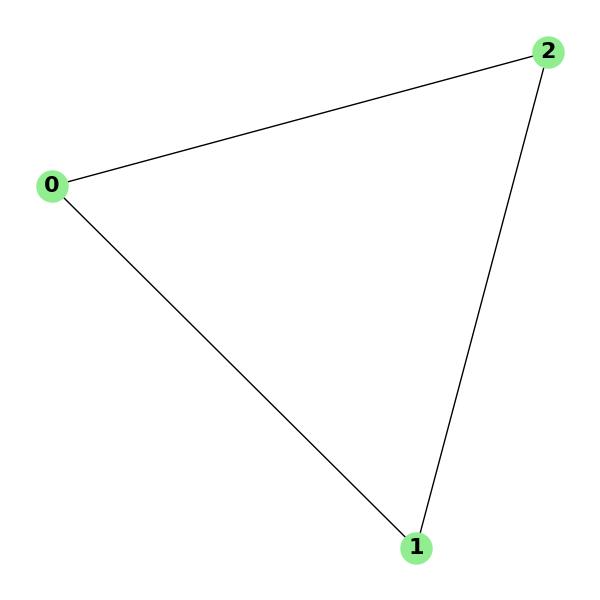

Example: Laplacian Matrix Calculation

Consider a simple graph with 3 vertices connected in a cycle (a triangle). The adjacency matrix and degree matrix are −

Adjacency matrix A: [[0, 1, 1], [1, 0, 1], [1, 1, 0]]

Degree matrix D: [[2, 0, 0], [0, 2, 0], [0, 0, 2]]

Laplacian matrix L: [[ 2, -1, -1], [-1, 2, -1], [-1, -1, 2]]

The Laplacian matrix of this graph is a symmetric matrix that represents the graph's structure in a concise form. Each row and column corresponds to a vertex, and the matrix encodes information about how the vertices are connected.
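The calculation above can be reproduced in a few lines. This is a minimal sketch, assuming NumPy is available; the helper name `laplacian_from_edges` and the 0-based vertex numbering are illustrative choices, not part of the original text.

```python
import numpy as np

def laplacian_from_edges(num_vertices, edges):
    """Return (A, D, L) for an undirected simple graph given as an edge list."""
    A = np.zeros((num_vertices, num_vertices), dtype=int)
    for i, j in edges:
        A[i, j] = 1
        A[j, i] = 1  # undirected: record the edge in both directions
    D = np.diag(A.sum(axis=1))  # vertex degrees on the diagonal
    return A, D, D - A

# Triangle on vertices 0, 1, 2 (0-based indices are an assumption of this sketch)
A, D, L = laplacian_from_edges(3, [(0, 1), (1, 2), (0, 2)])
print(L)
```

Each row of `L` sums to zero, because the degree on the diagonal is cancelled by one -1 entry per neighbor.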

Properties of the Graph Laplacian

The Graph Laplacian has several important properties −

- Symmetry: The Laplacian matrix is symmetric if the graph is undirected, meaning L = L^T.

- Eigenvalues: The eigenvalues of the Laplacian matrix reveal important structural properties of the graph. For an undirected graph they are all real and nonnegative. The smallest eigenvalue is always 0, and its multiplicity equals the number of connected components in the graph.

- Diagonal Entries: The diagonal entries of the Laplacian matrix correspond to the degree of each vertex. This provides information about the local connectivity of vertices.

- Sparsity: The Laplacian matrix is generally sparse, especially for large graphs, since many graphs that arise in practice have far fewer edges than the number of possible edges.
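The properties listed above are easy to check numerically on a small case. The following is a sketch only, assuming NumPy; the example graph (a path 0-1-2 plus a separate edge 3-4) and all variable names are hypothetical.

```python
import numpy as np

# Hypothetical example graph: a path 0-1-2 plus a separate edge 3-4
A = np.zeros((5, 5), dtype=int)
for i, j in [(0, 1), (1, 2), (3, 4)]:
    A[i, j] = A[j, i] = 1

degrees = A.sum(axis=1)
L = np.diag(degrees) - A

is_symmetric = np.array_equal(L, L.T)                     # symmetry: L equals its transpose
diagonal_is_degree = np.array_equal(np.diag(L), degrees)  # diagonal entries are the degrees
rows_sum_to_zero = bool(np.all(L.sum(axis=1) == 0))       # the reason 0 is always an eigenvalue
nonzero_fraction = np.count_nonzero(L) / L.size           # a rough measure of sparsity

print(is_symmetric, diagonal_is_degree, rows_sum_to_zero, nonzero_fraction)
```

For large graphs a sparse matrix format would normally replace the dense array used here; the dense form is kept only to make the checks easy to read.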

Example: Eigenvalues of the Laplacian Matrix

Consider the same graph from the previous example (the cycle graph with 3 vertices). The Laplacian matrix was −

Laplacian matrix L: [[ 2, -1, -1], [-1, 2, -1], [-1, -1, 2]]

Eigenvalues of the Laplacian matrix: [0, 3, 3]

In this case, the eigenvalues are 0, 3, and 3. The eigenvalue 0 has multiplicity 1, which confirms that the graph is connected. The repeated eigenvalue 3 reflects the symmetry of the triangle, and in general larger nonzero eigenvalues indicate a more tightly connected graph.
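Eigenvalues of a Laplacian can also be computed numerically. The sketch below assumes NumPy and uses `numpy.linalg.eigvalsh`, which is intended for symmetric matrices and returns eigenvalues in ascending order. The helper names and the tolerance `tol` are illustrative assumptions.

```python
import numpy as np

def laplacian_eigenvalues(adjacency):
    """Eigenvalues of L = D - A for an undirected graph, in ascending order."""
    A = np.asarray(adjacency, dtype=float)
    L = np.diag(A.sum(axis=1)) - A
    return np.linalg.eigvalsh(L)

def count_components(adjacency, tol=1e-9):
    """Count eigenvalues that are numerically zero (tol is an assumed threshold)."""
    return int(np.sum(np.abs(laplacian_eigenvalues(adjacency)) < tol))

triangle = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
# Two disjoint edges: 0-1 and 2-3
two_edges = [[0, 1, 0, 0], [1, 0, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]]

tri_eigs = laplacian_eigenvalues(triangle)  # approximately 0, 3, 3
print(tri_eigs, count_components(triangle), count_components(two_edges))
```

Floating-point results are rarely exactly zero, which is why the count compares against a small tolerance instead of testing for equality.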

Applications of the Graph Laplacian

The Graph Laplacian is used in various applications, such as −

- Graph Connectivity: The multiplicity of the eigenvalue 0 of the Laplacian matrix indicates the number of connected components in a graph. A graph is connected if and only if the eigenvalue 0 has multiplicity 1.

- Spectral Clustering: Spectral clustering algorithms use the Laplacian matrix to partition a graph into clusters based on the eigenvectors corresponding to the smallest eigenvalues. This technique is commonly used in machine learning for community detection and data segmentation.

- Diffusion Processes: The Laplacian matrix is used to model diffusion processes, such as heat diffusion or random walks, on graphs. The eigenvalues and eigenvectors determine how quickly diffusion spreads through the network.

- Graph Laplacian Regularization: In machine learning, the Laplacian matrix is commonly used for regularization in graph-based semi-supervised learning, where the goal is to predict labels for nodes in a graph using a combination of labeled and unlabeled data.
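As an illustration of the diffusion item above, heat spreading on a graph can be simulated with repeated small steps of the form x ← x − dt · L x. This is a minimal sketch under stated assumptions: NumPy, a simple explicit time step, and hypothetical values for `dt` and `steps` chosen small enough to be stable on this graph.

```python
import numpy as np

# Path graph 0-1-2-3
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
L = np.diag(A.sum(axis=1)) - A

def diffuse(x0, L, dt=0.1, steps=500):
    """Explicit Euler steps of dx/dt = -L x (dt and steps are assumed values)."""
    x = np.asarray(x0, dtype=float).copy()
    for _ in range(steps):
        x = x - dt * (L @ x)  # each vertex moves toward the values of its neighbors
    return x

# All heat starts on vertex 0
heat = diffuse([1.0, 0.0, 0.0, 0.0], L)
print(heat)
```

The total amount of heat stays constant, and on a connected graph the values approach the average of the starting values. How fast this happens depends on the smallest nonzero eigenvalue of L.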

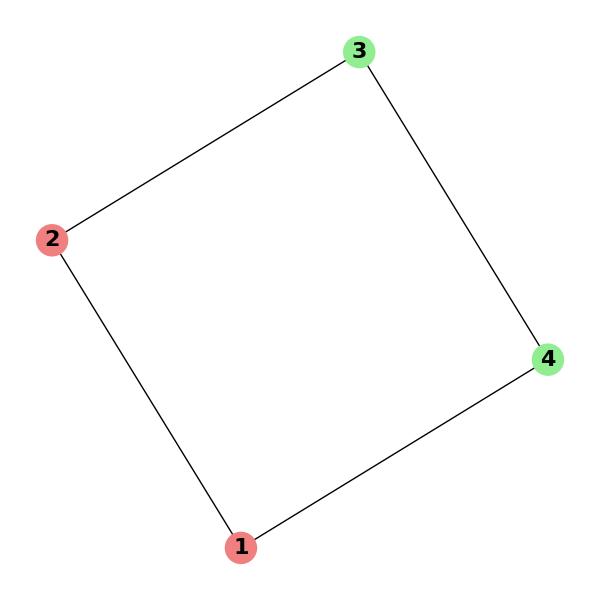
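For the regularization use case, the quantity usually penalized is the quadratic form f^T L f, which equals the sum of (f_i − f_j)^2 over all edges. It is small when connected nodes receive similar values. The sketch below assumes NumPy; the function names and the example label vectors are hypothetical.

```python
import numpy as np

edges = [(0, 1), (1, 2), (2, 3)]  # path graph 0-1-2-3
n = 4
A = np.zeros((n, n))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0
L = np.diag(A.sum(axis=1)) - A

def smoothness_penalty(f, L):
    """Quadratic form f^T L f."""
    f = np.asarray(f, dtype=float)
    return float(f @ L @ f)

def edge_sum(f, edges):
    """The same quantity written as a sum of squared differences over edges."""
    return float(sum((f[i] - f[j]) ** 2 for i, j in edges))

smooth = [1.0, 1.0, 1.0, 1.0]   # neighbors agree everywhere
rough = [1.0, -1.0, 1.0, -1.0]  # neighbors always disagree

print(smoothness_penalty(smooth, L), smoothness_penalty(rough, L))
```

A semi-supervised method would typically add this penalty, scaled by a weight, to a loss measured on the labeled nodes only.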

Example: Spectral Clustering

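Consider a cycle graph with 4 nodes, where each node is connected to its two neighbors. The goal is to cluster the nodes into two groups. One way to do this is to use the eigenvectors corresponding to the smallest eigenvalues of the Laplacian matrix. These eigenvectors can be used as features to cluster the nodes into groups that are closely connected.

Laplacian matrix L: [[ 2, -1, 0, -1], [-1, 2, -1, 0], [ 0, -1, 2, -1], [-1, 0, -1, 2]]

Eigenvectors corresponding to the two smallest eigenvalues (0 and 2), one eigenvector per column: [[0.5, 0.5], [0.5, 0.5], [0.5, -0.5], [0.5, -0.5]]

Clustering the nodes by the sign of the second eigenvector: Group 1: [1, 2], Group 2: [3, 4]

The first eigenvector is constant and carries no clustering information, so the split comes from the second one. For this particular graph the eigenvalue 2 is repeated, so the second eigenvector is not unique, and another valid choice could give a different split.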

This example shows how spectral clustering works by partitioning the nodes into two groups based on the eigenvectors of the Laplacian matrix.
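The same idea can be sketched in code. The example below assumes NumPy and uses a different, hypothetical graph: two triangles joined by a single edge, chosen because its second-smallest eigenvalue is not repeated, so the split is unambiguous. Splitting by the sign of the second eigenvector is one simple rule; practical spectral clustering often runs k-means on several eigenvectors instead.

```python
import numpy as np

# Hypothetical graph: triangle {0, 1, 2} and triangle {3, 4, 5} joined by edge 2-3
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
n = 6
A = np.zeros((n, n))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0
L = np.diag(A.sum(axis=1)) - A

# eigh returns eigenvalues in ascending order with eigenvectors as columns
eigenvalues, eigenvectors = np.linalg.eigh(L)
fiedler = eigenvectors[:, 1]  # eigenvector of the second-smallest eigenvalue

# The overall sign of an eigenvector is arbitrary, so only the grouping matters
group_a = sorted(int(i) for i in np.flatnonzero(fiedler >= 0))
group_b = sorted(int(i) for i in np.flatnonzero(fiedler < 0))
print(group_a, group_b)
```

The two groups are the two triangles, which is the split that cuts the fewest edges.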

Normalized Laplacian Matrix

The normalized Laplacian matrix is a variation of the Laplacian matrix that accounts for vertex degrees. It is defined as −

L_norm = D^(-1/2) * L * D^(-1/2)

Where D^(-1/2) is the inverse square root of the degree matrix, a diagonal matrix whose entries are 1 / sqrt(D(i, i)). This requires every vertex to have degree at least 1. The normalized Laplacian matrix is useful in spectral clustering and graph-based signal processing because it accounts for the degree of vertices and makes the analysis more robust to variations in vertex degrees.
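A minimal sketch of this computation, assuming NumPy; the function name `normalized_laplacian` and the choice to raise an error for isolated vertices are assumptions of this example.

```python
import numpy as np

def normalized_laplacian(adjacency):
    """Symmetric normalized Laplacian: D^(-1/2) * L * D^(-1/2)."""
    A = np.asarray(adjacency, dtype=float)
    degrees = A.sum(axis=1)
    if np.any(degrees == 0):
        # The inverse square root is undefined for degree 0
        raise ValueError("graph has an isolated vertex")
    d_inv_sqrt = 1.0 / np.sqrt(degrees)
    L = np.diag(degrees) - A
    # Scaling row i and column j by d_inv_sqrt is the same as multiplying
    # by the diagonal matrix D^(-1/2) on both sides
    return d_inv_sqrt[:, None] * L * d_inv_sqrt[None, :]

# Path graph 0-1-2, with degrees 1, 2, 1
path = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
L_norm = normalized_laplacian(path)
norm_eigs = np.linalg.eigvalsh(L_norm)
print(L_norm)
print(norm_eigs)
```

After normalization every diagonal entry is 1 and all eigenvalues lie between 0 and 2, whatever the vertex degrees are.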

Cheeger's Inequality and Graph Laplacians

Cheeger's inequality relates the second-smallest eigenvalue of the normalized Laplacian matrix to the graph's conductance, a measure of how well connected the graph is. Specifically, Cheeger's inequality states that −

h(G)^2 / 2 ≤ λ2 ≤ 2 * h(G)

Where h(G) is the conductance of the graph, and λ2 is the second-smallest eigenvalue of the normalized Laplacian matrix. This inequality provides a way to bound the graph's connectivity using its spectral properties: a small λ2 means the graph has a bottleneck that can be cut cheaply, and a large λ2 means it has no such bottleneck.
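The bounds h(G)^2 / 2 ≤ λ2 ≤ 2 * h(G) can be checked by brute force on a small graph. The sketch below assumes NumPy and takes λ2 from the normalized Laplacian. It also assumes a common definition of conductance: the minimum, over vertex sets S holding at most half of the total degree, of the number of edges leaving S divided by the total degree of S. The example graph and function name are hypothetical, and the enumeration of subsets is only feasible for very small graphs.

```python
import itertools
import numpy as np

# Hypothetical graph: two triangles joined by the edge 2-3
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
n = 6
A = np.zeros((n, n))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0
degrees = A.sum(axis=1)

# Second-smallest eigenvalue of the normalized Laplacian
d_inv_sqrt = 1.0 / np.sqrt(degrees)
L_norm = d_inv_sqrt[:, None] * (np.diag(degrees) - A) * d_inv_sqrt[None, :]
lam2 = np.linalg.eigvalsh(L_norm)[1]

def conductance(A):
    """Brute-force conductance under the definition assumed in the lead-in."""
    n = len(A)
    degrees = A.sum(axis=1)
    total = degrees.sum()
    best = float("inf")
    for size in range(1, n):
        for subset in itertools.combinations(range(n), size):
            S = list(subset)
            volume = degrees[S].sum()
            if volume == 0 or volume > total / 2:
                continue  # only consider the smaller side of each cut
            T = [v for v in range(n) if v not in subset]
            cut = A[np.ix_(S, T)].sum()  # number of edges between S and T
            best = min(best, cut / volume)
    return best

h = conductance(A)
print(h ** 2 / 2, lam2, 2 * h)
```

Here the best cut removes the single edge between the two triangles, and λ2 falls between the two bounds.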