Ladner's Theorem in Automata Theory

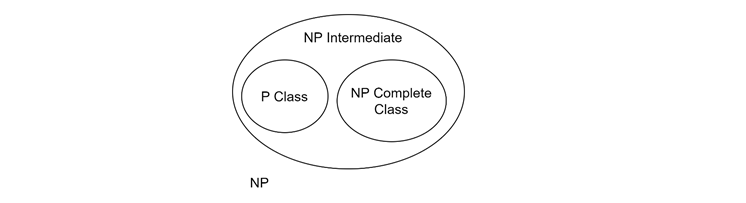

Ladner's Theorem concerns the complexity classes P and NP and the NP-complete problems. It addresses a fundamental question in computer science: if P is not equal to NP, are there problems in NP that are neither in P nor NP-complete? Ladner's Theorem answers this question by proving that such problems, known as NP-intermediate problems, do exist.

Understanding the Basics: P, NP, and NP-Complete

Before studying Ladner's Theorem, it helps to review the basics of P, NP, and NP-complete problems.

- P (Polynomial Time) − This class consists of decision problems that can be solved by a deterministic algorithm in polynomial time. In other words, if a problem is in P, there is an efficient algorithm whose running time is bounded by a polynomial in the input size.

- NP (Nondeterministic Polynomial Time) − This class includes problems for which a proposed solution can be verified in polynomial time. Every problem in P is also in NP, but it is not known whether every problem in NP can be solved in polynomial time.

- NP-Complete − These are the hardest problems in NP: a problem is NP-complete if it is in NP and every problem in NP can be reduced to it in polynomial time. If any NP-complete problem can be solved in polynomial time, then every problem in NP can also be solved in polynomial time, implying P = NP. Conversely, if P ≠ NP, then no NP-complete problem can be solved in polynomial time.
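The gap between verifying and solving can be made concrete with SAT. The following is a minimal Python sketch, not a practical solver: the list-of-clauses encoding and the names `verify` and `brute_force_solve` are assumptions chosen for illustration. Checking a proposed assignment takes time linear in the formula size, while the naive search may try all 2^n assignments.

```python
from itertools import product

# Assumed encoding: a CNF formula is a list of clauses, each clause a list of
# nonzero integers. Literal k means "variable k is true", -k means "variable k is false".

def verify(formula, assignment):
    """Check a proposed assignment; runs in time linear in the formula size."""
    return all(
        any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in formula
    )

def brute_force_solve(formula, num_vars):
    """Try all 2**num_vars assignments; exponential time in the worst case."""
    for bits in product([False, True], repeat=num_vars):
        assignment = {i + 1: bits[i] for i in range(num_vars)}
        if verify(formula, assignment):
            return assignment
    return None

# (x1 or not x2) and (x2 or x3) and (not x1 or not x3)
example = [[1, -2], [2, 3], [-1, -3]]
solution = brute_force_solve(example, 3)
```

Whether the exponential search can always be replaced by a polynomial-time algorithm is exactly the P vs NP question.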

The P vs NP Question

One of the most famous open problems in computer science is whether P = NP. If P were equal to NP, every problem whose solutions can be verified quickly could also be solved quickly. However, if P ≠ NP, then there are problems in NP that cannot be solved as efficiently as they can be verified.

NP-Intermediate Problems

This is where Ladner's Theorem comes in. It concerns NP-intermediate problems: problems that belong to NP but are neither in P nor NP-complete. They sit between the two classes, having an intermediate complexity.

Formally, a language L ∈ NP is NP-intermediate iff L ∉ P and L is not NP-complete.

In simpler terms, if P ≠ NP, then NP contains problems that are too difficult to be in P but not as hard as NP-complete problems.

Ladner's Theorem

The Statement: If P ≠ NP, then there is a language L in NP that is NP-intermediate. This means that if it turns out P is not equal to NP, then NP must contain problems that are neither easy enough to be solved in polynomial time (P) nor hard enough to be NP-complete.

Proof Overview

Ladner's Theorem is proved using a technique called diagonalization. This method is often used in theoretical computer science to construct new problems that are not part of a given set.

Special Function H

To understand the proof, let us assume a special function H: N → N with the following properties:

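- Time Complexity − H(m) can be computed in O(m³) time.

- Growth Condition − H(m) → ∞ as m → ∞ if SAT_H ∉ P.

- Constant Condition − H(m) ≤ C for all m (where C is a constant) if SAT_H ∈ P.

Here, SAT_H is a specially constructed language based on SAT (the satisfiability problem, a well-known NP-complete problem). It consists of the strings φ01^(m^H(m)), where φ is a satisfiable formula of length m = |φ|. In other words, each satisfiable formula is padded with a 0 followed by m^H(m) ones. The proof uses this function H to create a language SAT_H that is NP-intermediate, assuming P ≠ NP.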

The Construction of SAT_H

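Case 1: SAT_H ∈ P

- If SAT_H is in P, then H(m) is bounded by a constant C.

- The padding then has length at most m^C, which is polynomial in m, so this implies a polynomial-time algorithm for SAT as follows −

  - Given an input φ, compute m = |φ| (the length of φ).

  - Generate the string φ01^(m^H(m)), that is, φ followed by a 0 and m^H(m) ones.

  - Check whether this string belongs to SAT_H, using the assumed polynomial-time algorithm for SAT_H.

- This would place SAT in P and give P = NP. Since we assume P ≠ NP, this is a contradiction, showing that SAT_H ∉ P.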
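The reduction in Case 1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not part of the proof: formulas are treated as plain strings, `bounded_H` is a hypothetical stand-in for H in the case H(m) ≤ C with C = 2, and `decide_sat_h` is a hypothetical polynomial-time decider for the padded language SAT_H that the caller must supply.

```python
def pad(phi, H):
    """Map a SAT instance phi to the padded string phi + "0" + "1" * m**H(m)."""
    m = len(phi)
    return phi + "0" + "1" * (m ** H(m))

def padded_length(m, H):
    """Length of the padded string for a formula of length m."""
    return m + 1 + m ** H(m)

def sat_via_sat_h(phi, H, decide_sat_h):
    """Decide SAT with a single call to a (hypothetical) SAT_H decider."""
    return decide_sat_h(pad(phi, H))

# Hypothetical stand-in for H in Case 1: H(m) <= C = 2 for every m.
def bounded_H(m):
    return 2
```

With a bounded H the padded string has length m + 1 + m^C, so building it and running the assumed decider both take polynomial time. For example, `padded_length(4, bounded_H)` is 4 + 1 + 16 = 21.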

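Case 2: SAT_H is NP-Complete

- If SAT_H is NP-complete and P ≠ NP, then SAT_H ∉ P, so H(m) → ∞ as m increases.

- NP-completeness gives a polynomial-time reduction from SAT to SAT_H, say one running in time O(n^i) on formulas of length n. Its output has length at most O(n^i), but a string in SAT_H built from a formula ψ carries |ψ|^H(|ψ|) ones of padding. Because H grows without bound, this padding eventually exceeds any fixed polynomial in |ψ|, so for large n the formula ψ must be much shorter than the input formula.

- Applying the reduction repeatedly therefore shrinks any SAT instance to constant size in polynomial time, which would solve SAT in polynomial time and contradict P ≠ NP.

- Hence, SAT_H is not NP-complete.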
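A small numeric experiment can make the size argument in Case 2 more tangible. The Python sketch below is an illustration only: `slow_H` is a hypothetical unbounded, slowly growing stand-in for H (the floor of log₂ log₂ k, at least 1), and `max_core_length` is a helper name introduced here. It computes the longest formula ψ whose padded form ψ01^(k^H(k)), with k = |ψ|, still fits within an output bound of n^i symbols.

```python
def slow_H(k):
    """Hypothetical stand-in for H: floor(log2(log2(k))), never below 1."""
    log_k = max(k.bit_length() - 1, 0)      # floor(log2 k) for k >= 1
    return max(log_k.bit_length() - 1, 1)   # floor(log2 of that), at least 1

def max_core_length(n, i, H):
    """Largest k with k + 1 + k**H(k) <= n**i, assuming H is nondecreasing."""
    bound = n ** i
    best = 0
    k = 1
    while k + 1 + k ** H(k) <= bound:
        best = k
        k += 1
    return best
```

With these stand-ins, `max_core_length(1000, 2, slow_H)` evaluates to 255: a reduction whose output is at most 1000² symbols long can only produce padded instances whose core formula has fewer than 256 symbols, well below the input length of 1000.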

Construction of the Function H

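The construction of H is designed so that its value reflects how hard SAT_H is. In the standard textbook presentation, H(m) is the smallest index i < log log m such that the i-th Turing machine M_i decides SAT_H correctly on every input x of length at most log m within i·|x|^i steps; if no such i exists, H(m) = log log m. If SAT_H is in P, some fixed machine eventually works for all m, so H(m) stays bounded by a constant; if SAT_H is not in P, every machine eventually fails on some input, so H(m) grows without bound. Although H is defined in terms of SAT_H and SAT_H in terms of H, the definition is not circular, because H(m) depends only on the membership of strings much shorter than m.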

Limits of Diagonalization

Diagonalization is a technique used to separate complexity classes and, as in Ladner's Theorem, to construct NP-intermediate problems. However, it has its limits.

Diagonalization arguments relativize: they remain valid when every machine involved is given access to the same oracle. The Baker-Gill-Solovay theorem shows that there are oracles relative to which P = NP and oracles relative to which P ≠ NP, so a relativizing argument alone cannot separate P from NP. Ladner's Theorem avoids this barrier because it assumes P ≠ NP instead of proving it.

Examples of NP-Intermediate Problems

Ladner's Theorem implies the existence of NP-intermediate problems if P ≠ NP. Some problems believed to be NP-intermediate include −

- Computing the Discrete Logarithm − This problem involves finding the exponent x in the equation g^x ≡ h (mod p), where g, h, and p are given.

- Graph Isomorphism Problem − This problem asks whether two graphs are isomorphic, meaning they have the same structure up to a renaming of vertices.

- Integer Factorization − Like the discrete logarithm problem, this is a number-theoretic problem, but it asks for the prime factors of a given integer.

- Approximation of the Shortest Vector in a Lattice − This problem deals with approximating the length of the shortest non-zero vector in a lattice.

- Minimum Circuit Size Problem − This problem asks for the size of the smallest circuit that computes a given function, specified by its truth table.

None of these problems is known to be in P, and none is known to be NP-complete, making them candidates for being NP-intermediate.
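The discrete logarithm shows the same verify-versus-solve gap in miniature. The Python sketch below is a toy illustration with hypothetical helper names: checking a candidate exponent uses fast modular exponentiation, while the naive search steps through up to p − 1 exponents, which is exponential in the number of digits of p. Faster algorithms exist, but none is known to run in polynomial time.

```python
def verify_dlog(g, h, p, x):
    """Check that g**x is congruent to h modulo p, via fast modular exponentiation."""
    return pow(g, x, p) == h % p

def brute_force_dlog(g, h, p):
    """Try exponents 0, 1, ..., p - 2 in turn; return the first that works, else None."""
    value = 1  # invariant: value == g**x mod p at the top of each iteration
    for x in range(p - 1):
        if value == h % p:
            return x
        value = (value * g) % p
    return None

# Small example: find x with 3**x ≡ 13 (mod 17).
x = brute_force_dlog(3, 13, 17)
```

Here the search returns x = 4, since 3⁴ = 81 = 4·17 + 13.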

Conclusion

In this chapter, we explained Ladner's Theorem, which addresses the existence of NP-intermediate problems. We started by reviewing the basic classes of P, NP, and NP-complete problems and discussed the implications of the P vs NP question.

Ladner's Theorem shows that if P ≠ NP, then NP contains problems that are neither in P nor NP-complete. We also walked through the proof of Ladner's Theorem, which uses the idea of diagonalization and the construction of a special function H.