Operating System - Allocating Kernel Memory (Slab Allocation)

In the previous chapter, we discussed the Buddy System for allocating kernel memory. Its main drawback is the memory wasted through internal fragmentation, because every request is rounded up to a power-of-two block size. To address this, modern operating systems use a technique called Slab Allocation on top of the Buddy System. This chapter explains slab allocation, how it works, and its advantages.

Read this chapter to learn the following −

- What is Slab Allocation?

- Structure of Slab Allocation

- Slab Allocation in Linux Kernel

- Advantages of Slab Allocation

- Slab Allocation vs Buddy System

What is Slab Allocation?

Slab Allocation is a memory management technique used by the kernels of modern operating systems such as Linux to efficiently allocate memory for frequently used objects like file descriptors, inodes, and network buffers. The idea is to maintain a separate cache for each type of object that the kernel uses frequently. Each cache consists of pre-allocated memory chunks called slabs, and each slab is divided into smaller fixed-size blocks called objects. Because every object in a cache has the same size, objects can be allocated and freed quickly, with little fragmentation or wasted memory.

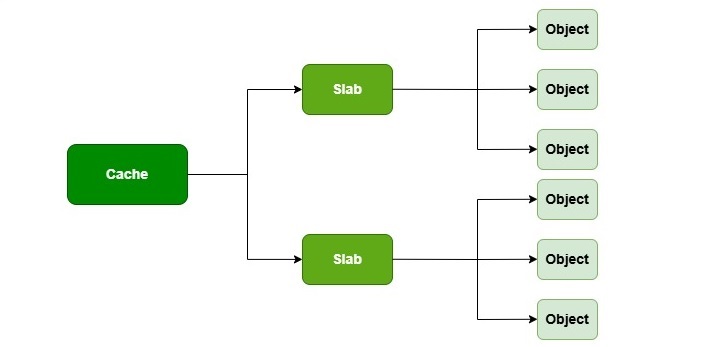

The image below shows the hierarchy of slab allocation in an operating system −

Structure of Slab Allocation

As depicted in the image, slab allocation consists of three main components −

- Cache − A cache is a group of slabs that store objects of the same type. Different caches are created for the different types of objects used by the kernel.

- Slab − A slab is a contiguous block of memory that contains multiple objects of the same type. Each slab is divided into smaller fixed-size blocks called objects.

- Object − An object is a fixed-size block of memory that is allocated from a slab. Each object maps to a specific data structure used by the kernel.

Each slab can be in one of the following three states −

- Full − all objects in the slab are allocated.

- Partial − some objects in the slab are allocated.

- Empty − no objects in the slab are allocated.

The slab allocator satisfies allocation requests from partial slabs first, which keeps allocated objects packed together and minimizes fragmentation. If no partial slab is available, an empty slab is used. If there is no empty slab either, a new slab is created from memory obtained from the buddy system.
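
The selection policy described above can be sketched in a few lines of C. This is only an illustration: the names toy_slab, toy_cache, OBJS_PER_SLAB, and MAX_SLABS are hypothetical, and "growing" the cache simply uses the next array entry as a stand-in for requesting pages from the buddy system −

```c
#include <assert.h>
#include <stddef.h>

#define OBJS_PER_SLAB 4   /* hypothetical: objects that fit in one slab */
#define MAX_SLABS     8   /* hypothetical: limit on slabs in this toy cache */

enum slab_state { SLAB_EMPTY, SLAB_PARTIAL, SLAB_FULL };

struct toy_slab {
    unsigned int inuse;   /* number of allocated objects in this slab */
};

struct toy_cache {
    struct toy_slab slabs[MAX_SLABS];
    size_t nr_slabs;      /* slabs created so far */
};

/* Classify a slab by how many of its objects are allocated. */
enum slab_state slab_state_of(const struct toy_slab *s)
{
    if (s->inuse == 0)
        return SLAB_EMPTY;
    if (s->inuse == OBJS_PER_SLAB)
        return SLAB_FULL;
    return SLAB_PARTIAL;
}

/*
 * Allocate one object and return the index of the slab it came from:
 * a partial slab is preferred, then an empty one, and only then is a
 * new slab created. Returns -1 if the toy cache cannot grow further.
 */
int toy_cache_alloc(struct toy_cache *c)
{
    int empty = -1;

    assert(c != NULL);
    for (size_t i = 0; i < c->nr_slabs; i++) {
        enum slab_state st = slab_state_of(&c->slabs[i]);
        if (st == SLAB_PARTIAL) {
            c->slabs[i].inuse++;
            return (int)i;
        }
        if (st == SLAB_EMPTY && empty < 0)
            empty = (int)i;   /* remember the first empty slab as a fallback */
    }
    if (empty < 0) {
        if (c->nr_slabs == MAX_SLABS)
            return -1;
        empty = (int)c->nr_slabs++;   /* stand-in for a buddy-system request */
        c->slabs[empty].inuse = 0;
    }
    c->slabs[empty].inuse++;
    return empty;
}
```

Starting from a zero-initialized toy_cache, the first four allocations fill slab 0, and the fifth one forces a second slab to be created.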

In the classic Linux slab allocator (2.6-series kernels), each slab is represented by a slab descriptor, struct slab −

    struct slab {
        struct list_head list;     /* full, partial, or empty list */
        unsigned long colouroff;   /* offset for the slab coloring */
        void *s_mem;               /* first object in the slab */
        unsigned int inuse;        /* allocated objects in the slab */
        kmem_bufctl_t free;        /* first free object, if any */
    };
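
To make the roles of s_mem, inuse, and free more concrete, here is a minimal user-space sketch. It assumes that free holds the index of the first free object and that a small per-slab array records, for each object, the index of the next free one. The names toy_slab, next_free, NUM_OBJS, OBJ_SIZE, and NO_FREE_OBJ are hypothetical and are not kernel identifiers −

```c
#include <assert.h>
#include <stddef.h>

#define NUM_OBJS 4              /* hypothetical: objects per slab */
#define OBJ_SIZE 32             /* hypothetical: bytes per object */
#define NO_FREE_OBJ (~0U)       /* marks the end of the free chain */

struct toy_slab {
    unsigned char mem[NUM_OBJS * OBJ_SIZE]; /* object storage (role of s_mem) */
    unsigned int next_free[NUM_OBJS];       /* index of the next free object */
    unsigned int inuse;                     /* allocated objects in the slab */
    unsigned int free;                      /* index of the first free object */
};

/* Chain all objects together: 0 -> 1 -> ... -> NUM_OBJS - 1 -> end. */
void toy_slab_init(struct toy_slab *s)
{
    for (unsigned int i = 0; i < NUM_OBJS; i++)
        s->next_free[i] = (i + 1 < NUM_OBJS) ? i + 1 : NO_FREE_OBJ;
    s->inuse = 0;
    s->free = 0;
}

/* Take the first free object, or return NULL if the slab is full. */
void *toy_slab_get(struct toy_slab *s)
{
    if (s->free == NO_FREE_OBJ)
        return NULL;
    unsigned int idx = s->free;
    s->free = s->next_free[idx];
    s->inuse++;
    return s->mem + (size_t)idx * OBJ_SIZE;
}

/* Return an object to the slab by pushing its index onto the free chain. */
void toy_slab_put(struct toy_slab *s, void *obj)
{
    size_t off = (size_t)((unsigned char *)obj - s->mem);
    assert(off % OBJ_SIZE == 0 && off / OBJ_SIZE < NUM_OBJS);
    unsigned int idx = (unsigned int)(off / OBJ_SIZE);
    s->next_free[idx] = s->free;
    s->free = idx;
    s->inuse--;
}
```

Both operations touch only a couple of integers, which is why taking an object from a slab is cheap compared with a general-purpose allocation.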

Slab Allocation in Linux Kernel

Over time, the Linux kernel has provided three different implementations of slab allocation (recent kernels retain only SLUB) −

1. Slab Allocator

This is the original slab allocator. It uses caches to store frequently used objects. It provides efficient memory allocation and deallocation for kernel objects.

The slab allocator maintains a data structure called kmem_cache for each type of object. It records the size of the object, the number of objects per slab, and pointers to the lists of full, partial, and empty slabs. A simplified view of this structure (not the exact kernel definition) looks like this −

    struct kmem_cache {
        size_t object_size;              /* Size of each object */
        size_t slab_size;                /* Size of each slab */
        unsigned int objects_per_slab;   /* Number of objects per slab */
        struct list_head full_slabs;     /* List of full slabs */
        struct list_head partial_slabs;  /* List of partial slabs */
        struct list_head empty_slabs;    /* List of empty slabs */
        // Other fields...
    };

2. SLOB Allocator

The Simple List of Blocks (SLOB) allocator is a simpler alternative to the slab allocator. It is designed for systems with very little memory, such as small embedded devices, where a compact allocator matters more than raw speed.

Conceptually, each free block in SLOB can be described by a structure such as slob_block (shown here in simplified form) −

    struct slob_block {
        size_t size;               /* Size of the block */
        struct slob_block *next;   /* Pointer to the next block */
    };
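
A list of blocks like this is typically searched for a block that is large enough for the request. The sketch below assumes a first-fit policy and omits block splitting and merging. The names toy_block and first_fit are hypothetical, chosen only for this example −

```c
#include <assert.h>
#include <stddef.h>

struct toy_block {
    size_t size;              /* size of the free block in bytes */
    struct toy_block *next;   /* next free block in the list */
};

/*
 * First fit: unlink and return the first block whose size is at least
 * `want`, or NULL if no block in the list is large enough.
 */
struct toy_block *first_fit(struct toy_block **head, size_t want)
{
    assert(head != NULL);
    for (struct toy_block **link = head; *link != NULL; link = &(*link)->next) {
        if ((*link)->size >= want) {
            struct toy_block *found = *link;
            *link = found->next;   /* remove the block from the free list */
            found->next = NULL;
            return found;
        }
    }
    return NULL;
}
```

The search is linear in the number of free blocks, which is the price a list-based allocator pays for its small footprint.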

3. SLUB Allocator

SLUB (the unqueued slab allocator) is the default slab allocator in modern Linux kernels. It was designed to be simpler and more scalable than the original slab allocator. It keeps less bookkeeping data per slab, which reduces overhead and improves performance on multi-processor systems.

In the kernel, SLUB also describes each cache with a kmem_cache structure. The structure below, called slub_cache here for illustration, is a simplified view of the information a cache tracks. It is conceptual: the actual implementation mainly keeps track of partially used slabs −

    struct slub_cache {
        size_t object_size;              /* Size of each object */
        size_t slab_size;                /* Size of each slab */
        unsigned int objects_per_slab;   /* Number of objects per slab */
        struct list_head full_slabs;     /* List of full slabs */
        struct list_head partial_slabs;  /* List of partial slabs */
        struct list_head empty_slabs;    /* List of empty slabs */
        // Other fields...
    };
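
One way SLUB keeps its metadata small is by storing the pointer to the next free object inside the free object itself, so no separate index array is needed. The following user-space sketch illustrates that idea only. The names toy_slub_slab, toy_slub_alloc, and toy_slub_free are hypothetical, and per-CPU handling and locking are left out −

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define OBJ_SIZE 64   /* hypothetical: must be at least sizeof(void *) */
#define NUM_OBJS 4    /* hypothetical: objects per slab */

struct toy_slub_slab {
    unsigned char mem[OBJ_SIZE * NUM_OBJS]; /* object storage */
    void *freelist;                         /* first free object, or NULL */
    unsigned int inuse;                     /* allocated objects */
};

/* Store or load the "next free" pointer in the first bytes of a free object. */
static void set_next(void *obj, void *next) { memcpy(obj, &next, sizeof next); }
static void *get_next(const void *obj) { void *n; memcpy(&n, obj, sizeof n); return n; }

/* Thread every object onto the free list, lowest address first. */
void toy_slub_init(struct toy_slub_slab *s)
{
    assert(OBJ_SIZE >= sizeof(void *));
    s->freelist = NULL;
    s->inuse = 0;
    for (int i = NUM_OBJS - 1; i >= 0; i--) {
        void *obj = s->mem + (size_t)i * OBJ_SIZE;
        set_next(obj, s->freelist);
        s->freelist = obj;
    }
}

/* Pop the first free object, or return NULL if the slab is full. */
void *toy_slub_alloc(struct toy_slub_slab *s)
{
    void *obj = s->freelist;
    if (obj == NULL)
        return NULL;
    s->freelist = get_next(obj);
    s->inuse++;
    return obj;
}

/* Push a freed object back onto the front of the free list. */
void toy_slub_free(struct toy_slub_slab *s, void *obj)
{
    assert(s->inuse > 0);
    set_next(obj, s->freelist);
    s->freelist = obj;
    s->inuse--;
}
```

Because the free list lives in memory that is unused anyway, the only per-slab bookkeeping in this sketch is one pointer and one counter.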

Advantages of Slab Allocation

Slab allocation is used in the kernels of many modern operating systems because of the following advantages −

- Reduced Fragmentation − Each slab is carved into objects of exactly the size a cache needs, so slab allocation avoids most of the internal fragmentation that arises when the buddy system rounds every request up to a power of two.

- Faster Allocation and Deallocation − Slabs are pre-allocated, so allocating or freeing an object only updates the slab's bookkeeping instead of searching for, splitting, or merging memory blocks.

- Object Caching − Frequently used objects are kept in per-type caches, so a free object can typically be handed out in O(1) time, which improves overall system performance.

- Memory Reuse − When an object is deallocated, it is returned to the slab cache for reuse. This reduces the need for frequent memory allocations from the buddy system.
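
The fragmentation benefit can be checked with simple arithmetic. The sketch below compares the bytes wasted when every request is rounded up to a power of two with the bytes left over when objects are packed back to back in a slab. It is a simplified model that ignores slab metadata, alignment, and coloring, and the helper names are hypothetical −

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Smallest power of two that is >= n, as a power-of-two allocator would use. */
size_t round_up_pow2(size_t n)
{
    assert(n > 0 && n <= (SIZE_MAX >> 1) + 1);
    size_t p = 1;
    while (p < n)
        p <<= 1;
    return p;
}

/* Bytes wasted when `count` objects are each rounded up to a power of two. */
size_t waste_pow2(size_t obj_size, size_t count)
{
    return (round_up_pow2(obj_size) - obj_size) * count;
}

/* Number of objects that fit in one slab when packed back to back. */
size_t objs_per_slab(size_t slab_size, size_t obj_size)
{
    assert(obj_size > 0);
    return slab_size / obj_size;
}

/* Bytes left over at the end of a slab after packing whole objects. */
size_t waste_slab(size_t slab_size, size_t obj_size)
{
    assert(obj_size > 0);
    return slab_size % obj_size;
}
```

With a 4096-byte slab and 96-byte objects, this model gives 42 objects per slab with 64 bytes left over. Rounding each 96-byte request up to 128 bytes would instead waste 32 bytes per object, or 1344 bytes for the same 42 objects.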

Slab Allocation vs Buddy System

Here is a comparison between slab allocation and the buddy system −

| Feature | Slab Allocation | Buddy System |
|---|---|---|
| Fragmentation | Reduces internal fragmentation by packing fixed-size objects into slabs. | Suffers from internal fragmentation because each request is rounded up to a power-of-two block size. |
| Allocation Speed | Faster allocation and deallocation due to pre-allocated slabs. | Slower allocation and deallocation due to splitting and merging of blocks. |
| Object Caching | Caches frequently used objects for quick access. | No object caching mechanism. |
| Memory Reuse | Deallocated objects are returned to the slab cache for reuse. | Deallocated memory is returned to the buddy system, which may lead to fragmentation. |

Conclusion

Slab allocation works on top of the buddy system to improve memory allocation efficiency in the kernels of modern operating systems. It reduces fragmentation by allocating memory in fixed-size objects and provides faster allocation and deallocation through pre-allocated slabs. It also uses a caching mechanism to keep frequently used objects available, so that they can be allocated in O(1) time.