Operating System - Demand Paging

We already discussed the concept of paging in the previous chapter. In this chapter, we will discuss what demand paging is and how it works. The following topics are covered in this chapter −

- What is Demand Paging?

- How Demand Paging Works?

- Example of Demand Paging

- Demand Paging vs Pre Paging

What is Demand Paging?

Demand paging is an optimization done on top of the paging memory management method. In demand paging, the pages of a process are loaded into memory only when they are needed (i.e., on demand) instead of loading all pages at once when the process starts.

To understand demand paging, suppose that a process tries to access a page that is not currently in physical memory (RAM). This causes a page fault. The operating system then loads the required page from secondary storage (disk) into physical memory. Because the page is loaded on the demand of the process, the technique is called demand paging. If all frames of physical memory are full when a new page must be loaded, the OS uses a page replacement algorithm to decide which page to remove to make space for the new page.

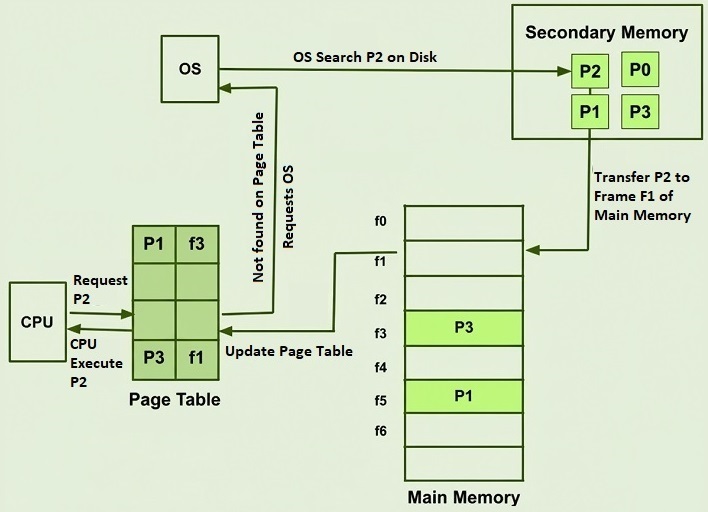

The following image shows an instance of demand paging. It highlights the set of activities that take place inside the system in the event of demand paging -

In the image,

- CPU Requests Page 2 − First, the CPU requests Page 2. The page table is checked to see whether Page 2 is in main memory. In this case, it is not, so a page fault occurs.

- Page Fault Handling − The operating system is notified of the page fault. It locates Page 2 on the disk and finds a free frame in main memory.

- Loading the Page − The OS loads Page 2 from the disk into the free frame in main memory. Then the page table is updated with the new frame number for Page 2.

- Resuming the Process − Finally, the CPU is notified that Page 2 is now in memory, and the process can continue executing.

How Demand Paging Works?

Here is a detailed step-by-step explanation of how demand paging works −

1. Page Table and Valid/Invalid Bit

Every process has its own page table that maps virtual pages to physical frames. Each entry in this page table includes a valid/invalid bit. If this bit is set to valid, the corresponding page is currently in physical memory (RAM). If it is set to invalid, the page is not in physical memory and is stored on the disk. Accessing a page whose entry is marked invalid causes a page fault.
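As a rough illustration of the valid/invalid bit, the following Python sketch models a page table as a dictionary of entries. The names `PageTableEntry`, `PageFault`, and `translate`, as well as the 4 KB page size, are assumptions made for this example and do not correspond to any real OS interface.

```python
from dataclasses import dataclass
from typing import Optional

PAGE_SIZE = 4096  # assumed page size in bytes (illustrative only)


@dataclass
class PageTableEntry:
    frame: Optional[int] = None  # physical frame number, if the page is resident
    valid: bool = False          # True: page is in RAM; False: page is on disk


class PageFault(Exception):
    """Raised when a page whose entry is marked invalid is accessed."""


def translate(page_table: dict, virtual_address: int) -> int:
    """Translate a virtual address to a physical address, or raise PageFault."""
    page, offset = divmod(virtual_address, PAGE_SIZE)
    entry = page_table[page]
    if not entry.valid:
        raise PageFault(f"page {page} is not in physical memory")
    return entry.frame * PAGE_SIZE + offset


# Page 0 is resident in frame 5; page 1 is still on disk.
page_table = {0: PageTableEntry(frame=5, valid=True), 1: PageTableEntry()}

print(translate(page_table, 100))  # 5 * 4096 + 100 = 20580
try:
    translate(page_table, PAGE_SIZE + 8)  # address inside page 1
except PageFault as fault:
    print("page fault:", fault)
```

In real hardware this check is performed by the memory management unit on every access; the exception here simply stands in for the trap to the operating system.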

2. Page Fault Handling

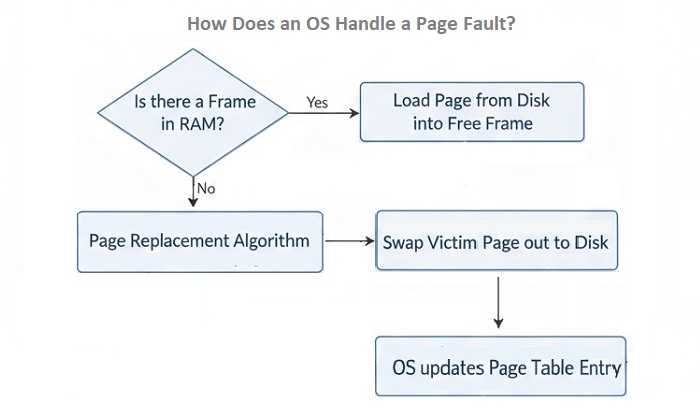

When a process tries to access a page in physical memory, the CPU checks the page table. Inside the page table, if the valid/invalid bit is set to invalid, a page fault occurs. The operating system then takes the following steps:

- Locate the Page on Disk The OS looks for where the required page is stored on the disk.

- Find a Free Frame − The OS checks for a free frame in physical memory (RAM). If no free frame is available, it uses a page replacement algorithm to remove a page from memory to free up space.

- Load the Page − The OS reads the required page from the disk and loads it into the selected frame in physical memory.

- Update the Page Table − The OS updates the page table with the new frame number and sets the valid/invalid bit to valid.

- Restart the Instruction − The instruction that caused the page fault is restarted, and the process can now access the required page in memory.

The following image shows how an OS handles a page fault −
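The five steps above can be sketched as a small simulation. Everything in this block is a toy model: `handle_page_fault`, `access`, and the dictionaries standing in for the disk, RAM, and page table are hypothetical names, and the victim is chosen in FIFO order purely to keep the example short.

```python
from collections import deque


def handle_page_fault(page, page_table, memory, disk, free_frames, resident):
    """Simulate the page-fault steps for `page` using toy data structures."""
    # 1. Locate the page on disk.
    contents = disk[page]
    # 2. Find a free frame, evicting a resident page if none is free.
    if free_frames:
        frame = free_frames.pop()
    else:
        victim = resident.popleft()  # FIFO victim, chosen only for simplicity
        frame = page_table[victim]["frame"]
        # A real OS would first write the victim back to disk if it was modified.
        page_table[victim] = {"frame": None, "valid": False}
    # 3. Load the page into the selected frame.
    memory[frame] = contents
    # 4. Update the page table and mark the entry valid.
    page_table[page] = {"frame": frame, "valid": True}
    resident.append(page)
    return frame


def access(page, page_table, memory, disk, free_frames, resident):
    """Return the contents of `page`, handling a page fault first if needed."""
    if not page_table[page]["valid"]:
        handle_page_fault(page, page_table, memory, disk, free_frames, resident)
    # 5. Restart the access: the entry is now valid, so the lookup succeeds.
    return memory[page_table[page]["frame"]]


disk = {p: f"data of page {p}" for p in range(3)}
page_table = {p: {"frame": None, "valid": False} for p in disk}
memory = {}
free_frames = [0]  # a single free frame, to force a replacement
resident = deque()

print(access(2, page_table, memory, disk, free_frames, resident))
print(access(1, page_table, memory, disk, free_frames, resident))
print(page_table)
```

With only one frame available, the second access evicts page 2, so its entry goes back to invalid while page 1 becomes valid in frame 0.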

3. Page Replacement

If there are no free frames available in physical memory, the OS uses page replacement algorithms to decide which page is to be removed from physical memory to free up space for the new page. Some common page replacement algorithms are −

- Least Recently Used (LRU) − The LRU algorithm removes the page that has not been used for the longest time.

- First-In-First-Out (FIFO) − The FIFO algorithm removes the page that has been in memory the longest, regardless of how recently it was used.

- Optimal Page Replacement − The optimal page replacement algorithm removes the page that will not be used for the longest period in the future. It requires knowledge of future references, so it cannot be implemented in practice and serves as a theoretical benchmark for other algorithms.
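To compare the three policies, the following sketch counts page faults for each one on the same reference string. The function names and the reference string are chosen for illustration; the optimal policy is only computable here because the whole string is known in advance.

```python
from collections import OrderedDict, deque


def fifo_faults(refs, num_frames):
    """Count page faults when the page loaded earliest is evicted first."""
    frames, order, faults = set(), deque(), 0
    for page in refs:
        if page in frames:
            continue
        faults += 1
        if len(frames) == num_frames:
            frames.remove(order.popleft())
        frames.add(page)
        order.append(page)
    return faults


def lru_faults(refs, num_frames):
    """Count page faults when the least recently used page is evicted."""
    frames, faults = OrderedDict(), 0
    for page in refs:
        if page in frames:
            frames.move_to_end(page)  # mark as most recently used
            continue
        faults += 1
        if len(frames) == num_frames:
            frames.popitem(last=False)  # drop the least recently used page
        frames[page] = True
    return faults


def optimal_faults(refs, num_frames):
    """Count page faults when the page needed furthest in the future is evicted."""
    frames, faults = set(), 0
    for i, page in enumerate(refs):
        if page in frames:
            continue
        faults += 1
        if len(frames) == num_frames:
            future = refs[i + 1:]

            def next_use(p):
                return future.index(p) if p in future else float("inf")

            frames.remove(max(frames, key=next_use))
        frames.add(page)
    return faults


refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
print("FIFO:   ", fifo_faults(refs, 3))     # 15
print("LRU:    ", lru_faults(refs, 3))      # 12
print("Optimal:", optimal_faults(refs, 3))  # 9
```

On this string with three frames, LRU lands between FIFO and the optimal policy, which is a common but not guaranteed outcome.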

4. Thrashing

Thrashing occurs when a system spends more time handling page faults than executing actual processes. This can happen when the pages that the running processes actively use do not fit in the available physical memory. In that case, the system constantly swaps pages in and out of memory, and each newly loaded page soon replaces another page that is needed again shortly afterwards. To avoid thrashing, the OS can apply strategies such as reducing the degree of multiprogramming or using the working set model to give each process enough frames.
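A small experiment can make the effect visible. In the sketch below, a hypothetical process cycles through four pages under LRU replacement; `lru_fault_rate` and the reference pattern are assumptions made for this example. With one frame fewer than the number of pages in use, every single access faults.

```python
from collections import OrderedDict


def lru_fault_rate(refs, num_frames):
    """Return the fraction of references that cause a page fault under LRU."""
    frames, faults = OrderedDict(), 0
    for page in refs:
        if page in frames:
            frames.move_to_end(page)
        else:
            faults += 1
            if len(frames) == num_frames:
                frames.popitem(last=False)  # evict the least recently used page
            frames[page] = True
    return faults / len(refs)


# The process repeatedly touches the same four pages (100 references in total).
refs = [0, 1, 2, 3] * 25

for num_frames in (3, 4):
    print(num_frames, "frames -> fault rate", lru_fault_rate(refs, num_frames))
# 3 frames -> fault rate 1.0
# 4 frames -> fault rate 0.04
```

Adding a single frame drops the fault rate from 100% to 4%, which is why giving each process enough frames for its actively used pages matters more than the total size of the process.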

Example of Demand Paging

Consider a program that contains four pages: P1, P2, P3, P4. Physical memory has only two available frames: F1, F2. Initially, the page table looks like this −

| Virtual Page | Physical Frame | Valid/Invalid Bit | Location on Disk |
|---|---|---|---|
| P1 | - | Invalid | Disk1 |
| P2 | - | Invalid | Disk2 |
| P3 | - | Invalid | Disk3 |
| P4 | - | Invalid | Disk4 |

- Access P1 − The CPU tries to access data in page P1. The page table shows P1 is Invalid (I). → Page Fault. The OS loads P1 from disk into frame F1 and updates the page table: P1 is now Valid (V) and its physical frame column is set to F1.

- Access P2 − The CPU tries to access data in page P2. The page table shows P2 is Invalid (I). → Page Fault. The OS loads P2 from disk into frame F2 and updates the page table: P2 is now Valid (V) and its physical frame column is set to F2.

- Access P3 − The CPU tries to access data in page P3. The page table shows P3 is Invalid (I). → Page Fault. Both frames F1 and F2 are occupied, so the OS uses a page replacement algorithm (e.g., LRU) to decide which page to remove. P1 was used least recently, so LRU removes P1 from F1. The OS loads P3 from disk into frame F1 and updates the page table: P3 is now Valid (V) and mapped to F1, and P1 is Invalid (I) again.

- Access P2 − The CPU tries to access data in page P2. The page table shows P2 is Valid (V) and mapped to F2. → No Page Fault. The CPU accesses the data directly from frame F2.
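The same trace can be replayed in code. This is a minimal sketch assuming LRU replacement, as in the example; `run_trace` and its log format are hypothetical names introduced here.

```python
from collections import OrderedDict


def run_trace(accesses, frames):
    """Replay `accesses` with LRU replacement; return (log, resident pages)."""
    free = list(frames)       # frames not yet in use, handed out in order
    resident = OrderedDict()  # page -> frame, least recently used first
    log = []
    for page in accesses:
        if page in resident:
            resident.move_to_end(page)  # page is valid: no page fault
            log.append((page, "hit", resident[page], None))
            continue
        victim = None
        if free:
            frame = free.pop(0)
        else:
            victim, frame = resident.popitem(last=False)  # evict the LRU page
        resident[page] = frame
        log.append((page, "fault", frame, victim))
    return log, dict(resident)


log, page_table = run_trace(["P1", "P2", "P3", "P2"], ["F1", "F2"])
for page, result, frame, victim in log:
    note = f", evicts {victim}" if victim else ""
    print(f"{page}: {result} -> {frame}{note}")
# P1: fault -> F1
# P2: fault -> F2
# P3: fault -> F1, evicts P1
# P2: hit -> F2
```

Pages missing from the returned `page_table` (here P1 and P4) correspond to entries whose valid/invalid bit is Invalid.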

Demand Paging vs Pre Paging

Pre paging is the opposite of demand paging. In pre paging, the operating system loads multiple pages into memory before they are actually needed. This is done based on the assumption that if one page is accessed, the pages near it will be accessed soon.

We have tabulated the differences between demand paging and pre paging for better understanding −

| Aspect | Demand Paging | Pre Paging |
|---|---|---|
| Definition | Pages are loaded into memory only when they are needed (on demand). | Multiple pages are loaded into memory before they are actually needed. |
| Page Faults | More page faults can occur, as pages are loaded only when accessed. | Fewer page faults are expected, because preloaded pages reduce the chance of accessing a page that is not in memory. |
| Memory Usage | More efficient memory usage, since only pages that are actually accessed occupy frames. | Less efficient memory usage. Preloaded pages that are never accessed waste memory space. |
| Performance | Can lead to slower performance due to frequent page faults and disk I/O operations. | Can improve performance by reducing page faults, provided the preloaded pages are actually used. |
| Complexity | Easy to implement. | More complex to implement, as it requires predicting future page accesses. |
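The trade-off in the table can be illustrated with a rough simulation. The sketch below assumes unlimited frames and a very simple pre paging policy that loads the next few consecutive pages on every fault; `simulate`, `prefetch`, and both access patterns are hypothetical and chosen only for this example.

```python
def simulate(refs, prefetch=0, total_pages=16):
    """Return (page_faults, pages_loaded_but_never_used), assuming unlimited frames."""
    loaded, used, faults = set(), set(), 0
    for page in refs:
        if page not in loaded:
            faults += 1
            # Load the faulting page plus the next `prefetch` pages after it.
            for p in range(page, min(page + prefetch + 1, total_pages)):
                loaded.add(p)
        used.add(page)
    return faults, len(loaded - used)


sequential = list(range(8))  # pages 0..7 accessed in order
scattered = [0, 8, 4, 12]    # accesses with no spatial locality

for name, refs in (("sequential", sequential), ("scattered", scattered)):
    print(name, "demand:", simulate(refs), "pre paging:", simulate(refs, prefetch=3))
# sequential demand: (8, 0) pre paging: (2, 0)
# scattered demand: (4, 0) pre paging: (4, 12)
```

When accesses are sequential, pre paging cuts the faults from 8 to 2 at no cost. When they are scattered, it saves nothing and loads 12 pages that are never used.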

Conclusion

Demand paging is a memory management technique used to optimize paging in operating systems. In demand paging, pages are loaded into memory only when they are needed, so pages that are never accessed never occupy physical memory. This reduces memory usage and allows more processes to share the available RAM. The main drawbacks of demand paging are the overhead of handling page faults, which can slow down execution, and the risk of thrashing when too little memory is available. However, it is still widely used in modern operating systems due to its efficiency in memory management.