- Home

- Basics of Algorithms

- DAA - Introduction to Algorithms

- DAA - Analysis of Algorithms

- DAA - Methodology of Analysis

- DAA - Asymptotic Notations & Apriori Analysis

- DAA - Time Complexity

- DAA - Master's Theorem

- DAA - Space Complexities

- Divide & Conquer

- DAA - Divide & Conquer Algorithm

- DAA - Max-Min Problem

- DAA - Merge Sort Algorithm

- DAA - Strassen's Matrix Multiplication

- DAA - Karatsuba Algorithm

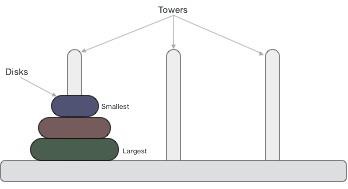

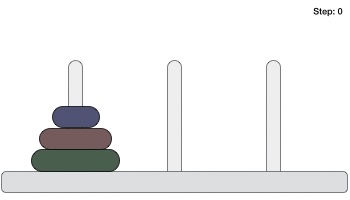

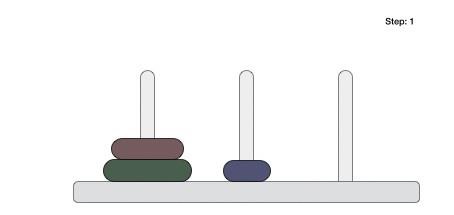

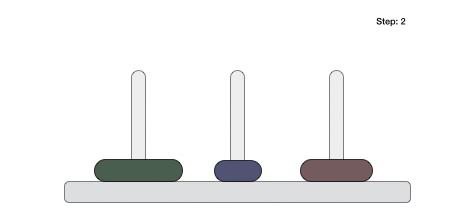

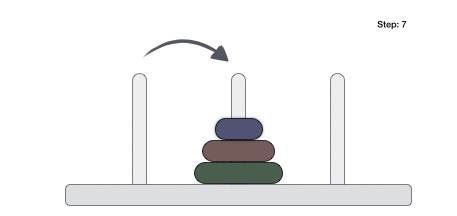

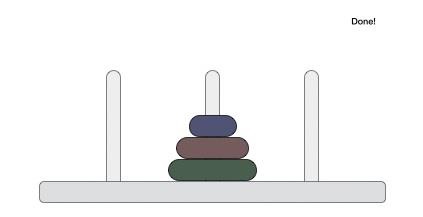

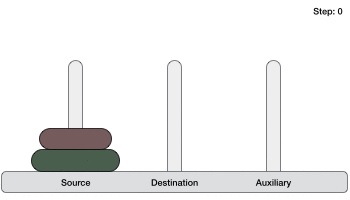

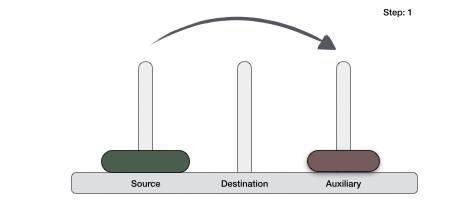

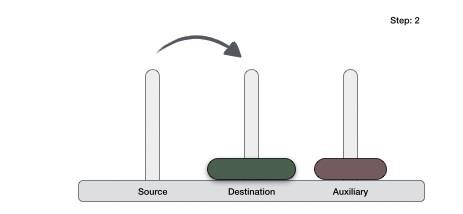

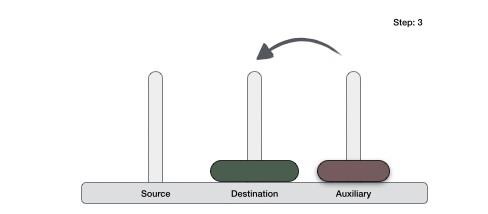

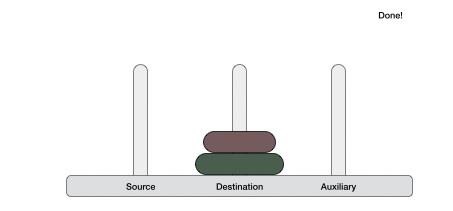

- DAA - Towers of Hanoi

- Greedy Algorithms

- DAA - Greedy Algorithms

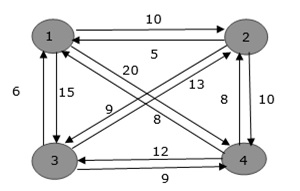

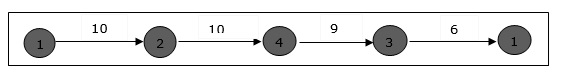

- DAA - Travelling Salesman Problem

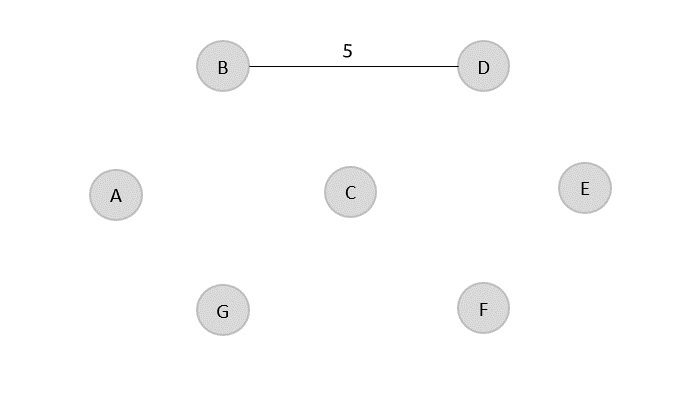

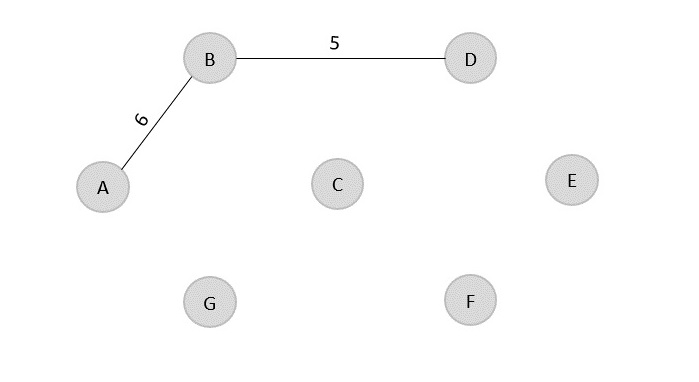

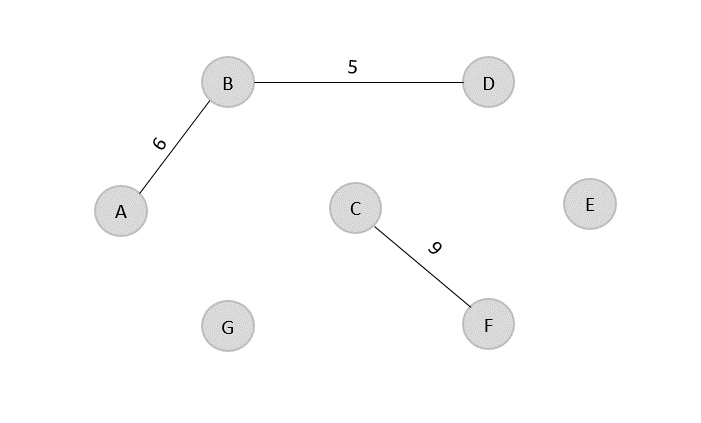

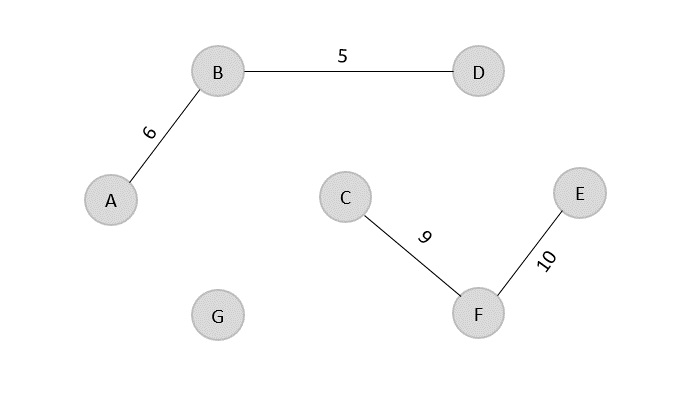

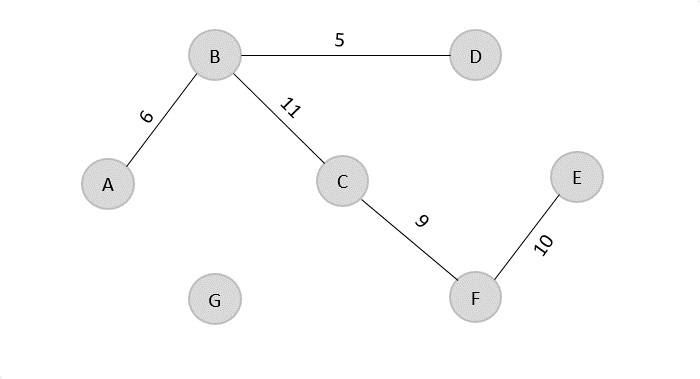

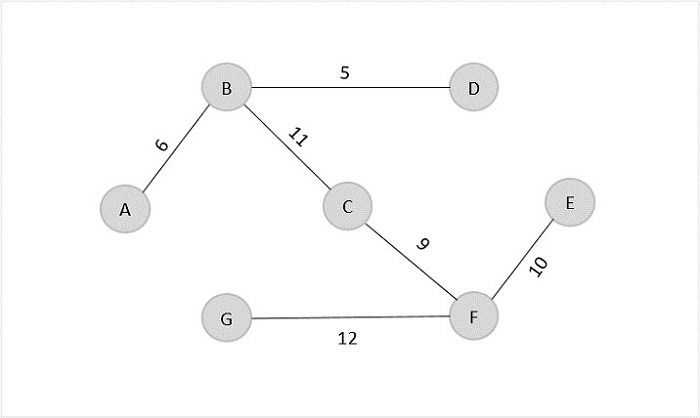

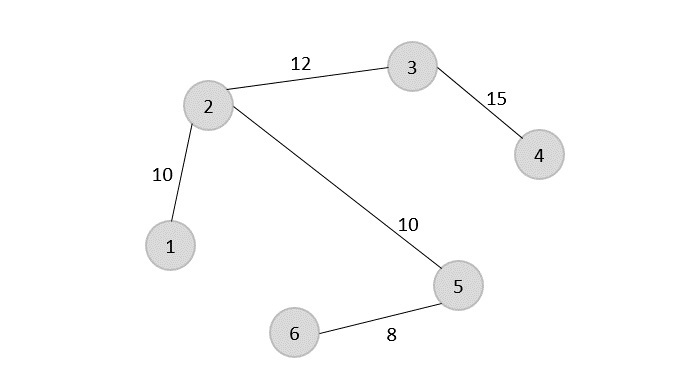

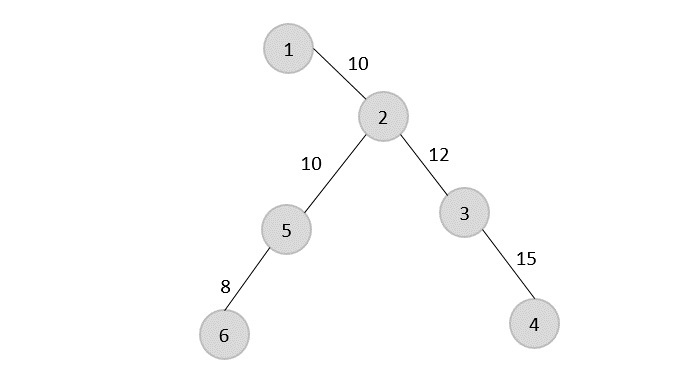

- DAA - Prim's Minimal Spanning Tree

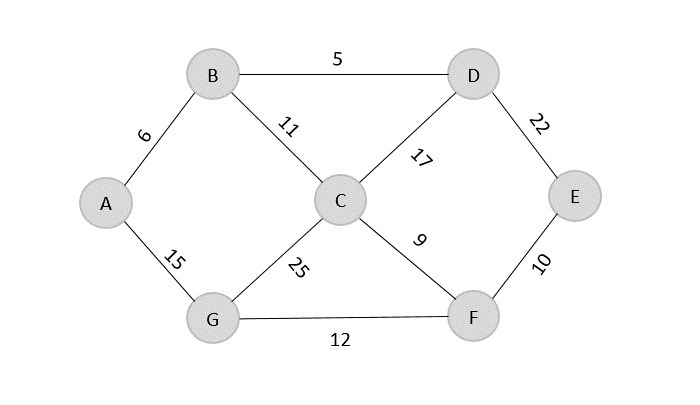

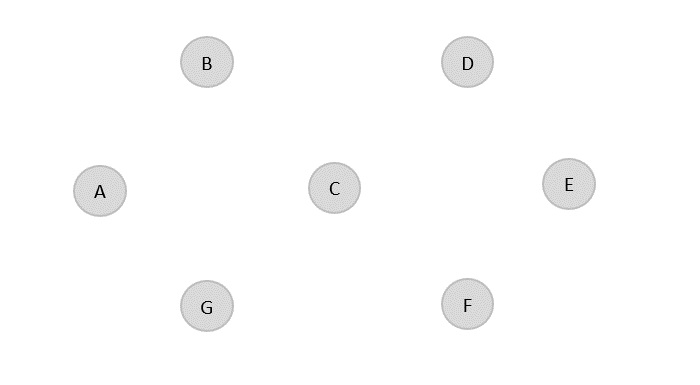

- DAA - Kruskal's Minimal Spanning Tree

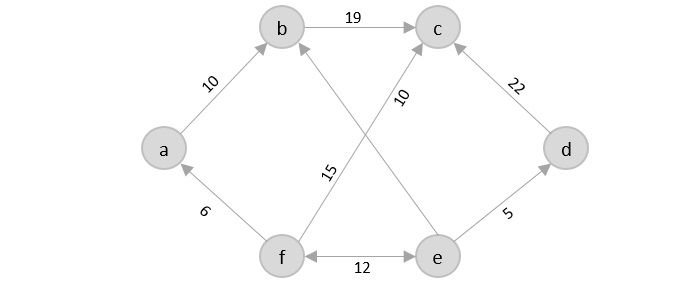

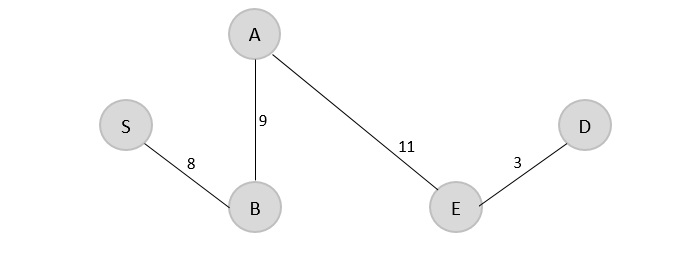

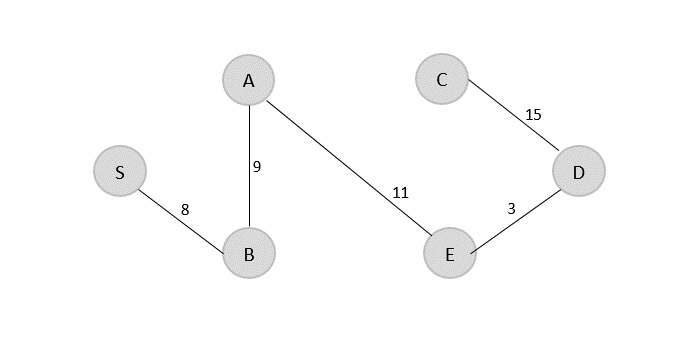

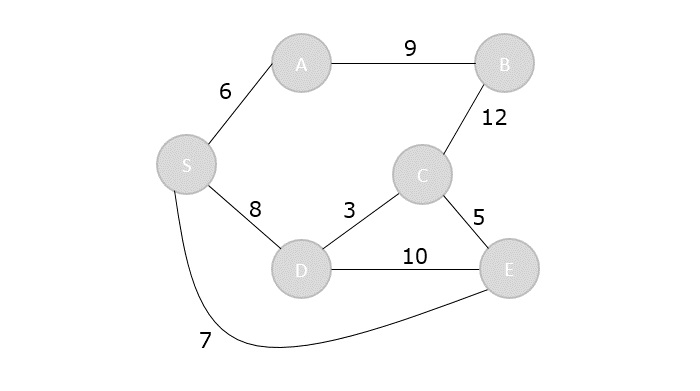

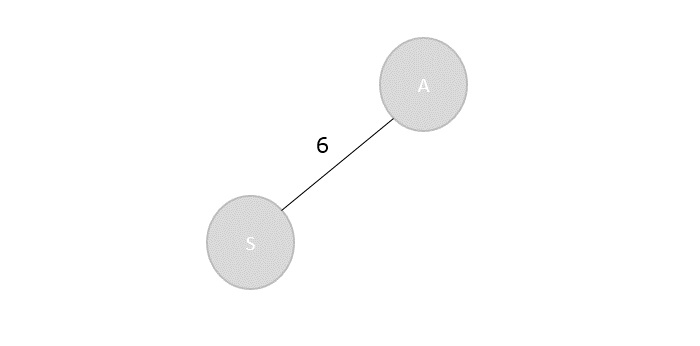

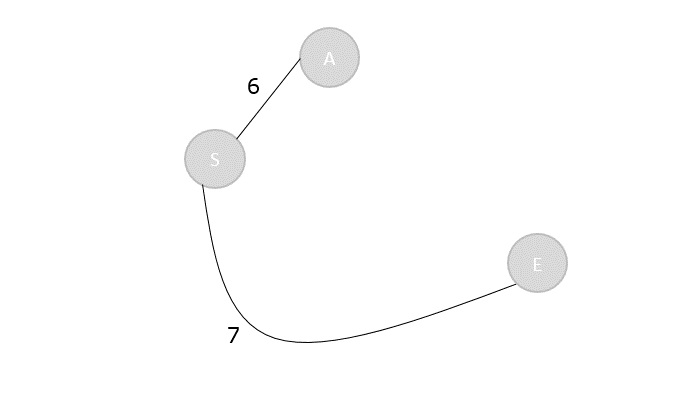

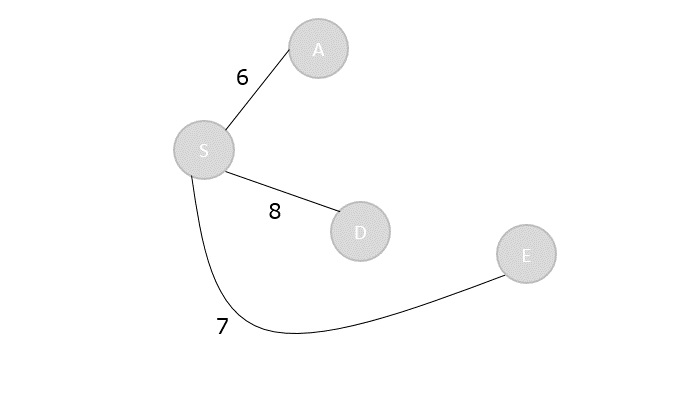

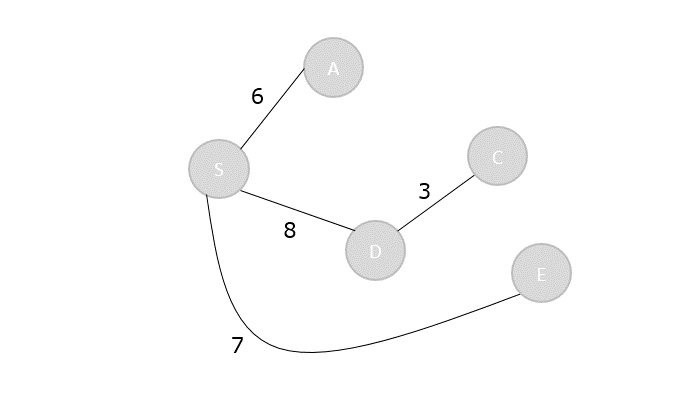

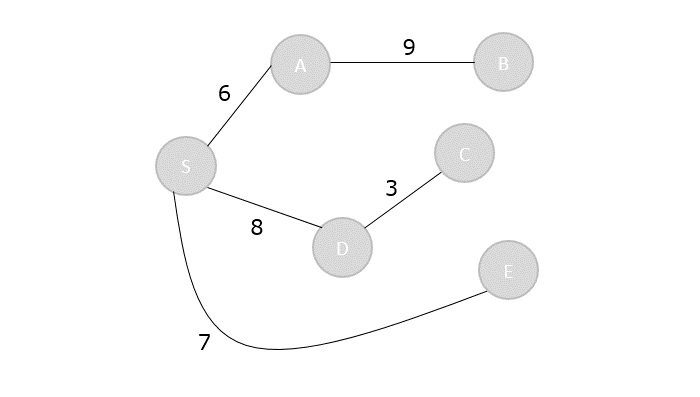

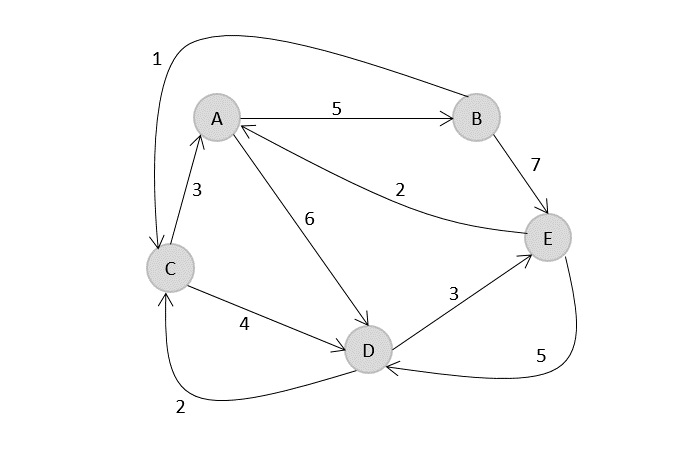

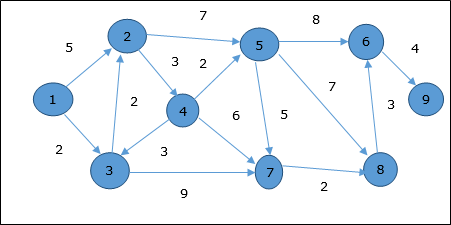

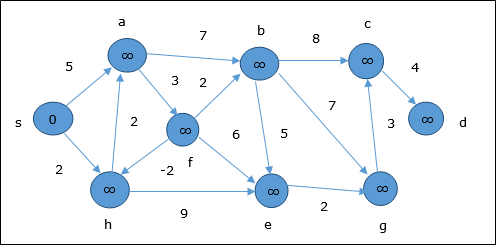

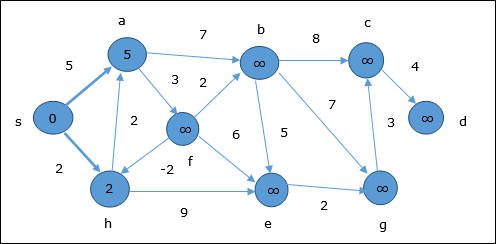

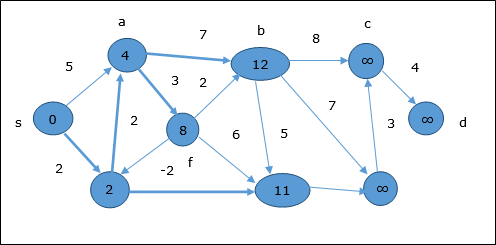

- DAA - Dijkstra's Shortest Path Algorithm

- DAA - Map Colouring Algorithm

- DAA - Fractional Knapsack

- DAA - Job Sequencing with Deadline

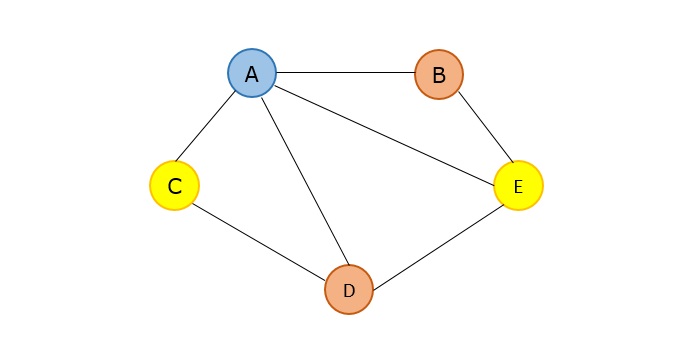

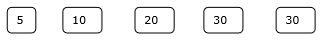

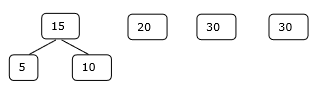

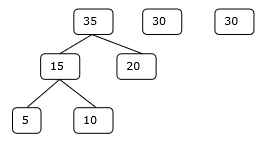

- DAA - Optimal Merge Pattern

- Dynamic Programming

- DAA - Dynamic Programming

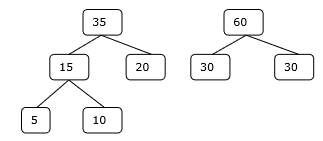

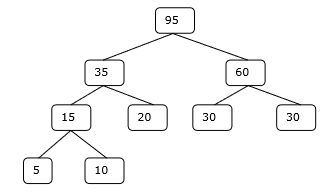

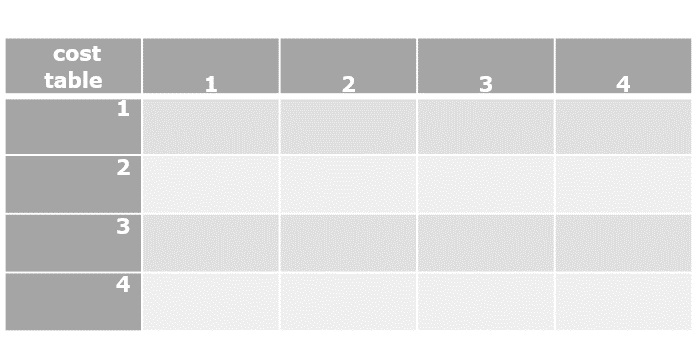

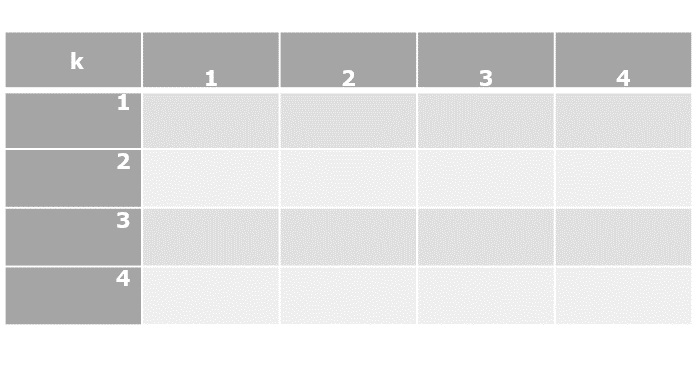

- DAA - Matrix Chain Multiplication

- DAA - Floyd Warshall Algorithm

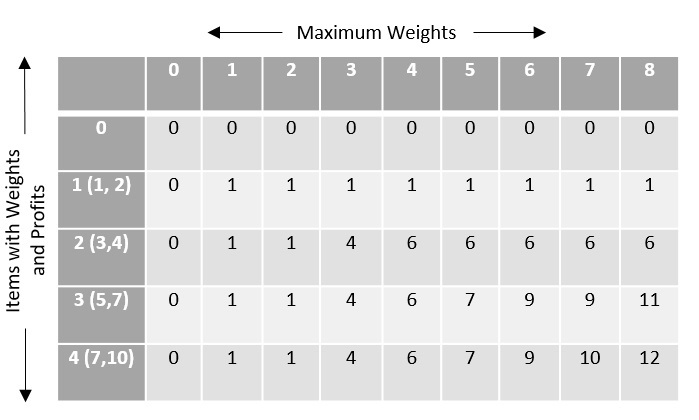

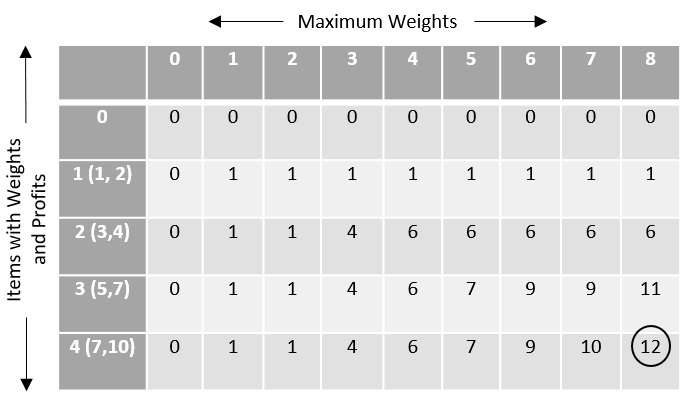

- DAA - 0-1 Knapsack Problem

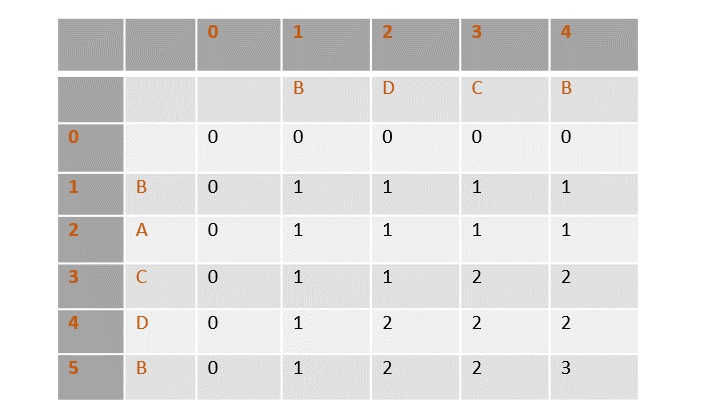

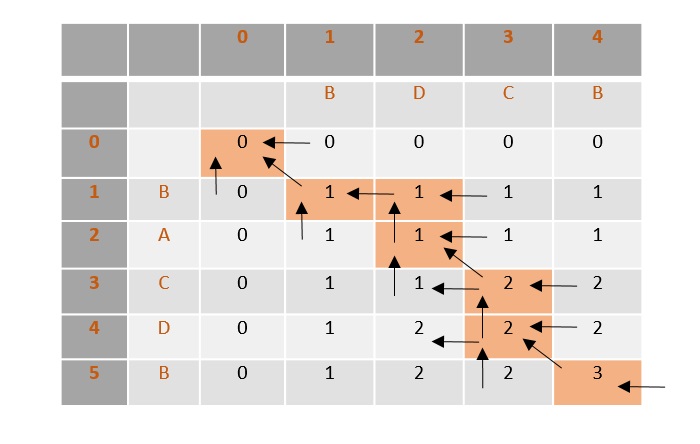

- DAA - Longest Common Subsequence Algorithm

- DAA - Travelling Salesman Problem using Dynamic Programming

- Randomized Algorithms

- DAA - Randomized Algorithms

- DAA - Randomized Quick Sort Algorithm

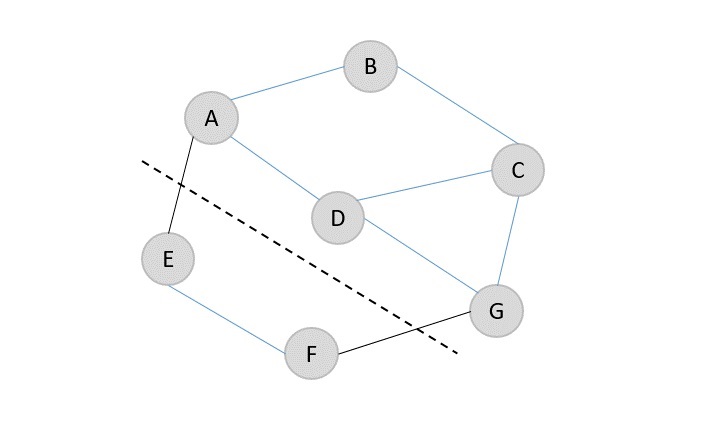

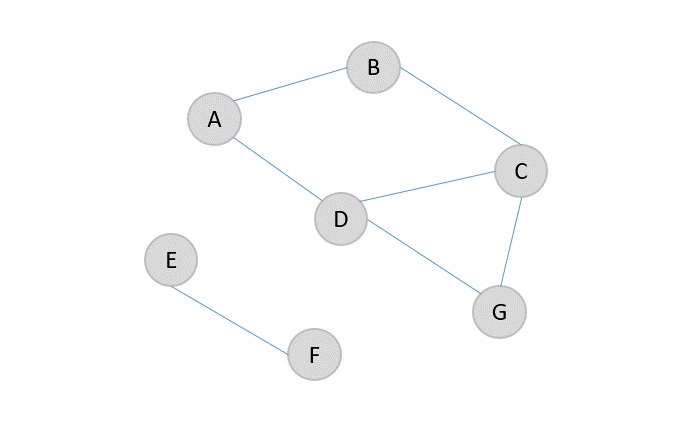

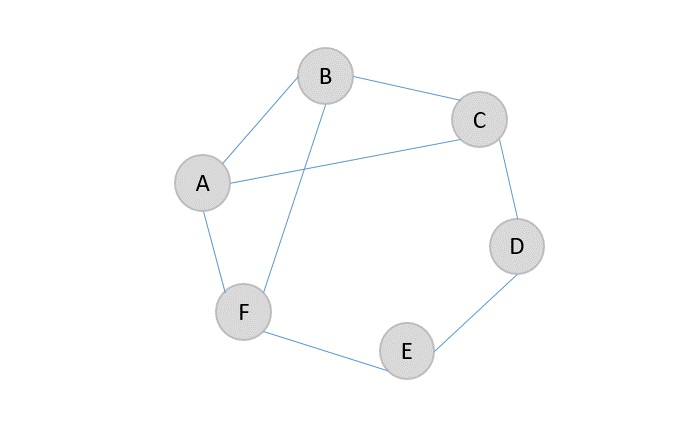

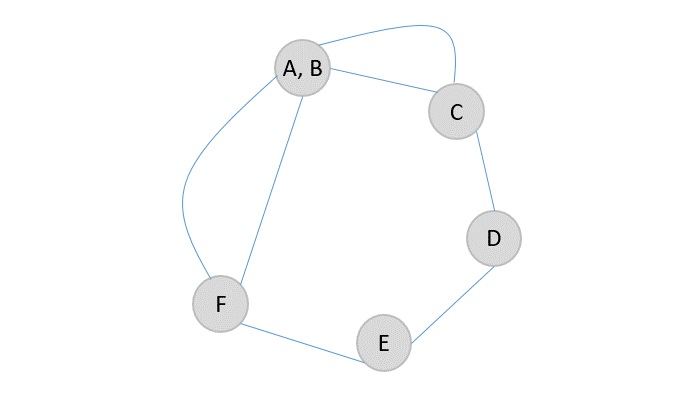

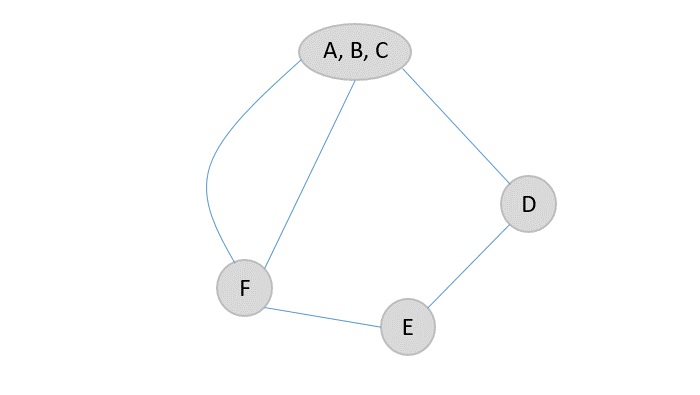

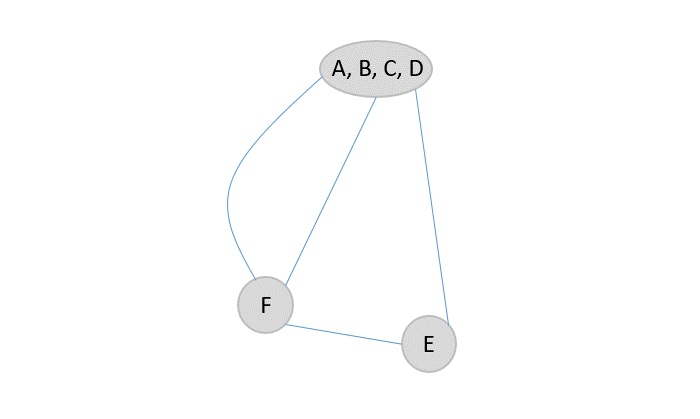

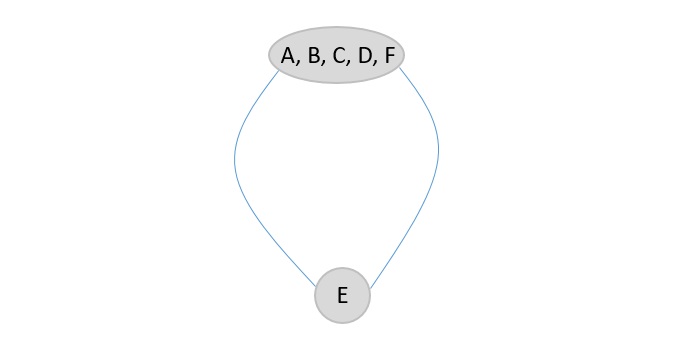

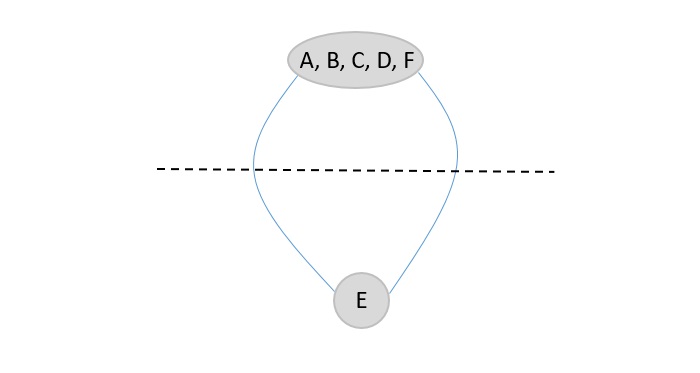

- DAA - Karger's Minimum Cut Algorithm

- DAA - Fisher-Yates Shuffle Algorithm

- Approximation Algorithms

- DAA - Approximation Algorithms

- DAA - Vertex Cover Problem

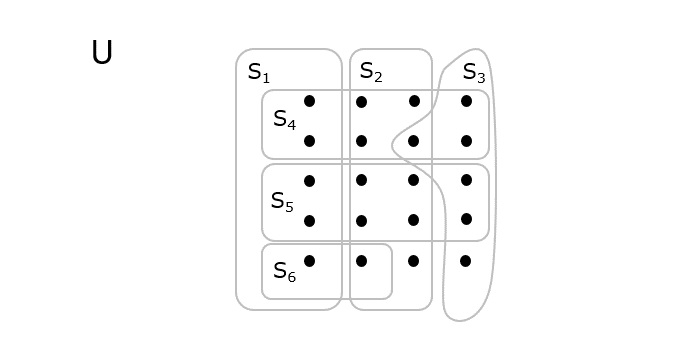

- DAA - Set Cover Problem

- DAA - Travelling Salesperson Approximation Algorithm

- Sorting Techniques

- DAA - Bubble Sort Algorithm

- DAA - Insertion Sort Algorithm

- DAA - Selection Sort Algorithm

- DAA - Shell Sort Algorithm

- DAA - Heap Sort Algorithm

- DAA - Bucket Sort Algorithm

- DAA - Counting Sort Algorithm

- DAA - Radix Sort Algorithm

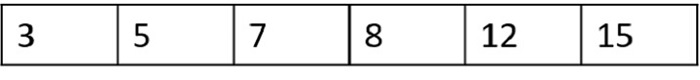

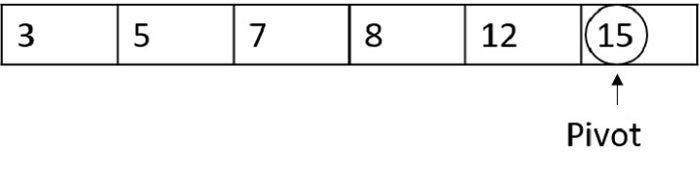

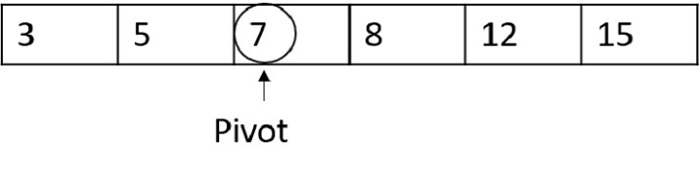

- DAA - Quick Sort Algorithm

- Searching Techniques

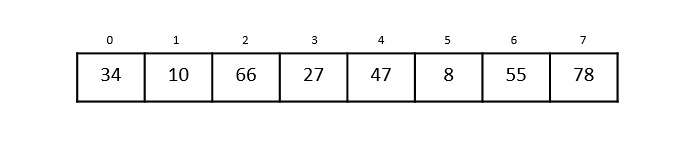

- DAA - Searching Techniques Introduction

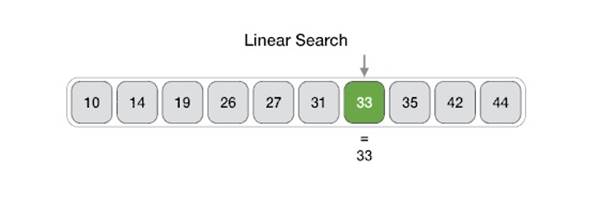

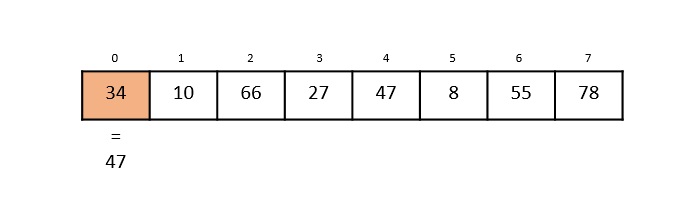

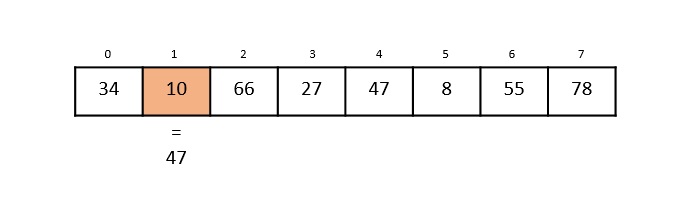

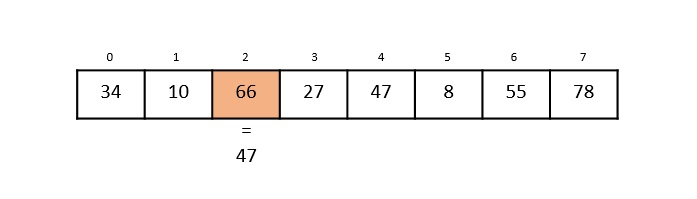

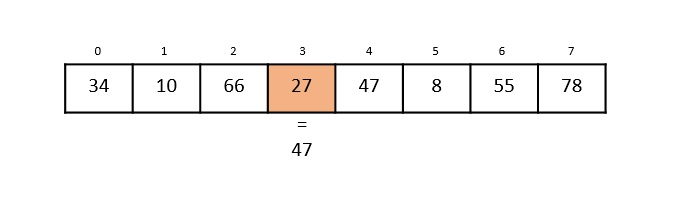

- DAA - Linear Search

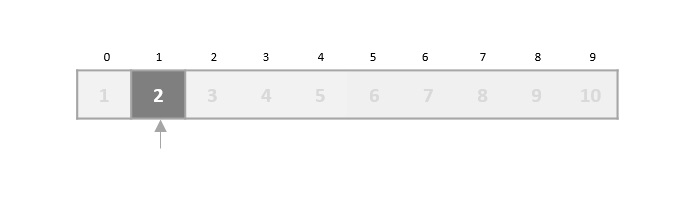

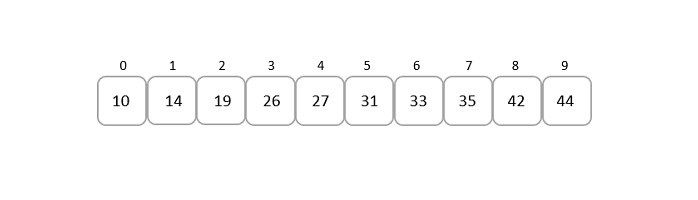

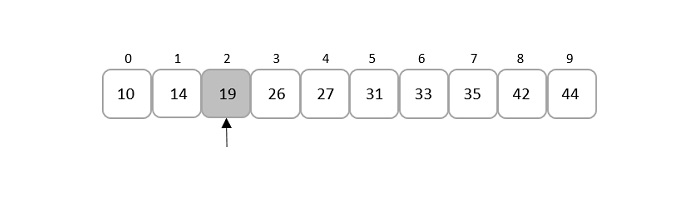

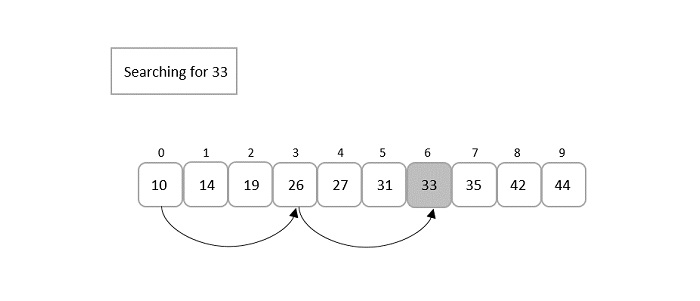

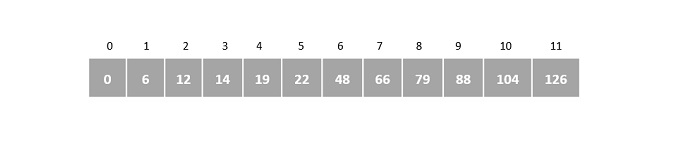

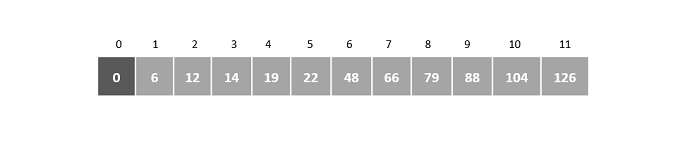

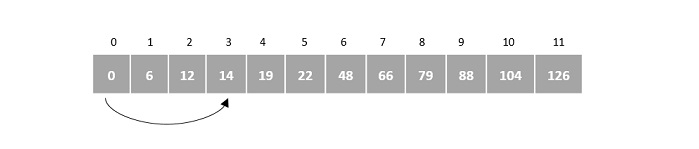

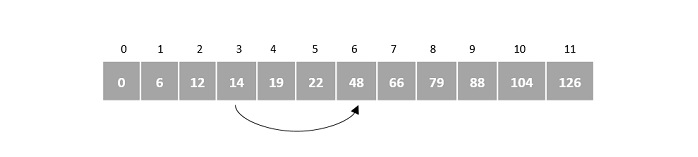

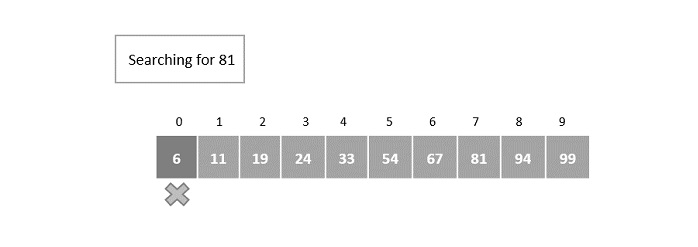

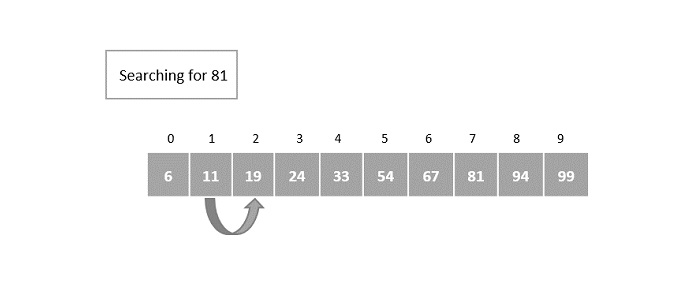

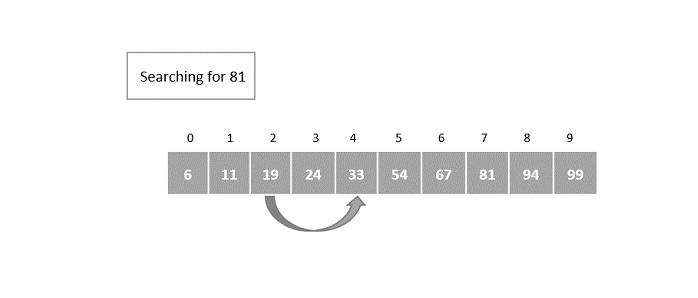

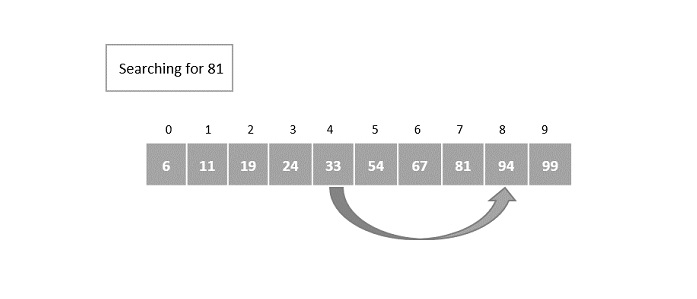

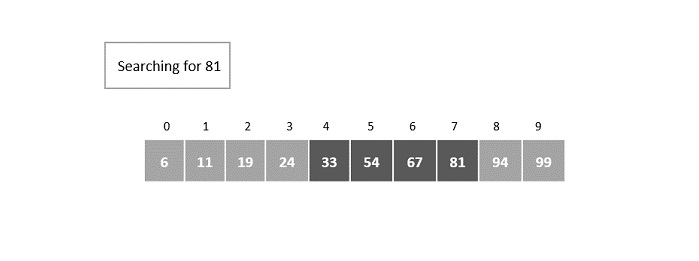

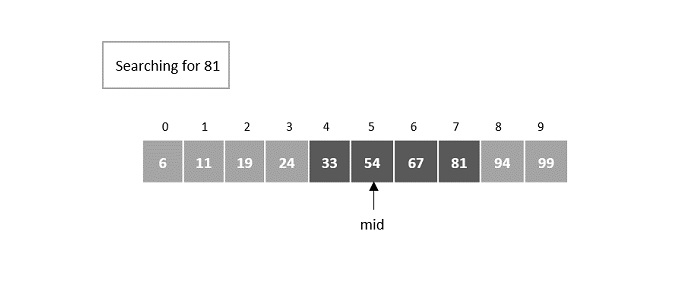

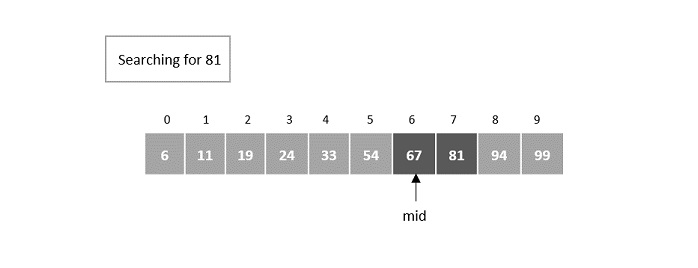

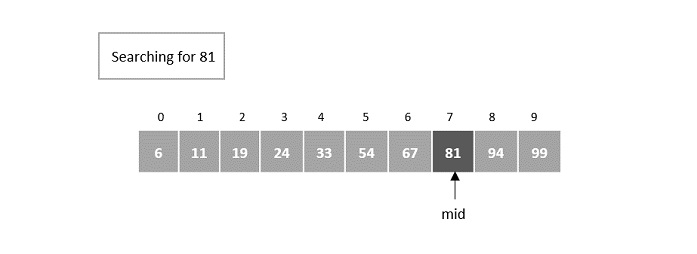

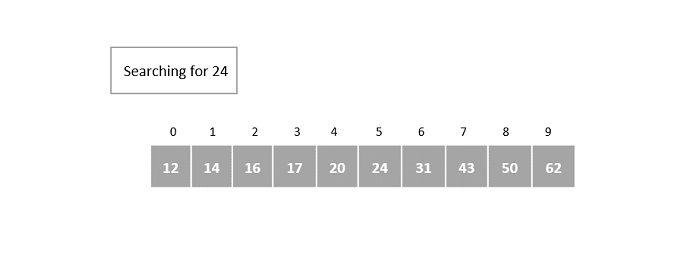

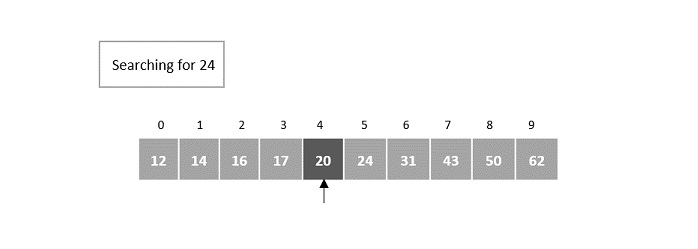

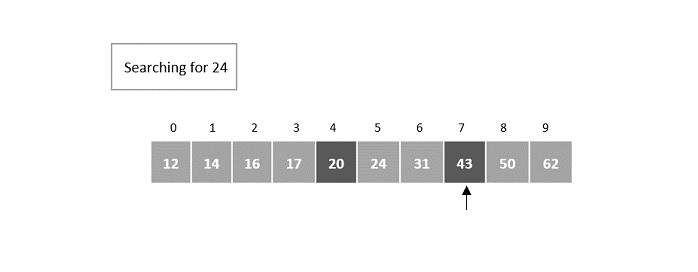

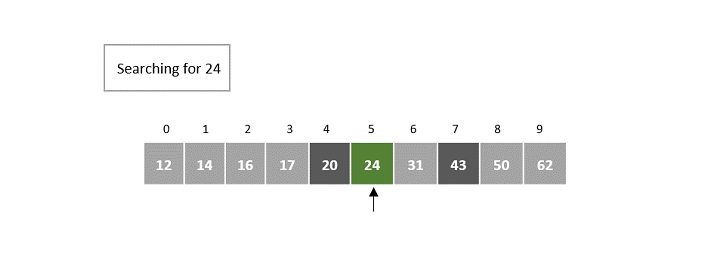

- DAA - Binary Search

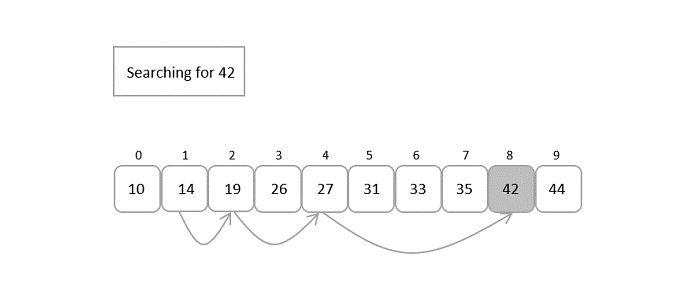

- DAA - Interpolation Search

- DAA - Jump Search

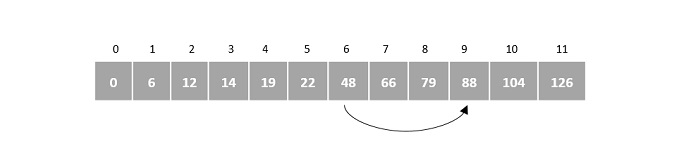

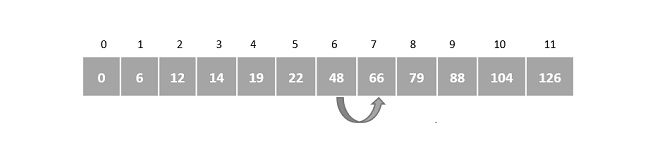

- DAA - Exponential Search

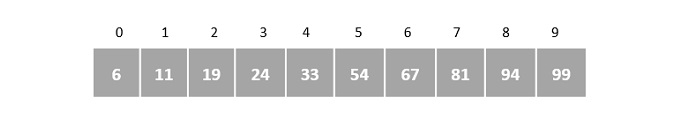

- DAA - Fibonacci Search

- DAA - Sublist Search

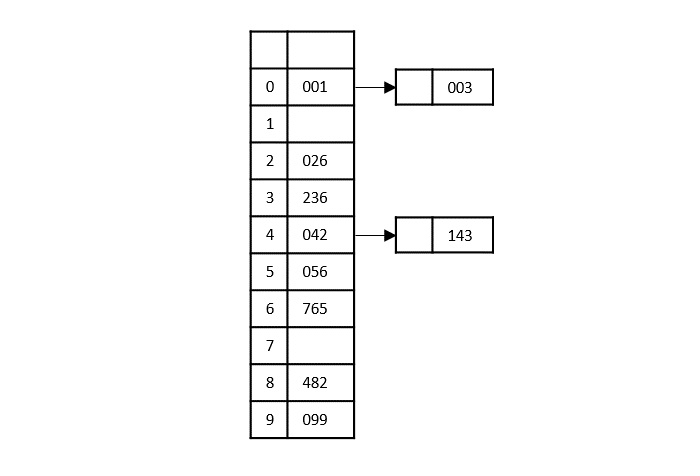

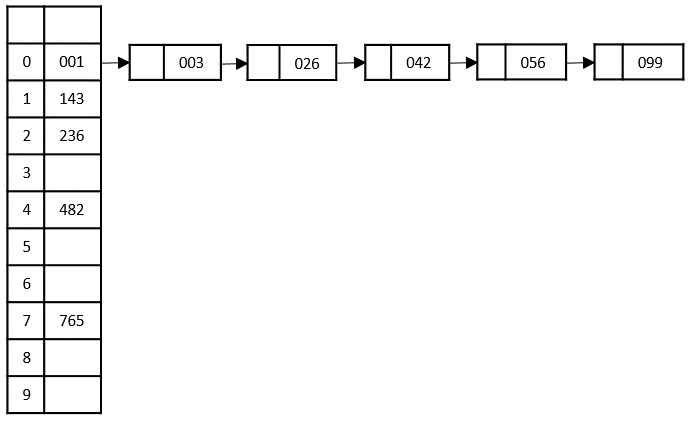

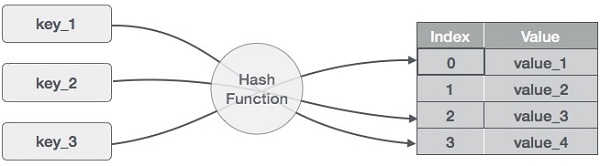

- DAA - Hash Table

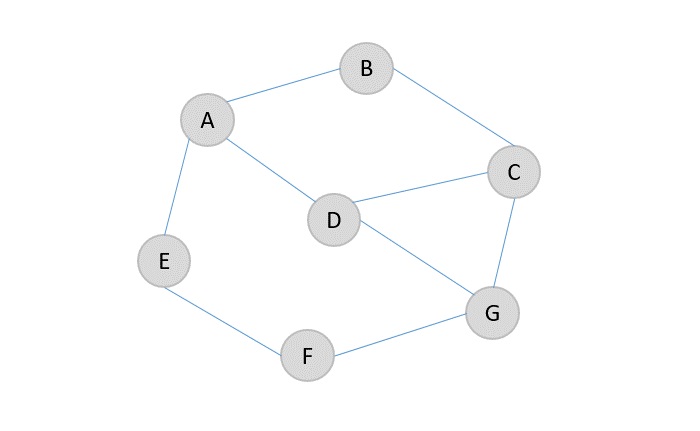

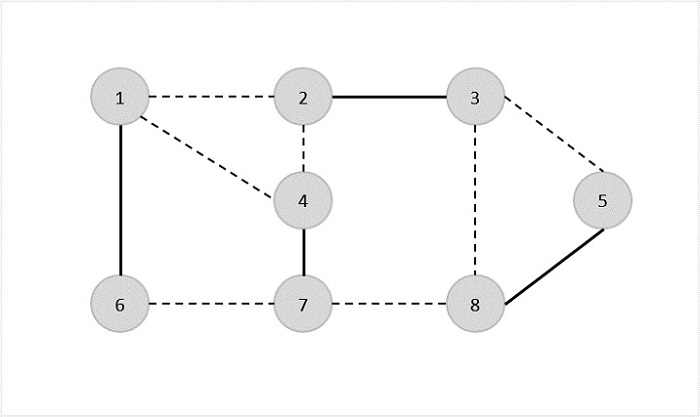

- Graph Theory

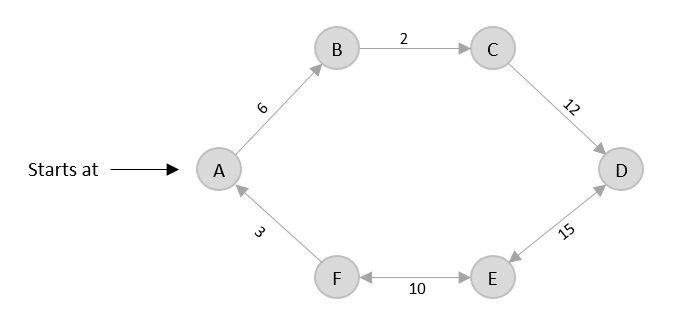

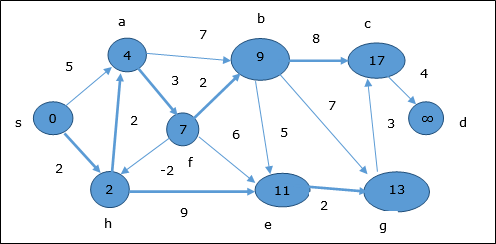

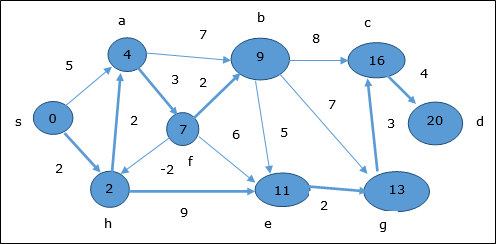

- DAA - Shortest Paths

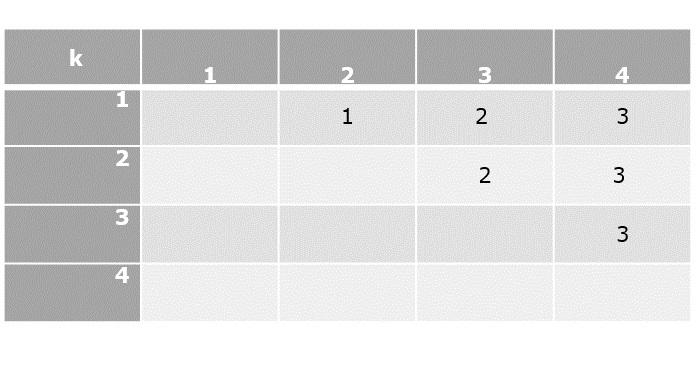

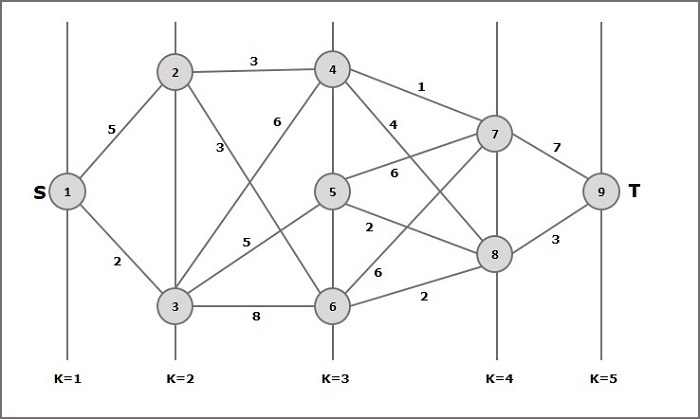

- DAA - Multistage Graph

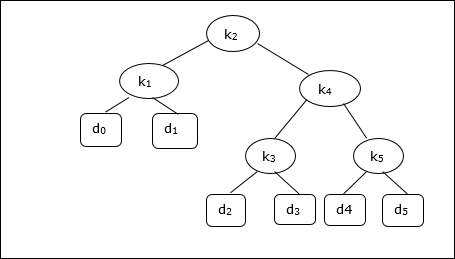

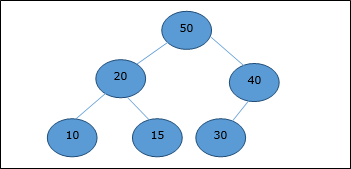

- DAA - Optimal Cost Binary Search Trees

- Heap Algorithms

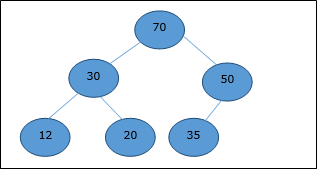

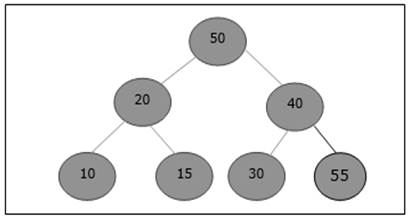

- DAA - Binary Heap

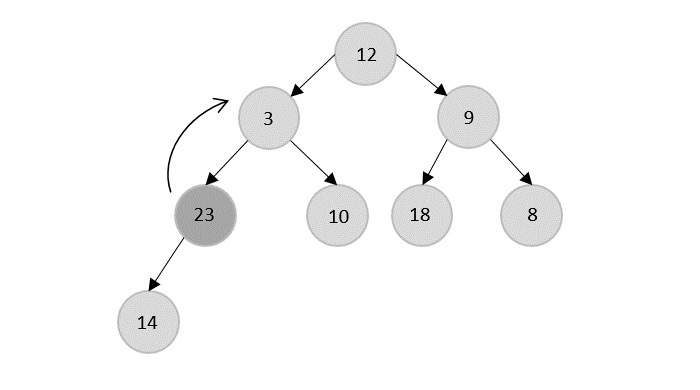

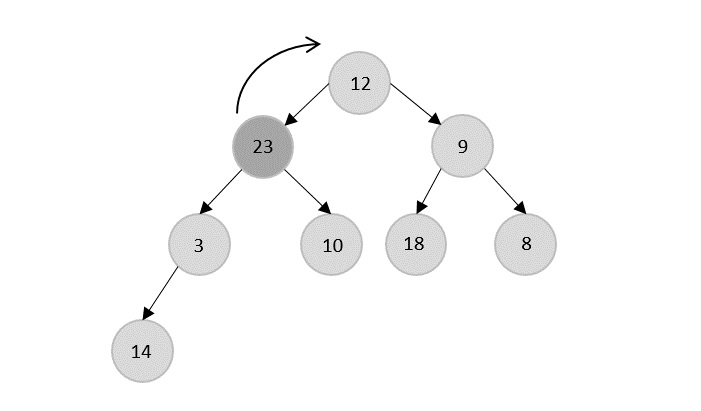

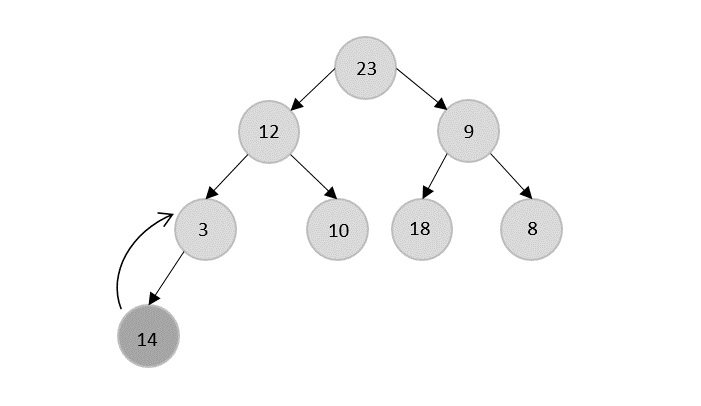

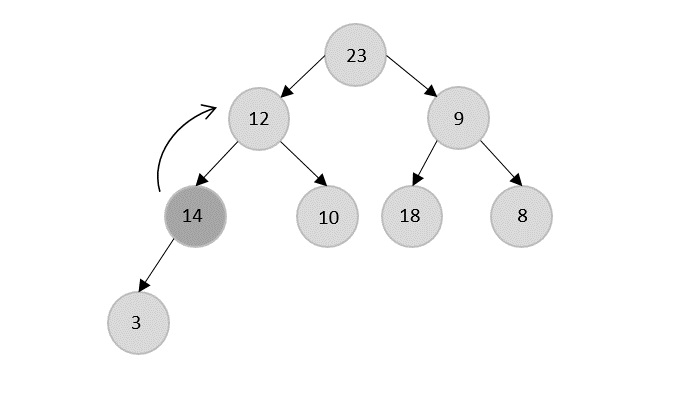

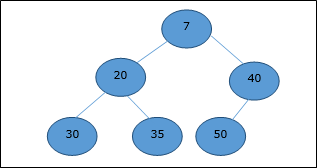

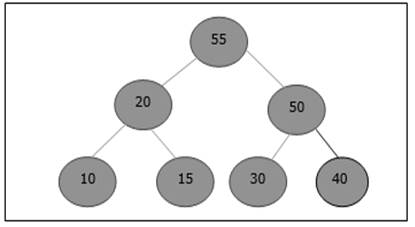

- DAA - Insert Method

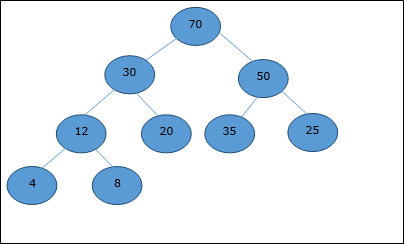

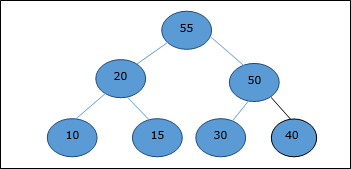

- DAA - Heapify Method

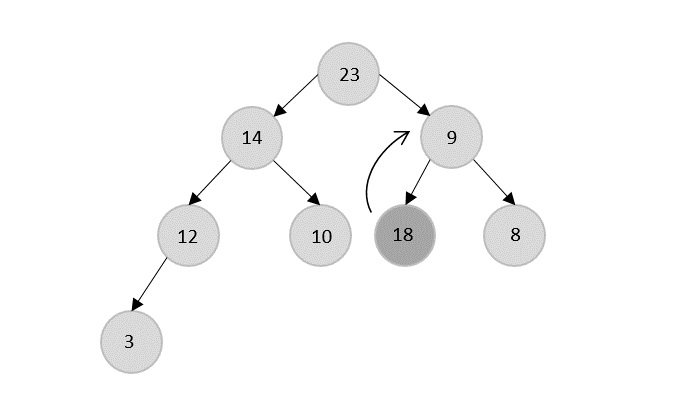

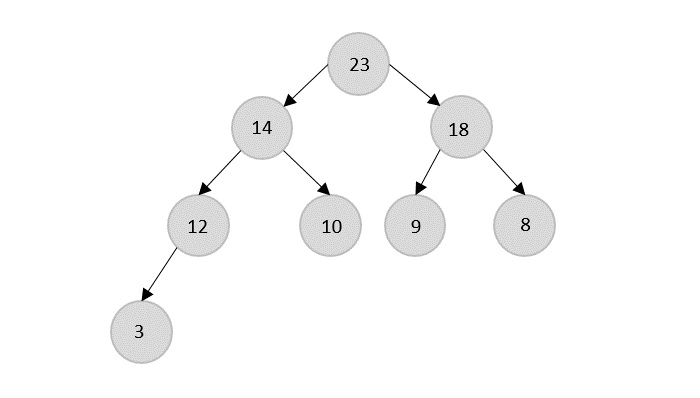

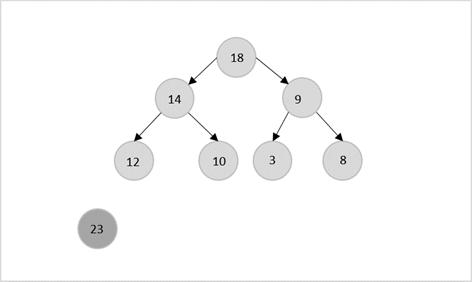

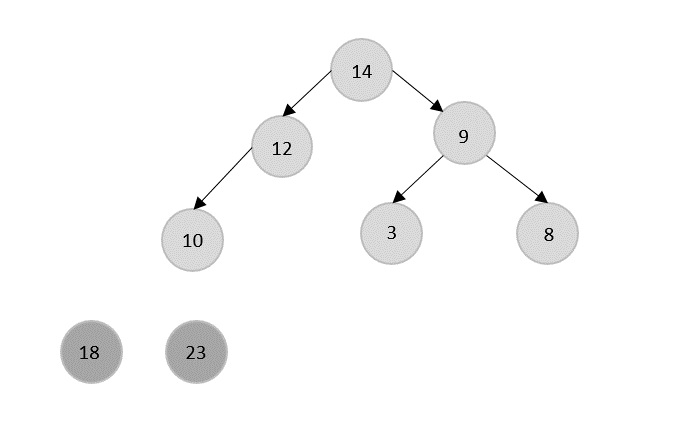

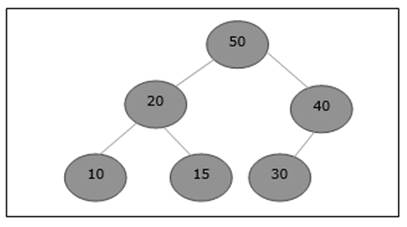

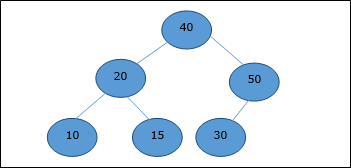

- DAA - Extract Method

- Complexity Theory

- DAA - Deterministic vs. Nondeterministic Computations

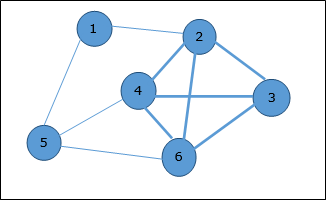

- DAA - Max Cliques

- DAA - Vertex Cover

- DAA - P and NP Class

- DAA - Cook's Theorem

- DAA - NP Hard & NP-Complete Classes

- DAA - Hill Climbing Algorithm

- DAA Useful Resources

- DAA - Quick Guide

- DAA - Useful Resources

- DAA - Discussion

Design and Analysis Quick Guide

Introduction to Algorithms

An algorithm is a finite sequence of well-defined steps for solving a problem, such as performing a calculation, processing data, or carrying out automated reasoning. An algorithm must be expressible within a finite amount of time and space.

An algorithm is a clear and simple way to represent the solution of a particular problem. Once we have an algorithm for a specific problem, we can implement it in any programming language; the algorithm itself is independent of any particular programming language.

Algorithm Design

The main aim of algorithm design is to create an algorithm that solves a problem efficiently, using as little time and space as possible.

To solve a problem, different approaches can be followed. Some of them can be efficient with respect to time consumption, whereas other approaches may be memory efficient. However, one has to keep in mind that time consumption and memory usage often cannot both be minimized simultaneously. Typically, making an algorithm run in less time requires more memory, and making it run with less memory requires more time.
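The time and memory trade-off can be illustrated with a minimal Python sketch. The function names `fib_slow` and `fib_memo` are illustrative and not part of this tutorial; the example assumes the Fibonacci numbers as the problem being solved. The memoized version spends extra memory on a cache in order to avoid repeated work.

```python
from functools import lru_cache


def fib_slow(n: int) -> int:
    """Plain recursion: no extra table, but exponentially many calls."""
    if n < 2:
        return n
    return fib_slow(n - 1) + fib_slow(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n: int) -> int:
    """Memoized recursion: stores O(n) results to make only O(n) distinct calls."""
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)


if __name__ == "__main__":
    print(fib_slow(20))  # 6765
    print(fib_memo(50))  # 12586269025
```

Calling `fib_slow(50)` would take impractically long, whereas `fib_memo(50)` returns almost immediately at the price of keeping earlier results in memory.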

Problem Development Steps

The following steps are involved in solving computational problems.

- Problem definition

- Development of a model

- Specification of an Algorithm

- Designing an Algorithm

- Checking the correctness of an Algorithm

- Analysis of an Algorithm

- Implementation of an Algorithm

- Program testing

- Documentation

How to Write an Algorithm?

There are no well-defined standards for writing algorithms. Rather, it is problem and resource dependent. Algorithms are not written for any particular programming language.

All programming languages share basic code constructs like loops (do, for, while), flow-control (if-else), etc. These common constructs can be used to write an algorithm.

We usually write algorithms in a step-by-step manner, although this is not always the case. Algorithm writing is a process that is carried out after the problem domain is well-defined. That is, we should know the problem domain for which we are designing a solution.

Example

Let's try to learn algorithm-writing by using an example.

Problem − Design an algorithm to add two numbers and display the result.

Step 1 − START

Step 2 − declare three integers a, b & c

Step 3 − define values of a & b

Step 4 − add values of a & b

Step 5 − store output of step 4 to c

Step 6 − print c

Step 7 − STOP

Algorithms tell the programmers how to code the program. Alternatively, the algorithm can be written as −

Step 1 − START ADD

Step 2 − get values of a & b

Step 3 − c ← a + b

Step 4 − display c

Step 5 − STOP

In design and analysis of algorithms, the second method is usually used to describe an algorithm. It makes it easy for the analyst to analyze the algorithm while ignoring all unwanted definitions. The analyst can observe what operations are being used and how the process is flowing.

Characteristics of Algorithms

Not all procedures can be called an algorithm. An algorithm should have the following characteristics −

Unambiguous − Algorithm should be clear and unambiguous. Each of its steps (or phases), and their inputs/outputs should be clear and must lead to only one meaning.

Input − An algorithm should have 0 or more well-defined inputs.

Output − An algorithm should have 1 or more well-defined outputs, and should match the desired output.

Finiteness − Algorithms must terminate after a finite number of steps.

Feasibility − Should be feasible with the available resources.

Independent − An algorithm should have step-by-step directions, which should be independent of any programming code.

Pseudocode

Pseudocode gives a high-level description of an algorithm without the ambiguity associated with plain text but also without the need to know the syntax of a particular programming language.

The running time can be estimated in a more general manner by using Pseudocode to represent the algorithm as a set of fundamental operations which can then be counted.

Difference between Algorithm and Pseudocode

An algorithm is a formal definition, with some specific characteristics, of a process that could be executed by a Turing-complete machine to perform a specific task. More loosely, the word "algorithm" is often used to describe any high-level procedure in computer science.

On the other hand, pseudocode is an informal and (often rudimentary) human-readable description of an algorithm that leaves out many of its granular details. Pseudocode has no fixed style, and its only objective is to describe the high-level steps of an algorithm in a more concrete manner than natural language alone.

For example, following is an algorithm for Insertion Sort.

Algorithm: Insertion-Sort

Input: A list L of integers of length n

Output: A sorted list L1 containing those integers present in L

Step 1: Keep a sorted list L1 which starts off empty

Step 2: Perform Step 3 for each element in the original list L

Step 3: Insert it into the correct position in the sorted list L1.

Step 4: Return the sorted list

Step 5: Stop

Here is a pseudocode which describes how the high level abstract process mentioned above in the algorithm Insertion-Sort could be described in a more realistic way.

    for i <- 1 to length(A) - 1
        x <- A[i]
        j <- i
        while j > 0 and A[j-1] > x
            A[j] <- A[j-1]
            j <- j - 1
        A[j] <- x

In this tutorial, algorithms will be presented in the form of pseudocode that is similar in many respects to C, C++, Java, Python, and other programming languages.

Example

    #include <stdio.h>

    void insertionSort(int arr[], int n) {
        int i, j, key;
        for (i = 1; i < n; i++) {
            key = arr[i];
            j = i - 1;
            // Move elements of arr[0..i-1] that are greater than key,
            // to one position ahead of their current position.
            while (j >= 0 && arr[j] > key) {
                arr[j + 1] = arr[j];
                j = j - 1;
            }
            arr[j + 1] = key; // Insert the current element (key) in the correct position.
        }
    }

    int main() {
        int arr[] = {6, 4, 26, 14, 33, 64, 46};
        int n = sizeof(arr) / sizeof(arr[0]);
        insertionSort(arr, n);
        printf("Sorted array: ");
        for (int i = 0; i < n; i++) {
            printf("%d ", arr[i]);
        }
        printf("\n");
        return 0;
    }

Output

Sorted array: 4 6 14 26 33 46 64

    #include <iostream>
    using namespace std;

    void insertionSort(int arr[], int n) {
        int i, j, key;
        for (i = 1; i < n; i++) {
            key = arr[i];
            j = i - 1;
            // Move elements of arr[0..i-1] that are greater than key,
            // to one position ahead of their current position.
            while (j >= 0 && arr[j] > key) {
                arr[j + 1] = arr[j];
                j = j - 1;
            }
            arr[j + 1] = key; // Insert the current element (key) in the correct position.
        }
    }

    int main() {
        int arr[] = {6, 4, 26, 14, 33, 64, 46};
        int n = sizeof(arr) / sizeof(arr[0]);
        insertionSort(arr, n);
        cout << "Sorted array: ";
        for (int i = 0; i < n; i++) {
            cout << arr[i] << " ";
        }
        cout << endl;
        return 0;
    }

Output

Sorted array: 4 6 14 26 33 46 64

    import java.util.Arrays;

    public class InsertionSort {
        public static void insertionSort(int arr[]) {
            int n = arr.length;
            for (int i = 1; i < n; i++) {
                int key = arr[i];
                int j = i - 1;
                // Move elements of arr[0..i-1] that are greater than key,
                // to one position ahead of their current position.
                while (j >= 0 && arr[j] > key) {
                    arr[j + 1] = arr[j];
                    j = j - 1;
                }
                arr[j + 1] = key; // Insert the current element (key) in the correct position.
            }
        }

        public static void main(String[] args) {
            int[] arr = {64, 34, 25, 12, 22, 11, 90};
            insertionSort(arr);
            System.out.println("Sorted array: " + Arrays.toString(arr));
        }
    }

Output

Sorted array: [11, 12, 22, 25, 34, 64, 90]

    def insertion_sort(arr):
        for i in range(1, len(arr)):
            key = arr[i]
            j = i - 1
            # Move elements of arr[0..i-1] that are greater than key,
            # to one position ahead of their current position.
            while j >= 0 and arr[j] > key:
                arr[j + 1] = arr[j]
                j -= 1
            arr[j + 1] = key  # Insert the current element (key) in the correct position.

    arr = [64, 34, 25, 12, 22, 11, 90]
    insertion_sort(arr)
    print("Sorted array:", arr)

Output

Sorted array: [11, 12, 22, 25, 34, 64, 90]

Analysis of Algorithms

In theoretical analysis of algorithms, it is common to estimate their complexity in the asymptotic sense, i.e., to estimate the complexity function for arbitrarily large input. The term "analysis of algorithms" was coined by Donald Knuth.

Algorithm analysis is an important part of computational complexity theory, which provides theoretical estimation for the required resources of an algorithm to solve a specific computational problem. Most algorithms are designed to work with inputs of arbitrary length. Analysis of algorithms is the determination of the amount of time and space resources required to execute it.

Usually, the efficiency or running time of an algorithm is stated as a function relating the input length to the number of steps, known as time complexity, or volume of memory, known as space complexity.

The Need for Analysis

In this chapter, we will discuss the need for analysis of algorithms and how to choose a better algorithm for a particular problem as one computational problem can be solved by different algorithms.

By considering an algorithm for a specific problem, we can begin to develop pattern recognition so that similar types of problems can be solved by the help of this algorithm.

Algorithms are often quite different from one another, even when their objective is the same. For example, we know that a set of numbers can be sorted using different algorithms. The number of comparisons performed by one algorithm may differ from that of another for the same input. Hence, the time complexity of those algorithms may differ. At the same time, we need to calculate the memory space required by each algorithm.

Analysis of an algorithm is the process of analyzing its problem-solving capability in terms of the time and space required (the size of memory needed for storage during execution). However, the main concern of analysis of algorithms is the required time or performance. Generally, we perform the following types of analysis −

Worst-case − The maximum number of steps taken on any instance of size n.

Best-case − The minimum number of steps taken on any instance of size n.

Average case − The average number of steps taken over all instances of size n.

Amortized − The cost of a sequence of operations applied to an input of size n, averaged over the sequence.
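As a rough illustration of the best, worst, and average cases, the sketch below counts the comparisons made by a linear search. The helper name `linear_search_steps` and the sample data are assumptions made for this example only; the average is taken over successful searches, one for each stored element.

```python
def linear_search_steps(items, target):
    """Return the number of comparisons a linear search makes for target."""
    steps = 0
    for value in items:
        steps += 1
        if value == target:
            break
    return steps


data = list(range(1, 11))  # the values 1..10

best = linear_search_steps(data, 1)    # target is the first element
worst = linear_search_steps(data, 11)  # target is absent, all items are checked
average = sum(linear_search_steps(data, t) for t in data) / len(data)

print(best, worst, average)  # 1 10 5.5
```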

To solve a problem, we need to consider time as well as space complexity, as the program may run on a system where memory is limited but adequate time is available, or vice versa. For example, compare bubble sort and merge sort: bubble sort does not require additional memory, but merge sort requires additional space. Though the time complexity of bubble sort is higher than that of merge sort, we may need to apply bubble sort if the program needs to run in an environment where memory is very limited.

Rate of Growth

Rate of growth is defined as the rate at which the running time of the algorithm is increased when the input size is increased.

Two commonly contrasted growth rates are linear and exponential, although others (logarithmic, quadratic, and so on) also arise in practice. If the running time increases in proportion to the input size, the growth rate is linear. If the running time is multiplied by a constant factor each time the input size increases by a fixed amount, the growth rate is exponential.
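The difference between growth rates becomes visible with a few sample values. The following sketch tabulates linear, quadratic, and exponential step counts; the function name `growth_table` and the chosen input sizes are illustrative assumptions.

```python
def growth_table(sizes):
    """Return (n, linear, quadratic, exponential) step counts for each n."""
    return [(n, n, n * n, 2 ** n) for n in sizes]


for n, linear, quadratic, exponential in growth_table([1, 10, 20, 30]):
    print(f"n={n:>2}  linear={linear:>2}  quadratic={quadratic:>3}  exponential={exponential}")
```

Already at n = 30 the exponential count exceeds one billion, while the linear count is still 30.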

Proving Correctness of an Algorithm

Once an algorithm is designed to solve a problem, it becomes very important that the algorithm always returns the desired output for every input given. So, there is a need to prove the correctness of an algorithm designed. This can be done using various methods −

Proof by Counterexample

Identify an input for which the algorithm might fail and run the algorithm on it. If the algorithm produces a wrong result, the counterexample disproves its correctness, and the algorithm must be redesigned so that it also handles this case. Note that passing such a test does not by itself prove correctness; it only fails to refute it.

Proof by Induction

Using mathematical induction, we can prove that an algorithm is correct for all inputs by proving that it is correct for a base case, say an input of size 1, then assuming that it is correct for an arbitrary input size k, and finally proving that it is correct for size k+1.

Proof by Loop Invariant

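Find a loop invariant, that is, a property that holds before every iteration of a loop. Prove that the invariant holds before the first iteration (the base case), then use mathematical induction to prove that each iteration preserves it. When the loop terminates, the invariant, together with the exit condition, should imply that the algorithm is correct.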
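A loop invariant can also be checked at run time while experimenting. The sketch below, which assumes a simple maximum-finding loop chosen for this example (the name `find_max` is hypothetical), asserts the invariant at the start of every iteration. The assertions are for illustration only and make the function slower than necessary.

```python
def find_max(values):
    """Return the largest element of a non-empty list."""
    best = values[0]
    for i in range(1, len(values)):
        # Invariant: best is the maximum of values[0..i-1].
        assert best == max(values[:i])
        if values[i] > best:
            best = values[i]
    # After the loop the invariant covers the whole list.
    assert best == max(values)
    return best


print(find_max([6, 4, 26, 14, 33, 64, 46]))  # 64
```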

Methodology of Analysis

To measure resource consumption of an algorithm, different strategies are used as discussed in this chapter.

Asymptotic Analysis

The asymptotic behavior of a function f(n) refers to the growth of f(n) as n gets large.

We typically ignore small values of n, since we are usually interested in estimating how slow the program will be on large inputs.

A good rule of thumb is that the slower the asymptotic growth rate, the better the algorithm, though this is not always true in practice for small inputs.

For example, a linear algorithm $f(n) = d.n + k$ is always asymptotically better than a quadratic one, $f(n) = c.n^2 + q$, whatever the positive constants c and d are.
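To see why small inputs are ignored, the sketch below compares a linear cost with large constants against a quadratic cost with small ones. The constants d = 100, k = 50, c = 1 and q = 0 are arbitrary values assumed for this example, and `crossover` is a hypothetical helper.

```python
def linear_cost(n, d=100, k=50):
    """Cost of the linear algorithm, d*n + k."""
    return d * n + k


def quadratic_cost(n, c=1, q=0):
    """Cost of the quadratic algorithm, c*n^2 + q."""
    return c * n * n + q


def crossover(limit=10_000):
    """Return the first n (up to limit) where the quadratic cost exceeds the linear one."""
    for n in range(1, limit + 1):
        if quadratic_cost(n) > linear_cost(n):
            return n
    return None


print(crossover())  # 101
```

With these constants the quadratic algorithm is cheaper for n up to 100, and the linear algorithm is cheaper for every larger n.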

Solving Recurrence Equations

A recurrence is an equation or inequality that describes a function in terms of its value on smaller inputs. Recurrences are generally used in the divide-and-conquer paradigm.

Let us consider T(n) to be the running time on a problem of size n.

If the problem size is small enough, say n ≤ c where c is a constant, the straightforward solution takes constant time, which is written as θ(1). Otherwise, suppose the division of the problem yields a sub-problems, each of size $\frac{n}{b}$.

To solve these sub-problems, the required time is a.T(n/b). If we consider the time required for division to be D(n) and the time required for combining the results of the sub-problems to be C(n), the recurrence relation can be represented as −

$$T(n)=\begin{cases}\theta(1) & \text{if } n\leqslant c\\ a\,T\left(\frac{n}{b}\right)+D(n)+C(n) & \text{otherwise}\end{cases}$$

A recurrence relation can be solved using the following methods −

Substitution Method − In this method, we guess a bound and using mathematical induction we prove that our assumption was correct.

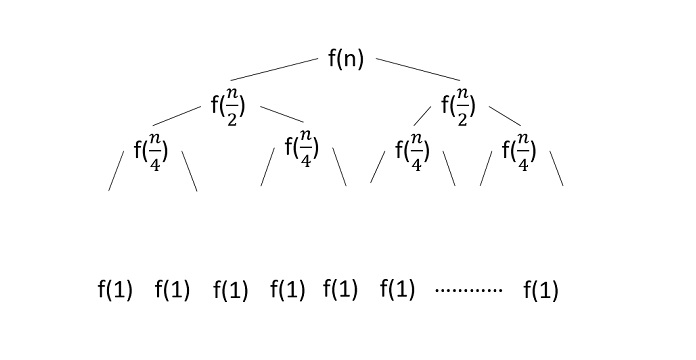

Recursion Tree Method − In this method, a recurrence tree is formed where each node represents the cost.

Master Theorem − This is another important technique to find the complexity of a recurrence relation.

Amortized Analysis

Amortized analysis is generally used for algorithms in which a sequence of similar operations is performed.

Amortized analysis provides a bound on the actual cost of the entire sequence, instead of bounding the cost of each operation in the sequence separately.

Amortized analysis differs from average-case analysis; probability is not involved in amortized analysis. Amortized analysis guarantees the average performance of each operation in the worst case.

It is not just a tool for analysis; it is also a way of thinking about design, since design and analysis are closely related.

Aggregate Method

The aggregate method gives a global view of a problem. In this method, if n operations take worst-case time T(n) in total, then the amortized cost of each operation is T(n)/n. Though different operations may take different amounts of time, in this method the varying cost is neglected.
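A classic illustration of the aggregate method, used here as an assumed example rather than one taken from this tutorial, is incrementing a binary counter. A single increment may flip many bits, but n increments starting from zero flip fewer than 2n bits in total, so the amortized cost per increment is constant. The names `increment` and `total_flips` are hypothetical.

```python
def increment(bits):
    """Increment a little-endian binary counter in place; return the bit flips."""
    flips = 0
    i = 0
    # Turn trailing 1s into 0s (the carry).
    while i < len(bits) and bits[i] == 1:
        bits[i] = 0
        flips += 1
        i += 1
    # Set the first 0 bit, unless the counter overflowed.
    if i < len(bits):
        bits[i] = 1
        flips += 1
    return flips


def total_flips(n, width=16):
    """Total bit flips for n increments starting from zero."""
    bits = [0] * width
    return sum(increment(bits) for _ in range(n))


print(total_flips(8))     # 15
print(total_flips(1000))  # 1994
```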

Accounting Method

In this method, different charges are assigned to different operations according to their actual cost. If the amortized cost of an operation exceeds its actual cost, the difference is assigned to the object as credit. This credit helps to pay for later operations for which the amortized cost is less than the actual cost.

If the actual cost and the amortized cost of the ith operation are $c_{i}$ and $\hat{c}_{i}$, then

$$\displaystyle\sum\limits_{i=1}^n \hat{c}_{i}\geqslant\displaystyle\sum\limits_{i=1}^n c_{i}$$

Potential Method

This method represents the prepaid work as potential energy, instead of considering prepaid work as credit. This energy can be released to pay for future operations.

Suppose we perform n operations starting with an initial data structure $D_{0}$. Let $c_{i}$ be the actual cost of the ith operation and $D_{i}$ the data structure that results after the ith operation. The potential function $\Phi$ maps each $D_{i}$ to a real number $\Phi (D_{i})$, the potential associated with $D_{i}$. The amortized cost $\hat{c}_{i}$ can be defined by

$$\hat{c}_{i}=c_{i}+\Phi (D_{i})-\Phi (D_{i-1})$$

Hence, the total amortized cost is

$$\displaystyle\sum\limits_{i=1}^n \hat{c}_{i}=\displaystyle\sum\limits_{i=1}^n (c_{i}+\Phi (D_{i})-\Phi (D_{i-1}))=\displaystyle\sum\limits_{i=1}^n c_{i}+\Phi (D_{n})-\Phi (D_{0})$$

Dynamic Table

If the allocated space for the table is not enough, we must copy the table into a larger table. Similarly, if a large number of members are erased from the table, it is a good idea to reallocate the table with a smaller size.

Using amortized analysis, we can show that the amortized cost of insertion and deletion is constant and that the unused space in a dynamic table never exceeds a constant fraction of the total space.
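The insertion half of this claim can be observed with a small experiment. The sketch below assumes a table that doubles its capacity when full, which is one common policy and not necessarily the only one; shrinking on deletion is omitted. The class name `DynamicTable` and its fields are hypothetical.

```python
class DynamicTable:
    """A growable table that doubles its capacity when it is full."""

    def __init__(self):
        self.capacity = 1
        self.size = 0
        self.slots = [None]
        self.copies = 0  # elements moved during all reallocations

    def append(self, item):
        if self.size == self.capacity:
            # Table is full: allocate a table twice as large and copy everything.
            new_slots = [None] * (2 * self.capacity)
            for i in range(self.size):
                new_slots[i] = self.slots[i]
                self.copies += 1
            self.slots = new_slots
            self.capacity *= 2
        self.slots[self.size] = item
        self.size += 1


table = DynamicTable()
for value in range(1000):
    table.append(value)

# 1000 writes plus 1023 copies: fewer than 3 units of work per insertion.
print(table.size, table.capacity, table.copies)  # 1000 1024 1023
```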

In the next chapter of this tutorial, we will discuss Asymptotic Notations in brief.

Asymptotic Notations & Apriori Analysis

In the design of an algorithm, complexity analysis is an essential aspect. Algorithmic complexity is mainly concerned with performance, that is, how fast or slow the algorithm works.

The complexity of an algorithm describes the efficiency of the algorithm in terms of the amount of the memory required to process the data and the processing time.

Complexity of an algorithm is analyzed in two perspectives: Time and Space.

Time Complexity

It is a function describing the amount of time required to run an algorithm in terms of the size of the input. "Time" can mean the number of memory accesses performed, the number of comparisons between integers, the number of times some inner loop is executed, or some other natural unit related to the amount of real time the algorithm will take.

Space Complexity

It is a function describing the amount of memory an algorithm takes in terms of the size of the input to the algorithm. We often speak of "extra" memory needed, not counting the memory needed to store the input itself. Again, we use natural (but fixed-length) units to measure this.

Space complexity is sometimes ignored because the space used is minimal and/or obvious. However, sometimes it becomes as important an issue as time.

Asymptotic Analysis

Asymptotic analysis of an algorithm refers to defining the mathematical foundation/framing of its run-time performance. Using asymptotic analysis, we can very well conclude the best case, average case, and worst case scenario of an algorithm.

Asymptotic analysis is input bound i.e., if there's no input to the algorithm, it is concluded to work in a constant time. Other than the "input" all other factors are considered constant.

Asymptotic analysis refers to computing the running time of any operation in mathematical units of computation. For example, the running time of one operation may be computed as $f(n) = n$ and that of another operation as $g(n) = n^2$. This means the running time of the first operation will increase linearly with n, whereas the running time of the second operation will increase quadratically. Similarly, the running time of both operations will be nearly the same if n is small.

Usually, the time required by an algorithm falls under three types −

Best Case − Minimum time required for program execution.

Average Case − Average time required for program execution.

Worst Case − Maximum time required for program execution.

Asymptotic Notations

Execution time of an algorithm depends on the instruction set, processor speed, disk I/O speed, etc. Hence, we estimate the efficiency of an algorithm asymptotically.

Time function of an algorithm is represented by T(n), where n is the input size.

Different types of asymptotic notations are used to represent the complexity of an algorithm. Following asymptotic notations are used to calculate the running time complexity of an algorithm.

O − Big Oh

Ω − Big omega

θ − Big theta

o − Little Oh

ω − Little omega

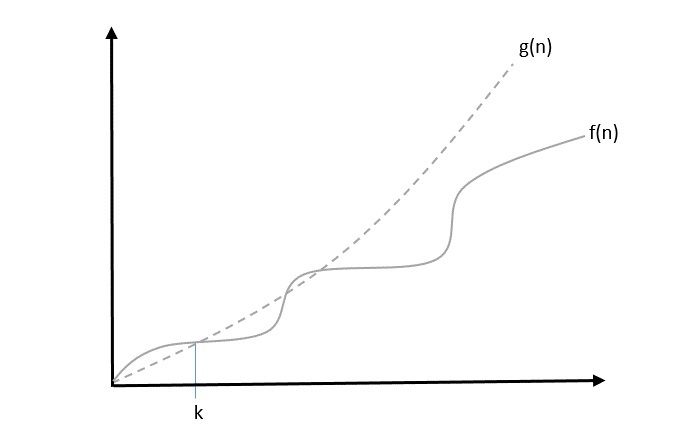

O: Asymptotic Upper Bound

O (Big Oh) is the most commonly used notation. A function f(n) is said to be of the order of g(n), written O(g(n)), if there exist a positive integer $n_{0}$ and a positive constant c such that −

$f(n)\leqslant c.g(n)$ for all $n > n_{0}$

Hence, function g(n) is an asymptotic upper bound for function f(n): up to a constant factor, g(n) grows at least as fast as f(n).

Example

Let us consider a given function, $f(n) = 4.n^3 + 10.n^2 + 5.n + 1$

Considering $g(n) = n^3$,

$f(n)\leqslant 5.g(n)$ for all the values of $n > 10$

Hence, the complexity of f(n) can be represented as $O(g(n))$, i.e. $O(n^3)$
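The constants in such a bound can be sanity-checked numerically. The sketch below searches a finite range for the smallest threshold from which $f(n)\leqslant c.g(n)$ holds; the helper name `smallest_threshold` and the search limit of 1000 are assumptions of this example. A finite check is not a proof, but here the gap $c.g(n) - f(n)$ keeps growing once it becomes positive.

```python
def f(n):
    return 4 * n**3 + 10 * n**2 + 5 * n + 1


def g(n):
    return n**3


def smallest_threshold(c, limit=1000):
    """Smallest n0 such that f(n) <= c*g(n) for every n in [n0, limit]."""
    n0 = None
    for n in range(limit, 0, -1):
        if f(n) <= c * g(n):
            n0 = n
        else:
            break
    return n0


print(smallest_threshold(5))   # 11: the bound with c = 5 holds from n = 11 on
print(smallest_threshold(20))  # 1: a larger constant works for every n >= 1
```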

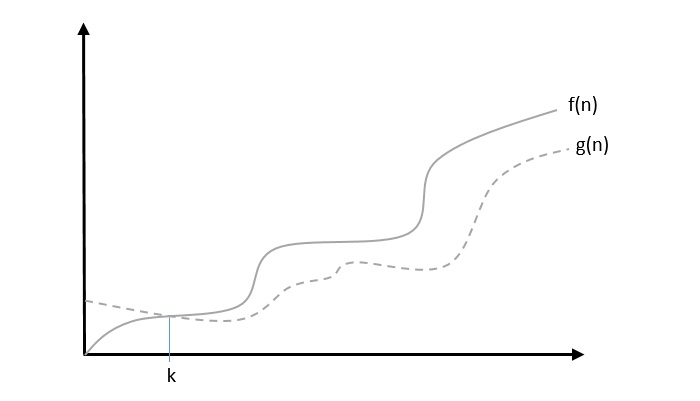

Ω: Asymptotic Lower Bound

We say that $f(n) = \Omega (g(n))$ when there exists a positive constant c such that $f(n)\geqslant c.g(n)$ for all sufficiently large values of n. Here n is a positive integer. It means function g is an asymptotic lower bound for function f; after a certain value of n, f will never go below $c.g(n)$.

Example

Let us consider a given function, $f(n) = 4.n^3 + 10.n^2 + 5.n + 1$.

Considering $g(n) = n^3$, $f(n)\geqslant 4.g(n)$ for all the values of $n > 0$.

Hence, the complexity of f(n) can be represented as $\Omega (g(n))$, i.e. $\Omega (n^3)$

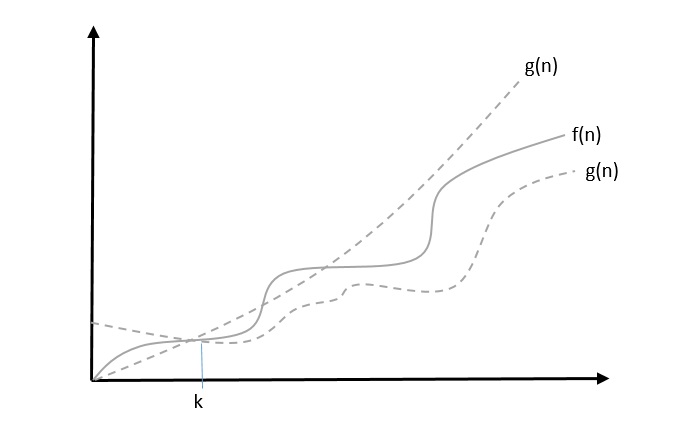

θ: Asymptotic Tight Bound

We say that $f(n) = \theta(g(n))$ when there exist positive constants c1 and c2 such that $c_{1}.g(n) \leqslant f(n) \leqslant c_{2}.g(n)$ for all sufficiently large values of n. Here n is a positive integer.

This means function g is a tight bound for function f.

Example

Let us consider a given function, $f(n) = 4.n^3 + 10.n^2 + 5.n + 1$

Considering $g(n) = n^3$, $4.g(n) \leqslant f(n) \leqslant 5.g(n)$ for all values of $n > 10$.

Hence, the complexity of f(n) can be represented as $\theta (g(n))$, i.e. $\theta (n^3)$.

o - Notation

The asymptotic upper bound provided by O-notation may or may not be asymptotically tight. The bound $2.n^2 = O(n^2)$ is asymptotically tight, but the bound $2.n = O(n^2)$ is not.

We use o-notation to denote an upper bound that is not asymptotically tight.

We formally define o(g(n)) (little-oh of g of n) as the set of functions f(n) such that for any positive constant $c > 0$, there exists a value $n_{0} > 0$ such that $0 \leqslant f(n) < c.g(n)$ for all $n \geqslant n_{0}$.

Intuitively, in the o-notation, the function f(n) becomes insignificant relative to g(n) as n approaches infinity; that is,

$$\lim_{n \rightarrow \infty}\left(\frac{f(n)}{g(n)}\right) = 0$$

Example

Let us consider the same function, $f(n) = 4.n^3 + 10.n^2 + 5.n + 1$

Considering $g(n) = n^{4}$,

$$\lim_{n \rightarrow \infty}\left(\frac{4.n^3 + 10.n^2 + 5.n + 1}{n^4}\right) = 0$$

Hence, the complexity of f(n) can be represented as $o(g(n))$, i.e. $o(n^4)$.

ω Notation

We use ω-notation to denote a lower bound that is not asymptotically tight. Formally, we define ω(g(n)) (little-omega of g of n) as the set of functions f(n) such that for any positive constant $c > 0$, there exists a value $n_{0} > 0$ such that $0 \leqslant c.g(n) < f(n)$ for all $n \geqslant n_{0}$.

For example, $\frac{n^2}{2} = \omega (n)$, but $\frac{n^2}{2} \neq \omega (n^2)$. The relation $f(n) = \omega (g(n))$ implies that, if the limit exists,

$$\lim_{n \rightarrow \infty}\left(\frac{f(n)}{g(n)}\right) = \infty$$

That is, f(n) becomes arbitrarily large relative to g(n) as n approaches infinity.

Example

Let us consider same function, $f(n) = 4.n^3 + 10.n^2 + 5.n + 1$

Considering $g(n) = n^2$,

$$\lim_{n \rightarrow \infty}\left(\frac{4.n^3 + 10.n^2 + 5.n + 1}{n^2}\right) = \infty$$

Hence, the complexity of f(n) can be represented as $\omega (g(n))$, i.e. $\omega (n^2)$.
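The two limits used for the little-oh and little-omega examples can be observed numerically. The sketch below evaluates the ratio of f(n) to a power of n for growing n; the helper name `ratio` and the sample values of n are assumptions of this example, and a numerical trend is only an illustration, not a proof of the limit.

```python
def f(n):
    return 4 * n**3 + 10 * n**2 + 5 * n + 1


def ratio(n, power):
    """Return f(n) / n**power."""
    return f(n) / n**power


for n in (10, 1_000, 1_000_000):
    # The first ratio shrinks towards 0, the second settles near 4,
    # and the third grows without bound.
    print(n, ratio(n, 4), ratio(n, 3), ratio(n, 2))
```

The ratio against $n^4$ tends to 0 (little-oh), the ratio against $n^3$ approaches the constant 4 (tight bound), and the ratio against $n^2$ grows without bound (little-omega).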

A Priori and A Posteriori Analysis

A priori analysis means that the analysis is performed before running the algorithm on a specific system. In this stage, a function is defined using some theoretical model. Hence, we determine the time and space complexity of an algorithm by just looking at the algorithm rather than running it on a particular system with a particular memory, processor, and compiler.

A posteriori analysis of an algorithm means we perform the analysis only after running it on a system. It directly depends on the system and changes from system to system.
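A measurement made after running the code might look like the following sketch. It uses `time.perf_counter` from the Python standard library; the helper name `measure`, the number of repeats, and the choice of the built-in `sorted` as the code under test are assumptions of this example. The printed time will differ from machine to machine and from run to run, which is exactly the limitation described above.

```python
import time


def measure(func, *args, repeats=5):
    """Return the best wall-clock time in seconds over several runs of func(*args)."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        best = min(best, time.perf_counter() - start)
    return best


# Time the built-in sort on 100,000 integers in descending order.
elapsed = measure(sorted, list(range(100_000, 0, -1)))
print(f"best of 5 runs: {elapsed:.6f} s")
```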

In industry, a posteriori analysis alone is of limited use, as software is generally made for an anonymous user who runs it on a system different from those available to the developer.

This is the reason we use asymptotic notations in a priori analysis to determine time and space complexity: the actual running time and memory use change from computer to computer, but asymptotically they are the same.

Time Complexity

In this chapter, let us discuss the time complexity of algorithms and the factors that influence it.

The time complexity of an algorithm is, in general, the number of elementary steps the algorithm performs, expressed as a function of the input size. It is not the execution time measured on a particular machine. It can be influenced by various factors like the input size, the methods used and the procedure. An algorithm is considered efficient when it produces its output in the minimal time possible.

A common way to find the time complexity of a recursive algorithm is to express its running time as a recurrence relation. Let us look into it further below.

Solving Recurrence Relations

A recurrence relation is an equation (or an inequality) that defines a function in terms of its own values on smaller inputs. Solutions to these relations are usually proved using mathematical induction. In both recurrences and induction, a condition allows the problem to be broken into smaller pieces that satisfy the same equation with smaller inputs.

These recurrence relations can be solved using multiple methods; they are −

Substitution Method

Recurrence Tree Method

Iteration Method

Master Theorem

Substitution Method

The substitution method is a trial and error method: a candidate solution to the relation is guessed and substituted into the equation to check whether it is valid. If it is valid, the solution is found. Otherwise, another candidate is checked.

Procedure

The steps to solve recurrences using the substitution method are −

Guess the form of solution based on the trial and error method

Use Mathematical Induction to prove the solution is correct for all the cases.

Example

Let us look into an example to solve a recurrence using the substitution method,

T(n) = 2T(n/2) + n

Here, we guess that the time complexity for the equation is O(n log n), that is, T(n) ≤ c·n log n for some constant c > 0 (logarithms are base 2). By the induction hypothesis, the bound holds for n/2, so T(n/2) ≤ c·(n/2) log(n/2). Substituting this into the given equation, we need to prove that T(n) is less than or equal to c·n log n.

$$T(n) \leq 2c\frac{n}{2}\log\frac{n}{2} + n = cn\log n - cn\log 2 + n = cn\log n - cn + n \leq cn\log n \quad \text{for } c \geq 1$$
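The guess can also be checked numerically. The following is a minimal sketch, not part of the original tutorial: it assumes the base case T(1) = 1 and restricts n to powers of two, and the function name `T` is chosen only for illustration.

```python
from functools import lru_cache
from math import log2


@lru_cache(maxsize=None)
def T(n: int) -> int:
    """T(n) = 2T(n/2) + n for n a power of two; T(1) = 1 is an assumed base case."""
    if n == 1:
        return 1
    return 2 * T(n // 2) + n


for k in range(1, 11):
    n = 2 ** k
    # With this base case the exact solution is n*log2(n) + n.
    assert T(n) == n * k + n
    # Hence T(n) <= c * n * log2(n) with c = 2 for every n >= 2.
    assert T(n) <= 2 * n * log2(n)
```

The constant c = 2 is larger than the c ≥ 1 needed by the inductive step because the assumed base case also has to be covered.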

Recurrence Tree Method

In the recurrence tree method, we draw a recurrence tree until the program cannot be divided into smaller parts further. Then we calculate the time taken in each level of the recurrence tree.

Procedure

Draw the recurrence tree for the program

Calculate the time complexity in every level and sum them up to find the total time complexity.

Example

Consider the binary search algorithm and construct a recursion tree for it −

Since the algorithm follows divide and conquer technique, the recursion tree is drawn until it reaches the smallest input level $\mathrm{T\left ( \frac{n}{2^{k}} \right )}$.

$$\mathrm{T\left ( \frac{n}{2^{k}} \right )=T\left ( 1 \right )}$$

$$\mathrm{n=2^{k}}$$

Applying logarithm on both sides of the equation,

$$\mathrm{log\: n=log\: 2^{k}}$$

$$\mathrm{k=log_{2}\:n}$$

Therefore, the time complexity of a binary search algorithm is O(log n).
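To see the logarithmic bound in practice, the sketch below counts loop iterations of an iterative binary search. It is an illustrative example only; the function name `binary_search` and the sample data are assumptions, not taken from the tutorial.

```python
from math import floor, log2


def binary_search(items, target):
    """Return (index, steps); index is -1 when target is absent."""
    low, high = 0, len(items) - 1
    steps = 0
    while low <= high:
        steps += 1                      # one halving of the search range
        mid = (low + high) // 2
        if items[mid] == target:
            return mid, steps
        if items[mid] < target:
            low = mid + 1               # discard the left half
        else:
            high = mid - 1              # discard the right half
    return -1, steps


data = list(range(0, 2048, 2))          # 1024 sorted even numbers
limit = floor(log2(len(data))) + 1      # at most floor(log2 n) + 1 iterations

index, steps = binary_search(data, 2046)
assert index == 1023 and steps <= limit

index, steps = binary_search(data, 7)   # odd number, not present
assert index == -1 and steps <= limit
```

Each iteration halves the remaining range, so the number of iterations never exceeds floor(log2 n) + 1.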

Master's Method

The Master's method, or Master's theorem, is applied on decreasing or dividing recurrence relations to find the time complexity. It uses a set of formulae to deduce the time complexity of an algorithm.


Master's Theorem

Master's theorem is one of the many methods that are applied to calculate time complexities of algorithms. In analysis, time complexities are calculated to find the most efficient logic for an algorithm. Master's theorem is applied on recurrence relations.

But before we get deep into the Master's theorem, let us first revise what recurrence relations are −

Recurrence relations are equations that define a sequence in which each term is a function of its preceding terms. In algorithm analysis, recurrence relations are usually formed when an algorithm calls itself recursively.

Problem Statement

Master's theorem can only be applied on decreasing and dividing recurrence relations. If the relation is not decreasing or dividing, Master's theorem must not be applied.

Master's Theorem for Dividing Functions

Consider a relation of type −

T(n) = aT(n/b) + f(n)

where, a >= 1 and b > 1,

n − size of the problem

a − number of sub-problems in the recursion

n/b − size of the sub problems based on the assumption that all sub-problems are of the same size.

f(n) − represents the cost of work done outside the recursion, $f(n) = \Theta(n^k \log^p n)$, where k >= 0 and p is a real number.

If the recurrence relation is in the above given form, then there are three cases in the master theorem to determine the asymptotic notations −

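Case 1: If $a > b^k$, then $T(n) = \Theta(n^{\log_b a})$, where $\log_b a = \log a / \log b$.

Case 2: If $a = b^k$, then

(i) If p > -1, then $T(n) = \Theta(n^{\log_b a} \log^{p+1} n)$

(ii) If p = -1, then $T(n) = \Theta(n^{\log_b a} \log \log n)$

(iii) If p < -1, then $T(n) = \Theta(n^{\log_b a})$

Case 3: If $a < b^k$, then

(i) If p >= 0, then $T(n) = \Theta(n^k \log^p n)$

(ii) If p < 0, then $T(n) = \Theta(n^k)$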
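The case analysis above can be written as a small classifier. This is a hedged sketch rather than a standard library routine: the function name `master_dividing` and the tuple it returns are assumptions made for illustration.

```python
from math import isclose, log


def master_dividing(a, b, k, p):
    """Classify T(n) = a*T(n/b) + Theta(n^k * log^p n).

    Returns (n_exp, log_exp, loglog_exp), meaning
    T(n) = Theta(n^n_exp * (log n)^log_exp * (log log n)^loglog_exp).
    """
    if a < 1 or b <= 1 or k < 0:
        raise ValueError("expected a >= 1, b > 1 and k >= 0")
    log_b_a = log(a) / log(b)
    if isclose(a, b ** k):              # a = b^k
        if isclose(p, -1):
            return (log_b_a, 0, 1)
        if p > -1:
            return (log_b_a, p + 1, 0)
        return (log_b_a, 0, 0)
    if a > b ** k:                      # a > b^k
        return (log_b_a, 0, 0)
    if p >= 0:                          # a < b^k
        return (k, p, 0)
    return (k, 0, 0)


# T(n) = 8T(n/2) + n^2: n exponent 3, no log factors.
print(master_dividing(8, 2, 2, 0))
# T(n) = 2T(n/2) + n/log n: n exponent 1 with a log log n factor.
print(master_dividing(2, 2, 1, -1))
```

Floating-point comparison of a with b^k uses `isclose`, so the sketch is meant for small, exactly representable parameters.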

Master's Theorem for Decreasing Functions

Consider a relation of type −

T(n) = aT(n-b) + f(n) where, a > 0 and b > 0, f(n) is asymptotically positive

Here,

n − size of the problem

a − number of sub-problems in the recursion

n-b − size of the sub problems based on the assumption that all sub-problems are of the same size.

f(n) − represents the cost of work done outside the recursion, $f(n) = O(n^k)$, where k >= 0.

If the recurrence relation is in the above given form, then there are three cases in the master theorem to determine the asymptotic notations −

if a = 1, $T(n) = O(n^{k+1})$

if a > 1, $T(n) = O(a^{n/b} \cdot n^k)$

if a < 1, $T(n) = O(n^k)$
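These bounds can be sanity-checked by evaluating a decreasing recurrence directly. The sketch below is illustrative only: it assumes f(n) = n^k exactly, an integer step b, and the base case T(m) = 0 for m ≤ 0; the names `evaluate` and `bound` are hypothetical.

```python
def evaluate(a, b, k, n, base=0):
    """Evaluate T(n) = a*T(n-b) + n**k, with T(m) = base for m <= 0 (assumed)."""
    args = []
    m = n
    while m > 0:                 # collect n, n-b, n-2b, ... down to the base case
        args.append(m)
        m -= b
    value = base
    for m in reversed(args):     # rebuild T from the smallest argument upwards
        value = a * value + m ** k
    return value


def bound(a, b, k, n):
    """The bound given by the three cases, without the hidden constant."""
    if a < 1:
        return n ** k
    if a == 1:
        return n ** (k + 1)
    return a ** (n / b) * n ** k


for n in range(1, 21):
    # T(n) = T(n-1) + n^2 stays below n^3.
    assert evaluate(1, 1, 2, n) <= bound(1, 1, 2, n)
    # T(n) = 2T(n-1) + n stays below n * 2^n.
    assert evaluate(2, 1, 1, n) <= bound(2, 1, 1, n)
```

For these two recurrences the hidden constant happens to be 1 under the assumed base case; in general the O-notation only promises some constant.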

Examples

A few examples applying the Master's theorem to dividing recurrence relations −

Example 1

Consider a recurrence relation given as $T(n) = 8T(n/2) + n^2$

In this problem, a = 8, b = 2 and $f(n) = \Theta(n^k \log^p n) = n^2$, giving us k = 2 and p = 0. Since $a = 8 > b^k = 2^2 = 4$, case 1 must be applied for this equation. To calculate, $T(n) = \Theta(n^{\log_b a}) = \Theta(n^{\log_2 8}) = \Theta(n^{\log 8 / \log 2}) = \Theta(n^3)$. Therefore, $T(n) = \Theta(n^3)$ is the tight bound for this equation.

Example 2

Consider a recurrence relation given as $T(n) = 4T(n/2) + n^2$

In this problem, a = 4, b = 2 and $f(n) = \Theta(n^k \log^p n) = n^2$, giving us k = 2 and p = 0. Since $a = 4 = b^k = 2^2 = 4$ and p > -1, case 2(i) must be applied for this equation. To calculate, $T(n) = \Theta(n^{\log_b a} \log^{p+1} n) = \Theta(n^{\log_2 4} \log^{0+1} n) = \Theta(n^2 \log n)$. Therefore, $T(n) = \Theta(n^2 \log n)$ is the tight bound for this equation.

Example 3

Consider a recurrence relation given as $T(n) = 2T(n/2) + n/\log n$

In this problem, a = 2, b = 2 and $f(n) = \Theta(n^k \log^p n) = n/\log n$, giving us k = 1 and p = -1. Since $a = 2 = b^k = 2^1 = 2$ and p = -1, case 2(ii) must be applied for this equation. To calculate, $T(n) = \Theta(n^{\log_b a} \log \log n) = \Theta(n^{\log_2 2} \log \log n) = \Theta(n \log \log n)$. Therefore, $T(n) = \Theta(n \log \log n)$ is the tight bound for this equation.

Example 4

Consider a recurrence relation given as $T(n) = 16T(n/4) + n^2/\log^2 n$

In this problem, a = 16, b = 4 and $f(n) = \Theta(n^k \log^p n) = n^2/\log^2 n$, giving us k = 2 and p = -2. Since $a = 16 = b^k = 4^2 = 16$ and p < -1, case 2(iii) must be applied for this equation. To calculate, $T(n) = \Theta(n^{\log_b a}) = \Theta(n^{\log_4 16}) = \Theta(n^2)$. Therefore, $T(n) = \Theta(n^2)$ is the tight bound for this equation.

Example 5

Consider a recurrence relation given as $T(n) = 2T(n/2) + n^2$

In this problem, a = 2, b = 2 and $f(n) = \Theta(n^k \log^p n) = n^2$, giving us k = 2 and p = 0. Since $a = 2 < b^k = 2^2 = 4$ and p = 0, case 3(i) must be applied for this equation. To calculate, $T(n) = \Theta(n^k \log^p n) = \Theta(n^2 \log^0 n) = \Theta(n^2)$. Therefore, $T(n) = \Theta(n^2)$ is the tight bound for this equation.

Example 6

Consider a recurrence relation given as $T(n) = 2T(n/2) + n^3/\log n$

In this problem, a = 2, b = 2 and $f(n) = \Theta(n^k \log^p n) = n^3/\log n$, giving us k = 3 and p = -1. Since $a = 2 < b^k = 2^3 = 8$ and p < 0, case 3(ii) must be applied for this equation. To calculate, $T(n) = \Theta(n^k) = \Theta(n^3)$. Therefore, $T(n) = \Theta(n^3)$ is the tight bound for this equation.

A few examples applying the Master's theorem to decreasing recurrence relations −

Example 1

Consider a recurrence relation given as $T(n) = T(n-1) + n^2$

In this problem, a = 1, b = 1 and $f(n) = O(n^k) = n^2$, giving us k = 2. Since a = 1, case 1 must be applied for this equation. To calculate, $T(n) = O(n^{k+1}) = O(n^{2+1}) = O(n^3)$. Therefore, $T(n) = O(n^3)$ is the upper bound for this equation.

Example 2

Consider a recurrence relation given as $T(n) = 2T(n-1) + n$

In this problem, a = 2, b = 1 and $f(n) = O(n^k) = n$, giving us k = 1. Since a > 1, case 2 must be applied for this equation. To calculate, $T(n) = O(a^{n/b} \cdot n^k) = O(2^{n/1} \cdot n^1) = O(n 2^n)$. Therefore, $T(n) = O(n 2^n)$ is the upper bound for this equation.

Example 3

Consider a recurrence relation given as $T(n) = n^4$

In this problem there is no recursive term, so we can take a = 0, and $f(n) = O(n^k) = n^4$, giving us k = 4. Since a < 1, case 3 must be applied for this equation. To calculate, $T(n) = O(n^k) = O(n^4)$. Therefore, $T(n) = O(n^4)$ is the upper bound for this equation.

Space Complexities

In this chapter, we will discuss the complexity of computational problems with respect to the amount of space an algorithm requires.

Space complexity shares many of the features of time complexity and serves as a further way of classifying problems according to their computational difficulties.

What is Space Complexity?

Space complexity is a function describing the amount of memory (space) an algorithm takes in terms of the amount of input to the algorithm.

We often speak of extra memory needed, not counting the memory needed to store the input itself. Again, we use natural (but fixed-length) units to measure this.

We can use bytes, but it's easier to use, say, the number of integers used, the number of fixed-sized structures, etc.

In the end, the function we come up with will be independent of the actual number of bytes needed to represent the unit.

Space complexity is sometimes ignored because the space used is minimal and/or obvious; however, sometimes it becomes as important an issue as time complexity.
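As a small illustration of counting extra memory in units of stored elements, the sketch below reverses a list in two ways. The function names are hypothetical and chosen for this example only.

```python
def reversed_copy(items):
    """Builds a second list of the same length: O(n) extra space."""
    result = []
    for i in range(len(items) - 1, -1, -1):
        result.append(items[i])
    return result


def reverse_in_place(items):
    """Swaps elements inside the input: O(1) extra space (two indices)."""
    left, right = 0, len(items) - 1
    while left < right:
        items[left], items[right] = items[right], items[left]
        left += 1
        right -= 1
    return items


data = [1, 2, 3, 4]
print(reversed_copy(data))     # a new list; data is unchanged
print(reverse_in_place(data))  # the same list object, now reversed
```

Both functions take O(n) time; they differ only in the extra memory they need beyond the input itself.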

Definition

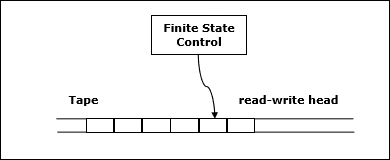

Let M be a deterministic Turing machine (TM) that halts on all inputs. The space complexity of M is the function $f \colon N \rightarrow N$, where f(n) is the maximum number of tape cells that M scans on any input of length n. If the space complexity of M is f(n), we can say that M runs in space f(n).

We estimate the space complexity of a Turing machine by using asymptotic notation.

Let $f \colon N \rightarrow R^+$ be a function. The space complexity classes can be defined as follows −

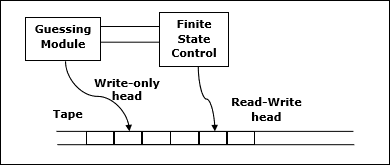

SPACE(f(n)) = {L | L is a language decided by an O(f(n)) space deterministic TM}

NSPACE(f(n)) = {L | L is a language decided by an O(f(n)) space non-deterministic TM}

PSPACE is the class of languages that are decidable in polynomial space on a deterministic Turing machine.

In other words, $PSPACE = \bigcup_k SPACE(n^k)$

Savitch's Theorem

One of the earliest theorems related to space complexity is Savitch's theorem. According to this theorem, a deterministic machine can simulate non-deterministic machines by using a small amount of space.

For time complexity, such a simulation seems to require an exponential increase in time. For space complexity, this theorem shows that any non-deterministic Turing machine that uses f(n) space can be converted to a deterministic TM that uses $f^2(n)$ space.

Hence, Savitch's theorem states that, for any function $f \colon N \rightarrow R^+$, where $f(n) \geqslant n$,

$NSPACE(f(n)) \subseteq SPACE(f^2(n))$

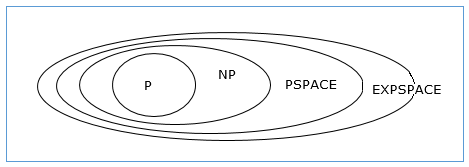

Relationship Among Complexity Classes

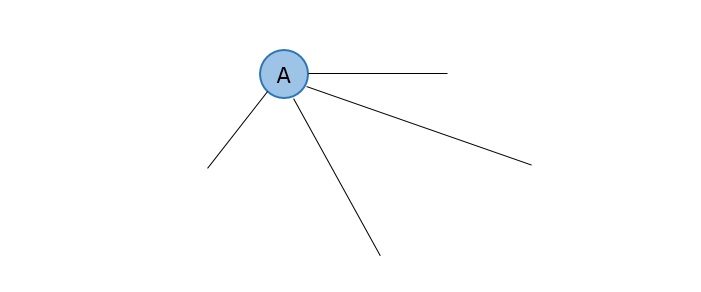

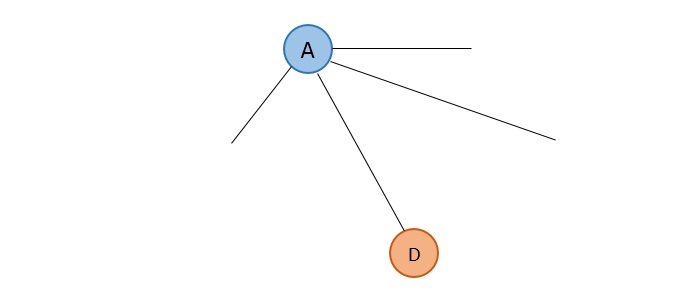

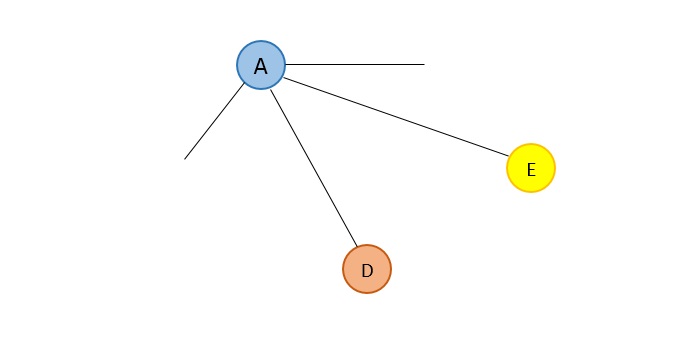

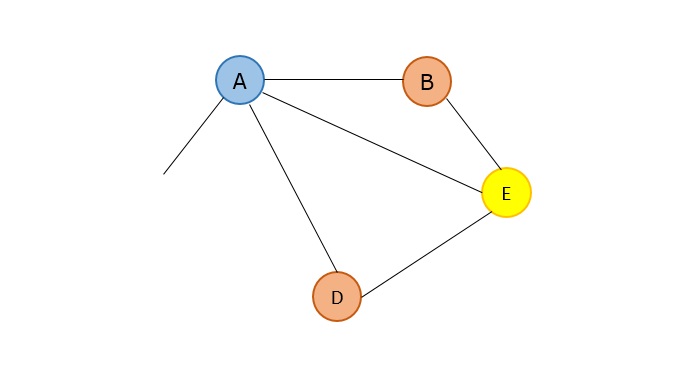

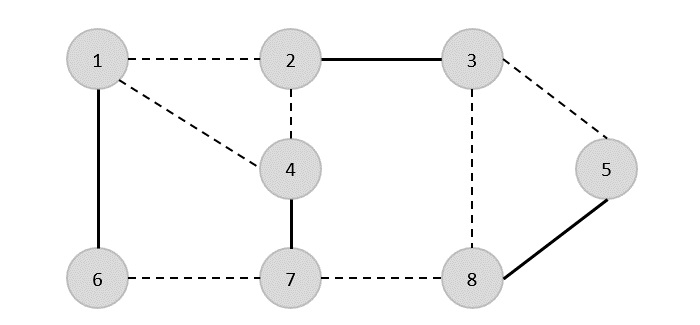

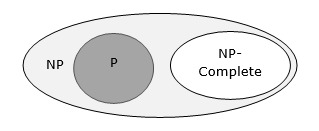

The following diagram depicts the relationship among different complexity classes.

Till now, we have not discussed P and NP classes in this tutorial. These will be discussed later.

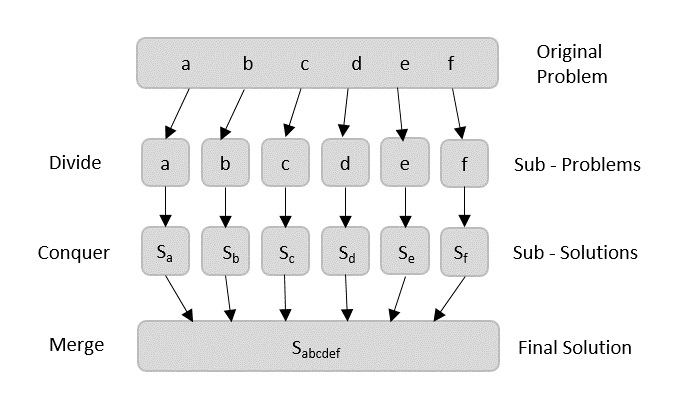

Divide & Conquer Algorithm

Using the divide and conquer approach, the problem in hand is divided into smaller sub-problems, and each sub-problem is then solved independently. When we keep dividing the sub-problems into even smaller sub-problems, we eventually reach a stage where no more division is possible. Those smallest possible sub-problems are solved directly, because they take little time to compute. The solutions of all sub-problems are finally merged in order to obtain the solution of the original problem.

Broadly, we can understand divide-and-conquer approach in a three-step process.

Divide/Break

This step involves breaking the problem into smaller sub-problems. Sub-problems should represent a part of the original problem. This step generally takes a recursive approach to divide the problem until no sub-problem is further divisible. At this stage, sub-problems become atomic in size but still represent some part of the actual problem.

Conquer/Solve

This step receives a lot of smaller sub-problems to be solved. Generally, at this level, the problems are considered 'solved' on their own.

Merge/Combine

When the smaller sub-problems are solved, this stage recursively combines them until they formulate a solution of the original problem. This algorithmic approach works recursively, and the conquer and merge steps work so closely together that they appear as one.
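The three steps can be seen in a deliberately simple sketch that sums a list by divide and conquer. The problem and the name `dc_sum` are chosen only for illustration; they are not from the tutorial.

```python
def dc_sum(values, low, high):
    """Sum values[low..high] (inclusive) by divide and conquer."""
    if low == high:
        return values[low]                   # atomic sub-problem, solved directly
    mid = (low + high) // 2                  # divide: split the range in two
    left = dc_sum(values, low, mid)          # conquer: solve each half
    right = dc_sum(values, mid + 1, high)
    return left + right                      # combine: merge the two answers


numbers = [3, 1, 4, 1, 5, 9, 2, 6]
print(dc_sum(numbers, 0, len(numbers) - 1))
```

Here the combine step is a single addition; in algorithms such as merge sort it carries most of the work.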

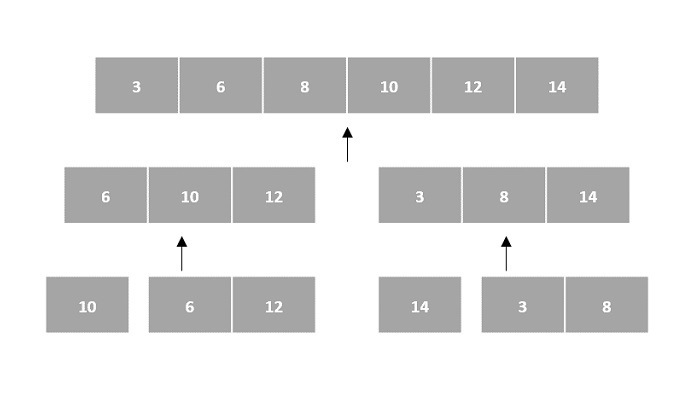

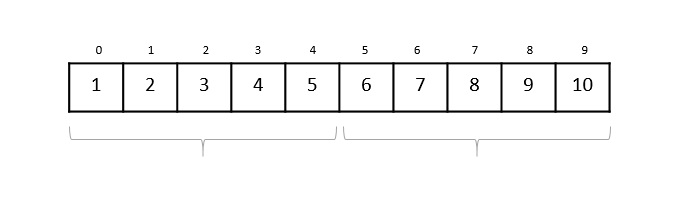

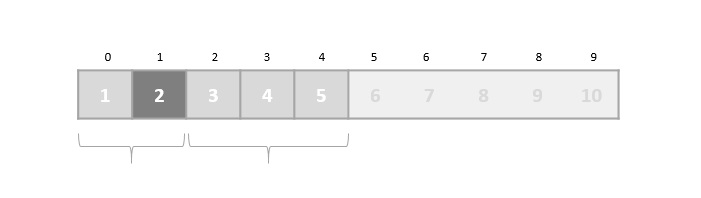

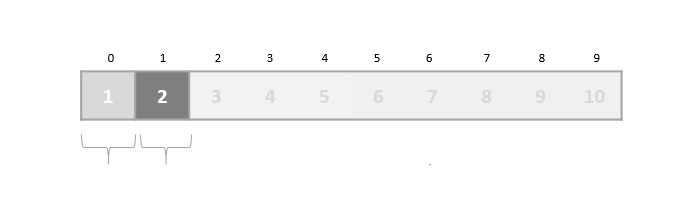

Arrays as Input

Algorithms solved with the divide and conquer technique can take their input in various forms; arrays are one of them. In algorithms that require the input to be a list, such as various sorting algorithms, array data structures are most commonly used.

In the input for a sorting algorithm below, the array is divided into subproblems until they cannot be divided further.

Then, the subproblems are sorted (the conquer step) and merged back to form the sorted version of the original array (the combine step).

Since arrays are indexed, linear data structures, sorting algorithms most commonly use arrays to receive input.

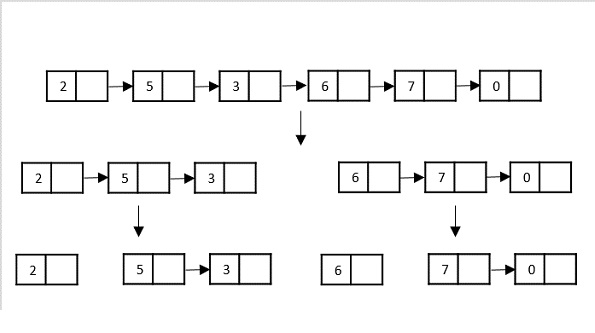

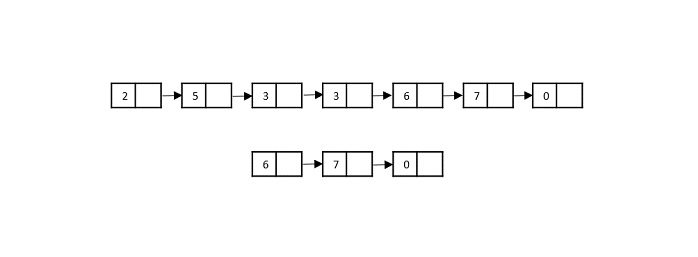

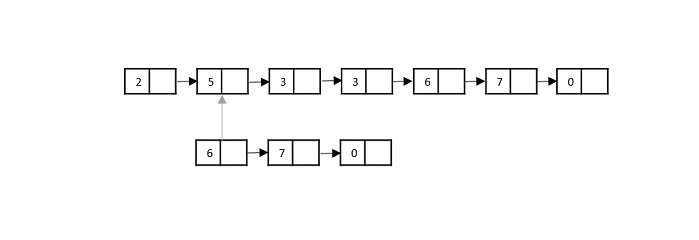

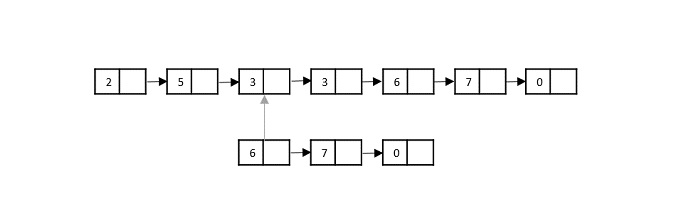

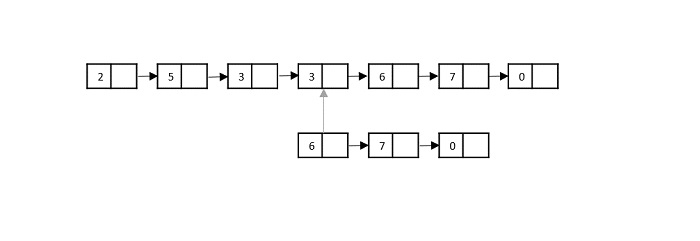

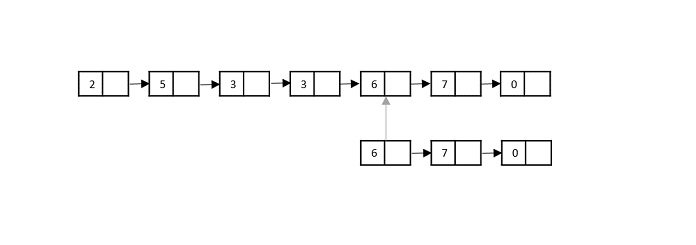

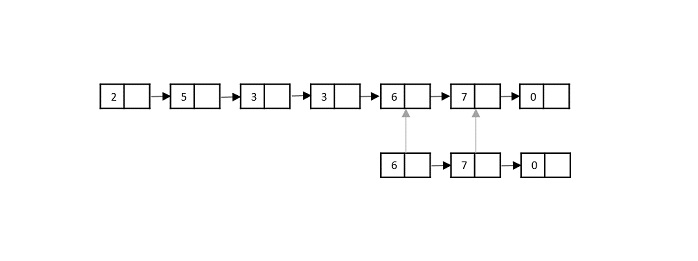

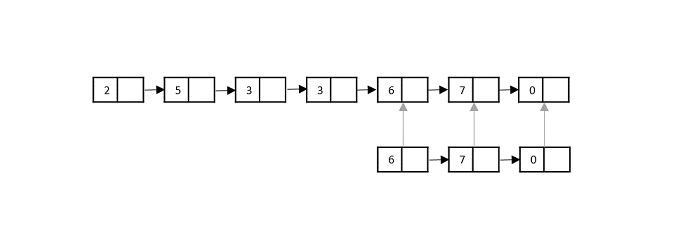

Linked Lists as Input

Another data structure that can be used to take input for divide and conquer algorithms is a linked list (for example, merge sort using linked lists). Like arrays, linked lists are also linear data structures that store data sequentially.

Consider the merge sort algorithm on linked list; following the very popular tortoise and hare algorithm, the list is divided until it cannot be divided further.

Then, the nodes in the list are sorted (conquered). These nodes are then combined (or merged) recursively until the final solution is achieved.

Various searching algorithms can also be performed on the linked list data structures with a slightly different technique as linked lists are not indexed linear data structures. They must be handled using the pointers available in the nodes of the list.
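A minimal sketch of merge sort on a singly linked list is given below, using the tortoise and hare technique to find the middle. The `Node` class and all function names are assumptions made for this example.

```python
class Node:
    def __init__(self, value, next=None):
        self.value = value
        self.next = next


def split(head):
    """Tortoise and hare: slow advances one node per step, fast advances two."""
    slow, fast = head, head.next
    while fast and fast.next:
        slow = slow.next
        fast = fast.next.next
    second = slow.next
    slow.next = None                 # cut the list after the middle node
    return head, second


def merge(a, b):
    """Merge two sorted lists by relinking nodes; no indexing is needed."""
    dummy = tail = Node(None)
    while a and b:
        if a.value <= b.value:
            tail.next, a = a, a.next
        else:
            tail.next, b = b, b.next
        tail = tail.next
    tail.next = a or b               # append whichever list still has nodes
    return dummy.next


def merge_sort(head):
    if head is None or head.next is None:
        return head                  # zero or one node is already sorted
    left, right = split(head)
    return merge(merge_sort(left), merge_sort(right))


def from_list(values):
    head = None
    for v in reversed(values):
        head = Node(v, head)
    return head


def to_list(head):
    out = []
    while head:
        out.append(head.value)
        head = head.next
    return out


print(to_list(merge_sort(from_list([6, 4, 26, 14, 33, 64, 46]))))
```

Because nodes are relinked rather than copied, the only extra memory is the recursion stack.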

Pros and cons of Divide and Conquer Approach

Divide and conquer approach supports parallelism as sub-problems are independent. Hence, an algorithm, which is designed using this technique, can run on the multiprocessor system or in different machines simultaneously.

In this approach, most of the algorithms are designed using recursion, hence memory usage is high. Recursive functions use the call stack, where the state of each function call needs to be stored.

Examples of Divide and Conquer Approach

The following computer algorithms are based on divide-and-conquer programming approach −

Merge Sort

Quick Sort

Binary Search

Strassen's Matrix Multiplication

Closest pair (points)

Karatsuba

There are various ways available to solve any computer problem, but those mentioned above are good examples of the divide and conquer approach.

Max-Min Problem

Let us consider a simple problem that can be solved by divide and conquer technique.

Problem Statement

The Max-Min Problem in algorithm analysis is finding the maximum and minimum value in an array.

Solution

To find the maximum and minimum numbers in a given array numbers[] of size n, the following algorithm can be used. First we present the naive method, and then the divide and conquer approach.

Naive Method

The naive method is a basic method to solve any problem. In this method, the maximum and minimum numbers are found separately. To find the maximum and minimum numbers, the following straightforward algorithm can be used.

    Algorithm: Max-Min-Element (numbers[])
    max := numbers[1]
    min := numbers[1]
    for i = 2 to n do
       if numbers[i] > max then
          max := numbers[i]
       if numbers[i] < min then
          min := numbers[i]
    return (max, min)

Example

    #include <stdio.h>

    struct Pair {
        int max;
        int min;
    };

    // Function to find maximum and minimum using the naive algorithm
    struct Pair maxMinNaive(int arr[], int n) {
        struct Pair result;
        result.max = arr[0];
        result.min = arr[0];
        // Loop through the array to find the maximum and minimum values
        for (int i = 1; i < n; i++) {
            if (arr[i] > result.max) {
                result.max = arr[i]; // Update the maximum value if a larger element is found
            }
            if (arr[i] < result.min) {
                result.min = arr[i]; // Update the minimum value if a smaller element is found
            }
        }
        return result; // Return the pair of maximum and minimum values
    }

    int main() {
        int arr[] = {6, 4, 26, 14, 33, 64, 46};
        int n = sizeof(arr) / sizeof(arr[0]);
        struct Pair result = maxMinNaive(arr, n);
        printf("Maximum element is: %d\n", result.max);
        printf("Minimum element is: %d\n", result.min);
        return 0;
    }

Output

    Maximum element is: 64
    Minimum element is: 4

    #include <iostream>
    using namespace std;

    struct Pair {
        int max;
        int min;
    };

    // Function to find maximum and minimum using the naive algorithm
    Pair maxMinNaive(int arr[], int n) {
        Pair result;
        result.max = arr[0];
        result.min = arr[0];
        // Loop through the array to find the maximum and minimum values
        for (int i = 1; i < n; i++) {
            if (arr[i] > result.max) {
                result.max = arr[i]; // Update the maximum value if a larger element is found
            }
            if (arr[i] < result.min) {
                result.min = arr[i]; // Update the minimum value if a smaller element is found
            }
        }
        return result; // Return the pair of maximum and minimum values
    }

    int main() {
        int arr[] = {6, 4, 26, 14, 33, 64, 46};
        int n = sizeof(arr) / sizeof(arr[0]);
        Pair result = maxMinNaive(arr, n);
        cout << "Maximum element is: " << result.max << endl;
        cout << "Minimum element is: " << result.min << endl;
        return 0;
    }

Output

    Maximum element is: 64
    Minimum element is: 4

    public class MaxMinNaive {
        static class Pair {
            int max;
            int min;
        }

        // Function to find maximum and minimum using the naive algorithm
        static Pair maxMinNaive(int[] arr) {
            Pair result = new Pair();
            result.max = arr[0];
            result.min = arr[0];
            // Loop through the array to find the maximum and minimum values
            for (int i = 1; i < arr.length; i++) {
                if (arr[i] > result.max) {
                    result.max = arr[i]; // Update the maximum value if a larger element is found
                }
                if (arr[i] < result.min) {
                    result.min = arr[i]; // Update the minimum value if a smaller element is found
                }
            }
            return result; // Return the pair of maximum and minimum values
        }

        public static void main(String[] args) {
            int[] arr = {6, 4, 26, 14, 33, 64, 46};
            Pair result = maxMinNaive(arr);
            System.out.println("Maximum element is: " + result.max);
            System.out.println("Minimum element is: " + result.min);
        }
    }

Output

    Maximum element is: 64
    Minimum element is: 4

    def max_min_naive(arr):
        max_val = arr[0]
        min_val = arr[0]
        # Loop through the array to find the maximum and minimum values
        for i in range(1, len(arr)):
            if arr[i] > max_val:
                max_val = arr[i]  # Update the maximum value if a larger element is found
            if arr[i] < min_val:
                min_val = arr[i]  # Update the minimum value if a smaller element is found
        return max_val, min_val  # Return the pair of maximum and minimum values

    arr = [6, 4, 26, 14, 33, 64, 46]
    max_val, min_val = max_min_naive(arr)
    print("Maximum element is:", max_val)
    print("Minimum element is:", min_val)

Output

    Maximum element is: 64
    Minimum element is: 4

Analysis

The number of comparisons in the naive method is 2n - 2.

The number of comparisons can be reduced using the divide and conquer approach. Following is the technique.

Divide and Conquer Approach

In this approach, the array is divided into two halves. Then, using a recursive approach, the maximum and minimum numbers in each half are found. Finally, the maximum of the two maxima and the minimum of the two minima are returned.

In this given problem, the number of elements in an array is $y - x + 1$, where y is greater than or equal to x.

$\mathbf{\mathit{Max - Min(x, y)}}$ will return the maximum and minimum values of an array $\mathbf{\mathit{numbers[x...y]}}$.

    Algorithm: Max - Min(x, y)
    if y − x ≤ 1 then
       return (max(numbers[x], numbers[y]), min(numbers[x], numbers[y]))
    else
       (max1, min1) := maxmin(x, ⌊(x + y)/2⌋)
       (max2, min2) := maxmin(⌊(x + y)/2⌋ + 1, y)
       return (max(max1, max2), min(min1, min2))

Example

    #include <stdio.h>

    // Structure to store both maximum and minimum elements
    struct Pair {
        int max;
        int min;
    };

    struct Pair maxMinDivideConquer(int arr[], int low, int high) {
        struct Pair result;
        struct Pair left;
        struct Pair right;
        int mid;
        // If only one element in the array
        if (low == high) {
            result.max = arr[low];
            result.min = arr[low];
            return result;
        }
        // If there are two elements in the array
        if (high == low + 1) {
            if (arr[low] < arr[high]) {
                result.min = arr[low];
                result.max = arr[high];
            } else {
                result.min = arr[high];
                result.max = arr[low];
            }
            return result;
        }
        // If there are more than two elements in the array
        mid = (low + high) / 2;
        left = maxMinDivideConquer(arr, low, mid);
        right = maxMinDivideConquer(arr, mid + 1, high);
        // Compare and get the maximum of both parts
        result.max = (left.max > right.max) ? left.max : right.max;
        // Compare and get the minimum of both parts
        result.min = (left.min < right.min) ? left.min : right.min;
        return result;
    }

    int main() {
        int arr[] = {6, 4, 26, 14, 33, 64, 46};
        int n = sizeof(arr) / sizeof(arr[0]);
        struct Pair result = maxMinDivideConquer(arr, 0, n - 1);
        printf("Maximum element is: %d\n", result.max);
        printf("Minimum element is: %d\n", result.min);
        return 0;
    }

Output

    Maximum element is: 64
    Minimum element is: 4

    #include <iostream>
    using namespace std;

    // Structure to store both maximum and minimum elements
    struct Pair {
        int max;
        int min;
    };

    Pair maxMinDivideConquer(int arr[], int low, int high) {
        Pair result, left, right;
        int mid;
        // If only one element in the array
        if (low == high) {
            result.max = arr[low];
            result.min = arr[low];
            return result;
        }
        // If there are two elements in the array
        if (high == low + 1) {
            if (arr[low] < arr[high]) {
                result.min = arr[low];
                result.max = arr[high];
            } else {
                result.min = arr[high];
                result.max = arr[low];
            }
            return result;
        }
        // If there are more than two elements in the array
        mid = (low + high) / 2;
        left = maxMinDivideConquer(arr, low, mid);
        right = maxMinDivideConquer(arr, mid + 1, high);
        // Compare and get the maximum of both parts
        result.max = (left.max > right.max) ? left.max : right.max;
        // Compare and get the minimum of both parts
        result.min = (left.min < right.min) ? left.min : right.min;
        return result;
    }

    int main() {
        int arr[] = {6, 4, 26, 14, 33, 64, 46};
        int n = sizeof(arr) / sizeof(arr[0]);
        Pair result = maxMinDivideConquer(arr, 0, n - 1);
        cout << "Maximum element is: " << result.max << endl;
        cout << "Minimum element is: " << result.min << endl;
        return 0;
    }

Output

    Maximum element is: 64
    Minimum element is: 4

    public class MaxMinDivideConquer {
        // Class to store both maximum and minimum elements
        static class Pair {
            int max;
            int min;
        }

        static Pair maxMinDivideConquer(int[] arr, int low, int high) {
            Pair result = new Pair();
            Pair left, right;
            int mid;
            // If only one element in the array
            if (low == high) {
                result.max = arr[low];
                result.min = arr[low];
                return result;
            }
            // If there are two elements in the array
            if (high == low + 1) {
                if (arr[low] < arr[high]) {
                    result.min = arr[low];
                    result.max = arr[high];
                } else {
                    result.min = arr[high];
                    result.max = arr[low];
                }
                return result;
            }
            // If there are more than two elements in the array
            mid = (low + high) / 2;
            left = maxMinDivideConquer(arr, low, mid);
            right = maxMinDivideConquer(arr, mid + 1, high);
            // Compare and get the maximum of both parts
            result.max = Math.max(left.max, right.max);
            // Compare and get the minimum of both parts
            result.min = Math.min(left.min, right.min);
            return result;
        }

        public static void main(String[] args) {
            int[] arr = {6, 4, 26, 14, 33, 64, 46};
            Pair result = maxMinDivideConquer(arr, 0, arr.length - 1);
            System.out.println("Maximum element is: " + result.max);
            System.out.println("Minimum element is: " + result.min);
        }
    }

Output

    Maximum element is: 64
    Minimum element is: 4

    # Class to store both maximum and minimum elements
    class Pair:
        def __init__(self):
            self.max = 0
            self.min = 0

    def max_min_divide_conquer(arr, low, high):
        result = Pair()
        # If only one element in the array
        if low == high:
            result.max = arr[low]
            result.min = arr[low]
            return result
        # If there are two elements in the array
        if high == low + 1:
            if arr[low] < arr[high]:
                result.min = arr[low]
                result.max = arr[high]
            else:
                result.min = arr[high]
                result.max = arr[low]
            return result
        # If there are more than two elements in the array
        mid = (low + high) // 2
        left = max_min_divide_conquer(arr, low, mid)
        right = max_min_divide_conquer(arr, mid + 1, high)
        # Compare and get the maximum of both parts
        result.max = max(left.max, right.max)
        # Compare and get the minimum of both parts
        result.min = min(left.min, right.min)
        return result

    arr = [6, 4, 26, 14, 33, 64, 46]
    result = max_min_divide_conquer(arr, 0, len(arr) - 1)
    print("Maximum element is:", result.max)
    print("Minimum element is:", result.min)

Output

    Maximum element is: 64
    Minimum element is: 4

Analysis

Let T(n) be the number of comparisons made by $\mathbf{\mathit{Max - Min(x, y)}}$, where the number of elements $n = y - x + 1$.

If T(n) represents the number of comparisons, then the recurrence relation can be represented as

$$T(n) = \begin{cases}T\left(\lfloor\frac{n}{2}\rfloor\right)+T\left(\lceil\frac{n}{2}\rceil\right)+2 & for\: n>2\\1 & for\:n = 2 \\0 & for\:n = 1\end{cases}$$

Let us assume that n is a power of 2. Hence, $n = 2^k$, where k is the height of the recursion tree.

So,

$$T(n) = 2T\left(\frac{n}{2}\right) + 2 = 2\left(2T\left(\frac{n}{4}\right) + 2\right) + 2 = \cdots = \frac{3n}{2} - 2$$

Compared to the naive method, the divide and conquer approach makes fewer comparisons. However, in asymptotic notation both approaches are represented by O(n).
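The two comparison counts can be confirmed by instrumenting both methods. This is an illustrative sketch: the function names are hypothetical, and an eighth value (1) is appended to the example array so that n is a power of 2.

```python
def naive_comparisons(arr):
    """Return (max, min, comparisons) for the naive scan."""
    comparisons = 0
    largest = smallest = arr[0]
    for value in arr[1:]:
        comparisons += 2                 # one test against max, one against min
        if value > largest:
            largest = value
        if value < smallest:
            smallest = value
    return largest, smallest, comparisons


def dc_comparisons(arr, low, high):
    """Return (max, min, comparisons) for the divide and conquer method."""
    if low == high:
        return arr[low], arr[low], 0     # n = 1: no comparison
    if high == low + 1:
        if arr[low] < arr[high]:         # n = 2: one comparison
            return arr[high], arr[low], 1
        return arr[low], arr[high], 1
    mid = (low + high) // 2
    max1, min1, c1 = dc_comparisons(arr, low, mid)
    max2, min2, c2 = dc_comparisons(arr, mid + 1, high)
    # Two more comparisons to combine: max1 vs max2 and min1 vs min2.
    return max(max1, max2), min(min1, min2), c1 + c2 + 2


data = [6, 4, 26, 14, 33, 64, 46, 1]     # n = 8
n = len(data)
assert naive_comparisons(data)[2] == 2 * n - 2              # 14
assert dc_comparisons(data, 0, n - 1)[2] == 3 * n // 2 - 2  # 10
```

For n = 8 the naive scan makes 14 comparisons and the divide and conquer version makes 10, matching 2n - 2 and 3n/2 - 2.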

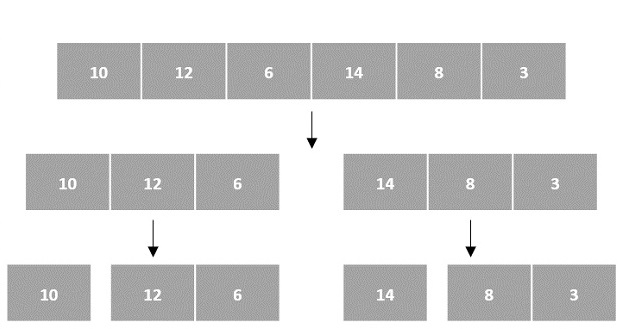

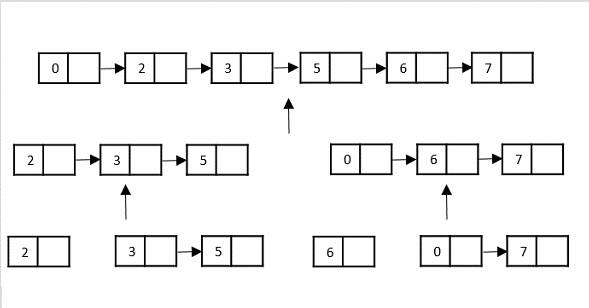

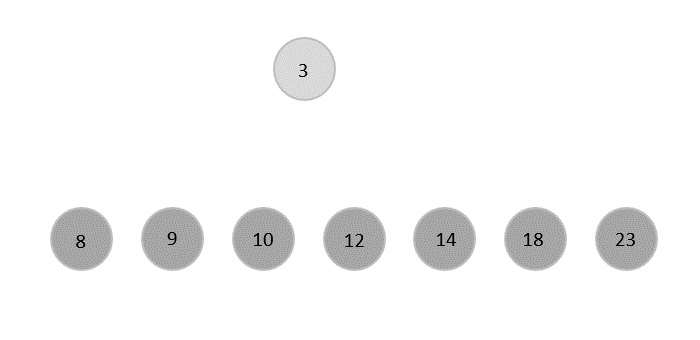

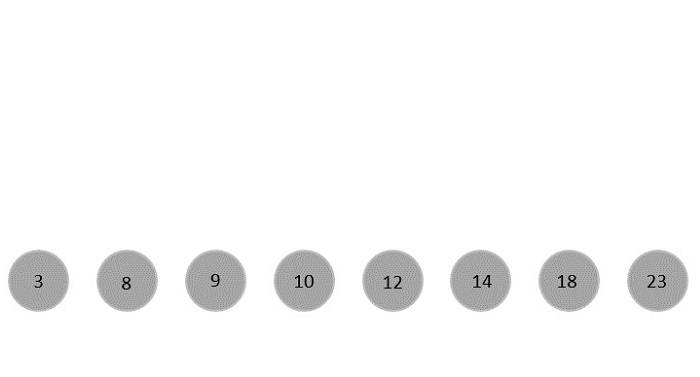

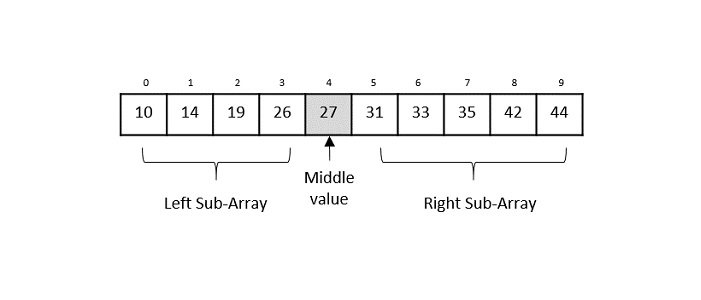

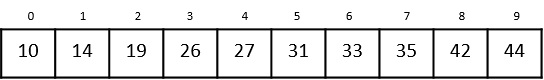

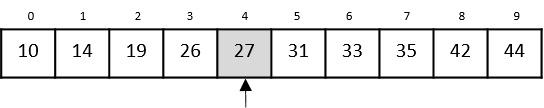

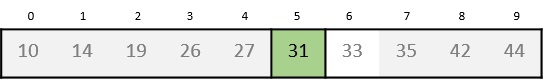

Merge Sort Algorithm

Merge sort is a sorting technique based on the divide and conquer technique. With a worst-case time complexity of O(n log n), it is one of the most widely used sorting algorithms.

Merge sort first divides the array into equal halves and then combines them in a sorted manner.

How Merge Sort Works?

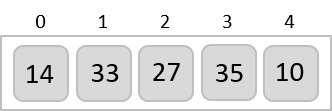

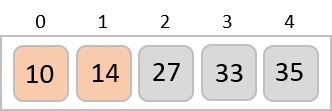

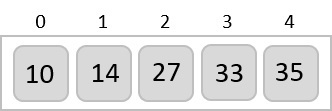

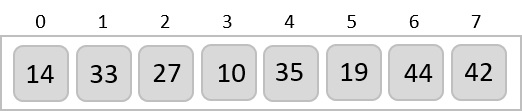

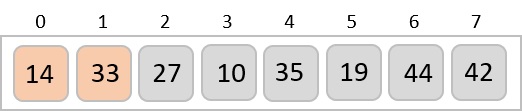

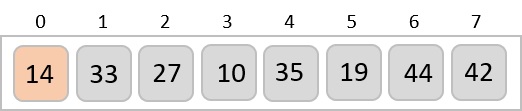

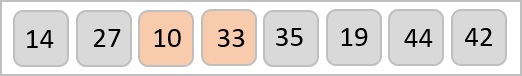

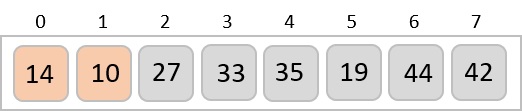

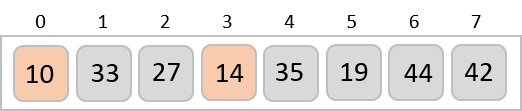

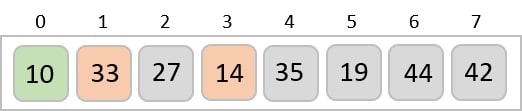

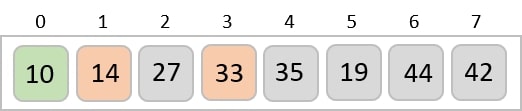

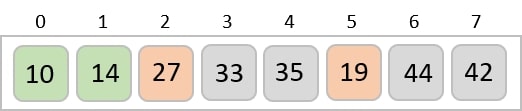

To understand merge sort, we take an unsorted array as the following −

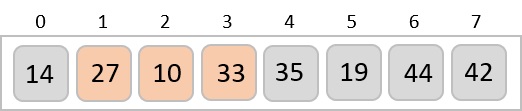

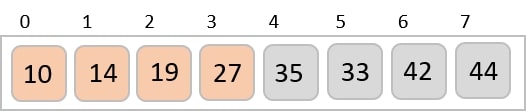

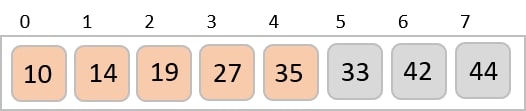

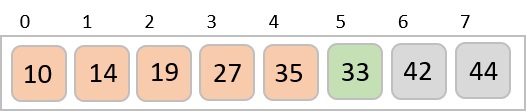

We know that merge sort first divides the whole array recursively into equal halves until atomic values are reached. We see here that an array of 8 items is divided into two arrays of size 4.

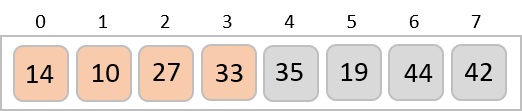

This does not change the sequence of appearance of items in the original. Now we divide these two arrays into halves.

We further divide these arrays until we reach atomic values, which cannot be divided any further.

Now, we combine them in exactly the same manner as they were broken down. Please note the color codes given to these lists.

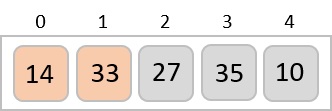

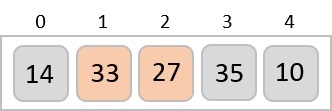

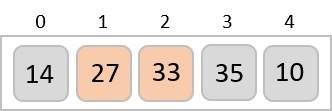

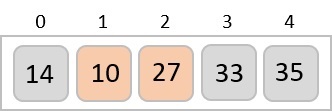

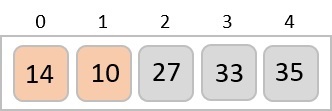

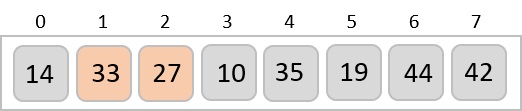

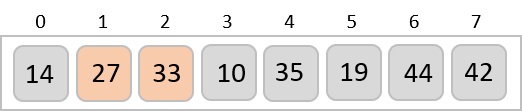

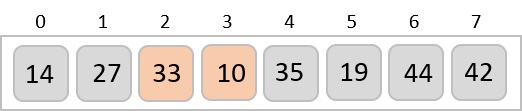

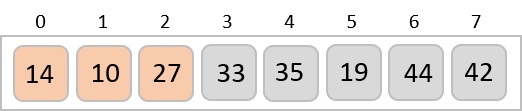

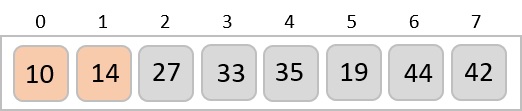

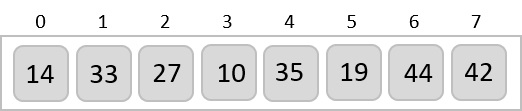

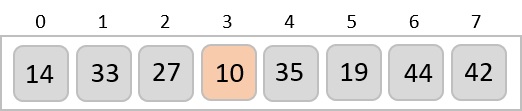

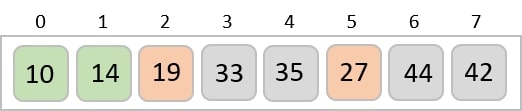

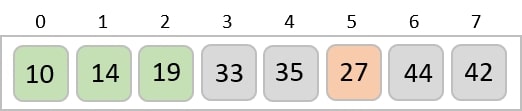

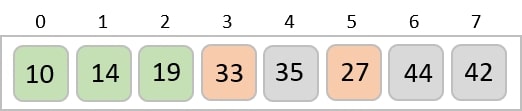

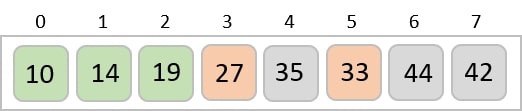

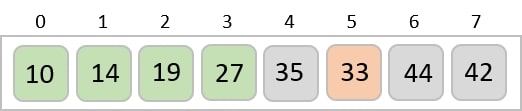

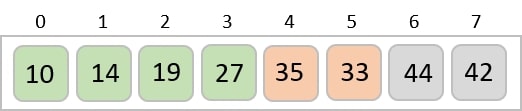

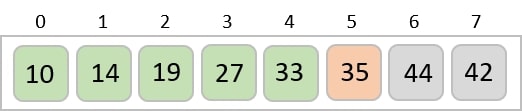

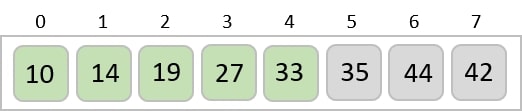

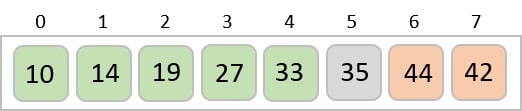

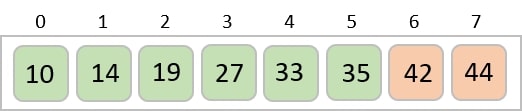

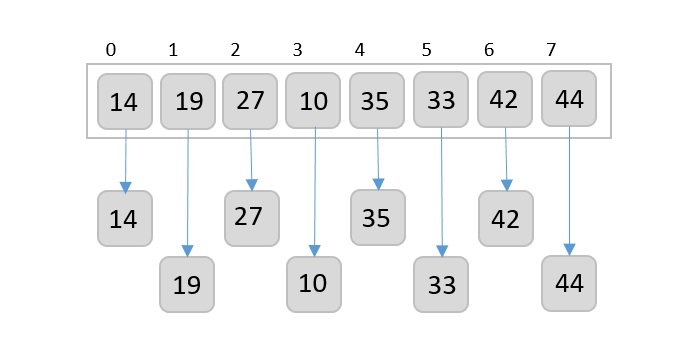

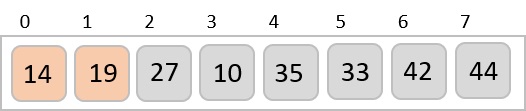

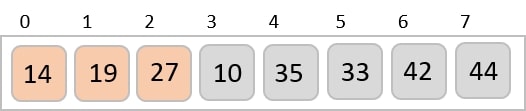

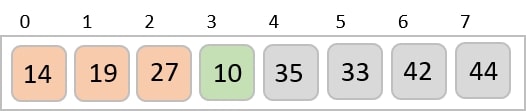

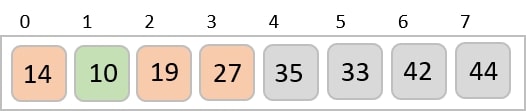

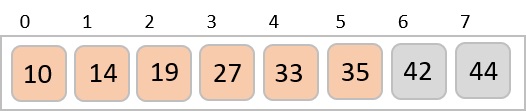

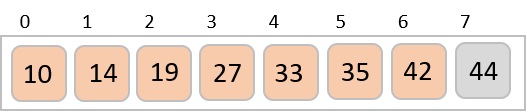

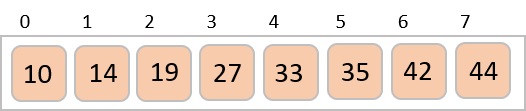

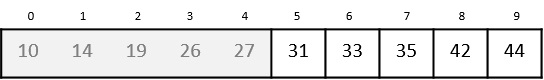

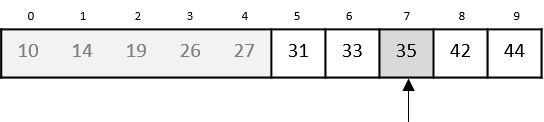

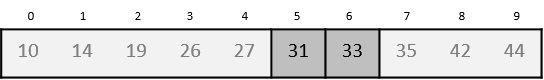

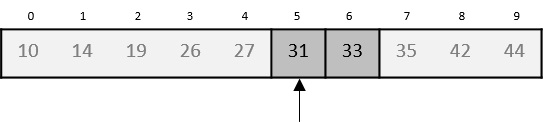

We first compare the element for each list and then combine them into another list in a sorted manner. We see that 14 and 33 are in sorted positions. We compare 27 and 10 and in the target list of 2 values we put 10 first, followed by 27. We change the order of 19 and 35 whereas 42 and 44 are placed sequentially.

In the next iteration of the combining phase, we compare lists of two data values, and merge them into lists of four data values, placing all in sorted order.

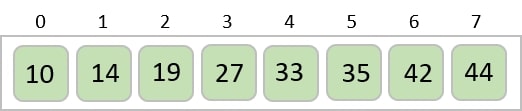

After the final merging, the list becomes sorted and is considered the final solution.
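The combining phase described above can be traced with a short sketch. The input array is inferred from the values named in the text (14, 33, 27, 10, 35, 19, 42, 44), and the helper names `merge` and `merge_levels` are assumptions for this example.

```python
def merge(left, right):
    """Merge two sorted lists into one sorted list."""
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])              # at most one of these is non-empty
    merged.extend(right[j:])
    return merged


def merge_levels(values):
    """Return the sub-lists that exist after each round of pairwise merging."""
    level = [[v] for v in values]        # atomic lists of one element each
    levels = []
    while len(level) > 1:
        level = [
            merge(level[i], level[i + 1]) if i + 1 < len(level) else level[i]
            for i in range(0, len(level), 2)
        ]
        levels.append(level)
    return levels


for level in merge_levels([14, 33, 27, 10, 35, 19, 42, 44]):
    print(level)
```

The first round yields four sorted pairs, the second round two sorted lists of four values, and the last round the fully sorted list.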

Merge Sort Algorithm

Merge sort keeps on dividing the list into equal halves until it can no more be divided. By definition, if it is only one element in the list, it is considered sorted. Then, merge sort combines the smaller sorted lists keeping the new list sorted too.

Step 1 − if it is only one element in the list, consider it already sorted, so return.

Step 2 − divide the list recursively into two halves until it can no more be divided.

Step 3 − merge the smaller lists into new list in sorted order.

Pseudocode

We shall now see the pseudocode for the merge sort functions. The algorithm relies on two main functions: divide and merge.

Merge sort works with recursion and we shall see our implementation in the same way.

    procedure mergesort( var a as array )
       var n as integer = length of a
       if ( n == 1 ) return a

       var l1 as array = a[0] ... a[n/2 - 1]
       var l2 as array = a[n/2] ... a[n - 1]

       l1 = mergesort( l1 )
       l2 = mergesort( l2 )

       return merge( l1, l2 )
    end procedure

    procedure merge( var a as array, var b as array )
       var c as array

       while ( a and b have elements )
          if ( a[0] > b[0] )
             add b[0] to the end of c
             remove b[0] from b
          else
             add a[0] to the end of c
             remove a[0] from a
          end if
       end while

       while ( a has elements )
          add a[0] to the end of c
          remove a[0] from a
       end while

       while ( b has elements )
          add b[0] to the end of c
          remove b[0] from b
       end while

       return c
    end procedure

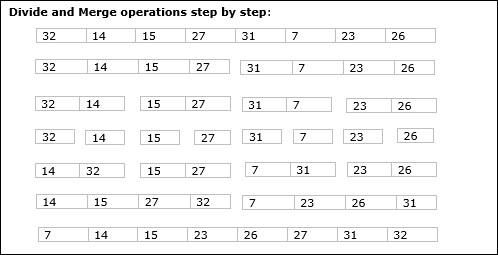

Example

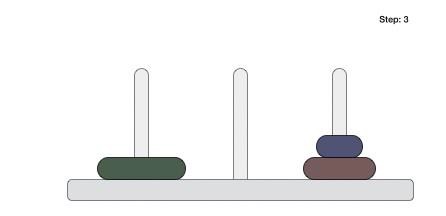

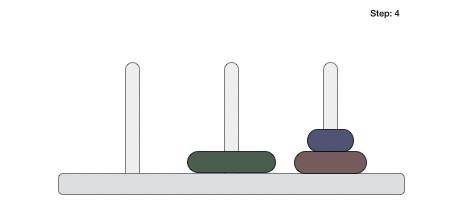

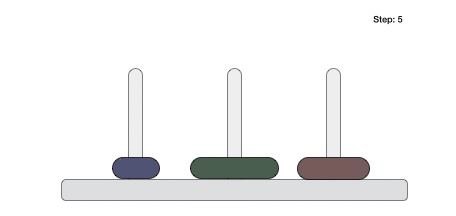

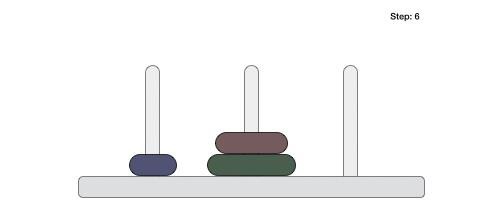

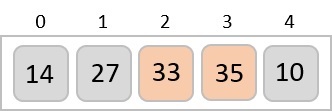

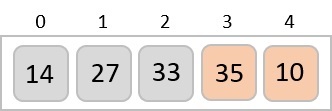

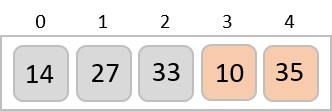

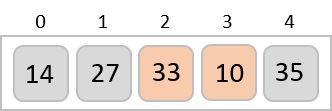

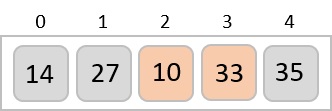

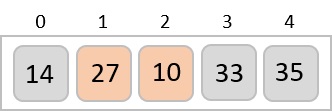

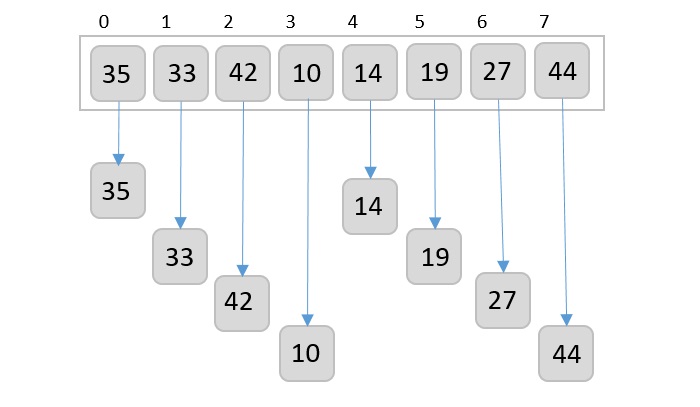

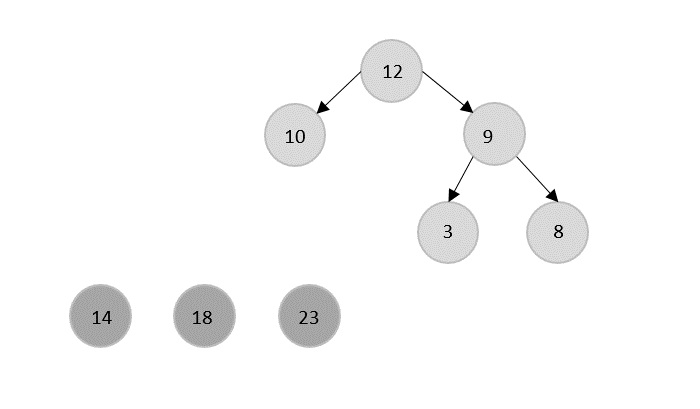

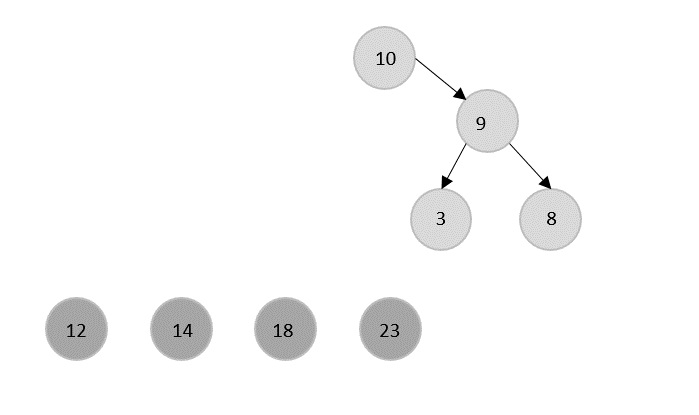

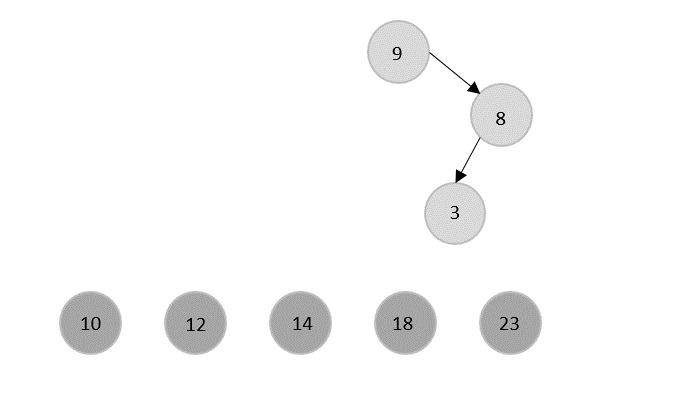

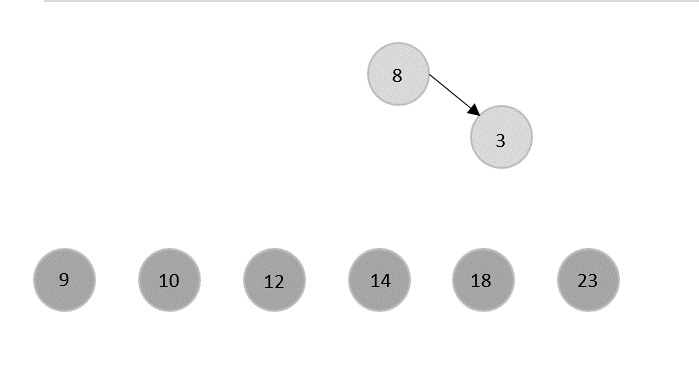

In the following example, we have shown the Merge-Sort algorithm step by step. First, in every iteration the array is divided into two sub-arrays, until each sub-array contains only one element. When these sub-arrays cannot be divided further, the merge operations are performed.

Analysis

Let us consider the running time of Merge-Sort as T(n). Hence,

$$T(n)=\begin{cases} c & \text{if } n\leq 1 \\ 2\,T\left(\frac{n}{2}\right)+d\cdot n & \text{otherwise} \end{cases} \quad \text{where } c \text{ and } d \text{ are constants}$$

Therefore, using this recurrence relation,

$$T\left ( n \right )=2^{i}\, T\left ( n/2^{i} \right )+i\cdot d\cdot n$$

$$As,\:\: i=log\: n,\: T\left ( n \right )=2^{log\, n}T\left ( n/2^{log\, n} \right )+log\, n\cdot d\cdot n$$

$$=c\cdot n+d\cdot n\cdot log\: n$$

$$Therefore,\: \: T\left ( n \right ) = O(n\: log\: n ).$$
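The O(n log n) bound can be observed by counting element comparisons in a top-down merge sort. The sketch below is illustrative: the function name `merge_sort_count`, the random inputs and the fixed seed are assumptions of this example.

```python
import random
from math import log2


def merge_sort_count(values):
    """Return (sorted list, number of element comparisons performed)."""
    if len(values) <= 1:
        return list(values), 0
    mid = len(values) // 2
    left, c_left = merge_sort_count(values[:mid])
    right, c_right = merge_sort_count(values[mid:])
    merged = []
    comparisons = c_left + c_right
    i = j = 0
    while i < len(left) and j < len(right):
        comparisons += 1                 # one comparison per merged element
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged, comparisons


random.seed(0)
for k in range(1, 11):
    n = 2 ** k
    data = [random.randint(0, 10_000) for _ in range(n)]
    result, comparisons = merge_sort_count(data)
    assert result == sorted(data)
    assert comparisons <= n * log2(n)    # never exceeds n log2 n for n = 2^k
```

Merging two runs of total length m needs at most m - 1 comparisons, which is where the d·n term per level of the recurrence comes from.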

Example

Following are the implementations of this operation in various programming languages −

#include <stdio.h>

#define max 10

int a[11] = { 10, 14, 19, 26, 27, 31, 33, 35, 42, 44, 0 };

int b[10];

void merging(int low, int mid, int high){

int l1, l2, i;

for(l1 = low, l2 = mid + 1, i = low; l1 <= mid && l2 <= high; i++) {

if(a[l1] <= a[l2])

b[i] = a[l1++];

else

b[i] = a[l2++];

}

while(l1 <= mid)

b[i++] = a[l1++];

while(l2 <= high)

b[i++] = a[l2++];

for(i = low; i <= high; i++)

a[i] = b[i];

}

void sort(int low, int high){

int mid;

if(low < high) {

mid = (low + high) / 2;

sort(low, mid);

sort(mid+1, high);

merging(low, mid, high);

} else {

return;

}

}

int main(){

int i;

printf("Array before sorting\n");

for(i = 0; i <= max; i++)

printf("%d ", a[i]);

sort(0, max);

printf("\nArray after sorting\n");

for(i = 0; i <= max; i++)

printf("%d ", a[i]);

}

Output

Array before sorting

10 14 19 26 27 31 33 35 42 44 0

Array after sorting

0 10 14 19 26 27 31 33 35 42 44

#include <iostream>

using namespace std;

#define max 10

int a[11] = { 10, 14, 19, 26, 27, 31, 33, 35, 42, 44, 0 };

int b[11]; // scratch buffer, same size as a[] because indices 0..max are written

void merging(int low, int mid, int high){

int l1, l2, i;

for(l1 = low, l2 = mid + 1, i = low; l1 <= mid && l2 <= high; i++) {

if(a[l1] <= a[l2])

b[i] = a[l1++];

else

b[i] = a[l2++];

}

while(l1 <= mid)

b[i++] = a[l1++];

while(l2 <= high)

b[i++] = a[l2++];

for(i = low; i <= high; i++)

a[i] = b[i];

}

void sort(int low, int high){

int mid;

if(low < high) {

mid = (low + high) / 2;

sort(low, mid);

sort(mid+1, high);

merging(low, mid, high);

} else {

return;

}

}

int main(){

int i;

cout << "Array before sorting\n";

for(i = 0; i <= max; i++)

cout<<a[i]<<" ";

sort(0, max);

cout<< "\nArray after sorting\n";

for(i = 0; i <= max; i++)

cout<<a[i]<<" ";

}

Output

Array before sorting

10 14 19 26 27 31 33 35 42 44 0

Array after sorting

0 10 14 19 26 27 31 33 35 42 44

public class Merge_Sort {

static int a[] = { 10, 14, 19, 26, 27, 31, 33, 35, 42, 44, 0 };

static int b[] = new int[a.length];

static void merging(int low, int mid, int high) {

int l1, l2, i;

for(l1 = low, l2 = mid + 1, i = low; l1 <= mid && l2 <= high; i++) {

if(a[l1] <= a[l2])

b[i] = a[l1++];

else

b[i] = a[l2++];

}

while(l1 <= mid)

b[i++] = a[l1++];

while(l2 <= high)

b[i++] = a[l2++];

for(i = low; i <= high; i++)

a[i] = b[i];

}

static void sort(int low, int high) {

int mid;

if(low < high) {

mid = (low + high) / 2;

sort(low, mid);

sort(mid+1, high);

merging(low, mid, high);

} else {

return;

}

}

public static void main(String args[]) {

int i;

int n = a.length;

System.out.println("Array before sorting");

for(i = 0; i < n; i++)

System.out.print(a[i] + " ");

sort(0, n-1);

System.out.println("\nArray after sorting");

for(i = 0; i < n; i++)

System.out.print(a[i]+" ");

}

}

Output

Array before sorting

10 14 19 26 27 31 33 35 42 44 0

Array after sorting

0 10 14 19 26 27 31 33 35 42 44

def merge_sort(a, n):
    if n > 1:
        m = n // 2
        # divide the list into two sub lists
        l1 = a[:m]
        n1 = len(l1)
        l2 = a[m:]
        n2 = len(l2)
        # recursively call the function for the sub lists
        merge_sort(l1, n1)
        merge_sort(l2, n2)
        # merge the two sorted sub lists back into a
        i = j = k = 0
        while i < n1 and j < n2:
            if l1[i] <= l2[j]:
                a[k] = l1[i]
                i = i + 1
            else:
                a[k] = l2[j]
                j = j + 1
            k = k + 1
        # copy any elements left over in l1
        while i < n1:
            a[k] = l1[i]
            i = i + 1
            k = k + 1
        # copy any elements left over in l2
        while j < n2:
            a[k] = l2[j]
            j = j + 1
            k = k + 1

a = [10, 14, 19, 26, 27, 31, 33, 35, 42, 44, 0]
n = len(a)
print("Array before Sorting")
print(a)
merge_sort(a, n)
print("Array after Sorting")
print(a)

Output

Array before Sorting

[10, 14, 19, 26, 27, 31, 33, 35, 42, 44, 0]

Array after Sorting

[0, 10, 14, 19, 26, 27, 31, 33, 35, 42, 44]

Strassen's Matrix Multiplication

Strassen's Matrix Multiplication is a divide and conquer approach to the matrix multiplication problem. The usual matrix multiplication method multiplies each row with each column to obtain the product matrix. The time complexity of this approach is $O(n^3)$, since it uses three nested loops. Strassen's method was introduced to reduce the time complexity from $O(n^3)$ to $O(n^{\log_2 7})$, which is approximately $O(n^{2.81})$.

Naive Method

First, we will discuss the naive method and its complexity. Here, we are calculating Z = X × Y. Using the naive method, two matrices (X and Y) can be multiplied if their orders are p × q and q × r, and the resultant matrix will be of order p × r. The following pseudocode describes the naive multiplication −

Algorithm: Matrix-Multiplication (X, Y, Z)

for i = 1 to p do

for j = 1 to r do

Z[i,j] := 0

for k = 1 to q do

Z[i,j] := Z[i,j] + X[i,k] × Y[k,j]

Complexity

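Here, we assume that integer operations take O(1) time. The algorithm has three nested for loops, running p, r and q times respectively, so it performs p × q × r scalar multiplications. For square matrices with p = q = r = n, the algorithm therefore takes $O(n^3)$ time to execute.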
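The pseudocode above translates almost line for line into Python. The sketch below is illustrative only: the function name `matrix_multiply` and the list-of-lists representation are assumptions, and indices start at 0 rather than 1.

```python
def matrix_multiply(X, Y):
    """Naive product of a p x q matrix X and a q x r matrix Y (lists of lists)."""
    p, q, r = len(X), len(Y), len(Y[0])
    if any(len(row) != q for row in X):
        raise ValueError("number of columns of X must equal number of rows of Y")
    Z = [[0] * r for _ in range(p)]
    for i in range(p):
        for j in range(r):
            for k in range(q):
                # Accumulate the dot product of row i of X and column j of Y.
                Z[i][j] += X[i][k] * Y[k][j]
    return Z


print(matrix_multiply([[12, 34], [22, 10]], [[3, 4], [2, 1]]))
```

The innermost statement runs p × q × r times, which is where the cubic cost for square matrices comes from.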

Strassen's Matrix Multiplication Algorithm

Using Strassen's matrix multiplication algorithm, the running time can be improved: each step performs seven sub-matrix multiplications instead of eight.

As described here, Strassen's matrix multiplication applies to square matrices where n is a power of 2. Both matrices are of order n × n.

Divide X, Y and Z into four (n/2)×(n/2) matrices as represented below −

$Z = \begin{bmatrix}I & J \\K & L \end{bmatrix}$, $X = \begin{bmatrix}A & B \\C & D \end{bmatrix}$ and $Y = \begin{bmatrix}E & F \\G & H \end{bmatrix}$

Using Strassen's Algorithm, compute the following −

$$M_{1} \: \colon= (A+C) \times (E+F)$$

$$M_{2} \: \colon= (B+D) \times (G+H)$$

$$M_{3} \: \colon= (A-D) \times (E+H)$$

$$M_{4} \: \colon= A \times (F-H)$$

$$M_{5} \: \colon= (C+D) \times (E)$$

$$M_{6} \: \colon= (A+B) \times (H)$$

$$M_{7} \: \colon= D \times (G-E)$$

Then,

$$I \: \colon= M_{2} + M_{3} - M_{6} - M_{7}$$

$$J \: \colon= M_{4} + M_{6}$$

$$K \: \colon= M_{5} + M_{7}$$

$$L \: \colon= M_{1} - M_{3} - M_{4} - M_{5}$$
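The seven products and four combinations above can be turned into a recursive routine. The following is a minimal sketch, assuming NumPy arrays of shape n × n with n a power of 2; the function name `strassen` and the use of `np.block` to reassemble the quadrants are choices made for this illustration.

```python
import numpy as np


def strassen(X, Y):
    """Multiply two n x n arrays (n a power of 2) using the seven products M1..M7."""
    n = X.shape[0]
    if n == 1:
        return X * Y  # 1 x 1 base case: an ordinary scalar product
    h = n // 2
    # Split both inputs into quadrants, named as in the text.
    A, B, C, D = X[:h, :h], X[:h, h:], X[h:, :h], X[h:, h:]
    E, F, G, H = Y[:h, :h], Y[:h, h:], Y[h:, :h], Y[h:, h:]

    M1 = strassen(A + C, E + F)
    M2 = strassen(B + D, G + H)
    M3 = strassen(A - D, E + H)
    M4 = strassen(A, F - H)
    M5 = strassen(C + D, E)
    M6 = strassen(A + B, H)
    M7 = strassen(D, G - E)

    I = M2 + M3 - M6 - M7
    J = M4 + M6
    K = M5 + M7
    L = M1 - M3 - M4 - M5
    return np.block([[I, J], [K, L]])


X = np.array([[12, 34], [22, 10]])
Y = np.array([[3, 4], [2, 1]])
print(strassen(X, Y))
```

Expanding the formulas confirms that I = AE + BG, J = AF + BH, K = CE + DG and L = CF + DH, which are exactly the four blocks of the ordinary product.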

Analysis

$$T(n)=\begin{cases}c & \text{if } n = 1\\7\,T\left(\frac{n}{2}\right)+d\cdot n^2 & \text{otherwise}\end{cases} \quad \text{where } c \text{ and } d \text{ are constants}$$

Using this recurrence relation, we get $T(n) = O(n^{\log_2 7})$.

Hence, the complexity of Strassen's matrix multiplication algorithm is $O(n^{\log_2 7}) \approx O(n^{2.81})$.
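To see where the exponent comes from, it can help to count only the scalar multiplications. Each level of recursion replaces one n × n product with seven (n/2) × (n/2) products, so the count is 7 raised to the power log₂ n, which equals n raised to the power log₂ 7. The short sketch below (the function name `strassen_mults` is hypothetical) compares that count with the n³ multiplications of the naive method.

```python
def strassen_mults(n):
    """Scalar multiplications in Strassen's recursion on n x n inputs (n a power of 2)."""
    return 1 if n == 1 else 7 * strassen_mults(n // 2)


for n in (2, 4, 8, 16, 1024):
    print(n, strassen_mults(n), n ** 3)
```

The count ignores the extra additions and subtractions, which contribute the d·n² term of the recurrence.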

Example

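Let us look at the implementation of Strassen's Matrix Multiplication in various programming languages: C, C++, Java, Python. Each program multiplies two 2 × 2 integer matrices, so it applies a single level of the method without recursion. The seven products m1 to m7 in the code follow the commonly used arrangement of Strassen's formulas, which is numbered differently from M1 to M7 above but yields the same product matrix.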

#include<stdio.h>

int main(){

int z[2][2];

int i, j;

int m1, m2, m3, m4 , m5, m6, m7;

int x[2][2] = {

{12, 34},

{22, 10}

};

int y[2][2] = {

{3, 4},

{2, 1}

};

printf("\nThe first matrix is\n");

for(i = 0; i < 2; i++) {

printf("\n");

for(j = 0; j < 2; j++)

printf("%d\t", x[i][j]);

}

printf("\nThe second matrix is\n");

for(i = 0; i < 2; i++) {

printf("\n");

for(j = 0; j < 2; j++)

printf("%d\t", y[i][j]);

}

m1= (x[0][0] + x[1][1]) * (y[0][0] + y[1][1]);

m2= (x[1][0] + x[1][1]) * y[0][0];

m3= x[0][0] * (y[0][1] - y[1][1]);

m4= x[1][1] * (y[1][0] - y[0][0]);

m5= (x[0][0] + x[0][1]) * y[1][1];

m6= (x[1][0] - x[0][0]) * (y[0][0]+y[0][1]);

m7= (x[0][1] - x[1][1]) * (y[1][0]+y[1][1]);

z[0][0] = m1 + m4- m5 + m7;

z[0][1] = m3 + m5;

z[1][0] = m2 + m4;

z[1][1] = m1 - m2 + m3 + m6;

printf("\nProduct achieved using Strassen's algorithm \n");

for(i = 0; i < 2 ; i++) {

printf("\n");

for(j = 0; j < 2; j++)

printf("%d\t", z[i][j]);

}

return 0;

}

Output

The first matrix is

12 34

22 10

The second matrix is

3 4

2 1

Product achieved using Strassen's algorithm

104 82

86 98

#include<iostream>

using namespace std;

int main() {

int z[2][2];

int i, j;

int m1, m2, m3, m4 , m5, m6, m7;

int x[2][2] = {

{12, 34},

{22, 10}

};

int y[2][2] = {

{3, 4},

{2, 1}

};

cout<<"\nThe first matrix is\n";

for(i = 0; i < 2; i++) {

cout<<endl;

for(j = 0; j < 2; j++)

cout<<x[i][j]<<" ";

}

cout<<"\nThe second matrix is\n";

for(i = 0;i < 2; i++){

cout<<endl;

for(j = 0;j < 2; j++)

cout<<y[i][j]<<" ";

}

m1 = (x[0][0] + x[1][1]) * (y[0][0] + y[1][1]);

m2 = (x[1][0] + x[1][1]) * y[0][0];

m3 = x[0][0] * (y[0][1] - y[1][1]);

m4 = x[1][1] * (y[1][0] - y[0][0]);

m5 = (x[0][0] + x[0][1]) * y[1][1];

m6 = (x[1][0] - x[0][0]) * (y[0][0]+y[0][1]);

m7 = (x[0][1] - x[1][1]) * (y[1][0]+y[1][1]);

z[0][0] = m1 + m4- m5 + m7;

z[0][1] = m3 + m5;

z[1][0] = m2 + m4;

z[1][1] = m1 - m2 + m3 + m6;

cout<<"\nProduct achieved using Strassen's algorithm \n";

for(i = 0; i < 2 ; i++) {

cout<<endl;

for(j = 0; j < 2; j++)

cout<<z[i][j]<<" ";

}

return 0;

}

Output

The first matrix is

12 34

22 10

The second matrix is

3 4

2 1

Product achieved using Strassen's algorithm

104 82

86 98

public class Strassens {

public static void main(String[] args) {

int[][] x = {{12, 34}, {22, 10}};

int[][] y = {{3, 4}, {2, 1}};

int z[][] = new int[2][2];

int m1, m2, m3, m4 , m5, m6, m7;

System.out.println("The first matrix is: ");

for(int i = 0; i<2; i++) {

System.out.println();//new line

for(int j = 0; j<2; j++) {

System.out.print(x[i][j] + "\t");

}

}

System.out.println("\nThe second matrix is: ");

for(int i = 0; i<2; i++) {

System.out.println();//new line

for(int j = 0; j<2; j++) {

System.out.print(y[i][j] + "\t");

}

}

m1 = (x[0][0] + x[1][1]) * (y[0][0] + y[1][1]);

m2 = (x[1][0] + x[1][1]) * y[0][0];

m3 = x[0][0] * (y[0][1] - y[1][1]);

m4 = x[1][1] * (y[1][0] - y[0][0]);

m5 = (x[0][0] + x[0][1]) * y[1][1];

m6 = (x[1][0] - x[0][0]) * (y[0][0]+y[0][1]);

m7 = (x[0][1] - x[1][1]) * (y[1][0]+y[1][1]);

z[0][0] = m1 + m4- m5 + m7;

z[0][1] = m3 + m5;

z[1][0] = m2 + m4;

z[1][1] = m1 - m2 + m3 + m6;

System.out.println("\nProduct achieved using Strassen's algorithm: ");

for(int i = 0; i<2; i++) {

System.out.println();//new line

for(int j = 0; j<2; j++) {

System.out.print(z[i][j] + "\t");

}

}

}

}

Output

The first matrix is:

12 34

22 10

The second matrix is:

3 4

2 1

Product achieved using Strassen's algorithm:

104 82

86 98

import numpy as np

x = np.array([[12, 34], [22, 10]])
y = np.array([[3, 4], [2, 1]])
z = np.zeros((2, 2))
m1, m2, m3, m4, m5, m6, m7 = 0, 0, 0, 0, 0, 0, 0

print("The first matrix is: ")
for i in range(2):
    print()
    for j in range(2):
        print(x[i][j], end="\t")

print("\nThe second matrix is: ")
for i in range(2):
    print()
    for j in range(2):
        print(y[i][j], end="\t")

# the seven products
m1 = (x[0][0] + x[1][1]) * (y[0][0] + y[1][1])
m2 = (x[1][0] + x[1][1]) * y[0][0]
m3 = x[0][0] * (y[0][1] - y[1][1])
m4 = x[1][1] * (y[1][0] - y[0][0])
m5 = (x[0][0] + x[0][1]) * y[1][1]
m6 = (x[1][0] - x[0][0]) * (y[0][0] + y[0][1])
m7 = (x[0][1] - x[1][1]) * (y[1][0] + y[1][1])

# combine the products into the result matrix
z[0][0] = m1 + m4 - m5 + m7
z[0][1] = m3 + m5
z[1][0] = m2 + m4
z[1][1] = m1 - m2 + m3 + m6

print("\nProduct achieved using Strassen's algorithm: ")
for i in range(2):
    print()
    for j in range(2):
        print(z[i][j], end="\t")

Output

The first matrix is:

12 34

22 10

The second matrix is:

3 4

2 1

Product achieved using Strassen's algorithm:

104.0 82.0

86.0 98.0

Karatsuba Algorithm

The Karatsuba algorithm performs fast multiplication of two n-digit numbers, i.e. it takes less time to compute the product than normal multiplication does.

The usual multiplication approach takes $n^2$ single-digit multiplications to obtain the final product, since every digit of one number has to be multiplied with every digit of the other, and the sub-products are then added together. This approach is known as Naive Multiplication.

To understand this multiplication better, let us consider two 4-digit integers, 1456 and 6533, and find the product using the naive approach.

So, 1456 × 6533 = ?

In this method of naive multiplication, since both numbers have 4 digits, 16 single-digit × single-digit multiplications are performed. The time complexity of this approach is $O(n^2)$; for n = 4 it takes $4^2 = 16$ steps to calculate the final product.
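The count of 16 digit products can be reproduced with a small sketch of the schoolbook method. The function name `schoolbook_multiply` and the returned counter are assumptions made for this illustration; Python's built-in integers are used only to hold single digits and the running sum.

```python
def schoolbook_multiply(x, y):
    """Multiply non-negative integers digit by digit and count the digit products."""
    xs = [int(ch) for ch in reversed(str(x))]  # least significant digit first
    ys = [int(ch) for ch in reversed(str(y))]
    total, digit_products = 0, 0
    for i, dx in enumerate(xs):
        for j, dy in enumerate(ys):
            # Each pair of digits contributes one product, shifted by its place value.
            total += dx * dy * 10 ** (i + j)
            digit_products += 1
    return total, digit_products


print(schoolbook_multiply(1456, 6533))  # (9512048, 16)
```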

As the value of n increases, the number of steps grows quadratically. Hence, the Karatsuba algorithm is adopted to perform faster multiplications.

Karatsuba Algorithm

The main idea of the Karatsuba Algorithm is to reduce the four half-size multiplications of the plain divide and conquer approach to three. Additions and subtractions are used for the remaining computations.

For this algorithm, two n-digit numbers are taken as the input and the product of the two numbers is obtained as the output.

Step 1 − In this algorithm we assume that n is a power of 2.

Step 2 − If n = 1 then we use multiplication tables to find P = XY.

Step 3 − If n > 1, the n-digit numbers are split in half and represented using the formulae −

$$X = 10^{n/2}X_1 + X_2$$

$$Y = 10^{n/2}Y_1 + Y_2$$

where $X_1$, $X_2$, $Y_1$, $Y_2$ each have n/2 digits.

Step 4 − Take a variable $Z = W - (U + V)$,

where,

$$U = X_1Y_1, \quad V = X_2Y_2$$

$$W = (X_1 + X_2)(Y_1 + Y_2), \quad \text{so that } Z = X_1Y_2 + X_2Y_1.$$

Step 5 − Then, the product P is obtained after substituting the values in the formula −

$$P = 10^{n}\,U + 10^{n/2}\,Z + V$$

$$P = 10^{n}(X_1Y_1) + 10^{n/2}(X_1Y_2 + X_2Y_1) + X_2Y_2$$

Step 6 − Recursively call the algorithm by passing the sub problems $(X_1, Y_1)$, $(X_2, Y_2)$ and $(X_1 + X_2, Y_1 + Y_2)$ separately. Store the returned values in variables U, V and W respectively.
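The steps above can be sketched as a short recursive function. This is an illustrative version under a few assumptions that are not part of the steps themselves: the name `karatsuba` is hypothetical, the base case is relaxed to "either operand has a single digit" so that n need not be a power of 2, and the split point is `n // 2` digits from the right.

```python
def karatsuba(x, y):
    """Multiply non-negative integers x and y using three recursive products."""
    if x < 10 or y < 10:
        return x * y  # single-digit operand: the multiplication-table case
    n = max(len(str(x)), len(str(y)))
    half = n // 2
    x1, x2 = divmod(x, 10 ** half)  # x = 10^half * x1 + x2
    y1, y2 = divmod(y, 10 ** half)  # y = 10^half * y1 + y2
    u = karatsuba(x1, y1)
    v = karatsuba(x2, y2)
    w = karatsuba(x1 + x2, y1 + y2)
    z = w - (u + v)  # equals x1*y2 + x2*y1 without a fourth multiplication
    # 10^(2*half) coincides with 10^n whenever n is even.
    return 10 ** (2 * half) * u + 10 ** half * z + v


print(karatsuba(1456, 6533))  # 9512048
```

Only three recursive calls are made per level, which is what brings the cost below the quadratic cost of the naive method.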

Example

Let us solve the same problem given above using the Karatsuba method, 1456 × 6533 −

The Karatsuba method takes the divide and conquer approach by dividing the problem into multiple sub-problems and applies recursion to make the multiplication simpler.

Step 1

Assuming that n is a power of 2, rewrite the n-digit numbers in the form −

$$X = 10^{n/2}X_1 + X_2$$

$$Y = 10^{n/2}Y_1 + Y_2$$

That gives us,

$$1456 = 10^{2}(14) + 56$$

$$6533 = 10^{2}(65) + 33$$

First, let us expand the product of these two expressions. We get,

$$(1400 \times 6500) + (56 \times 33) + (1400 \times 33) + (6500 \times 56) = 10^{4}(14 \times 65) + 10^{2}\left[(14 \times 33) + (56 \times 65)\right] + (33 \times 56)$$

The above expression is a simplified version of the given multiplication problem, since multiplying two double-digit numbers is easier than multiplying two four-digit numbers.

However, that holds true only for a person working by hand. For a computer, the above expression still has the same time complexity as the naive multiplication. It contains 4 double-digit × double-digit multiplications, each of which needs 4 single-digit multiplications, so the cost would be −

14 × 65 → O(4)

14 × 33 → O(4)

65 × 56 → O(4)

56 × 33 → O(4)

Total = O(16)

Thus, the calculation needs to be simplified further.

Step 2

X = 1456, Y = 6533

Since n is not equal to 1, the algorithm jumps to step 3.

$$X = 10^{n/2}X_1 + X_2$$

$$Y = 10^{n/2}Y_1 + Y_2$$

That gives us,

$$1456 = 10^{2}(14) + 56$$

$$6533 = 10^{2}(65) + 33$$

Calculate $Z = W - (U + V)$ −

$$Z = (X_1 + X_2)(Y_1 + Y_2) - (X_1Y_1 + X_2Y_2)$$

$$Z = X_1Y_2 + X_2Y_1$$

$$Z = (14 \times 33) + (65 \times 56)$$

The final product,

$$P = 10^{n} \cdot U + 10^{n/2} \cdot Z + V$$

$$= 10^{n}(X_1Y_1) + 10^{n/2}(X_1Y_2 + X_2Y_1) + X_2Y_2$$

$$= 10^{4}(14 \times 65) + 10^{2}\left[(14 \times 33) + (65 \times 56)\right] + (56 \times 33)$$
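The identity used here can be checked numerically for the example. In the sketch below, Python's built-in multiplication stands in for the three recursive calls, which is an assumption made purely to keep the check short; the variable names follow the symbols in the text.

```python
x1, x2 = divmod(1456, 10 ** 2)  # 14 and 56
y1, y2 = divmod(6533, 10 ** 2)  # 65 and 33

# Built-in products stand in for the recursive sub-problems.
u = x1 * y1                  # U = X1 * Y1
v = x2 * y2                  # V = X2 * Y2
w = (x1 + x2) * (y1 + y2)    # W = (X1 + X2) * (Y1 + Y2)
z = w - (u + v)              # Z = W - (U + V)

p = 10 ** 4 * u + 10 ** 2 * z + v
print(u, v, w, z, p)  # 910 1848 6860 4102 9512048
```

The value of `z` matches 14 × 33 + 65 × 56 = 4102, so the middle term is obtained from three multiplications instead of four.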

The sub-problems can be further divided into smaller problems; therefore, the algorithm is again called recursively.

Step 3

X1 and Y1 are passed as parameters X and Y.

So now, X = 14, Y = 65

$$X = 10^{n/2}X_1 + X_2$$

$$Y = 10^{n/2}Y_1 + Y_2$$

$$14 = 10(1) + 4$$

$$65 = 10(6) + 5$$

Calculate $Z = W - (U + V)$ −

$$Z = (X_1 + X_2)(Y_1 + Y_2) - (X_1Y_1 + X_2Y_2)$$

$$Z = X_1Y_2 + X_2Y_1$$

$$Z = (1 \times 5) + (6 \times 4) = 29$$

$$P = 10^{n}(X_1Y_1) + 10^{n/2}(X_1Y_2 + X_2Y_1) + X_2Y_2$$

$$= 10^{2}(1 \times 6) + 10^{1}(29) + (4 \times 5)$$

$$= 910$$

Step 4

X2 and Y2 are passed as parameters X and Y.

So now, X = 56, Y = 33

$$X = 10^{n/2}X_1 + X_2$$

$$Y = 10^{n/2}Y_1 + Y_2$$

$$56 = 10(5) + 6$$

$$33 = 10(3) + 3$$

Calculate $Z = W - (U + V)$ −

$$Z = (X_1 + X_2)(Y_1 + Y_2) - (X_1Y_1 + X_2Y_2)$$

$$Z = X_1Y_2 + X_2Y_1$$

$$Z = (5 \times 3) + (6 \times 3) = 33$$

$$P = 10^{n}(X_1Y_1) + 10^{n/2}(X_1Y_2 + X_2Y_1) + X_2Y_2$$

$$= 10^{2}(5 \times 3) + 10^{1}(33) + (6 \times 3)$$

$$= 1848$$

Step 5

X1 + X2 and Y1 + Y2 are passed as parameters X and Y.

So now, X = 70, Y = 98

$$X = 10^{n/2}X_1 + X_2$$

$$Y = 10^{n/2}Y_1 + Y_2$$

$$70 = 10(7) + 0$$

$$98 = 10(9) + 8$$

Calculate $Z = W - (U + V)$ −

$$Z = (X_1 + X_2)(Y_1 + Y_2) - (X_1Y_1 + X_2Y_2)$$

$$Z = X_1Y_2 + X_2Y_1$$

$$Z = (7 \times 8) + (0 \times 9) = 56$$

$$P = 10^{n}(X_1Y_1) + 10^{n/2}(X_1Y_2 + X_2Y_1) + X_2Y_2$$

$$= 10^{2}(7 \times 9) + 10^{1}(56) + (0 \times 8)$$

$$= 6860$$

Step 6