What is the difference between Concurrency and Parallel Execution in Computer Architecture?

Concurrent Execution

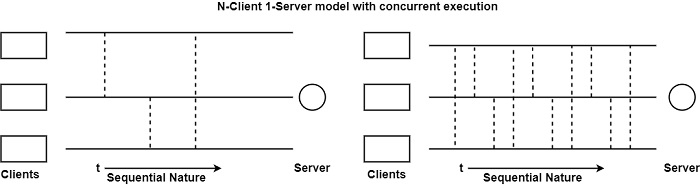

Concurrent execution is the temporal behavior of the N-client 1-server model, in which only one client is served at any given moment. This model has a dual nature: it is sequential on a small time scale, but appears simultaneous on a larger time scale.

In this model, the key problem is how the competing clients (processes or threads) should be scheduled for service (execution) by the single server (processor). Scheduling policies can be oriented toward efficient service in terms of highest throughput (least intervention), toward short average response time, and so on.

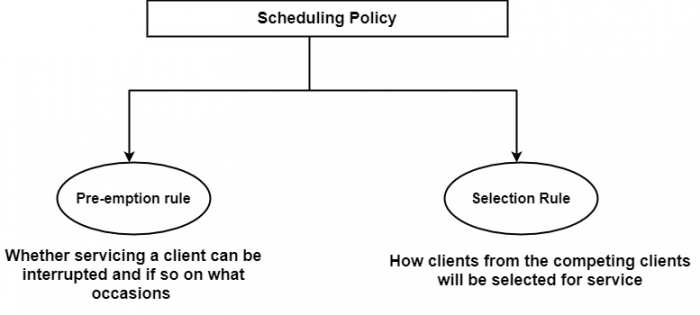

The scheduling policy can be viewed as covering two aspects. The first deals with whether servicing a client can be interrupted and, if so, on what occasions (pre-emption rule). The second states how one of the competing clients is selected for service (selection rule), as shown in the figure.

If pre-emption is not allowed, a client is serviced for as long as it needs. This can result in long waiting times or in the blocking of important service requests from other clients. The pre-emption rule can specify time-sharing, which restricts continuous service for each client to the duration of a time slice, or it can be priority-based, interrupting the servicing of a client whenever a higher-priority client requests service.

The selection rule is based on specific parameters, such as priority, time of arrival, and so on. This rule defines an algorithm that computes a numeric value, which we will call the rank, from the given parameters.
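The effect of the pre-emption rule can be illustrated with a small simulation. The sketch below is only an illustration under simple assumptions: all clients arrive at time 0, switching between clients costs nothing, and the client names, service times, and the function names `run_to_completion` and `round_robin` are made up for this example.

```python
from collections import deque

def run_to_completion(clients):
    """No pre-emption: each client is served for as long as it needs."""
    clock, finish = 0, {}
    for name, service_time in clients:
        clock += service_time
        finish[name] = clock
    return finish

def round_robin(clients, time_slice):
    """Time-sharing: a client is served for at most one time slice, then requeued."""
    queue = deque(clients)
    clock, finish = 0, {}
    while queue:
        name, remaining = queue.popleft()
        served = min(time_slice, remaining)
        clock += served
        if remaining > served:
            queue.append((name, remaining - served))  # pre-empted, back of the queue
        else:
            finish[name] = clock
    return finish

# Hypothetical workload: one long client ahead of two short ones.
clients = [("A", 8), ("B", 2), ("C", 1)]
print(run_to_completion(clients))          # {'A': 8, 'B': 10, 'C': 11}
print(round_robin(clients, time_slice=2))  # {'B': 4, 'C': 5, 'A': 11}
```

Without pre-emption, the short clients B and C wait behind A and finish at times 10 and 11. With a time slice of 2 they finish at times 4 and 5, while A still finishes at time 11.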
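A selection rule of this kind might be sketched as follows. The rank formula here is an assumption chosen for illustration, not a rule taken from any particular scheduler: a higher priority always wins, and an earlier arrival breaks ties. The `Client` class, the `PRIORITY_WEIGHT` constant, and the sample clients are hypothetical.

```python
from dataclasses import dataclass

# Assumed to be larger than any arrival time, so priority always dominates.
PRIORITY_WEIGHT = 1000

@dataclass
class Client:
    name: str
    priority: int      # larger means more urgent (assumed convention)
    arrival_time: int  # smaller means the client arrived earlier

def rank(client):
    """Combine the parameters into a single numeric rank."""
    return client.priority * PRIORITY_WEIGHT - client.arrival_time

def select_next(waiting):
    """Select the waiting client with the highest rank."""
    return max(waiting, key=rank)

waiting = [
    Client("editor", priority=1, arrival_time=0),
    Client("backup", priority=0, arrival_time=1),
    Client("alarm", priority=1, arrival_time=3),
]
print(select_next(waiting).name)  # editor
```

Here `editor` and `alarm` share the highest priority, and `editor` is selected because it arrived first.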

Parallel Execution

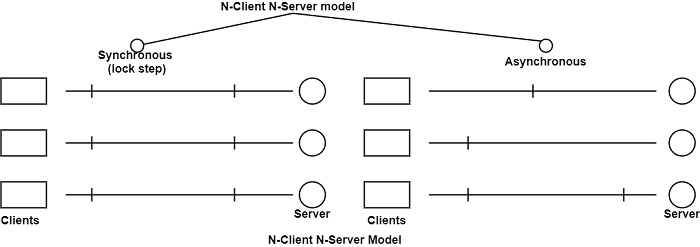

Parallel execution is associated with the N-client N-server model. Having more than one server allows more than one client (process or thread) to be serviced at the same time; this is known as parallel execution.

The aim of parallel execution is to speed up processing and increase throughput, that is, the amount of processing that can be accomplished during a given interval of time. The amount of hardware increases with parallel execution and, with it, the cost of the system.

Parallel execution is achieved by distributing the data among several functional units. For example, the arithmetic, logic, and shift operations can be separated into three units, with the operands diverted to each unit under the supervision of a control unit.
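The throughput gain can be estimated with a small model. The sketch below assumes identical servers, clients that are all ready at time 0, and a simple rule in which each client goes to whichever server becomes free first. The function name `completion_time` and the service times are hypothetical.

```python
import heapq

def completion_time(service_times, servers):
    """Time at which the last client finishes on `servers` identical servers."""
    free_at = [0] * servers  # time at which each server becomes free
    heapq.heapify(free_at)
    last_finish = 0
    for service_time in service_times:
        start = heapq.heappop(free_at)  # earliest available server
        end = start + service_time
        heapq.heappush(free_at, end)
        last_finish = max(last_finish, end)
    return last_finish

service_times = [8, 2, 1]  # hypothetical service times for three clients
print(completion_time(service_times, servers=1))  # 11
print(completion_time(service_times, servers=3))  # 8
```

With one server, the three clients complete in 11 time units. With three servers they complete in 8, so throughput rises from 3/11 to 3/8 clients per time unit, at the price of three times the hardware.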
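The split into functional units can be mimicked in software. The sketch below models only the structure: three hypothetical unit functions, and a `control_unit` function that routes each operation and its operands to the matching unit. A thread pool stands in for the separate hardware units; in CPython, threads do not give true hardware parallelism for work like this, so the example shows the dispatch pattern rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor

def arithmetic_unit(a, b):
    return a + b

def logic_unit(a, b):
    return a & b

def shift_unit(a, b):
    return a << b

# Hypothetical operation codes mapped to the unit that handles them.
UNITS = {"add": arithmetic_unit, "and": logic_unit, "shl": shift_unit}

def control_unit(instructions):
    """Divert the operands of each (op, a, b) instruction to its functional unit."""
    with ThreadPoolExecutor(max_workers=len(UNITS)) as pool:
        futures = [pool.submit(UNITS[op], a, b) for op, a, b in instructions]
        return [future.result() for future in futures]  # results in program order

results = control_unit([("add", 6, 3), ("and", 6, 3), ("shl", 6, 3)])
print(results)  # [9, 2, 48]
```

The three operations are independent of each other, which is what allows them to be handed to different units at the same time.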