
TfLearn and its installation in TensorFlow

TfLearn is an open-source deep-learning library built on top of TensorFlow. It provides a high-level API that makes it easy to create and train different neural network models.

It provides ready-made building blocks for common architectures such as Convolutional Neural Networks (CNNs) and Deep Neural Networks (DNNs), a variety of activation functions such as ReLU (Rectified Linear Unit) and softmax, and loss functions such as categorical cross-entropy.

TfLearn is well suited to beginners because it does not require detailed knowledge of TensorFlow's lower-level neural network APIs. You can describe a network simply by declaring its input, hidden and output layers, instead of starting with a full published architecture such as AlexNet or LeNet.

How to use TfLearn?

First, check whether Python is installed on your system by printing its version:

python --version

If Python is not installed on your system, download Python 3.8 or above from the 'python.org' website and install it.

Next, make sure the TensorFlow module is installed by running:

pip install tensorflow

If TensorFlow is not present, this command installs it; if it is already installed, as in the screenshot below, pip simply reports that the requirement is already satisfied.

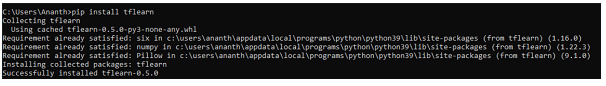

After this, install the TfLearn module into your system using the command:

pip install tflearn
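To confirm both installations in one go, a small Python script can query the installed package metadata. This is a minimal sketch that uses only the standard library (`importlib.metadata`, available from Python 3.8); it assumes the distributions are named `tensorflow` and `tflearn`, as in the pip commands above, and the helper names `installed_version` and `check_prerequisites` are hypothetical.

```python
import sys
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if pip has not installed it."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def check_prerequisites(packages=("tensorflow", "tflearn")):
    """Collect the running Python version and the version of each listed package."""
    report = {"python": "%d.%d.%d" % sys.version_info[:3]}
    for name in packages:
        report[name] = installed_version(name)
    return report


if __name__ == "__main__":
    for name, version in check_prerequisites().items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```

Running it prints one line per entry; any package that is missing is reported as `NOT INSTALLED`.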

Now that all the prerequisites are installed, let us look at some examples that use TfLearn.

Example 1

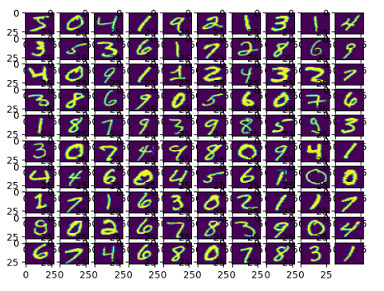

In the following program, we load the MNIST dataset, which we will use to train and test our model. TfLearn includes a loader for MNIST in its tflearn.datasets package, so we can use it directly.

MNIST consists of 28x28 grayscale images of handwritten digits, each labelled with its class from 0 to 9. In the later examples, we one-hot encode these labels so that each one matches the 10-unit output of the network.

Algorithm

Import all the libraries.

Load the MNIST dataset and assign the values to x_train, y_train, x_test and y_test, where x denotes the images and y denotes the labels.

The loader already returns each image as a flattened array of 784 float32 pixel values scaled to the range [0, 1], so no further normalization is needed.

Define a 10x10 grid of subplots and, in a nested for loop, draw one training image in each subplot, letting each image stretch to fill its cell.

Display the figure.

import tflearn
from tflearn.datasets import mnist
import matplotlib.pyplot as plt

# TfLearn's loader returns four arrays (not two tuples); the images are
# flattened to 784 float32 values already scaled to [0, 1]
x_train, y_train, x_test, y_test = mnist.load_data()

# To show the dataset: a 10x10 grid of the first 100 training images
fig, ax = plt.subplots(10, 10)
k = 0  # index of the next training image to draw
for i in range(10):
    for j in range(10):
        ax[i][j].imshow(x_train[k].reshape(28, 28), aspect='auto')
        k += 1
plt.show()

We load the dataset, which is already split into training and testing data. Unlike loaders that return raw 0-255 pixel values, the TfLearn loader already converts the images to float32 values between 0 and 1, so dividing them by 255.0 again would squeeze every pixel into the range [0, 0.004].

We create a 10x10 grid of subplots and initialize a counter k to keep track of the image index. We iterate over the grid with two nested loops. In every grid cell, we display the next image from the training set, reshaping it from a 1D array of 784 values to a 2D array of 28x28 pixels.
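The sketch below illustrates, on made-up data, the preprocessing the TfLearn loader is assumed to apply (flattening each 28x28 image to 784 values and scaling 0-255 pixels to [0, 1]), and how `reshape(28, 28)` undoes the flattening for display. It uses NumPy only; `preprocess` is a hypothetical helper, not part of TfLearn.

```python
import numpy as np


def preprocess(images_uint8):
    """Flatten (N, 28, 28) uint8 images to (N, 784) float32 values in [0, 1].

    Assumption: this mirrors the scaling the TfLearn MNIST loader applies internally.
    """
    flat = images_uint8.reshape(images_uint8.shape[0], -1)
    return flat.astype(np.float32) / 255.0


# Two fake 28x28 "images" (hypothetical data, not MNIST)
fake = np.zeros((2, 28, 28), dtype=np.uint8)
fake[0, 14, 14] = 255  # one fully bright pixel in the first image
x = preprocess(fake)
print(x.shape)  # (2, 784)

# Undo the flattening for display, as x_train[k].reshape(28, 28) does
restored = x[0].reshape(28, 28)
print(restored[14, 14])  # 1.0

# The running counter k in the 10x10 grid loop always equals i * 10 + j
ks = [i * 10 + j for i in range(10) for j in range(10)]
print(ks[:3], ks[-1])  # [0, 1, 2] 99
```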

Output

Example 2

In the previous example, we loaded the MNIST labels without one-hot encoding. In the following example, we load them with one-hot encoding, print the shapes of the training and test images and labels, and print the first five training labels.

Algorithm

Import the MNIST loader from tflearn.

Load the MNIST dataset with one-hot encoded labels.

Print the shapes of the training and test images and labels.

Print the first five rows of the training labels.

from tflearn.datasets import mnist

# one_hot=True returns each label as a 10-element binary vector
x_train, y_train, x_test, y_test = mnist.load_data(one_hot=True)
print("Training Data shape: ", x_train.shape)
print("Training Labels shape: ", y_train.shape)
print("Testing Data shape: ", x_test.shape)
print("Testing Labels shape: ", y_test.shape)
print("First 5 training labels: ")
print(y_train[:5])

Here, we load the MNIST dataset of handwritten digit images. The loader returns four arrays, which we unpack into the training images and labels and the test images and labels.

The one_hot=True argument makes the loader return each label in one-hot encoded format: a binary vector of length 10 in which the element at the index of the target digit is 1 (hot) and all the others are 0 (cold). For example, the digit 3 becomes [0, 0, 0, 1, 0, 0, 0, 0, 0, 0].
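One-hot encoding and its inverse take one line each in NumPy. This is a minimal sketch with hypothetical helper names; it is assumed to produce the same vectors that `one_hot=True` returns, but it is not TfLearn's own code.

```python
import numpy as np


def one_hot(labels, num_classes=10):
    """Return a (len(labels), num_classes) float32 array with a 1 at each label's index."""
    labels = np.asarray(labels, dtype=np.int64)
    return np.eye(num_classes, dtype=np.float32)[labels]


def decode(one_hot_rows):
    """Recover the integer class of each row (the index of its hot element)."""
    return np.argmax(one_hot_rows, axis=1)


encoded = one_hot([3, 0, 9])
print(encoded[0])       # [0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]
print(decode(encoded))  # [3 0 9]
```

Selecting rows of the identity matrix is a common idiom: row k of `np.eye(10)` is exactly the one-hot vector for class k.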

We then print the shapes of the training and test images and labels, followed by the first five one-hot encoded training labels.

Output

Building Neural Network Model

Now that we know what our data looks like, let's build our neural network model. Our network consists of three kinds of layers:

Input Layer

Hidden Layer

Output Layer

Example 3

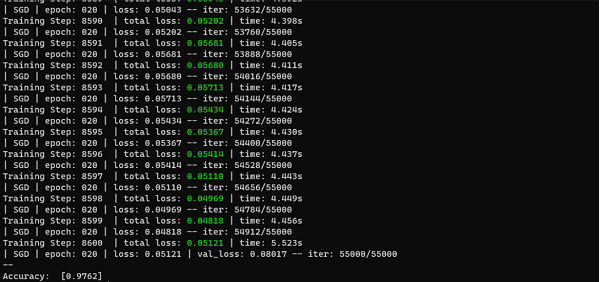

To build these layers in our neural network, we use the TfLearn module.

We define a hidden layer of 256 units (neurons) with the ReLU activation function and an output layer of 10 units, one per digit class, with the softmax activation function. Softmax is the usual choice for the output layer of a multi-class classifier because it turns the 10 outputs into probabilities that sum to 1.
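To see what these layers compute, here is a NumPy sketch of a single forward pass through a 784-256-10 network. The weights are random placeholders (TfLearn learns the real ones during training), and the function names are illustrative, not TfLearn's API.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical randomly initialised weights; TfLearn learns these during training
W1 = rng.normal(0.0, 0.05, size=(784, 256)).astype(np.float32)
b1 = np.zeros(256, dtype=np.float32)
W2 = rng.normal(0.0, 0.05, size=(256, 10)).astype(np.float32)
b2 = np.zeros(10, dtype=np.float32)


def relu(z):
    return np.maximum(z, 0.0)


def softmax(z):
    # Subtracting the row maximum avoids overflow and does not change the result
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def forward(x):
    """x: (batch, 784) -> (batch, 10) class probabilities."""
    hidden = relu(x @ W1 + b1)         # like fully_connected(i_layer, 256, activation='relu')
    return softmax(hidden @ W2 + b2)   # like fully_connected(h_layer, 10, activation='softmax')


batch = rng.random((5, 784), dtype=np.float32)  # five fake images with pixels in [0, 1)
probs = forward(batch)
print(probs.shape)  # (5, 10)
```

Each row of `probs` sums to 1, so the predicted digit is simply the index of the largest entry.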

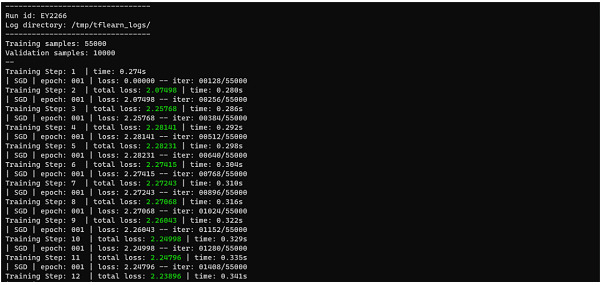

We then wrap the network in a regression layer that uses the SGD (Stochastic Gradient Descent) optimizer, the categorical cross-entropy loss function and a learning rate of 0.1. Finally, we build the model, train it on the training data while validating it on the test data, and print its accuracy.
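The SGD update and the categorical cross-entropy loss can be sketched in NumPy as follows. To stay short, this sketch trains a single softmax layer on 20 random features instead of the full 784-256-10 network, and all data is made up; it only shows that repeated steps of `learning_rate` times the gradient lower the loss. For softmax outputs, the gradient of the mean cross-entropy with respect to the logits is (p - y) / N, which the code uses directly.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def categorical_crossentropy(probs, y_one_hot, eps=1e-7):
    """Mean over the batch of -sum(y * log(p))."""
    probs = np.clip(probs, eps, 1.0)
    return float(-np.mean(np.sum(y_one_hot * np.log(probs), axis=1)))


def sgd_step(W, b, x, y_one_hot, learning_rate=0.1):
    """One plain SGD update for a single softmax layer (a simplification of the real model)."""
    probs = softmax(x @ W + b)
    grad_logits = (probs - y_one_hot) / x.shape[0]  # d(mean CE)/d(logits) for softmax + CE
    W_new = W - learning_rate * (x.T @ grad_logits)
    b_new = b - learning_rate * grad_logits.sum(axis=0)
    return W_new, b_new


rng = np.random.default_rng(1)
x = rng.random((32, 20))                       # fake batch: 32 samples, 20 features
y = np.eye(10)[rng.integers(0, 10, size=32)]   # fake one-hot labels
W, b = np.zeros((20, 10)), np.zeros(10)

before = categorical_crossentropy(softmax(x @ W + b), y)
for _ in range(5):
    W, b = sgd_step(W, b, x, y)
after = categorical_crossentropy(softmax(x @ W + b), y)
print(round(before, 4), after < before)  # 2.3026 True
```

The starting loss is ln(10), about 2.3026, because all-zero weights give every class probability 0.1.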

Algorithm

Import all the libraries.

Load the MNIST dataset and assign the values to x_train, y_train, x_test and y_test where x denotes the values and y denotes the labels in the dataset.

Set one_hot=True while importing the dataset.

Print the shapes of all the variables.

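Define an input layer with shape [None, 784], that is, any number of images with 784 pixels each.

For the hidden layer, connect a fully connected layer of 256 units with the ReLU activation function to the input layer.

For the output layer, add a fully connected layer of 10 units with the softmax activation function.

Wrap the output layer in a regression layer with the SGD optimizer and the categorical cross-entropy loss, train the model, and print its accuracy.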

import tflearn
from tflearn.datasets import mnist

x_train, y_train, x_test, y_test = mnist.load_data(one_hot=True)
print("Training Data shape: ", x_train.shape)
print("Training Labels shape: ", y_train.shape)
print("Testing Data shape: ", x_test.shape)
print("Testing Labels shape: ", y_test.shape)
print("First 5 training labels: ")
print(y_train[:5])

# Input layer: batches of flattened 28x28 images (784 pixels each)
i_layer = tflearn.input_data(shape=[None, 784])
# Hidden layer: 256 units with ReLU
h_layer = tflearn.fully_connected(i_layer, 256, activation='relu')
# Output layer: 10 units (one per digit) with softmax
o_layer = tflearn.fully_connected(h_layer, 10, activation='softmax')
# Training configuration: SGD, learning rate 0.1, categorical cross-entropy
net = tflearn.regression(o_layer, optimizer='sgd', learning_rate=0.1,
                         loss='categorical_crossentropy')

model = tflearn.DNN(net)
# Train for 20 epochs in batches of 128, validating on the test set
model.fit(x_train, y_train, validation_set=(x_test, y_test),
          n_epoch=20, batch_size=128)

acc = model.evaluate(x_test, y_test)
print("Accuracy: ", acc)

We load the MNIST dataset, already split into training and testing data, with the labels in one-hot encoded format. We then define the architecture of the neural network and set up its training parameters, such as the learning rate and the loss function.

We then train the model on the training data for 20 epochs, validating its performance on the testing data during training. Finally, we evaluate the trained model on the testing data and print its accuracy.
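The accuracy here is the fraction of test images whose most probable predicted class matches the true label. A minimal NumPy sketch of that computation, on hypothetical predictions for four images and three classes, assuming TfLearn's default accuracy metric for one-hot labels works this way:

```python
import numpy as np


def accuracy(probs, y_one_hot):
    """Fraction of rows whose highest-probability class matches the one-hot label."""
    predicted = np.argmax(probs, axis=1)
    actual = np.argmax(y_one_hot, axis=1)
    return float(np.mean(predicted == actual))


# Hypothetical softmax outputs for 4 test images and their one-hot labels
probs = np.array([
    [0.1, 0.7, 0.2],  # predicts class 1
    [0.8, 0.1, 0.1],  # predicts class 0
    [0.3, 0.3, 0.4],  # predicts class 2
    [0.2, 0.5, 0.3],  # predicts class 1
])
labels = np.eye(3)[[1, 0, 1, 1]]  # true classes 1, 0, 1, 1
print(accuracy(probs, labels))    # 0.75
```

Three of the four predictions match, so the accuracy is 0.75.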

Output

Example 4

In the previous example, the model reaches an accuracy of about 97.5%, which is very good. The accuracy can also be tuned by changing the optimizer or the loss function. In this example, we replace SGD with the Adam optimizer while keeping the rest of the configuration, including the learning rate of 0.1, unchanged.

Algorithm

Import all the libraries.

Load the MNIST dataset and assign the values to x_train, y_train, x_test and y_test where x denotes the values and y denotes the labels in the dataset.

Set one_hot=True while importing the dataset.

Define an input layer with shape [None, 784].

For the hidden layer, connect a fully connected layer of 256 units with the ReLU activation function to the input layer.

For the output layer, add a fully connected layer of 10 units with the softmax activation function.

Wrap the output layer in a regression layer with the Adam optimizer and the categorical cross-entropy loss, train the model, and print its accuracy.

import tflearn
from tflearn.datasets import mnist

x_train, y_train, x_test, y_test = mnist.load_data(one_hot=True)

i_layer = tflearn.input_data(shape=[None, 784])
h_layer = tflearn.fully_connected(i_layer, 256, activation='relu')
o_layer = tflearn.fully_connected(h_layer, 10, activation='softmax')
# Same network as before, but trained with Adam (learning rate still 0.1)
net = tflearn.regression(o_layer, optimizer='adam', learning_rate=0.1,
                         loss='categorical_crossentropy')

model = tflearn.DNN(net)
model.fit(x_train, y_train, validation_set=(x_test, y_test),
          n_epoch=20, batch_size=128)

acc = model.evaluate(x_test, y_test)
print("Accuracy: ", acc)

Output

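As the output shows, this accuracy is very low, so we stick with the SGD optimizer for our purposes. A likely reason is the learning rate rather than Adam itself: 0.1 is 100 times the default learning rate of 0.001 that TfLearn uses for Adam, and steps that large can keep the model from converging.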
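One way to see why the learning rate matters so much for Adam is to look at its first update. Starting from zero moment estimates and using the commonly cited defaults beta1 = 0.9, beta2 = 0.999 and epsilon = 1e-8, Adam's bias-corrected first step is roughly learning_rate times sign(gradient), whatever the gradient's size. This NumPy sketch (the helper name `adam_first_step` is hypothetical) shows that with a learning rate of 0.1 every weight moves by about 0.1 on the first step, 100 times more than with 0.001.

```python
import numpy as np


def adam_first_step(grad, learning_rate, beta1=0.9, beta2=0.999, eps=1e-8):
    """Return the parameter change produced by Adam's first update (t = 1) from zero moments."""
    m = (1 - beta1) * grad          # first-moment (mean) estimate
    v = (1 - beta2) * grad ** 2     # second-moment (uncentered variance) estimate
    m_hat = m / (1 - beta1 ** 1)    # bias correction at step t = 1
    v_hat = v / (1 - beta2 ** 1)
    return -learning_rate * m_hat / (np.sqrt(v_hat) + eps)


grads = np.array([1e-4, 0.5, -20.0])  # gradients of very different sizes
for lr in (0.1, 0.001):
    print(lr, np.round(adam_first_step(grads, lr), 6))
```

Because Adam divides each gradient by its own running magnitude, the learning rate largely sets the step size directly, which is why small values such as 0.001 are the usual starting point.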

Conclusion

As seen above, TfLearn is a simple, easy-to-understand library for building deep learning models. Because it is designed to be simple, however, it supports fewer activation and loss functions than TensorFlow or PyTorch, and it offers far less room to customize models.

The library is used in computer vision, for tasks such as image segmentation and object detection with Convolutional Neural Networks, and in education and research, where algorithms can be built and taught in a classroom setting.