Non-Linear SVM in Machine Learning

Introduction

Support Vector Machine (SVM) is one of the most popular supervised Machine Learning algorithms for classification as well as regression. The SVM algorithm looks for a hyperplane (a line in two dimensions) that separates n-dimensional data into classes with the widest possible margin. A new data point can then be classified according to the side of this hyperplane on which it falls.

The SVM algorithm places two parallel hyperplanes, one on each side of the decision boundary, and maximizes the margin between them. The points that lie on these hyperplanes are known as support vectors, hence the name Support Vector Machine.

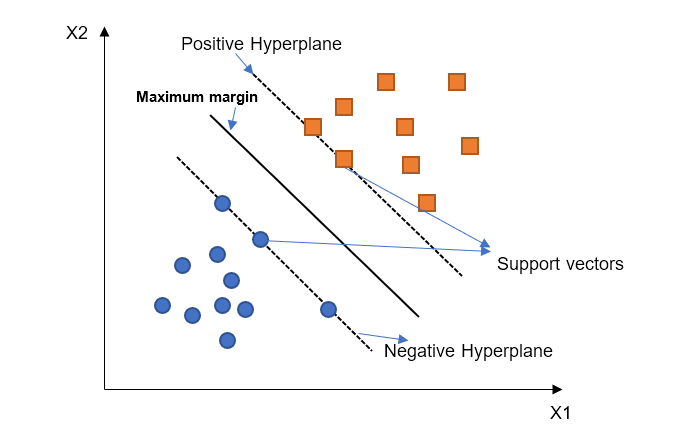

The diagram above shows the decision boundary and hyperplanes for an SVM that classifies data into two classes.

The diagram illustrates how a linear SVM works. The two classes are separated by a maximum-margin hyperplane, which lies between the negative and positive hyperplanes and maximizes the margin between them. The support vectors are the points lying on the negative and positive hyperplanes.
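The following minimal sketch illustrates the margin and the support vectors. It assumes scikit-learn is installed and fits a linear `SVC` on two synthetic, well-separated clusters. The cluster centres, `C=1000.0` and `random_state=0` are illustrative choices, not values from this article. With a linear kernel, `coef_` holds the normal vector w of the separating hyperplane, and the distance between the two margin hyperplanes is 2/‖w‖. The support vectors should have decision values close to +1 or -1.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.svm import SVC

# Two well-separated clusters (illustrative data, linearly separable)
X, y = make_blobs(n_samples=100, centers=[[-3, -3], [3, 3]],
                  cluster_std=0.8, random_state=0)

# A large C approximates a hard-margin linear SVM
clf = SVC(kernel="linear", C=1000.0).fit(X, y)

w = clf.coef_[0]                  # normal vector of the separating hyperplane
margin = 2.0 / np.linalg.norm(w)  # distance between the two margin hyperplanes

# Support vectors lie on the margin hyperplanes w.x + b = +1 and w.x + b = -1
sv_scores = clf.decision_function(clf.support_vectors_)

print("number of support vectors:", len(clf.support_vectors_))
print("margin width:", round(margin, 3))
print("decision values at support vectors:", np.round(sv_scores, 3))
```

Typically only a handful of the 100 points end up as support vectors. The remaining points do not affect the position of the hyperplane.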

For example, given a data set of cat and dog images, an SVM can be trained as a classifier that labels each image as either a cat or a dog.

Non-Linear SVM

The data we receive in the real world is not always linearly separable. A linear SVM works well for linearly separable data. Non-linear data, however, can be handled effectively with a non-linear SVM that uses a non-linear kernel.

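A straight line (or, in higher dimensions, a flat hyperplane) can separate two classes only when they are linearly separable. When the boundary between the classes is curved, a non-linear SVM is required. The number of classes is a separate matter. Both linear and non-linear SVMs handle more than two classes by combining several binary classifiers, for example in a one-vs-one or one-vs-rest scheme.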
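As a minimal sketch of the multi-class point, the example below uses scikit-learn's `SVC` with a linear kernel on four synthetic clusters. Internally, the classifier trains one binary classifier per pair of classes (one-vs-one). Setting `decision_function_shape="ovo"` exposes all 4·3/2 = 6 pairwise scores per sample. The data set and parameters are illustrative assumptions.

```python
from sklearn.datasets import make_blobs
from sklearn.svm import SVC

# Four clusters in 2-D (illustrative data)
X, y = make_blobs(n_samples=200, centers=4, cluster_std=0.6, random_state=0)

# A linear SVM handles more than two classes when they are linearly separable
clf = SVC(kernel="linear", decision_function_shape="ovo").fit(X, y)

scores = clf.decision_function(X[:5])
print("classes:", clf.classes_)
print("pairwise scores per sample:", scores.shape[1])  # 4 * 3 / 2 = 6
print("training accuracy:", clf.score(X, y))
```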

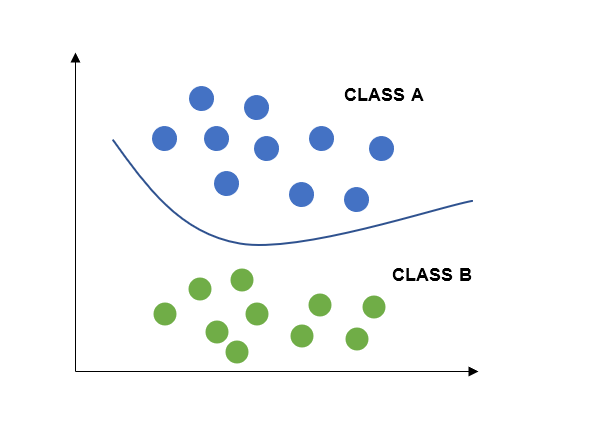

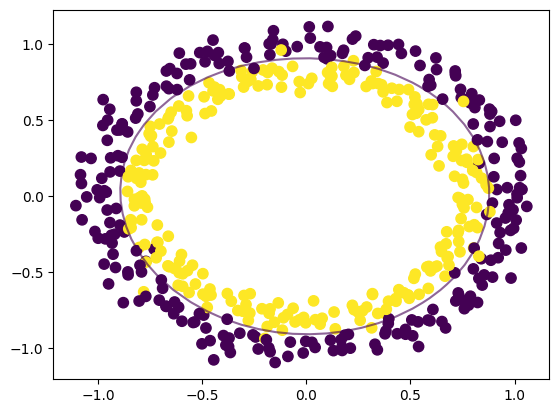

The diagram above shows that in a non-linear SVM, the boundary separating the two classes is non-linear. The two classes, Class A and Class B, are separated by a curved boundary that maximizes the margin between them.
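The difference can be checked numerically with a small sketch. It assumes scikit-learn and uses two concentric rings generated by `make_circles`. The `factor=0.5`, `noise=0.06` and `random_state=0` settings are illustrative. A linear-kernel SVM cannot find a useful straight boundary for this data, while an RBF-kernel SVM finds a curved one.

```python
from sklearn.datasets import make_circles
from sklearn.svm import SVC

# Two concentric rings: no straight line can separate them
X, y = make_circles(n_samples=500, factor=0.5, noise=0.06, random_state=0)

linear_clf = SVC(kernel="linear").fit(X, y)
rbf_clf = SVC(kernel="rbf", C=1.0).fit(X, y)

linear_acc = linear_clf.score(X, y)
rbf_acc = rbf_clf.score(X, y)
print(f"linear kernel training accuracy: {linear_acc:.2f}")
print(f"RBF kernel training accuracy:    {rbf_acc:.2f}")
```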

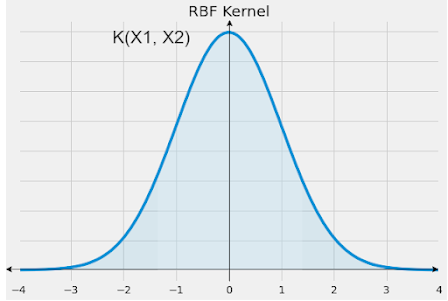

The most commonly used kernel with a non-linear SVM is the Radial Basis Function (RBF) kernel. Other non-linear kernels exist as well, such as the polynomial kernel.
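The following sketch compares the polynomial kernel with the RBF kernel, assuming scikit-learn. In scikit-learn, the polynomial kernel is (γ⟨x, z⟩ + coef0)^degree. A degree of 2 suits ring-shaped data because a circle is a quadratic curve. The values `degree=2`, `coef0=1.0` and the synthetic data are illustrative choices.

```python
from sklearn.datasets import make_circles
from sklearn.svm import SVC

X, y = make_circles(n_samples=500, factor=0.5, noise=0.06, random_state=0)

# Polynomial kernel: K(x, z) = (gamma * <x, z> + coef0) ** degree
poly_clf = SVC(kernel="poly", degree=2, coef0=1.0).fit(X, y)
rbf_clf = SVC(kernel="rbf").fit(X, y)

print("polynomial (degree 2):", poly_clf.score(X, y))
print("RBF:", rbf_clf.score(X, y))
```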

Let us learn more about the RBF kernel and see how it helps with non-linear classification in SVM.


The RBF Kernel

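A kernel is a function that returns the inner product of two data points after both have been mapped from the original n-dimensional space into a higher, m-dimensional feature space (m > n). Thanks to the kernel trick, this value is computed directly from the original inputs, so the mapping never has to be carried out explicitly.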
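A small worked example makes this concrete. It uses the degree-2 polynomial kernel K(x, z) = (x·z)² in two dimensions, chosen here only because its feature map is short enough to write out. That map, φ(x) = (x₁², √2·x₁x₂, x₂²), takes 2-D points into 3-D. The helper names `phi` and `poly2_kernel` are hypothetical and introduced only for this illustration.

```python
import numpy as np

def phi(v):
    """Hypothetical helper: explicit feature map of the degree-2 polynomial kernel (2-D -> 3-D)."""
    x1, x2 = v
    return np.array([x1 ** 2, np.sqrt(2) * x1 * x2, x2 ** 2])

def poly2_kernel(a, b):
    """Hypothetical helper: the same inner product computed in the original 2-D space."""
    return np.dot(a, b) ** 2

x = np.array([1.0, 2.0])
z = np.array([3.0, -1.0])

explicit = np.dot(phi(x), phi(z))  # map both points to 3-D, then take the inner product
trick = poly2_kernel(x, z)         # never builds the 3-D vectors
print(explicit, trick)
```

Both routes give the same number (1, up to floating-point rounding). The kernel trick relies on exactly this identity. For the RBF kernel, the corresponding feature space is infinite-dimensional, so the direct kernel evaluation is the only practical route.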

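The RBF kernel implicitly maps the data into an infinite-dimensional feature space, where data that is not linearly separable in the original space becomes linearly separable, or nearly so. Because of the kernel trick, this transformation is never computed explicitly.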
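The idea of "transform, then separate linearly" can be seen with a hand-chosen lift, assuming scikit-learn. The lift is (x₁, x₂) → (x₁, x₂, x₁² + x₂²), which adds the squared radius as a third feature. This is not the RBF kernel's own (infinite-dimensional) feature map. It is only an illustrative stand-in showing how a transformation can make ring-shaped data linearly separable.

```python
import numpy as np
from sklearn.datasets import make_circles
from sklearn.svm import SVC

X, y = make_circles(n_samples=500, factor=0.5, noise=0.06, random_state=0)

# Hand-chosen lift (illustrative, NOT the RBF feature map): append the squared radius
X_lifted = np.column_stack([X, (X ** 2).sum(axis=1)])

flat = SVC(kernel="linear").fit(X, y)            # linear SVM in the original 2-D space
lifted = SVC(kernel="linear").fit(X_lifted, y)   # linear SVM in the lifted 3-D space

print("linear SVM, original features:", flat.score(X, y))
print("linear SVM, lifted features:  ", lifted.score(X_lifted, y))
```

In the lifted space, a flat plane at a fixed height of x₁² + x₂² separates the inner ring from the outer one.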

The RBF kernel is defined as:

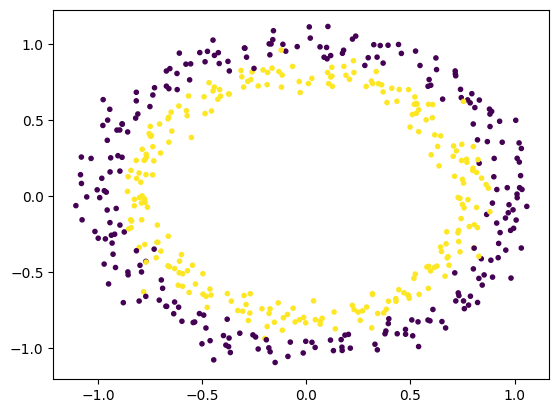
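The RBF kernel is commonly written as $K(\mathbf{x}, \mathbf{x}') = \exp\left(-\gamma \lVert \mathbf{x} - \mathbf{x}' \rVert^2\right)$. When it is expressed with a width parameter σ instead, $\gamma = 1/(2\sigma^2)$. The short sketch below computes this formula by hand with NumPy and checks it against scikit-learn's `sklearn.metrics.pairwise.rbf_kernel`. The points, `gamma=0.5` and the helper name `rbf` are illustrative assumptions.

```python
import numpy as np
from sklearn.metrics.pairwise import rbf_kernel

def rbf(a, b, gamma):
    """Hypothetical helper: K(a_i, b_j) = exp(-gamma * ||a_i - b_j||^2) for all row pairs."""
    sq_dists = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-gamma * sq_dists)

A = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]])
gamma = 0.5

K_manual = rbf(A, A, gamma)
K_sklearn = rbf_kernel(A, A, gamma=gamma)
print(np.round(K_manual, 4))
```

The diagonal entries are 1 because every point is identical to itself. The off-diagonal values shrink toward 0 as points move apart. A larger γ makes them shrink faster, which yields a more flexible (and more easily overfitted) decision boundary.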

Example

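```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn import datasets as ds
from sklearn import svm

# In a Jupyter notebook you may also add the magic: %matplotlib inline

# Two concentric rings of points: not separable by a straight line
X, y = ds.make_circles(n_samples=500, noise=0.06)

plt.scatter(X[:, 0], X[:, 1], c=y, marker='.')
plt.show()

# SVM with the non-linear RBF kernel
classifier_non_linear = svm.SVC(kernel='rbf', C=1.0)
classifier_non_linear.fit(X, y)

def boundary_plot(m, axis=None):
    """Draw the decision boundary (decision_function == 0) of a fitted model m."""
    if axis is None:
        axis = plt.gca()
    limit_x = axis.get_xlim()
    limit_y = axis.get_ylim()

    # Evaluate the decision function on a 30 x 30 grid covering the current axes
    x_lines = np.linspace(limit_x[0], limit_x[1], 30)
    y_lines = np.linspace(limit_y[0], limit_y[1], 30)
    grid_y, grid_x = np.meshgrid(y_lines, x_lines)
    xy = np.vstack([grid_x.ravel(), grid_y.ravel()]).T
    values = m.decision_function(xy).reshape(grid_x.shape)

    axis.contour(grid_x, grid_y, values, levels=[0], alpha=0.6, linestyles=['-'])

# Plot the data first so that the axes limits cover it, then draw the boundary
plt.scatter(X[:, 0], X[:, 1], c=y, s=55)
boundary_plot(classifier_non_linear)

# Circle the support vectors (hollow markers with black edges)
plt.scatter(classifier_non_linear.support_vectors_[:, 0],
            classifier_non_linear.support_vectors_[:, 1],
            s=55, lw=1, facecolors='none', edgecolors='k')
plt.show()
```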

Output

Conclusion

Non-linear SVM is a handy and effective supervised learning algorithm for both classification and regression. It is especially useful when the data is not linearly separable. In that case, it applies the kernel trick with a non-linear kernel, such as the RBF kernel or another suitable kernel function.
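For the regression case, the following minimal sketch uses scikit-learn's `SVR` with an RBF kernel to fit a noisy sine curve. The synthetic data, `C=10.0` and `epsilon=0.1` are illustrative assumptions, not tuned values.

```python
import numpy as np
from sklearn.svm import SVR

rng = np.random.default_rng(0)

# Noisy samples of a curved function (illustrative data)
X = np.sort(rng.uniform(0, 5, size=(200, 1)), axis=0)
y = np.sin(X).ravel() + rng.normal(scale=0.1, size=200)

# Support vector regression with the non-linear RBF kernel
reg = SVR(kernel="rbf", C=10.0, epsilon=0.1).fit(X, y)

r2 = reg.score(X, y)  # coefficient of determination on the training data
print(f"training R^2: {r2:.3f}")
```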