Applications of Linear Algebra in Machine Learning

Machine learning relies heavily on linear algebra, which underlies the fundamental models and algorithms in use today. Think of it as the language machines use to describe and make sense of complex data. Without linear algebra, machine learning would be like trying to find your way through a thick forest without a map or compass. It gives us the tools to represent and manipulate data efficiently, extract insights, and optimize models. Vectors, matrices, and operations such as matrix multiplication and decomposition are the building blocks that make this possible. Understanding linear algebra is therefore a key first step toward becoming a skilled machine learning practitioner, whether you're exploring regression, dimensionality reduction, or deep learning. In this article, we'll examine applications of linear algebra in machine learning.

Understanding Linear Algebra

Linear algebra is the fundamental mathematics behind many machine learning techniques. It deals with vectors, matrices, and the operations on them, which lets us manage and analyze data systematically.

Vectors come first. Geometrically, a vector is a quantity with both magnitude and direction; in practice, it is an ordered list of numbers. In machine learning, vectors can represent data points, features, or variables. Addition, subtraction, and scalar multiplication can be applied to vectors to combine or scale their values.
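As a minimal sketch of these vector operations, the snippet below uses NumPy. The vectors `u` and `v` are hypothetical values chosen only for illustration.

```python
import numpy as np

# Two hypothetical feature vectors (illustrative values only)
u = np.array([1.0, 2.0, 3.0])
v = np.array([4.0, 5.0, 6.0])

vector_sum = u + v              # element-wise addition
vector_diff = u - v             # element-wise subtraction
scaled = 2.0 * u                # scalar multiplication stretches u
magnitude = np.linalg.norm(u)   # Euclidean length of u

print(vector_sum)   # [5. 7. 9.]
print(scaled)       # [2. 4. 6.]
```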

Next come matrices, which are rectangular arrays of numbers arranged in rows and columns. Representing data as a matrix is a powerful tool, especially when dealing with many variables or features; a common convention is one row per sample and one column per feature. Matrix addition, subtraction, and multiplication let us combine, transform, and analyze data systematically.
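The matrix operations mentioned here can be sketched in NumPy as follows. The matrices `A` and `B` are hypothetical and exist only to show the mechanics, including the fact that matrix multiplication generally depends on the order of the factors.

```python
import numpy as np

# Two hypothetical 2x2 matrices (illustrative values only)
A = np.array([[1.0, 2.0],
              [3.0, 4.0]])
B = np.array([[0.0, 1.0],
              [1.0, 0.0]])

matrix_sum = A + B     # element-wise addition
matrix_diff = A - B    # element-wise subtraction
product_ab = A @ B     # matrix product: here it swaps the columns of A
product_ba = B @ A     # reversed order: here it swaps the rows of A

# Matrix multiplication is not commutative in general
same_result = np.array_equal(product_ab, product_ba)
print(same_result)  # False
```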

Vector spaces are another core concept in linear algebra. A vector space is a collection of vectors that is closed under vector addition and scalar multiplication and satisfies a small set of axioms, such as associativity and the existence of a zero vector. It offers a mathematical foundation for understanding the properties of vectors and the relationships between them.

Linear transformations are also crucial in linear algebra. A linear transformation is a function that maps vectors from one vector space to another while preserving vector addition and scalar multiplication. Machine learning relies heavily on linear transformations because they let us rotate, scale, project, or otherwise reshape data and represent it in different ways.
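To make the idea of a linear transformation concrete, here is a small sketch that uses a 2-D rotation matrix as the transformation. The choice of a 90-degree rotation and the vectors `x` and `y` are assumptions made for illustration; the final check verifies numerically that the map preserves addition and scalar multiplication.

```python
import numpy as np

theta = np.pi / 2  # rotate by 90 degrees counter-clockwise
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])

x = np.array([1.0, 0.0])
y = np.array([0.0, 2.0])

rotated = R @ x  # applying the transformation is a matrix-vector product

# Linearity check: T(a*x + b*y) equals a*T(x) + b*T(y)
a, b = 3.0, -1.5
lhs = R @ (a * x + b * y)
rhs = a * (R @ x) + b * (R @ y)
is_linear = bool(np.allclose(lhs, rhs))
print(is_linear)  # True
```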

Linear Algebra in Machine Learning Algorithms

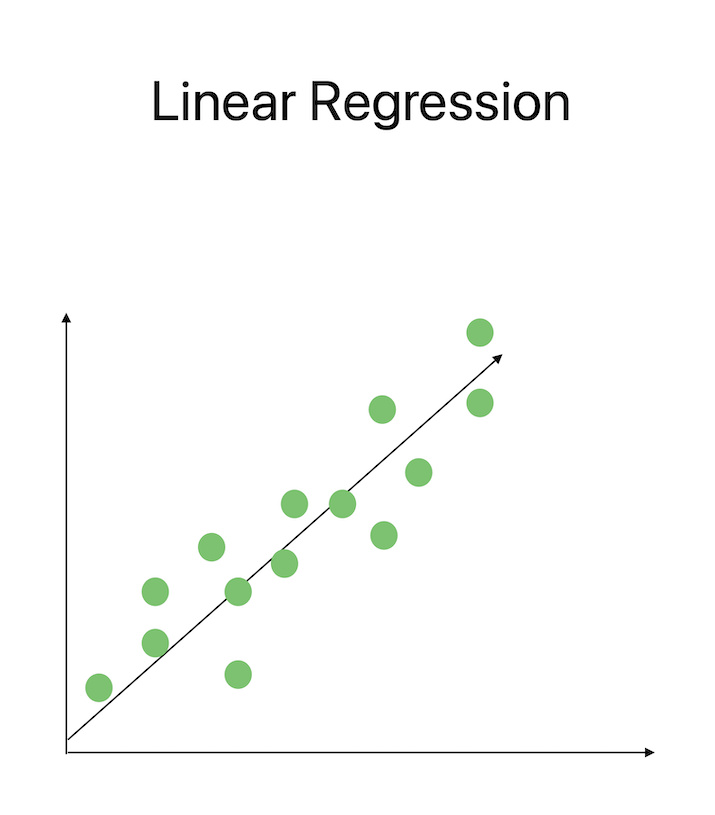

Linear Regression

Linear regression is a fundamental machine learning method that models the relationship between the input features and a target variable as a linear function. By solving a system of linear equations with matrix operations, we can find the coefficients that minimize the squared error and give the best fit to the data.
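One way to sketch this is ordinary least squares in NumPy. The data below is synthetic, generated from the assumed line y = 2x + 1, so the recovered coefficients can be checked by eye. The snippet solves the problem twice: once with `np.linalg.lstsq`, and once through the normal equations XᵀXβ = Xᵀy.

```python
import numpy as np

# Synthetic data from the assumed relationship y = 2*x + 1 (no noise)
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0

# Design matrix: a column of ones for the intercept, then the feature
X = np.column_stack([np.ones_like(x), x])

# Least-squares solution: minimizes ||X @ beta - y||^2
beta, residuals, rank, singular_values = np.linalg.lstsq(X, y, rcond=None)
intercept, slope = beta

# The same coefficients from the normal equations (X^T X) beta = X^T y
beta_normal = np.linalg.solve(X.T @ X, X.T @ y)

print(intercept, slope)
```

The normal equations are fine for a small, well-conditioned example like this one; `lstsq` is usually the more numerically robust choice.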

Principal Component Analysis (PCA)

PCA is a common method for reducing dimensionality in machine learning. It uses linear algebra, particularly eigenvalues and eigenvectors, to transform high-dimensional data into a lower-dimensional space while retaining as much of the variance as possible. To do this, PCA decomposes the covariance matrix of the data; the eigenvectors with the largest eigenvalues give the directions along which the data varies most.
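The following is a minimal sketch of PCA through an eigendecomposition of the covariance matrix. The data is synthetic and assumed to be stretched along the direction (1, 1), and the choice of `k = 1` component is arbitrary; production code would more often call a library implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic 2-D data stretched along the direction (1, 1), plus small noise
t = rng.normal(size=200)
noise = 0.1 * rng.normal(size=(200, 2))
X = np.column_stack([t, t]) + noise

# 1. Center the data and compute the covariance matrix (features in columns)
X_centered = X - X.mean(axis=0)
cov = np.cov(X_centered, rowvar=False)

# 2. Eigendecomposition; eigh is meant for symmetric matrices and
#    returns eigenvalues in ascending order, so reorder to descending
eigvals, eigvecs = np.linalg.eigh(cov)
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# 3. Project onto the top-k eigenvectors (the principal components)
k = 1
components = eigvecs[:, :k]
X_reduced = X_centered @ components

# Fraction of the total variance kept by the first k components
explained_ratio = eigvals[:k].sum() / eigvals.sum()
print(X_reduced.shape, explained_ratio)
```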

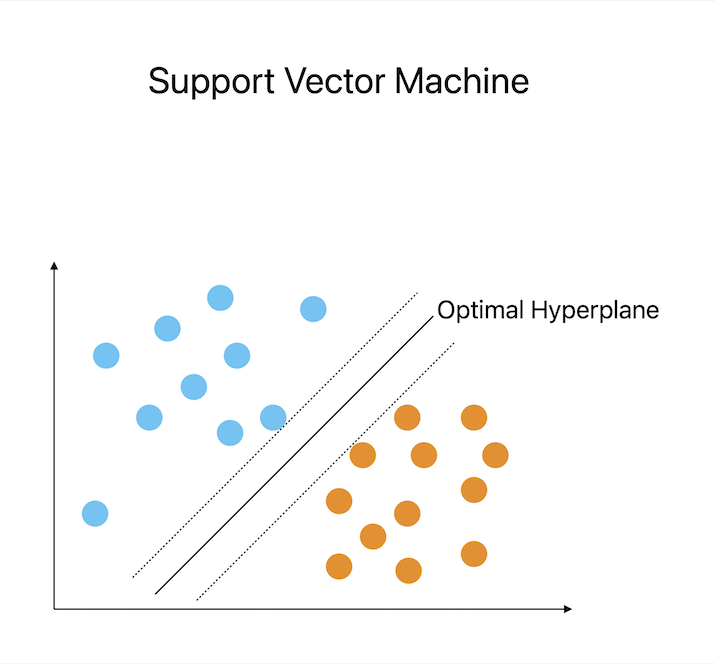

Support Vector Machines

Support Vector Machines (SVMs) use linear algebra to find the hyperplane that separates data points of different classes with the largest margin. Because they represent data points as vectors and rely on dot products and matrix operations, SVMs can classify new instances efficiently; with kernel functions, they can also handle non-linear decision boundaries.
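The role of the dot product can be sketched with the decision rule of a linear SVM. Note that the hyperplane parameters `w` and `b` below are hypothetical, hand-picked values; in a real SVM they come out of training, which this sketch does not perform.

```python
import numpy as np

# Hypothetical hyperplane w . x + b = 0 (in practice learned during training)
w = np.array([1.0, -1.0])
b = 0.0

# Three illustrative points to classify, one per row
points = np.array([[2.0, 1.0],
                   [1.0, 3.0],
                   [0.5, 0.0]])

# One dot product per point: the signed score relative to the hyperplane
scores = points @ w + b                  # values: 1.0, -2.0, 0.5
labels = np.where(scores >= 0, 1, -1)    # the sign of the score is the class

# Geometric distance of each point from the hyperplane
distances = np.abs(scores) / np.linalg.norm(w)
print(labels)
```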

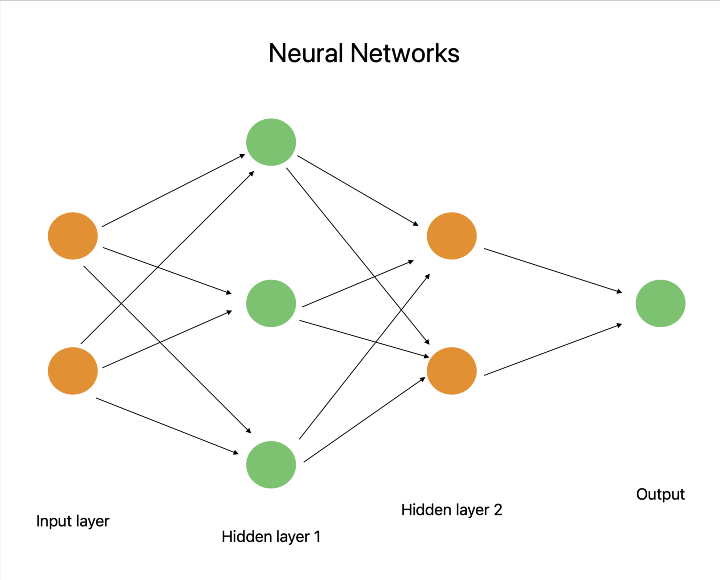

Neural Networks

Neural networks, the core technology of deep learning, rely heavily on linear algebra. The weights and biases of each layer are stored as matrices and vectors, and forward and backward propagation consist largely of matrix multiplications interleaved with non-linear activation functions. These operations let neural networks learn complex patterns and make accurate predictions.
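A forward pass through a small two-layer network can be sketched as below. The layer sizes, the random weights, and the choice of ReLU as the activation are all assumptions made for illustration; no training or backward pass is shown.

```python
import numpy as np

def relu(z):
    """Element-wise non-linear activation: max(z, 0)."""
    return np.maximum(z, 0.0)

rng = np.random.default_rng(0)

# Hypothetical batch: 4 inputs with 3 features each
X = rng.normal(size=(4, 3))

# Randomly initialized weights and zero biases (untrained)
W1 = rng.normal(size=(3, 5))
b1 = np.zeros(5)
W2 = rng.normal(size=(5, 2))
b2 = np.zeros(2)

# Forward propagation: matrix multiply, add bias, apply activation
hidden = relu(X @ W1 + b1)   # shape (4, 5)
output = hidden @ W2 + b2    # shape (4, 2)
print(output.shape)  # (4, 2)
```

Processing the whole batch as one matrix product, rather than looping over inputs, is what lets this computation map well onto vectorized hardware.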

Applications of Linear Algebra

Image recognition and computer vision

Linear algebra is essential to image recognition. Convolutional neural networks (CNNs) and similar methods represent images as matrices or tensors and extract features from them with operations such as convolution and pooling. This lets them recognize objects, detect patterns, and classify images with high accuracy.
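The convolution step can be sketched with a plain NumPy loop. The helper `conv2d_valid` is a hypothetical name written for this example, and the tiny image and edge-detecting kernel are illustrative. Like most CNN layers, it computes a cross-correlation (the kernel is not flipped), with no padding and a stride of 1.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and take
    the sum of element-wise products at each position."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Illustrative 4x4 image: dark left half, bright right half
image = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1]], dtype=float)

# Horizontal difference kernel: responds where brightness increases
kernel = np.array([[-1.0, 1.0]])

edges = conv2d_valid(image, kernel)
print(edges.shape)  # (4, 3)
```

Each row of `edges` is nonzero only in the middle column, which is where the vertical edge sits.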

Natural language processing and text analysis

Natural language processing (NLP) and text analysis both rely heavily on linear algebra. Word embeddings such as Word2Vec and GloVe represent words as high-dimensional vectors and capture the semantic relationships between them. These embeddings are closely related to linear algebra ideas such as matrix factorization, and they enable NLP systems to interpret text input, perform sentiment analysis, and even generate language.
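A common way to compare word vectors is cosine similarity, sketched below. The three-dimensional vectors are made-up toy values, not real Word2Vec or GloVe embeddings, and `cosine_similarity` is a helper defined here for the example.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between a and b: dot product over norms."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Toy embeddings (hypothetical values, far smaller than real embeddings)
embeddings = {
    "king":  np.array([0.9, 0.8, 0.1]),
    "queen": np.array([0.85, 0.75, 0.2]),
    "apple": np.array([0.1, 0.2, 0.9]),
}

sim_royal = cosine_similarity(embeddings["king"], embeddings["queen"])
sim_fruit = cosine_similarity(embeddings["king"], embeddings["apple"])

# Vectors pointing in similar directions score close to 1
print(sim_royal > sim_fruit)  # True
```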

Recommender systems and collaborative filtering

Recommender systems based on collaborative filtering use linear algebra to generate personalized suggestions. By modeling user-item interactions as a matrix and applying matrix factorization techniques such as Singular Value Decomposition (SVD), these systems can identify similar users and items and make accurate recommendations based on users' preferences and behavior.
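The matrix factorization idea can be sketched with a truncated SVD of a small ratings matrix. The ratings are hypothetical, and the sketch assumes every user has rated every item; real rating matrices are mostly empty, so practical systems use factorization methods that handle missing entries.

```python
import numpy as np

# Hypothetical ratings: rows are users, columns are items
R = np.array([[5.0, 4.0, 1.0, 1.0],
              [4.0, 5.0, 1.0, 2.0],
              [1.0, 1.0, 5.0, 4.0],
              [2.0, 1.0, 4.0, 5.0]])

# Factor R = U @ diag(s) @ Vt, singular values in descending order
U, s, Vt = np.linalg.svd(R, full_matrices=False)

# Keep only the k strongest latent factors (k = 2 is an arbitrary choice)
k = 2
R_approx = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

# Frobenius-norm error of the rank-k approximation
error = np.linalg.norm(R - R_approx)
print(np.round(R_approx, 1))
```

The rows of `U[:, :k]` can be read as user factors and the columns of `Vt[:k, :]` as item factors; users with similar rows have similar tastes.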

Anomaly detection and clustering algorithms

Anomaly detection and clustering algorithms use ideas from linear algebra to find unusual patterns or to group related data points. Methods such as k-means clustering and spectral clustering rely on matrix operations, distance computations, and eigenvectors to divide data into separate groups or identify outliers.
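The use of eigenvectors in spectral clustering can be sketched on a tiny graph. The graph below, two triangles joined by a single edge, is a hypothetical example. This is a simplified two-cluster variant that splits nodes by the sign of the eigenvector for the second-smallest eigenvalue of the graph Laplacian, rather than running k-means on several eigenvectors as full spectral clustering typically does.

```python
import numpy as np

# Adjacency matrix: nodes 0-2 form one triangle, nodes 3-5 another,
# and a single edge (2, 3) links the two groups
A = np.zeros((6, 6))
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
for i, j in edges:
    A[i, j] = A[j, i] = 1.0

# Unnormalized graph Laplacian L = D - A, with D the diagonal degree matrix
D = np.diag(A.sum(axis=1))
L = D - A

# eigh returns eigenvalues of the symmetric matrix L in ascending order
eigvals, eigvecs = np.linalg.eigh(L)

# Eigenvector of the second-smallest eigenvalue (the Fiedler vector)
fiedler = eigvecs[:, 1]

# Nodes with the same sign land in the same cluster
labels = (fiedler > 0).astype(int)
print(labels)
```

Which group is labeled 0 and which is labeled 1 depends on the arbitrary sign of the eigenvector, so only the grouping itself is meaningful.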

Conclusion

In conclusion, it is hard to overstate the value of linear algebra in machine learning. It is the foundation of many algorithms and methods, allowing for efficient representation, manipulation, and analysis of data. With linear algebra we can solve systems of equations, reduce dimensionality, and model relationships between variables. Matrix operations, eigenvectors, and vector spaces let us find trends, make accurate predictions, and improve models.