TabNet in Machine Learning

In this tutorial, we are going to learn about TabNet in machine learning. Deep learning models have become increasingly popular for tabular data, but tree-based methods such as XGBoost and LightGBM, often combined with feature selection techniques such as RFE, have long dominated this area because they pick out relevant features effectively. TabNet, however, changes that dynamic.

Researchers from Google Cloud proposed TabNet in 2019. The idea behind TabNet is to apply deep neural networks successfully to tabular data, which still makes up a significant share of the user and processed data that organizations work with.

TabNet aims to combine the best of both worlds: it is explainable (akin to simpler tree-based models) while still being high performing (similar to deep neural networks). This makes it well suited to applications in the retail, financial, and insurance sectors, including forecasting, fraud detection, and credit score prediction.

TabNet uses a machine learning technique called sequential attention to select which features to reason from at each decision step of the model. This lets the model spend its capacity on the most relevant features and explain how it arrives at its predictions. The original paper reports that TabNet outperforms other neural network and decision tree variants on a range of tabular datasets, and its architecture also offers feature attributions that are easy to understand. In short, TabNet brings deep learning to tabular data with strong performance and interpretability.

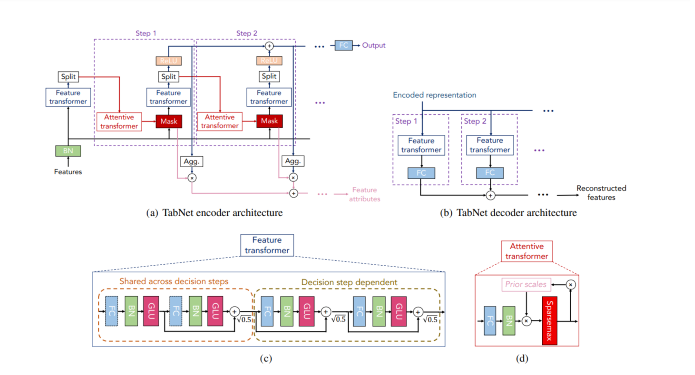

TabNet architecture

Encoder

The design consists of several consecutive decision steps, each passing its output on to the next. The paper also includes some guidance on how to choose the number of steps. Within a single step, three things happen:

- A feature transformer made up of four successive GLU blocks processes the features.

- An attentive transformer uses sparsemax to produce a sparse feature selection mask, which facilitates interpretability and improves learning because capacity is reserved for the most important features.

- The mask, together with the feature transformer, produces the two outputs n(d) and n(a), the decision and attention representations. n(d) feeds the prediction and n(a) is passed on to the next step.

No feature engineering is applied to the raw dataset, so all features are passed in. After batch normalization (BN), the data is sent to the feature transformer, where it goes through the four GLU blocks to produce the two outputs.

The decision output n(d) is what the prediction is built from: a continuous value for regression or class scores for classification.

n(a) is passed as input to the next attentive transformer, which starts the following step.
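To make the split into n(d) and n(a) concrete, here is a minimal sketch in plain Python. In the TabNet paper, the decision outputs of all steps are passed through a ReLU and summed, and a final linear layer (not shown here) maps the sum to the prediction. The numbers, the width `n_d = 3`, and the helper name `split_output` are made up for illustration and do not come from any library.

```python
def split_output(transformed, n_d):
    """Split a feature transformer output into decision and attention parts."""
    return transformed[:n_d], transformed[n_d:]

# Made-up feature transformer outputs for two decision steps (width n_d + n_a = 5).
step_outputs = [
    [0.5, -1.0, 2.0, 0.3, -0.2],
    [-0.5, 1.5, 0.0, 0.1, 0.4],
]
n_d = 3  # assumed width of the decision part

aggregated = [0.0] * n_d
for transformed in step_outputs:
    decision, attention = split_output(transformed, n_d)
    # ReLU keeps only the positive part of each step's decision output.
    aggregated = [acc + max(d, 0.0) for acc, d in zip(aggregated, decision)]
    # `attention` would be handed to the next step's attentive transformer.
```

Summing after the ReLU means a step can only add evidence to the aggregated decision, which is also what makes it possible to measure how much each step contributed.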

Decoder

At each decision step, the TabNet decoder comprises a feature transformer followed by a fully connected layer.

Feature Transformer

The feature transformer consists of four successive blocks, each made up of a fully connected (FC) layer, a batch normalization (BN) layer, and a GLU. GLU stands for gated linear unit: it splits its input into two halves a and b and multiplies the first by the sigmoid of the second, GLU(a, b) = a ⊙ σ(b). The first two blocks are shared across all decision steps, and the last two are specific to each decision step. The layers are shared across decision steps for robust learning, since the same input features are used in each step. Normalization with a factor of √0.5 helps stabilize learning by ensuring that the variance throughout the network does not change dramatically. The block produces the two outputs n(d) and n(a), as explained previously.
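The gating and the √0.5 scaling can be sketched in a few lines of plain Python. This is only an illustration of the arithmetic, not the library's implementation: the input vector stands in for the output of an FC + BN pair, and the helper names `glu` and `residual_step` are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def glu(values):
    """Gated linear unit: gate the first half with the sigmoid of the second half."""
    if len(values) % 2 != 0:
        raise ValueError("GLU needs an even number of inputs")
    half = len(values) // 2
    a, b = values[:half], values[half:]
    return [ai * sigmoid(bi) for ai, bi in zip(a, b)]

def residual_step(previous, block_output):
    """Add a block's output to its input and rescale the sum by sqrt(0.5)."""
    scale = math.sqrt(0.5)
    return [(p + o) * scale for p, o in zip(previous, block_output)]

fc_bn_output = [2.0, -1.0, 0.0, 3.0]         # stand-in for an FC + BN output
gated = glu(fc_bn_output)                    # halves the width: 4 values in, 2 out
combined = residual_step([1.0, 1.0], gated)  # residual connection with scaling
```

Because the GLU halves the width, the FC layer in front of it has to produce twice as many units as the block is meant to output.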

Attentive Transformer

As you can see in the figure, the attentive transformer is made up of four layers: FC, BN, prior scales, and sparsemax. The n(a) input passes through a fully connected layer followed by batch normalization. The result is then multiplied by the prior scales, which record how much each feature has already been used in the preceding steps. The prior scales start at 1 for every feature, so all features are treated as equally available at the first step. Sparsemax then turns the scaled values into a sparse mask. TabNet's key benefit is that it uses soft feature selection with controllable sparsity in end-to-end learning, so a single model handles both feature selection and output mapping.
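The mask computation can be sketched without any dependencies. The `sparsemax` function below follows the sorting-based algorithm of Martins and Astudillo (2016), and `update_prior` follows the paper's rule of multiplying the prior by (γ − mask), where γ is the relaxation parameter. The scores are made up, and reusing the same scores for both steps is a simplification: in the real model each step has its own learned FC + BN mapping.

```python
def sparsemax(scores):
    """Project scores onto the probability simplex; many entries become exactly 0."""
    ordered = sorted(scores, reverse=True)
    running_sum = 0.0
    threshold = 0.0
    for k, value in enumerate(ordered, start=1):
        running_sum += value
        if 1.0 + k * value > running_sum:
            # Entry k is still in the support, so the threshold is updated.
            threshold = (running_sum - 1.0) / k
    return [max(s - threshold, 0.0) for s in scores]

def update_prior(prior, mask, gamma):
    """Shrink the prior of features that the current mask has already used."""
    return [p * (gamma - m) for p, m in zip(prior, mask)]

scores = [1.2, 0.9, 0.1, -0.5]   # hypothetical FC + BN output for four features
prior = [1.0, 1.0, 1.0, 1.0]     # every feature equally available at the start
gamma = 1.0                      # assumed relaxation value

first_mask = sparsemax([p * s for p, s in zip(prior, scores)])
prior = update_prior(prior, first_mask, gamma)
second_mask = sparsemax([p * s for p, s in zip(prior, scores)])
```

With γ = 1 a feature that was fully selected at one step gets a prior of 0 afterwards; larger values of γ leave more room for reusing a feature across steps.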

Key Points to Remember

A feature transformer, an attentive transformer, and feature masking make up each step of the TabNet encoder. A split block divides the processed representation so that it can be used both for the overall output and by the attentive transformer of the following step. The feature selection mask gives interpretable information about how the model behaves at each step, and the masks can be aggregated to obtain a global feature importance attribution.

Each step of the TabNet decoder includes a feature transformer block.

The feature transformer block shown is a four-layer network, with two layers shared across all decision steps and the other two specific to each decision step. Each layer is made up of a fully connected (FC) layer, BN, and a GLU nonlinearity.

In the attentive transformer block, a single-layer mapping is modulated with prior scale information, which aggregates how much each feature has been used before the current decision step.
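The way per-step masks are combined into one importance vector can also be sketched in plain Python. In the paper, each step's mask is weighted by how much that step contributed to the decision (the sum of its ReLU-activated decision output), and the weighted masks are then normalized to sum to 1. The masks and decision outputs below are invented, and the function names are hypothetical.

```python
def step_weight(decision_output):
    """Contribution of one decision step: sum of ReLU over its decision output."""
    return sum(max(v, 0.0) for v in decision_output)

def aggregate_masks(masks, decision_outputs):
    """Combine per-step masks into one normalized importance vector for a sample."""
    totals = [0.0] * len(masks[0])
    for mask, decision in zip(masks, decision_outputs):
        weight = step_weight(decision)
        for j, m in enumerate(mask):
            totals[j] += weight * m
    norm = sum(totals)
    if norm == 0.0:
        return totals  # no step contributed anything
    return [t / norm for t in totals]

# Two decision steps over four features (made-up values).
masks = [[0.65, 0.35, 0.0, 0.0], [0.0, 0.5, 0.5, 0.0]]
decisions = [[1.0, -2.0, 0.5], [0.5, 0.0, -1.0]]
importance = aggregate_masks(masks, decisions)
```

Averaging such per-sample vectors over a dataset gives a global ranking of features, while the individual masks show which features mattered at each step.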

TabNet Top Benefits

It can encode several kinds of data, such as images alongside tabular data, and model nonlinear relationships.

There is little need for feature engineering: you can pass all the columns to the model, and it will select the most useful features in a way that is also interpretable.

Implementing TabNet

For this tutorial, we will use the data from the Kaggle competition House Prices: Advanced Regression Techniques. In this example, we do no feature engineering or data cleaning, such as outlier removal, and use only the most basic imputation to handle missing values.

You can download the data and use it in your own environment; the code below reads it directly from the URLs listed under Dataset URL.

Installing and Importing libraries

    !pip install pytorch-tabnet

    import pandas as pd
    import numpy as np
    from pytorch_tabnet.tab_model import TabNetRegressor
    from sklearn.model_selection import KFold

Dataset URL

    train_data_url = "https://raw.githubusercontent.com/JayS420/Tabnetdataset/main/train.csv"
    test_data_url = "https://raw.githubusercontent.com/JayS420/Tabnetdataset/main/test.csv"

Importing Dataset

    # on_bad_lines="skip" replaces error_bad_lines=False, which was removed in pandas 2.0
    train_data = pd.read_csv(train_data_url, on_bad_lines="skip")
    test_data = pd.read_csv(test_data_url, on_bad_lines="skip")

Selecting some features

    features = ['LotArea', 'OverallQual', 'OverallCond', 'YearBuilt',
                'YearRemodAdd', 'BsmtFinSF1', 'BsmtFinSF2', 'TotalBsmtSF',
                '1stFlrSF', 'LowQualFinSF', 'GrLivArea', 'BsmtFullBath',
                'BsmtHalfBath', 'HalfBath', 'BedroomAbvGr', 'Fireplaces',
                'GarageCars', 'GarageArea', 'WoodDeckSF', 'OpenPorchSF',
                'EnclosedPorch', 'PoolArea', 'YrSold']

Splitting dataset

    X = train_data[features]
    y = np.log1p(train_data["SalePrice"])  # train on log(1 + price)
    X_test = test_data[features]
    # The Kaggle test set has no SalePrice column, so no y_test is defined.

Filling missing data

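Any missing values are filled with the simple column mean. There is some debate about the relative merits of doing this before cross-validation rather than within each fold, because imputing up front lets validation rows influence the fill values.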

    X = X.apply(lambda x: x.fillna(x.mean()), axis=0)
    X_test = X_test.apply(lambda x: x.fillna(x.mean()), axis=0)
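For comparison, here is a rough sketch of the alternative, where the fill values are computed on the training fold only and then reused for the validation fold. It uses plain Python lists with NaN for missing entries so that it runs without dependencies; the tiny folds and the helper names `column_means` and `fill_missing` are made up for illustration.

```python
import math

def column_means(rows):
    """Mean of each column, ignoring NaN entries."""
    means = []
    for j in range(len(rows[0])):
        present = [r[j] for r in rows if not math.isnan(r[j])]
        means.append(sum(present) / len(present) if present else 0.0)
    return means

def fill_missing(rows, means):
    """Replace NaN entries with the given per-column means."""
    return [[means[j] if math.isnan(v) else v for j, v in enumerate(r)]
            for r in rows]

nan = float("nan")
train_fold = [[1.0, 10.0], [3.0, nan], [nan, 30.0]]
valid_fold = [[nan, 50.0], [100.0, nan]]

means = column_means(train_fold)                # computed on the training fold only
train_filled = fill_missing(train_fold, means)
valid_filled = fill_missing(valid_fold, means)  # validation rows reuse those means
```

With pandas DataFrames the same idea would be to compute `train.mean()` once per fold and pass it to `fillna` for both the training and the validation rows.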

Converting data to NumPy

    X = X.to_numpy()
    y = y.to_numpy().reshape(-1, 1)
    X_test = X_test.to_numpy()

Applying K-fold cross-validation

    kf = KFold(n_splits=5, random_state=42, shuffle=True)
    predictions_array = []
    CV_score_array = []

    for train_index, test_index in kf.split(X):
        X_train, X_valid = X[train_index], X[test_index]
        y_train, y_valid = y[train_index], y[test_index]

        regressor = TabNetRegressor(verbose=0, seed=42)
        regressor.fit(X_train=X_train, y_train=y_train,
                      eval_set=[(X_valid, y_valid)],
                      patience=300, max_epochs=2000,
                      eval_metric=['rmse'])

        # Best validation RMSE of this fold (on the log1p scale)
        CV_score_array.append(regressor.best_cost)
        # Undo the log1p transform to get predictions as prices
        predictions_array.append(np.expm1(regressor.predict(X_test)))

    # Average the test predictions over the five folds
    predictions = np.mean(predictions_array, axis=0)

Output

    Device used : cpu
    Early stopping occured at epoch 1598 with best_epoch = 1298 and best_val_0_rmse = 0.16444
    Best weights from best epoch are automatically used!
    Device used : cpu
    Early stopping occured at epoch 1075 with best_epoch = 775 and best_val_0_rmse = 0.12027
    Best weights from best epoch are automatically used!
    Device used : cpu
    Early stopping occured at epoch 691 with best_epoch = 391 and best_val_0_rmse = 0.16395
    Best weights from best epoch are automatically used!
    Device used : cpu
    Early stopping occured at epoch 679 with best_epoch = 379 and best_val_0_rmse = 0.16833
    Best weights from best epoch are automatically used!
    Device used : cpu
    Early stopping occured at epoch 1283 with best_epoch = 983 and best_val_0_rmse = 0.11103
    Best weights from best epoch are automatically used!

Calculating Average CV score

    print("The CV score is %.5f" % np.mean(CV_score_array, axis=0))

Output

The CV score is 0.15161
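Because the target was transformed with log1p before training, the RMSE reported here is measured on the log scale, so it is effectively a root mean squared logarithmic error on prices rather than an error in currency units. The following sketch shows the relationship with made-up prices and predictions; none of the numbers come from the dataset or from the run above.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error between two equally long sequences."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))

prices = [200000.0, 150000.0, 320000.0]            # made-up sale prices
predicted_prices = [210000.0, 140000.0, 300000.0]  # made-up predictions

# The model is trained and scored on log(1 + price) ...
log_true = [math.log1p(v) for v in prices]
log_pred = [math.log1p(v) for v in predicted_prices]
score = rmse(log_true, log_pred)

# ... and expm1 maps predictions back to the price scale.
recovered = [math.expm1(v) for v in log_pred]
```

A score on this scale reflects relative rather than absolute deviations, so an error of a given percentage counts roughly the same for a cheap house as for an expensive one.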

Conclusion

To sum up, TabNet applies deep learning to tabular data. By using sequential attention to select which features to reason from at each decision step, it spends its learning capacity on the most salient features, which improves learning efficiency and enables interpretability.