Using GPU in Machine Learning

Machine learning has attracted a great deal of attention lately. GPUs (graphics processing units) are specialized processors that can work on massive volumes of data in parallel, which makes them well suited to machine learning workloads. This post explores the advantages of GPUs for machine learning and explains how to get started.

Benefits of using GPU

The following factors make the GPU an effective tool for speeding up machine learning workloads:

Parallel Processing: GPUs execute many operations simultaneously, which makes it possible to parallelize large-scale machine learning methods. As a result, training time for complex models can drop from days to hours or even minutes.

High Memory Bandwidth: GPU memory offers much higher bandwidth than typical CPU main memory, so the GPU's cores can be supplied with data far more quickly. This shortens training times for machine learning models.

Cost-Effective: A single GPU can perform work that would otherwise require many CPUs, which often makes it the cheaper option for a given training workload.

You have a few choices for getting access to dedicated GPUs. You can run machine learning jobs on a local computer that has a dedicated GPU. If you would rather use a cloud-based solution, services such as Google Cloud, Amazon Web Services (AWS), and Microsoft Azure provide GPU instances that are simple to set up and can be used from anywhere.

Setting up a GPU

A few things need to be set up before you can use a GPU for machine learning:

GPU Hardware: You need a GPU to begin. NVIDIA GPUs are the most widely used in the machine learning community because of their strong performance and their support in popular frameworks such as TensorFlow, PyTorch, and Keras. If you don't already have one, cloud providers such as AWS, Google Cloud, and Azure rent out GPU instances.

Drivers & Libraries: After obtaining the hardware, install the appropriate drivers and libraries. For NVIDIA GPUs, the CUDA Toolkit provides the drivers and libraries needed for GPU acceleration, and deep learning frameworks such as TensorFlow also rely on NVIDIA's cuDNN library. You must also install a GPU-enabled build of your chosen machine learning framework.

Verify the setup: After installation, run a short machine learning program and confirm that the GPU is actually being used for the computation.
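One way to carry out the verification step is sketched below. It is a minimal check that assumes an NVIDIA GPU and TensorFlow as the framework; the helper names `driver_tool_available` and `tensorflow_gpus` are illustrative and not part of any library.

```python
import importlib.util
import shutil


def driver_tool_available():
    """Return True if the NVIDIA driver's nvidia-smi tool is on the PATH."""
    return shutil.which("nvidia-smi") is not None


def tensorflow_gpus():
    """Return the names of the GPUs TensorFlow can see (empty if none)."""
    # Assumption: TensorFlow is the framework in use. If it is not
    # installed, report no GPUs instead of raising an ImportError.
    if importlib.util.find_spec("tensorflow") is None:
        return []
    import tensorflow as tf
    return [device.name for device in tf.config.list_physical_devices("GPU")]


if __name__ == "__main__":
    print("nvidia-smi found:", driver_tool_available())
    print("GPUs visible to TensorFlow:", tensorflow_gpus())
```

An empty list from `tensorflow_gpus()` suggests that the driver, the CUDA libraries, or the framework build still needs attention.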

Using GPU for Machine Learning

Once the GPU is configured, it is ready for machine learning. The typical workflow has the following steps:

Importing necessary libraries: To use the GPU for machine learning, import libraries that support GPU acceleration. With TensorFlow, for example, the standard tensorflow package has included GPU support since version 2.1, so the separate tensorflow-gpu package is deprecated and no longer needed.

Defining the model: After importing the required libraries, define your machine learning model. The model can be a neural network, a decision tree, or any other machine learning algorithm that supports GPU acceleration.

Compiling the model: After building the model, compile it by specifying the optimizer, the loss function, and the metrics. The input shape, the output shape, and the number of hidden layers are not set at this stage; they are fixed earlier, when the model is defined.

Fitting the model: Once the model has been compiled, fit it to your data by choosing the batch size, the number of epochs, and the validation split. Keras's fit method has no "use_gpu" option. TensorFlow runs operations on a visible GPU by default, and you can pin the computation to a specific device explicitly with a tf.device context manager, as the Colab sample in this post does.

Evaluating the model: After the model has been fitted, assess its performance by calculating metrics such as accuracy, precision, recall, and F1 score. Plotting libraries such as matplotlib and seaborn can be used to visualize the results.
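The evaluation step can be illustrated without any framework. The sketch below computes accuracy and macro-averaged precision, recall, and F1 from true and predicted labels. The function name `evaluate_predictions` and the sample labels are made up for illustration; in practice a library such as scikit-learn would normally do this work.

```python
def evaluate_predictions(y_true, y_pred):
    """Return accuracy and macro-averaged precision, recall and F1."""
    pairs = list(zip(y_true, y_pred))
    labels = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1_scores = [], [], []

    for label in labels:
        # Count true positives, false positives and false negatives
        # while treating `label` as the positive class.
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)

        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)

        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)

    n = len(labels)
    return {
        "accuracy": sum(1 for t, p in pairs if t == p) / len(pairs),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1_scores) / n,
    }


# Hypothetical labels for a two-class problem.
report = evaluate_predictions([0, 0, 1, 1], [0, 1, 1, 1])
for name, value in report.items():
    print(f"{name}: {value:.4f}")
```

Macro averaging gives every class equal weight, which is one common choice for a multi-class dataset; other averaging schemes would give different numbers.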

Code sample of using GPU in Google Colab

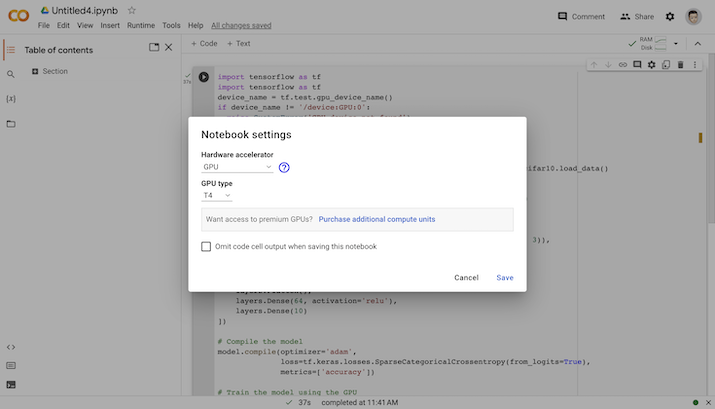

Google Colab lets you choose between a CPU and a GPU for training a machine learning model. Open the Runtime menu, select "Change runtime type", and choose GPU as the hardware accelerator.

Choosing GPU in Google Colab

GPU in Machine Learning

```python
import tensorflow as tf
from tensorflow.keras import datasets, layers, models

# Load the CIFAR-10 dataset
(train_images, train_labels), (test_images, test_labels) = datasets.cifar10.load_data()

# Normalize pixel values to be between 0 and 1
train_images, test_images = train_images / 255.0, test_images / 255.0

# Define the model architecture
model = models.Sequential([
    layers.Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.Flatten(),
    layers.Dense(64, activation='relu'),
    layers.Dense(10)
])

# Compile the model
model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
              metrics=['accuracy'])

# Train the model using the GPU
with tf.device('/GPU:0'):
    history = model.fit(train_images, train_labels, epochs=10,
                        validation_data=(test_images, test_labels))
```

Output

```
Downloading data from https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz
170498071/170498071 [==============================] - 5s 0us/step
Epoch 1/10
1563/1563 [==============================] - 74s 46ms/step - loss: 1.5585 - accuracy: 0.4281 - val_loss: 1.3461 - val_accuracy: 0.5172
Epoch 2/10
1563/1563 [==============================] - 73s 47ms/step - loss: 1.1850 - accuracy: 0.5775 - val_loss: 1.1289 - val_accuracy: 0.5985
Epoch 3/10
1563/1563 [==============================] - 72s 46ms/step - loss: 1.0245 - accuracy: 0.6394 - val_loss: 0.9837 - val_accuracy: 0.6557
Epoch 4/10
1563/1563 [==============================] - 73s 47ms/step - loss: 0.9284 - accuracy: 0.6741 - val_loss: 0.9478 - val_accuracy: 0.6640
Epoch 5/10
1563/1563 [==============================] - 72s 46ms/step - loss: 0.8537 - accuracy: 0.7000 - val_loss: 0.9099 - val_accuracy: 0.6844
Epoch 6/10
1563/1563 [==============================] - 75s 48ms/step - loss: 0.7992 - accuracy: 0.7182 - val_loss: 0.8807 - val_accuracy: 0.6963
Epoch 7/10
1563/1563 [==============================] - 73s 47ms/step - loss: 0.7471 - accuracy: 0.7381 - val_loss: 0.8739 - val_accuracy: 0.7065
Epoch 8/10
1563/1563 [==============================] - 76s 48ms/step - loss: 0.7082 - accuracy: 0.7491 - val_loss: 0.8840 - val_accuracy: 0.6977
Epoch 9/10
1563/1563 [==============================] - 72s 46ms/step - loss: 0.6689 - accuracy: 0.7640 - val_loss: 0.8708 - val_accuracy: 0.7096
Epoch 10/10
1563/1563 [==============================] - 75s 48ms/step - loss: 0.6348 - accuracy: 0.7779 - val_loss: 0.8644 - val_accuracy: 0.7147
```
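The figure 1563 in the progress output is the number of batches per epoch. The CIFAR-10 training set has 50,000 images and `model.fit` uses a batch size of 32 when none is given, so the count can be checked with a short calculation (the helper name `steps_per_epoch` is illustrative):

```python
import math


def steps_per_epoch(num_samples, batch_size=32):
    """Number of batches needed to cover the dataset once.

    The last batch may be smaller than batch_size, hence the ceiling.
    """
    return math.ceil(num_samples / batch_size)


print(steps_per_epoch(50_000))  # 1563
```

Passing a larger `batch_size` to `model.fit` would lower this number, and larger batches are a common way to keep a GPU busier, although the best value depends on the model and the available GPU memory.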

The `with tf.device('/GPU:0')` context manager pins the training computations to the first GPU.
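Hard-coding `/GPU:0` assumes that a GPU is present. A possible variation is to choose the device string at run time and fall back to the CPU. In the sketch below, `choose_device` is a hypothetical helper rather than a TensorFlow function, and the TensorFlow part runs only if the library is installed.

```python
import importlib.util


def choose_device(gpu_names):
    """Return the device string to train on, preferring the first GPU."""
    return "/GPU:0" if gpu_names else "/CPU:0"


if importlib.util.find_spec("tensorflow") is not None:
    import tensorflow as tf

    # Ask TensorFlow which GPUs it can see, then pick a device string.
    gpus = [d.name for d in tf.config.list_physical_devices("GPU")]
    with tf.device(choose_device(gpus)):
        # Operations executed here are placed on the chosen device.
        product = tf.matmul(tf.ones((2, 2)), tf.ones((2, 2)))
    print("Ran on:", product.device)
```

In the Colab sample, `with tf.device(choose_device(gpus)):` could take the place of the hard-coded device string.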

Conclusion

Using GPUs for machine learning can considerably speed up model training, cutting training time from days to hours or even minutes. Before you use a GPU for machine learning, configure it by installing the required drivers and libraries and then verifying the setup.