Spaceship Titanic Project using Machine Learning in Python

The original Titanic project in machine learning aims to predict whether a passenger on the Titanic survived. The Spaceship Titanic project is a variation on that task.

In this problem, a spaceship is carrying passengers on a trip through space. After a collision, some of the passengers are transported to another dimension or planet. This does not happen at random, so we will use a machine learning technique in Python to predict which passengers are transported and which are not.

Algorithm

Step 1: Import libraries such as numpy, pandas, matplotlib, seaborn and sklearn, and load the dataset as a pandas DataFrame.

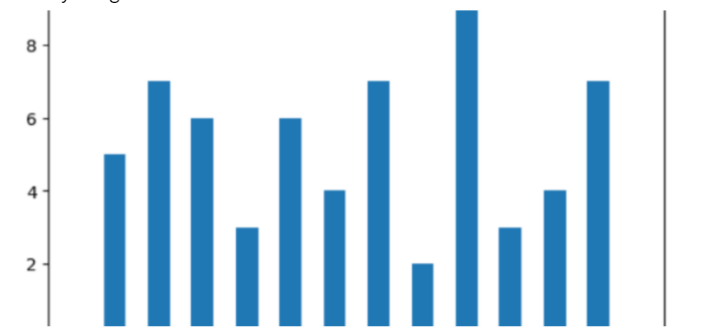

Step 2: To clean the data, first plot a bar chart of the null counts per column. If null values are found, look at the relationships between features and use them to impute the missing values. Where needed, check for outliers before imputing.

Step 3: Check for null values again and fill the remaining ones with a naive value: the mode for categorical columns and the mean for numeric ones. After this step, the total null count should be 0, meaning all null values have been handled.

Step 4: Extract, reduce, merge or add features to derive the most significant information. This is done by repeatedly comparing features and acting on what the comparisons show.

Step 5: To find further relationships, use Exploratory Data Analysis (EDA), which relies on visual tools to show how features relate to each other. We will make a pie chart, a bar graph and a heatmap to check whether any features are highly correlated.

Step 6: Split the dataset into training and validation sets and normalize the data using StandardScaler.

Step 7: Train several machine learning models on this data and check which one fits best. We use LogisticRegression, XGBClassifier and SVC.

Step 8: Choose the model with the best performance.

Step 9: Use the chosen model to plot the confusion matrix and print the classification report for the validation data.
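Step 6 can be sketched in isolation as follows. This is a minimal illustration on synthetic data: the array `X` and the labels `y` are invented for the example and are not taken from the Spaceship Titanic dataset. It shows the scaler being fitted on the training split only and then reused on the validation split.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data (assumption: not the real dataset)
rng = np.random.default_rng(0)
X = rng.normal(loc=50.0, scale=10.0, size=(200, 3))
y = rng.integers(0, 2, size=200)

X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.1, random_state=22
)

scaler = StandardScaler()
X_train_s = scaler.fit_transform(X_train)  # learn mean and std from the training split only
X_val_s = scaler.transform(X_val)          # reuse the training statistics

print(X_train_s.mean(axis=0))  # approximately 0 for every column
print(X_train_s.std(axis=0))   # approximately 1 for every column
```

Fitting the scaler on the training split alone keeps information about the validation rows out of the preprocessing step.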

Example

In this example, we take a Spaceship Titanic dataset and perform the steps required to predict whether a person will be transported from the spaceship to a different planet or dimension. Note that we have not used all the rows, because the full dataset is large, but you can use as many rows as you wish.

#part 1

#--------------------------------------------------------------------

#setting up libraries and dataset

#Import the required libraries

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sb

import sklearn

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import LabelEncoder, StandardScaler

from sklearn import metrics

from sklearn.svm import SVC

from xgboost import XGBClassifier

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import ConfusionMatrixDisplay

from sklearn.metrics import confusion_matrix

import warnings

warnings.filterwarnings('ignore')

#load and print the dataset

df = pd.read_csv('titanic_dataset.csv')

df.head()

#print other information about the dataset

df.shape

df.info()

df.describe()

#part 2

#--------------------------------------------------------------------

#preprocessing the data

#find the null values

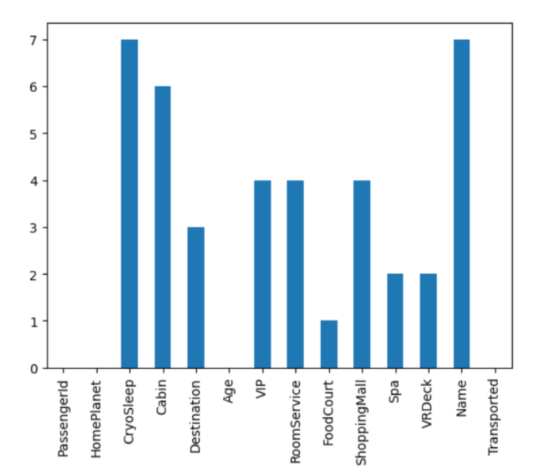

df.isnull().sum().plot.bar()

plt.show()

#substitute the null values as needed

col = df.loc[:,'RoomService':'VRDeck'].columns

df.groupby('VIP')[col].mean()

df.groupby('CryoSleep')[col].mean()

temp = df['CryoSleep'] == True

df.loc[temp, col] = 0.0

for c in col:
    for val in [True, False]:
        temp = df['VIP'] == val
        # mean of column c within this VIP group
        k = df.loc[temp, c].mean()
        df.loc[temp, c] = df.loc[temp, c].fillna(k)

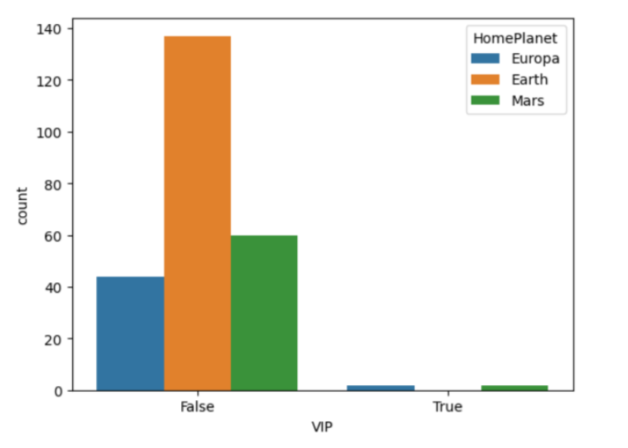

#check relationship bw VIP and HomePlanet feature

sb.countplot(data=df, x='VIP', hue='HomePlanet')

plt.show()

col = 'HomePlanet'

temp = df['VIP'] == False

df.loc[temp, col] = df.loc[temp, col].fillna('Earth')

temp = df['VIP'] == True

df.loc[temp, col] = df.loc[temp, col].fillna('Europa')

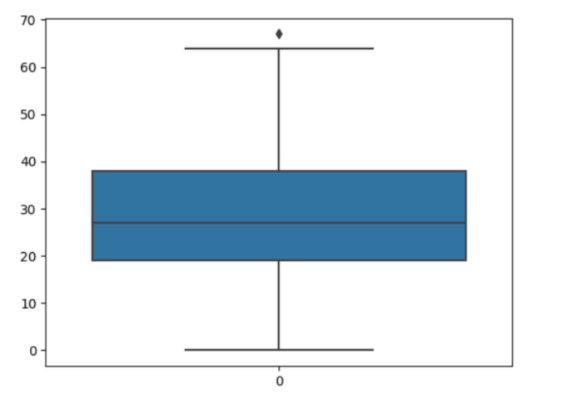

#check for outliers

sb.boxplot(df['Age'])

plt.show()

#exclude outliers while substituting null values

temp = df[df['Age'] < 61]['Age'].mean()

df['Age'] = df['Age'].fillna(temp)

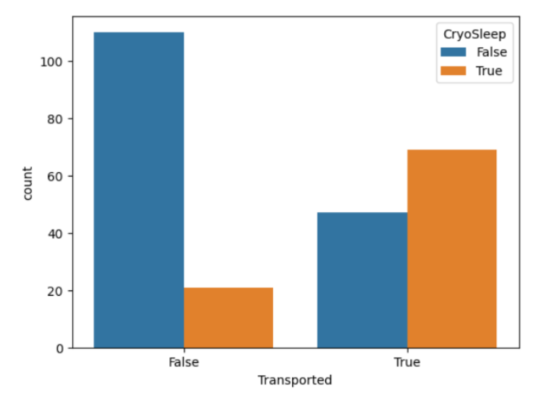

#check relationship between Transported and CryoSleep

sb.countplot(data=df, x='Transported', hue='CryoSleep')

plt.show()

#check for the null values again

df.isnull().sum().plot.bar()

plt.show()

#fill them all with the naive method

for col in df.columns:
    if df[col].isnull().sum() == 0:
        continue
    if df[col].dtype == object or df[col].dtype == bool:
        df[col] = df[col].fillna(df[col].mode()[0])
    else:
        df[col] = df[col].fillna(df[col].mean())

#this should return 0, meaning no null values are left

df.isnull().sum().sum()

#part 3

#--------------------------------------------------------------------

#feature engineering

#passenger id and room no represent the same kind of information

new = df["PassengerId"].str.split("_", n=1, expand=True)

df["RoomNo"] = new[0].astype(int)

df["PassengerNo"] = new[1].astype(int)

df.drop(['PassengerId', 'Name'], axis=1, inplace=True)

#replace PassengerNo with the number of passengers sharing the same RoomNo
data = df['RoomNo']
for i in range(df.shape[0]):
    temp = data == data[i]
    # use .loc to avoid chained assignment
    df.loc[i, 'PassengerNo'] = temp.sum()

#removing roomno

df.drop(['RoomNo'], axis=1, inplace=True)

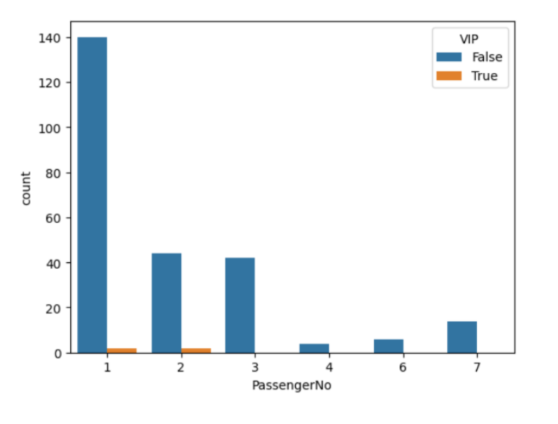

sb.countplot(data=df, x='PassengerNo', hue='VIP')

plt.show()

#not much relation in VIP sharing a room

#split Cabin into its three parts
new = df["Cabin"].str.split("/", n=2, expand=True)
df["F1"] = new[0]
df["F2"] = new[1].astype(int)
df["F3"] = new[2]
df.drop(['Cabin'], axis=1, inplace=True)

#combining all expenses

df['LeasureBill'] = (df['RoomService'] + df['FoodCourt']
                     + df['ShoppingMall'] + df['Spa'] + df['VRDeck'])

#part 4

#--------------------------------------------------------------------

#EDA

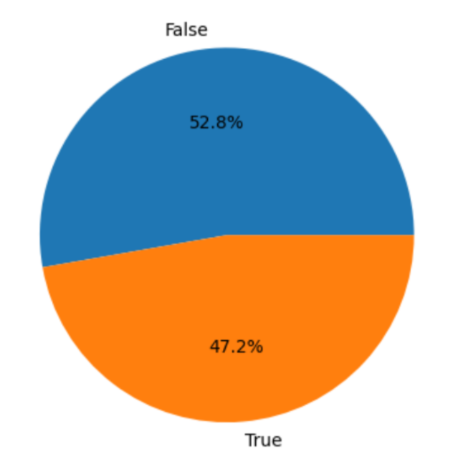

#checking if the data is balanced

x = df['Transported'].value_counts()

plt.pie(x.values, labels=x.index, autopct='%1.1f%%')

plt.show()

#relation bw VIP and leasureBill

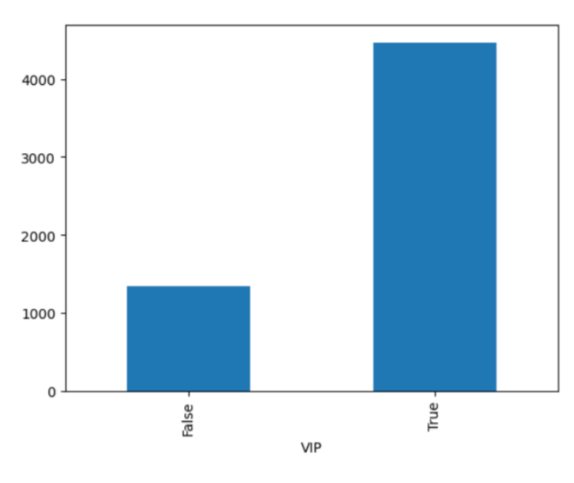

df.groupby('VIP')['LeasureBill'].mean().plot.bar()

plt.show()

#encoding and binary conversion

for col in df.columns:
    if df[col].dtype == object:
        le = LabelEncoder()
        df[col] = le.fit_transform(df[col])
    if df[col].dtype == 'bool':
        df[col] = df[col].astype(int)

df.head()

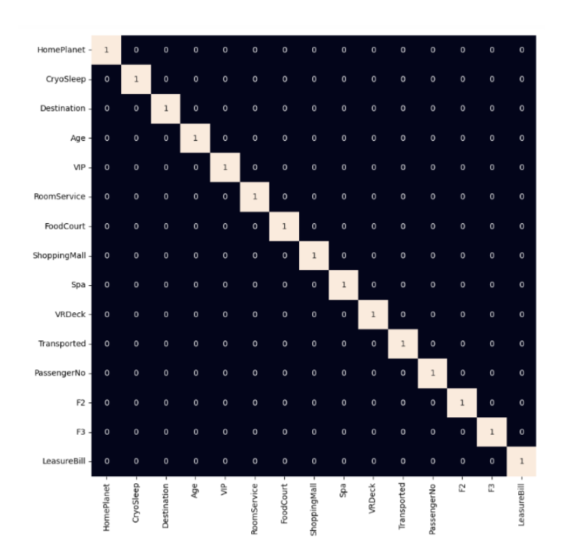

#checking correlated features with a heatmap

plt.figure(figsize=(10,10))

sb.heatmap(df.corr() > 0.8, annot=True, cbar=False)

plt.show()

#part 5

#--------------------------------------------------------------------

#model training

#split the data

features = df.drop(['Transported'], axis=1)

target = df.Transported

X_train, X_val, Y_train, Y_val = train_test_split(features, target,
                                                  test_size=0.1,
                                                  random_state=22)

X_train.shape, X_val.shape

#normalize the data

scaler = StandardScaler()

X_train = scaler.fit_transform(X_train)

X_val = scaler.transform(X_val)

#check fitting with various ML models

#roc_auc_score returns the ROC AUC, which the prints below label as accuracy
from sklearn.metrics import roc_auc_score as ras
models = [LogisticRegression(), XGBClassifier(),
          SVC(kernel='rbf', probability=True)]
for i in range(len(models)):
    models[i].fit(X_train, Y_train)
    print(f'{models[i]} : ')
    train_preds = models[i].predict_proba(X_train)[:, 1]
    print('Training Accuracy : ', ras(Y_train, train_preds))
    val_preds = models[i].predict_proba(X_val)[:, 1]
    print('Validation Accuracy : ', ras(Y_val, val_preds))
    print()

#part 6

#--------------------------------------------------------------------

#model evaluation

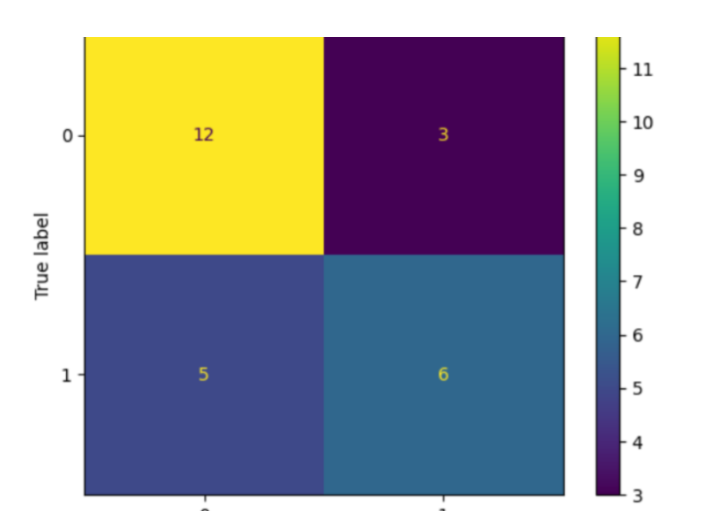

#plot confusion matrix using the chosen model (models[1] is the XGBClassifier)

y_pred = models[1].predict(X_val)

cm = confusion_matrix(Y_val, y_pred)

disp = ConfusionMatrixDisplay(confusion_matrix=cm)

disp.plot()

plt.show()

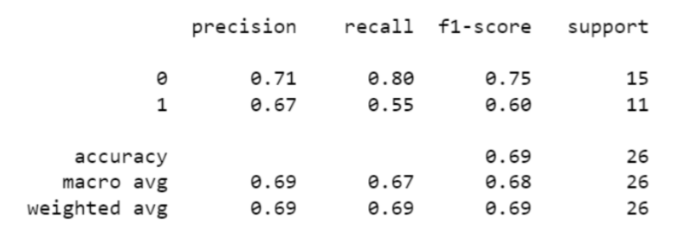

print(metrics.classification_report(Y_val, models[1].predict(X_val)))

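Once the libraries are imported, the dataset is loaded into a DataFrame and processed to handle missing values and outliers. Null values are identified, and a bar plot shows the count of null values in each column. Missing spending values are set to 0 for passengers in cryosleep and otherwise filled with the mean of the passenger's VIP group, missing HomePlanet values are filled according to VIP status, and missing ages are filled with the mean age computed without outliers. The relationship between Transported and CryoSleep is also plotted.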
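The group-wise imputation described above can be illustrated on a tiny table. This is only a sketch: the DataFrame `toy` and its values are invented, and it uses `groupby(...).transform("mean")` as a compact alternative to looping over the groups.

```python
import numpy as np
import pandas as pd

# Toy data (assumption: values are invented for illustration)
toy = pd.DataFrame({
    "VIP": [True, True, False, False, False],
    "Spa": [100.0, np.nan, 10.0, 30.0, np.nan],
})

# For every row, the mean Spa value of that row's VIP group
group_mean = toy.groupby("VIP")["Spa"].transform("mean")

# Fill each missing Spa value with the mean of its own group
toy["Spa"] = toy["Spa"].fillna(group_mean)

print(toy)
```

Rows whose VIP value is itself missing are not covered by this sketch; in the script above they are handled by the later naive fill.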

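The PassengerId column is split into two columns, RoomNo and PassengerNo, whose values are converted to integers. PassengerNo is then replaced by the number of passengers sharing the same RoomNo, the Cabin column is split into three parts (F1, F2 and F3), and the five expense columns are summed into LeasureBill. The balance of the target variable, Transported, is plotted as a pie chart, and the relationship between VIP and LeasureBill is displayed as a bar chart.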
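As a small illustration of the ID split and the group count, the following sketch works on invented IDs. The column name `GroupSize` is hypothetical (the script above stores the count in PassengerNo instead), and `transform("count")` stands in for the explicit loop.

```python
import pandas as pd

# Toy IDs in a "room_passenger" format (assumption: invented values)
toy = pd.DataFrame({"PassengerId": ["0001_01", "0002_01", "0002_02", "0003_01"]})

# Split on the first underscore into two new columns
parts = toy["PassengerId"].str.split("_", n=1, expand=True)
toy["RoomNo"] = parts[0].astype(int)
toy["PassengerNo"] = parts[1].astype(int)

# Number of passengers that share each RoomNo (hypothetical column name)
toy["GroupSize"] = toy.groupby("RoomNo")["RoomNo"].transform("count")

print(toy)
```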

Next, label encoding and boolean-to-integer conversion turn the categorical columns into numeric values. A heatmap then highlights the feature pairs whose correlation exceeds 0.8.
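The encoding step and the correlation threshold can be tried on a few invented rows. This sketch assumes a toy DataFrame and uses the pandas dtype helpers rather than comparing against `object`, which is a substitution made here for robustness and not the check used in the script.

```python
import pandas as pd
from pandas.api.types import is_bool_dtype, is_numeric_dtype
from sklearn.preprocessing import LabelEncoder

# Toy data (assumption: invented values)
toy = pd.DataFrame({
    "HomePlanet": ["Earth", "Mars", "Europa", "Earth"],
    "CryoSleep": [True, False, False, True],
    "Spa": [0.0, 120.0, 300.0, 0.0],
})

for name in toy.columns:
    if is_bool_dtype(toy[name]):
        # booleans become 0/1
        toy[name] = toy[name].astype(int)
    elif not is_numeric_dtype(toy[name]):
        # strings become integer codes, assigned in sorted order of the labels
        toy[name] = LabelEncoder().fit_transform(toy[name])

# Boolean mask of feature pairs whose correlation exceeds 0.8
high_corr = toy.corr() > 0.8

print(toy)
print(high_corr)
```

Note that label encoding imposes an arbitrary order on the categories, so correlations involving encoded columns should be read with care.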

Each model is then trained on the training set, and the training and validation scores are computed with the roc_auc_score function, so the values printed as "accuracy" are ROC AUC scores. Finally, a confusion matrix is plotted and a classification report (precision, recall and F1 score) is printed for the chosen model.
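ROC AUC and accuracy measure different things, which a four-sample example makes concrete. The labels and probabilities below are invented for illustration, and the 0.5 threshold is an assumption of this sketch.

```python
from sklearn.metrics import accuracy_score, roc_auc_score

# Invented labels and predicted probabilities (assumption: illustration only)
y_true = [0, 0, 1, 1]
y_prob = [0.1, 0.6, 0.4, 0.9]

# ROC AUC is computed from the ranking of the probabilities
auc = roc_auc_score(y_true, y_prob)

# Accuracy needs hard labels, obtained here with a 0.5 threshold
y_hat = [int(p >= 0.5) for p in y_prob]
acc = accuracy_score(y_true, y_hat)

print(auc, acc)  # 0.75 0.5
```

Three of the four positive/negative pairs are ranked correctly, giving an AUC of 0.75, while only two of the four thresholded predictions are correct, giving an accuracy of 0.5.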

Output

    <class 'pandas.core.frame.DataFrame'>
    RangeIndex: 254 entries, 0 to 253
    Data columns (total 14 columns):
     #   Column        Non-Null Count  Dtype
    ---  ------        --------------  -----
     0   PassengerId   254 non-null    object
     1   HomePlanet    249 non-null    object
     2   CryoSleep     247 non-null    object
     3   Cabin         248 non-null    object
     4   Destination   251 non-null    object
     5   Age           248 non-null    float64
     6   VIP           250 non-null    object
     7   RoomService   247 non-null    float64
     8   FoodCourt     252 non-null    float64
     9   ShoppingMall  242 non-null    float64
     10  Spa           251 non-null    float64
     11  VRDeck        250 non-null    float64
     12  Name          247 non-null    object
     13  Transported   254 non-null    bool
    dtypes: bool(1), float64(6), object(7)
    memory usage: 26.2+ KB

LogisticRegression() :

Training Accuracy : 0.8922982036851439

Validation Accuracy : 0.8060606060606061

XGBClassifier() :

Training Accuracy : 1.0

Validation Accuracy : 0.7454545454545454

SVC(probability=True) :

Training Accuracy : 0.9266825996453628

Validation Accuracy : 0.7878787878787878

From the confusion matrix, you can conclude that the model classifies most positive samples correctly, but it is less reliable on negative samples.
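To put numbers on this kind of reading, per-class recall can be computed directly from a 2x2 confusion matrix. The matrix below is hypothetical and is not the result obtained in this article; it only follows scikit-learn's layout, where rows are true labels and columns are predicted labels.

```python
import numpy as np

# Hypothetical matrix in scikit-learn's layout: [[TN, FP], [FN, TP]]
cm = np.array([[6, 5],
               [2, 13]])
tn, fp, fn, tp = cm.ravel()

recall_pos = tp / (tp + fn)     # share of actual positives predicted as positive
recall_neg = tn / (tn + fp)     # share of actual negatives predicted as negative
precision_pos = tp / (tp + fp)  # share of predicted positives that are correct

print(recall_pos, recall_neg, precision_pos)
```

A large gap between `recall_pos` and `recall_neg` is what the statement above describes: positives are mostly found, while many negatives are misclassified as positive.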

Conclusion

For this Spaceship Titanic project, you can also try other models such as K-nearest neighbors (KNN), Random Forest (RF) and Naive Bayes, or a support vector machine (SVM) with a different kernel than the RBF kernel used here. We also preprocessed the data ourselves. Alternatively, you can start from an already preprocessed dataset available on the internet, which saves a number of steps and makes the task easier.
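Trying the alternative models could look like the sketch below. It runs on synthetic data from `make_classification` as a stand-in for the prepared features, and the hyperparameters (`n_neighbors=5`, `n_estimators=100`) are assumptions chosen for the example, not tuned values.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier

# Synthetic binary classification data (assumption: stand-in for the real features)
X, y = make_classification(n_samples=300, n_features=8, random_state=22)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.2, random_state=22
)

candidates = {
    "KNN": KNeighborsClassifier(n_neighbors=5),
    "RandomForest": RandomForestClassifier(n_estimators=100, random_state=22),
    "NaiveBayes": GaussianNB(),
}

scores = {}
for name, model in candidates.items():
    model.fit(X_train, y_train)
    # probability of the positive class on the validation split
    val_prob = model.predict_proba(X_val)[:, 1]
    scores[name] = roc_auc_score(y_val, val_prob)
    print(f"{name}: validation ROC AUC = {scores[name]:.3f}")
```

On the real dataset, the same loop would take the scaled `X_train` and `X_val` arrays produced in part 5 instead of the synthetic data.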