Unicodes in computer network

Unicode is the information technology standard for the consistent encoding, representation, and handling of text expressed in the world's writing systems. The standard is maintained by the Unicode Consortium, and its first version was published in 1991. It includes letters, symbols, arrows, and other characters. The characters most used in English are represented by the ASCII subset of Unicode. Unicode as a whole is a far more thorough encoding that can represent characters from many languages and scripts, including mathematical symbols and other specialist characters.

The Unicode Standard is maintained by the Unicode Consortium and is kept synchronized with the international standard ISO/IEC 10646.

Definition

Unicode is a universal character encoding standard developed by the Unicode Consortium, and it offers a very large character set. Unicode makes software localization easier and improves multilingual text processing. It extends ASCII and solves ASCII's main limitation, the small number of characters it can represent. Unicode assigns each character a code point in the range U+0000 to U+10FFFF. It does not fix a single byte width, so several encodings of these code points are offered; UTF-8, UTF-16, and UTF-32 each use at most 4 bytes per character. The most significant family of encodings is UTF, which stands for Unicode Transformation Format. Unicode also provides the rules, algorithms, and character properties needed to ensure compatibility between various platforms and languages.

There are four main types: UTF-7, UTF-8, UTF-16, and UTF-32.

UTF-8 is the default encoding in many modern programming languages and tools, although not in all of them.

UTF-7

UTF-7 represents Unicode text using only 7-bit ASCII characters.

ASCII letters and digits are written as they are, and other characters are written as ASCII escape sequences. It was designed for emails and other communications whose protocols are limited to 7-bit data.
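As a rough illustration of the 7-bit property, the sketch below uses Python's built-in `utf-7` codec. The sample text is an assumption chosen only because it contains one non-ASCII character.

```python
# Hypothetical sample text: "\u20ac" is the euro sign, which is outside ASCII.
text = "Price: 5 \u20ac"

encoded = text.encode("utf-7")    # Python's built-in "utf-7" codec
decoded = encoded.decode("utf-7")

# Every byte of the encoded form fits in 7 bits.
all_seven_bit = all(byte < 0x80 for byte in encoded)

print(encoded)
print(all_seven_bit, decoded == text)
```

The non-ASCII character is carried as a short run of ASCII characters, and decoding restores the original text.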

UTF-8

UTF-8 is the most frequently used encoding. It is a variable-width encoding that is backward compatible with ASCII: it uses 1 byte for ASCII characters such as English letters and symbols, 2 bytes for most other European and Middle Eastern letters and symbols, 3 bytes for most Asian letters, and 4 bytes for the remaining characters, such as emojis. UTF-8 is mostly used in web development, standard XML files, UNIX and Linux files, and many more.
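The byte counts described above can be checked with a short sketch. The sample characters are assumptions picked to give one representative for each length.

```python
# Hypothetical sample characters, one per UTF-8 length class.
samples = {
    "A": "Latin letter (ASCII)",
    "\u00e9": "Latin letter with accent",
    "\u0639": "Arabic letter",
    "\u4e2d": "CJK ideograph",
    "\U0001F600": "emoji",
}

# Number of bytes each character occupies once encoded as UTF-8.
utf8_lengths = {ch: len(ch.encode("utf-8")) for ch in samples}

for ch, description in samples.items():
    print(f"U+{ord(ch):04X} {description}: {utf8_lengths[ch]} byte(s)")
```

The printed lengths grow from 1 to 4 bytes as the code point value increases.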

UTF-16

UTF-16 uses 2 bytes for the most common characters and 4 bytes (a surrogate pair) to express additional characters.

It is used for internal text processing in programming languages like Java and in platforms like Microsoft Windows. It is an extended version of UCS-2.

It is a global encoding standard.

It supports multiple script environments.

It is efficient in space and memory, usually needing half the bytes of UTF-32.

It increases the cross-platform interoperability of data and code.
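To make the 2-byte and 4-byte cases concrete, here is a minimal sketch that splits a character into its 16-bit code units. The helper name `utf16_units` and the sample characters are assumptions for illustration.

```python
import struct

def utf16_units(ch):
    """Return the 16-bit code units of ch in UTF-16 (hypothetical helper)."""
    data = ch.encode("utf-16-be")    # big-endian, no byte order mark
    return list(struct.unpack(f">{len(data) // 2}H", data))

bmp = utf16_units("\u4e2d")          # a common character: one code unit
extra = utf16_units("\U0001F600")    # an additional character: two code units

print([hex(unit) for unit in bmp])
print([hex(unit) for unit in extra])
```

The second result is a surrogate pair: a high surrogate in the range 0xD800 to 0xDBFF followed by a low surrogate in the range 0xDC00 to 0xDFFF.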

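UTF-32

UTF-32 is a fixed-width encoding: every character takes exactly 4 bytes, so the position of a character can be found by byte counting alone.

For instance, it is used on UNIX systems, where the C wide-character type is typically 32 bits wide.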
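Because every character occupies 4 bytes, the n-th character starts at byte offset 4 × n. The sketch below shows this; the helper name `code_point_at` and the sample string are assumptions.

```python
# Hypothetical sample: an ASCII letter, a CJK ideograph, and an emoji.
text = "A\u4e2d\U0001F600"
data = text.encode("utf-32-be")      # big-endian, no byte order mark

def code_point_at(buffer, index):
    """Read the code point at a character index by plain byte arithmetic."""
    start = 4 * index
    return int.from_bytes(buffer[start:start + 4], "big")

third = code_point_at(data, 2)
print(len(data), f"U+{third:04X}")
```

No scanning of earlier characters is needed, which is the trade-off UTF-32 makes in exchange for its larger size.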

Example of Unicode

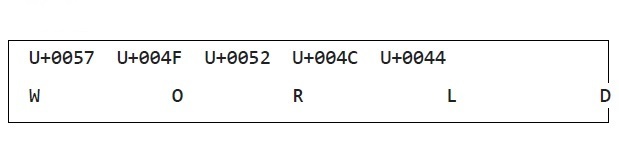

World ?

The code point of each character is written as U+ followed by its hexadecimal value, for example U+0041 for the letter A.
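A small sketch of this notation, using the word "World" from the example. The helper name `u_plus` is an assumption.

```python
def u_plus(ch):
    """Format a character's code point in U+ notation (hypothetical helper)."""
    return f"U+{ord(ch):04X}"        # at least four hexadecimal digits

labels = [u_plus(ch) for ch in "World"]
print(labels)

# The reverse direction: from a code point value back to the character.
print(chr(0x41))
```

`ord` gives the code point of a character and `chr` turns a code point back into a character.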

Importance of Unicode

Unicode lets a single application's code operate on multiple platforms and in multiple languages without having to be completely rewritten.

Unicode is a superset of most other character sets, so text can be converted between Unicode and other standard encodings.

It is commonly supported in programming languages.

The conversion process is quick and causes little data loss.

Unicode and ASCII code

ASCII is an alphanumeric code that represents numbers, letters, and symbols. ASCII is the abbreviation of American Standard Code for Information Interchange. Even though it is a seven-bit code, each character is usually stored in eight bits for convenience.

As a 7-bit code it supports 128 characters, and 8-bit extensions of ASCII support 256 characters.

ASCII text needs less memory than the same text in UTF-16 or UTF-32, and the same amount as in UTF-8.

The main problem with ASCII is that even an 8-bit code can represent a maximum of 256 characters, which is not enough for the world's writing systems.
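The character limits follow directly from the bit widths, and the limitation shows up as soon as text leaves the ASCII range. A minimal sketch, with the helper name `is_ascii_encodable` and the sample strings as assumptions:

```python
# Number of distinct values for 7-bit and 8-bit codes.
seven_bit_values = 2 ** 7
eight_bit_values = 2 ** 8

def is_ascii_encodable(text):
    """Return True if every character of text exists in ASCII."""
    try:
        text.encode("ascii")
        return True
    except UnicodeEncodeError:
        return False

print(seven_bit_values, eight_bit_values)
print(is_ascii_encodable("Hello"))
print(is_ascii_encodable("\u0928\u092e\u0938\u094d\u0924\u0947"))  # Hindi greeting

# ASCII text is byte-for-byte identical when encoded as UTF-8.
print("Hello".encode("ascii") == "Hello".encode("utf-8"))
```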

Unicode and ISCII code

The Indian Script Code for Information Interchange (ISCII) is a coding system used to represent the various Indian writing systems. ISCII uses 8-bit encoding. The lower 128 code points are plain ASCII, while the upper 128 code points are specific to ISCII. It covers the major Indian scripts derived from Brahmi, such as Devanagari, which is used for Sanskrit.

ISCII was adopted as a standard by the Bureau of Indian Standards in 1991 (IS 13194:1991).

Disadvantages of Unicode

Handling the full variety of characters requires more memory than a single-byte code.

UTF-16 and UTF-32 require a large amount of memory.

In the variable-width encodings, the number of bytes per character increases for characters outside the basic Latin alphabet.
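The memory cost can be compared directly by encoding the same text in each form. In this sketch the helper name `encoded_sizes` and both sample strings are assumptions; the little-endian codec names are used so that no byte order mark is counted.

```python
def encoded_sizes(text):
    """Return the encoded length in bytes of text for three encodings."""
    encodings = ("utf-8", "utf-16-le", "utf-32-le")
    return {name: len(text.encode(name)) for name in encodings}

english = encoded_sizes("Hello")                              # 5 characters
hindi = encoded_sizes("\u0928\u092e\u0938\u094d\u0924\u0947")  # 6 characters

print(english)
print(hindi)
```

For the English sample UTF-8 is the smallest, while for the Hindi sample UTF-16 is smaller than UTF-8, so the cheapest encoding depends on the script being stored.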

Conclusion

In this article, we have explained Unicode in computer networks. Unicode is an international character encoding standard that makes text readable and compatible across various devices. ASCII characters are not enough to cover all languages, so to counter this problem the Unicode Consortium introduced the Unicode standard.