Understanding Activation Function in Machine Learning

Activation functions are something like the secret ingredient of neural networks in machine learning. They are mathematical functions that decide, based on the input a neuron receives, whether (and how strongly) that neuron should fire. The ability of neural networks to learn and represent intricate patterns in data depends critically on activation functions. These functions introduce non-linearity into the network, which lets it model complex relationships and interactions between inputs. Simply put, activation functions enable neural networks to discover hidden patterns, make predictions, and classify data correctly. In this post, we explain what activation functions are and look at the most common ones used in machine learning.

What is an Activation Function?

An activation function is an essential part of a neural network: it determines whether, and how strongly, a neuron is activated based on the input it receives. Its main purpose is to introduce non-linearity into the network. In a purely linear model, where inputs are only scaled and added, the network's output would be a linear combination of its inputs. This stays true no matter how many layers are stacked, because a composition of linear functions is itself linear, so extra depth would add no expressive power.

Activation functions, on the other hand, give neural networks the capacity to learn and represent complicated functions that simple linear relationships cannot model. Because of their non-linear character, the network can identify complex patterns and relationships in the data. This allows it to handle inputs that vary in a non-linear way, which is what many real-world tasks such as time series forecasting, image recognition, and natural language processing require.
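To make the point about stacked linear layers concrete, here is a minimal Python sketch using scalar "layers" with arbitrary, hypothetical weights (`W1`, `B1`, `W2`, `B2` are illustrative values, not from any real model). It checks that two linear layers with no activation function in between behave exactly like a single linear layer, and that inserting ReLU, max(0, x), between them breaks this.

```python
import math

def linear(w, b, x):
    """A single scalar 'layer': scale the input and add a bias."""
    return w * x + b

def relu(x):
    """Rectified Linear Unit: zero for negative inputs, identity otherwise."""
    return max(0.0, x)

# Hypothetical layer parameters, chosen only for illustration.
W1, B1 = 2.0, 1.0
W2, B2 = -3.0, 0.5

def two_linear_layers(x):
    # Layer 1 feeds straight into layer 2 with no activation in between.
    return linear(W2, B2, linear(W1, B1, x))

def two_layers_with_relu(x):
    # Same layers, but ReLU is applied to the hidden value.
    return linear(W2, B2, relu(linear(W1, B1, x)))

# Algebraically, w2*(w1*x + b1) + b2 = (w2*w1)*x + (w2*b1 + b2),
# so the linear stack is equivalent to one linear layer.
W_COMBINED = W2 * W1
B_COMBINED = W2 * B1 + B2

for x in [-2.0, 0.0, 1.5, 10.0]:
    assert math.isclose(two_linear_layers(x), linear(W_COMBINED, B_COMBINED, x))

def is_affine_on(f, a, b):
    """An affine (linear plus bias) function maps the midpoint of a and b
    to the midpoint of f(a) and f(b); failing this rules out linearity."""
    return math.isclose(f((a + b) / 2), (f(a) + f(b)) / 2)

print(is_affine_on(two_linear_layers, -2.0, 10.0))     # True
print(is_affine_on(two_layers_with_relu, -2.0, 10.0))  # False
```

The midpoint test is only a counterexample check: passing it at one pair of points does not prove a function is linear, but failing it shows the ReLU network is not.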

Importance of Non-linearity

Non-linearity is a key factor in the success of neural networks. It is essential because many phenomena and relationships in the real world are inherently non-linear. Linear activation functions can only model linear relationships, so they are limited in their ability to capture complicated patterns. Without non-linearity, a neural network could only represent linear functions, which would severely limit its capacity to solve complex problems. Non-linear activation functions, in contrast, allow neural networks to approximate complicated relationships in data and to learn the complex, non-linear patterns that occur in the real world.
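A classic illustration is the XOR function, which outputs 1 when exactly one of two binary inputs is 1. No linear function of the inputs can produce it, but a tiny network with one hidden ReLU layer can. The sketch below uses hand-picked weights (an assumption for illustration, not the result of training) and a hypothetical helper named `xor_net`.

```python
from itertools import product

def relu(x):
    return max(0.0, x)

def xor_net(x1, x2):
    # Two hidden ReLU units with hand-picked (not learned) weights:
    #   h1 = relu(x1 + x2)       grows with the number of inputs that are on
    #   h2 = relu(x1 + x2 - 1)   is positive only when both inputs are on
    h1 = relu(x1 + x2)
    h2 = relu(x1 + x2 - 1)
    # Linear output layer: subtracting 2 * h2 cancels the "both on" case.
    return h1 - 2 * h2

# Why no linear model a*x1 + b*x2 + c works: (0,0) forces c = 0,
# (1,0) forces a = 1, (0,1) forces b = 1, so (1,1) would give 2 instead of 0.
for x1, x2 in product([0, 1], repeat=2):
    print(x1, x2, xor_net(x1, x2))
```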

Types of Activation Functions in Machine Learning

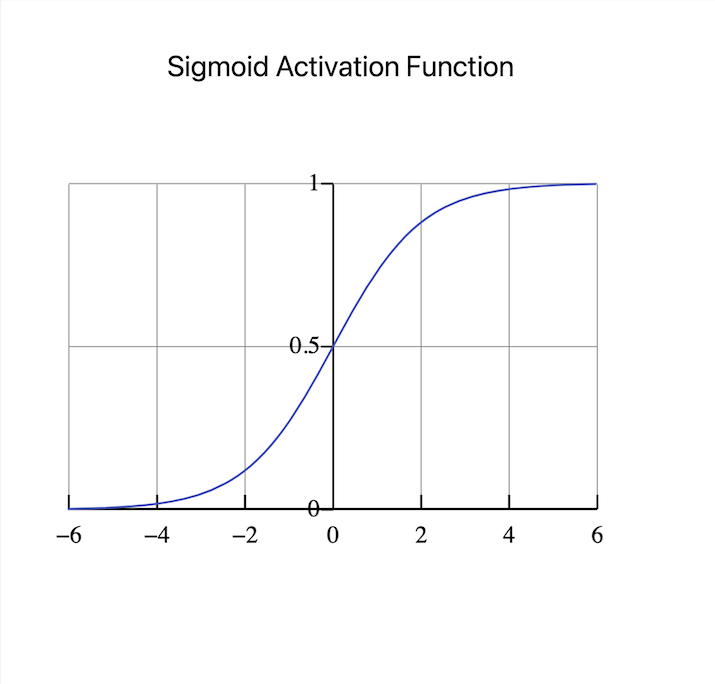

Sigmoid Activation function

The sigmoid activation function is one of the best-known activation functions. It maps any real input to a value between 0 and 1 along an S-shaped curve, using the formula σ(x) = 1 / (1 + e^(-x)). It is commonly used in the output layer for binary classification problems, where the goal is to predict which of two classes an input belongs to. Because it squashes the input into the range (0, 1), its output can be interpreted as the probability that the input belongs to the positive class.

However, the sigmoid activation function is vulnerable to the vanishing gradient problem. Its derivative is at most 0.25 and approaches zero when the input is large in magnitude, so during backpropagation the gradients that are multiplied together across layers become extremely small as the network gets deeper. This slows learning and delays convergence. Because of this limitation, other activation functions, such as ReLU, have been adopted to mitigate the vanishing gradient problem and make deep neural networks easier to train.
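A minimal Python sketch of the sigmoid and its derivative, using only the standard library. The piecewise form is one common way to avoid overflow in `math.exp` for large negative inputs. The depth loop is a deliberately simplified illustration of the vanishing gradient: it assumes every layer contributes its best-case derivative of 0.25 and ignores the weights, which in a real network also enter the product.

```python
import math

def sigmoid(x):
    """Numerically stable logistic sigmoid: 1 / (1 + e^(-x))."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, rewrite as e^x / (1 + e^x) so math.exp cannot overflow.
    e = math.exp(x)
    return e / (1.0 + e)

def sigmoid_grad(x):
    """Derivative of the sigmoid: s * (1 - s), which peaks at 0.25 when x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)

print(sigmoid(0.0), sigmoid_grad(0.0))  # 0.5 0.25
print(sigmoid_grad(10.0))               # about 4.5e-05: the curve is saturated

# Simplified vanishing-gradient illustration: backpropagation multiplies one
# derivative factor per layer, so even the best-case factor of 0.25 shrinks
# geometrically with depth (weights are ignored here).
for depth in (1, 5, 10, 20):
    print(depth, 0.25 ** depth)
```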

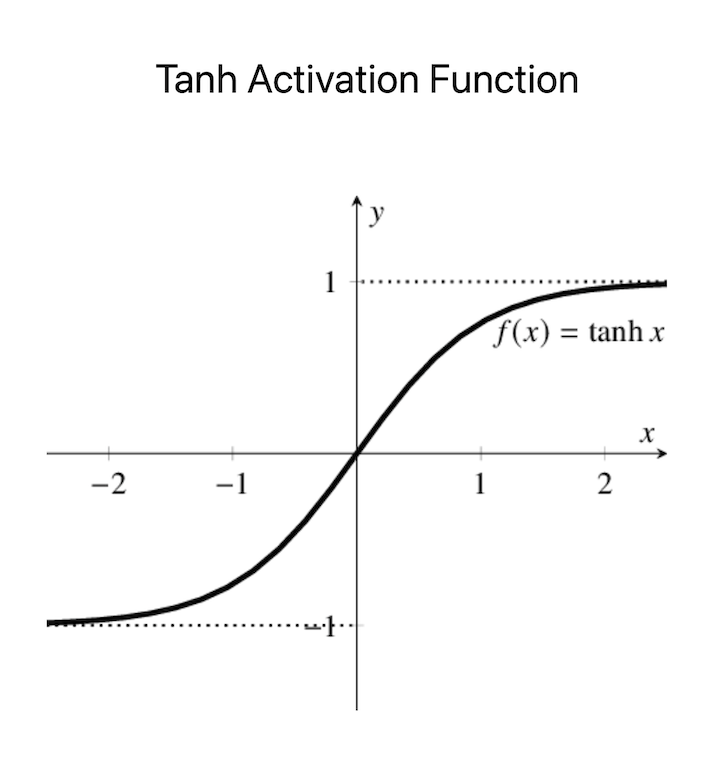

Tanh activation function

The hyperbolic tangent (tanh) activation function is similar to the sigmoid function in that it also has an S-shaped curve, but it maps the input to the range between -1 and 1. Because its outputs are zero-centered, tanh is often preferred over the sigmoid in hidden layers, where zero-centered activations can make training some models easier. Its output is not a probability on its own, but it can be rescaled to the range (0, 1) when a probabilistic interpretation is needed, for example in binary classification.

Like the sigmoid, however, tanh saturates for inputs of large magnitude, where its gradient approaches zero, so its use in deep neural networks is also constrained by the vanishing gradient problem. Its gradient near zero is steeper than the sigmoid's (up to 1 rather than 0.25), but because tanh(x) = 2σ(2x) - 1, it also reaches its flat, saturated region at smaller input magnitudes than the sigmoid does. As a result, training can be sensitive to how the weights are initialized. Nevertheless, tanh remains a practical choice in some situations, particularly where zero-centered outputs that are symmetric around zero are needed.
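The following Python sketch checks the properties described above with the standard library's `math.tanh`: outputs are zero-centered and symmetric, tanh is a rescaled sigmoid, and its derivative peaks at 1.0 and shrinks toward zero as the input grows. The `sigmoid` and `tanh_grad` helpers are written here for illustration only.

```python
import math

def sigmoid(x):
    """Logistic sigmoid, included only to compare against tanh."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2, which peaks at 1.0 when x = 0."""
    t = math.tanh(x)
    return 1.0 - t * t

xs = [-3.0, -1.0, 0.0, 1.0, 3.0]

# Zero-centered and odd-symmetric: tanh(-x) == -tanh(x), outputs in (-1, 1).
print([round(math.tanh(x), 3) for x in xs])

# tanh is a shifted and rescaled sigmoid: tanh(x) = 2 * sigmoid(2x) - 1.
for x in xs:
    assert math.isclose(math.tanh(x), 2.0 * sigmoid(2.0 * x) - 1.0, abs_tol=1e-12)

# If a probability-like value in (0, 1) is needed, tanh can be rescaled;
# (tanh(x) + 1) / 2 is exactly sigmoid(2x).
print(round((math.tanh(1.0) + 1.0) / 2.0, 3))

print(tanh_grad(0.0), round(tanh_grad(3.0), 4))  # 1.0 and a value close to 0
```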

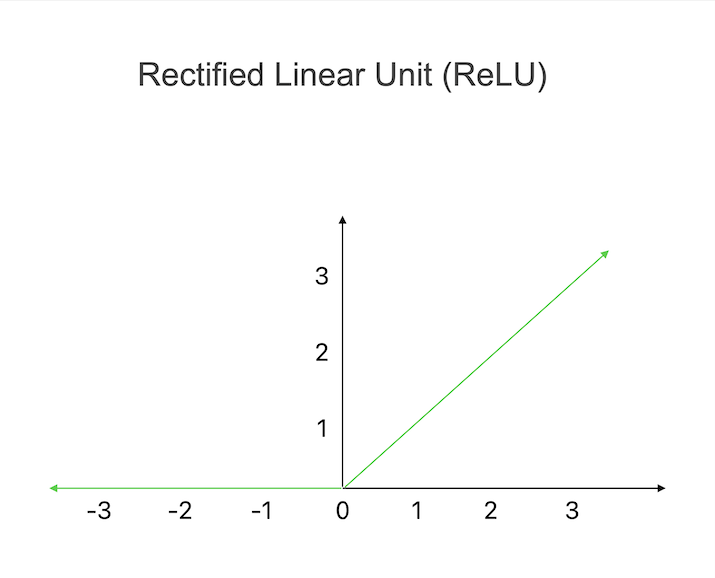

Rectified Linear Unit (ReLU)

The Rectified Linear Unit (ReLU) is a widely used activation function that sets all negative inputs to zero and passes positive inputs through unchanged, that is, ReLU(x) = max(0, x). This simple rule is enough to add non-linearity and let the network detect intricate patterns in the data. One of ReLU's main benefits is computational efficiency: it only requires a comparison with zero, which is cheaper than the exponentials needed by the sigmoid and tanh functions. ReLU does have some difficulties, though.
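A minimal Python sketch of ReLU and its derivative. Unlike the sigmoid, its gradient for positive inputs is exactly 1, so it does not shrink on that side. ReLU is not differentiable at exactly 0; treating the derivative there as 0 is a common convention and an assumption of this sketch.

```python
def relu(x):
    """ReLU(x) = max(0, x): negative inputs become 0, positive inputs pass through."""
    return max(0.0, x)

def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise.
    The value at exactly 0 is a convention, since ReLU has a kink there."""
    return 1.0 if x > 0 else 0.0

inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
print([relu(x) for x in inputs])       # [0.0, 0.0, 0.0, 0.5, 2.0]
print([relu_grad(x) for x in inputs])  # [0.0, 0.0, 0.0, 1.0, 1.0]
```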

One potential problem is known as "dying ReLU": some neurons become permanently inactive and output 0 for every input. Because ReLU's gradient is zero for negative inputs, such neurons receive no gradient updates and stop contributing to learning, which can hurt training. Techniques such as using ReLU variants (for example Leaky ReLU, which keeps a small non-zero slope for negative inputs) or appropriate weight initialization can reduce the chance of dying ReLU and help deep neural networks train successfully.
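The sketch below illustrates how a neuron can "die" and how Leaky ReLU avoids it. It assumes a single hypothetical neuron whose bias has been pushed to a large negative value, so its pre-activation z = w * x + b is negative for every input in the (made-up) data. With ReLU, the gradient with respect to the weight is exactly zero, so gradient descent cannot move the neuron out of that state. With Leaky ReLU, using a negative-side slope of 0.01 (a commonly used default, assumed here), a small gradient still flows. The weight, bias, inputs, and the `weight_gradient` helper are illustrative assumptions.

```python
def relu_grad(z):
    return 1.0 if z > 0 else 0.0

def leaky_relu(z, alpha=0.01):
    """Leaky ReLU: identity for positive z, small slope alpha for negative z."""
    return z if z > 0 else alpha * z

def leaky_relu_grad(z, alpha=0.01):
    return 1.0 if z > 0 else alpha

# Hypothetical "dead" neuron: pre-activation z = w * x + b with a very negative bias.
w, b = 1.0, -10.0
inputs = [0.0, 0.25, 0.5, 0.75, 1.0]  # every z here lies between -10 and -9

def weight_gradient(act_grad, upstream=1.0):
    # Chain rule: dLoss/dw = upstream * act'(z) * x, summed over the inputs.
    # 'upstream' stands in for the gradient arriving from later layers.
    return sum(upstream * act_grad(w * x + b) * x for x in inputs)

print(weight_gradient(relu_grad))        # 0.0: the weight never updates
print(weight_gradient(leaky_relu_grad))  # small but non-zero
```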

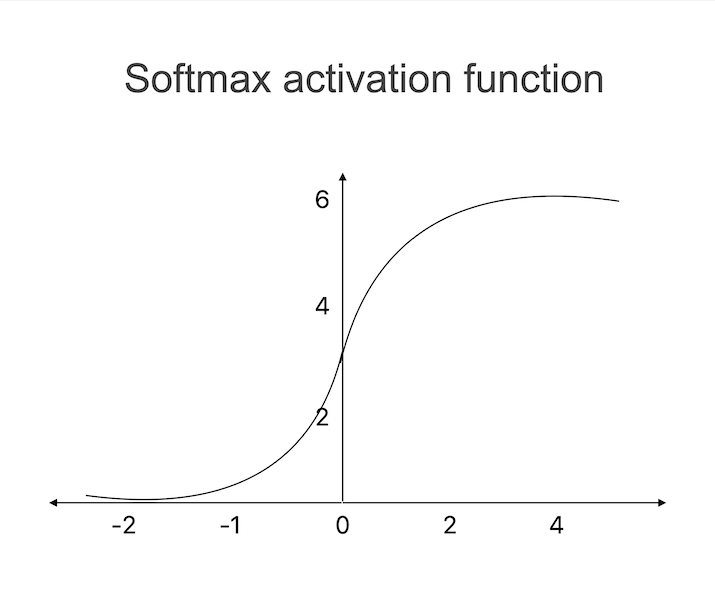

Softmax activation function

The softmax function is frequently used in multi-class classification problems, where the goal is to assign an input to one of several possible classes. It normalizes a vector of real values into a probability distribution: each output is e^(z_i) divided by the sum of e^(z_j) over all classes. This guarantees that the output probabilities add up to 1, which makes softmax appropriate when the classes are mutually exclusive. Because it produces a probability distribution, its outputs can be interpreted as the likelihood of the input belonging to each class.

As a result, we can make a prediction by assigning the input to the class with the highest probability, and use the probabilities themselves as an indication of the model's confidence. This makes the softmax function an important machine learning tool for applications such as image recognition, natural language processing, and sentiment analysis, where many classes must be considered at the same time.
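A minimal Python sketch of softmax followed by picking the most likely class. Subtracting the largest score before exponentiating is a standard trick: it leaves the result unchanged (softmax is invariant to adding the same constant to every input) while keeping `math.exp` from overflowing. The class names and scores are hypothetical, standing in for the raw outputs (logits) of a network's final layer.

```python
import math

def softmax(logits):
    """Turn a list of real-valued scores into probabilities that sum to 1."""
    m = max(logits)                           # shift for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores (logits) for three classes.
class_names = ["cat", "dog", "bird"]
logits = [2.0, 1.0, 0.1]

probs = softmax(logits)
predicted = class_names[probs.index(max(probs))]

print([round(p, 3) for p in probs])
print(predicted)  # cat
```

Because softmax preserves the order of its inputs, the class with the highest probability is also the class with the highest raw score; softmax is needed for the probabilities themselves, not for choosing the winning class.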

Conclusion

In conclusion, the importance of activation functions in machine learning can hardly be overstated. Within neural networks they act as decision-makers, determining whether and how strongly information is passed on. In short, activation functions are what allow neural networks to reach their full potential: to learn, adapt, and make accurate predictions in a wide variety of real-world settings.