Understanding Train and Split Criteria in Machine Learning

In machine learning, the train-test split is a simple yet effective technique. It separates your dataset into two sets: one for training the model and one for evaluating it. Because the model has never seen the evaluation data, its performance there indicates how well it generalizes and, consequently, how well it is likely to perform in the real world. The train-test split essentially acts as a "reality check" on your model, giving you a clearer picture of its strengths and weaknesses. This lets you adjust and improve the model so that its predictions become more accurate and trustworthy. In this article we examine the criteria for a train-test split, including their significance and practical applications.

What is a Train-test Split?

A train-test split in machine learning involves splitting your dataset into two distinct sets: one for training your model and the other for evaluating its performance. The objective is to estimate your model's accuracy on unseen data, which is essential for confirming that it can generalize and produce accurate predictions in practice. The model is first fitted on the training set, which adjusts its parameters (for example, weights and biases). It is then tested by comparing its predictions on the test set with the actual values in that set. The split is frequently performed at random so that both sets are representative of the whole dataset. Evaluating on data the model has not seen shows whether it is overfitting the training set and gives a more realistic estimate of how it will handle future data.

Why is a Train-test Split Important?

A machine learning model's effectiveness must be evaluated on unseen data. A model can perform extremely well on the dataset it was trained on but poorly on new data. In other words, a model that has overfit its training data may produce incorrect predictions when applied to new data. Overfitting typically happens when a model is overly complex: it starts to memorize the training data rather than learning the underlying patterns. The result is a model that is tuned too closely to the training set and does badly on the test set. Assessing performance on unseen data is therefore how you detect overfitting and check that the model is accurate and dependable in practical applications.
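The gap between training and test performance can be illustrated with a deliberately extreme toy case. The sketch below is only an illustration under stated assumptions: the labels are random coin flips, and the "model" is a hypothetical lookup table that memorizes every training example and falls back to the majority label for anything it has not seen.

```python
import random

rng = random.Random(0)

# Labels are pure coin flips, so there is no real pattern to learn.
data = [(x, rng.randint(0, 1)) for x in range(1000)]
rng.shuffle(data)
train, test = data[:700], data[700:]

# Toy "model": memorize each training example; for unseen inputs,
# fall back to the most common training label.
memory = dict(train)
ones = sum(label for _, label in train)
majority = 1 if ones * 2 >= len(train) else 0


def predict(x):
    return memory.get(x, majority)


def accuracy(rows):
    return sum(predict(x) == y for x, y in rows) / len(rows)


train_accuracy = accuracy(train)
test_accuracy = accuracy(test)
print(f"train accuracy: {train_accuracy:.2f}")  # 1.00, every example was memorized
print(f"test accuracy:  {test_accuracy:.2f}")   # close to chance level
```

Judged on the training set alone, this model looks perfect. Only the held-out test set reveals that it has learned nothing that transfers to new data.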

Understanding the Criteria for a Train-test Split

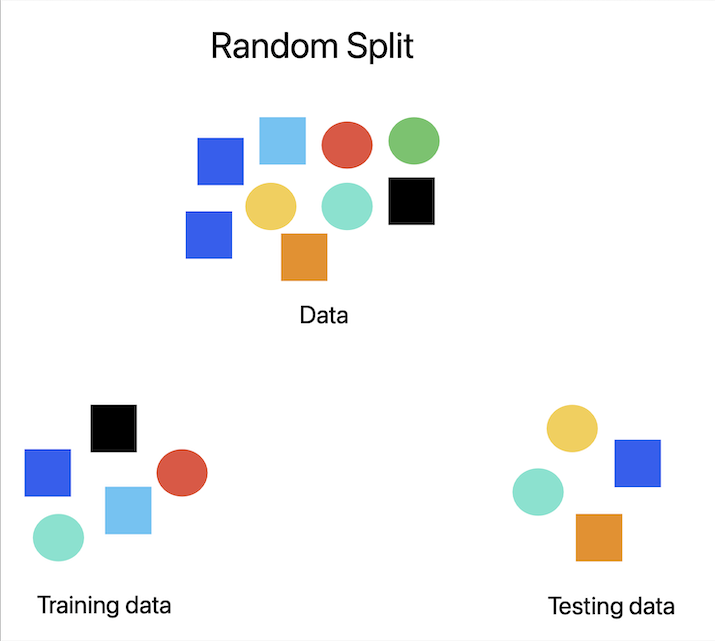

Random Split

A random split is the most common way to divide data. The data is split into two groups at random, typically 70% for training and 30% for testing. This method is useful when the data has no inherent structure, such as class imbalance or time ordering, that the split needs to preserve. With enough data, a random split tends to make both the training and test sets representative of the full dataset, which makes the test score a more reliable estimate of real-world performance.
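A 70/30 random split can be sketched in a few lines of standard-library Python. The function name `random_split` and its parameters are assumptions made for this illustration. In practice, libraries such as scikit-learn provide an equivalent helper (`train_test_split`).

```python
import random


def random_split(rows, test_fraction=0.3, seed=42):
    """Shuffle a copy of rows and split it into (train, test)."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(rows)                  # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


rows = list(range(100))        # stand-in for 100 labelled examples
train, test = random_split(rows)
print(len(train), len(test))   # 70 30
```

Fixing the seed is a design choice: it keeps the split identical between runs, so changes in the test score reflect changes to the model and not a different shuffle.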

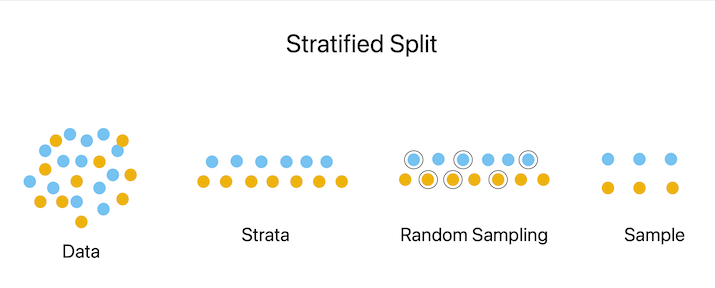

Stratified Split

In a stratified split, the data is divided into subsets based on a particular variable, usually the class label, so that the distribution of that variable is preserved in both the training and test sets. This criterion is especially helpful for imbalanced datasets, that is, datasets in which the classes do not have an equal number of examples. By ensuring that the training and test sets contain the same proportion of cases for each class, a stratified split can help improve the model's accuracy and make its evaluation more reliable.
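One way to preserve class proportions is to split each class separately and then combine the pieces. The sketch below assumes a hypothetical helper `stratified_split` and a toy dataset with a 90/10 class imbalance. It is a minimal illustration, not a library implementation.

```python
import random
from collections import defaultdict


def stratified_split(rows, label_of, test_fraction=0.3, seed=42):
    """Split rows so each class keeps roughly the same share in both sets."""
    by_class = defaultdict(list)
    for row in rows:
        by_class[label_of(row)].append(row)

    rng = random.Random(seed)
    train, test = [], []
    for members in by_class.values():
        rng.shuffle(members)                          # shuffle within the class
        n_test = round(len(members) * test_fraction)  # per-class test size
        test.extend(members[:n_test])
        train.extend(members[n_test:])
    return train, test


# Imbalanced toy data: 90 examples of class "a", 10 of class "b".
rows = [(i, "a") for i in range(90)] + [(i, "b") for i in range(90, 100)]
train, test = stratified_split(rows, label_of=lambda row: row[1])

print(sum(1 for _, y in train if y == "b"), len(train))  # 7 70
print(sum(1 for _, y in test if y == "b"), len(test))    # 3 30
```

Both sets keep the original 10% share of class "b". A purely random split of the same data could, by chance, leave the test set with very few or no examples of the rare class.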

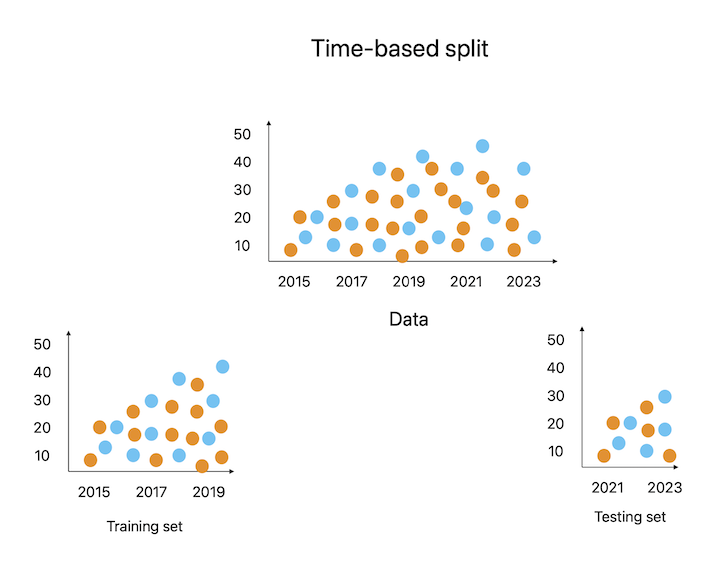

Time-based Split

In a time-based split, the data is separated into subsets according to time. This method is frequently used with time-series data, where the order of events matters. The training set typically contains all observations that occurred before a chosen point in time, and the test set contains all observations that occurred after it. In time-series forecasting, it is essential that the model be trained on historical data and evaluated on later data; otherwise information from the future leaks into training and the evaluation becomes overly optimistic.
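A time-based split needs no shuffling at all, only a cutoff. The sketch below assumes hypothetical monthly observations and a helper named `time_based_split`. The cutoff date is an arbitrary choice for illustration.

```python
from datetime import date


def time_based_split(rows, timestamp_of, cutoff):
    """Train on rows strictly before cutoff, test on rows at or after it."""
    ordered = sorted(rows, key=timestamp_of)
    train = [r for r in ordered if timestamp_of(r) < cutoff]
    test = [r for r in ordered if timestamp_of(r) >= cutoff]
    return train, test


# Hypothetical monthly observations for 2023: (date, value).
rows = [(date(2023, month, 1), month * 10) for month in range(1, 13)]
train, test = time_based_split(
    rows, timestamp_of=lambda r: r[0], cutoff=date(2023, 10, 1)
)

print(len(train), len(test))      # 9 3
print(train[-1][0] < test[0][0])  # True: all training data precedes the test data
```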

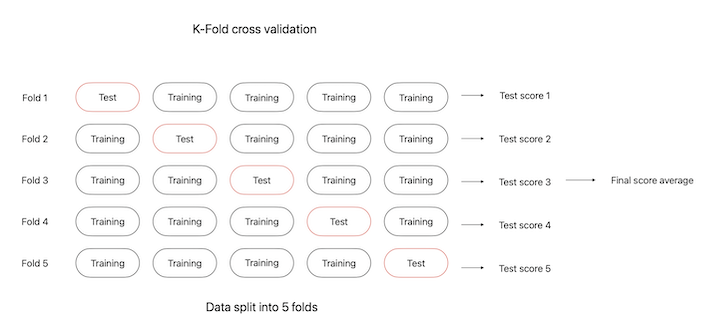

K-fold Cross-validation

K-fold cross-validation splits the data into K subsets, or folds. The procedure is repeated K times: in each round, one fold serves as the test set and the remaining K-1 folds form the training set, so every fold is used for testing exactly once. The K scores are then typically averaged. K-fold cross-validation is especially helpful with smaller datasets, where a single train-test split would leave too little data for reliable training or evaluation.
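The fold rotation can be sketched by generating index lists. The generator name `k_fold_indices` is an assumption for this illustration. It only produces the train and test indices for each round, and fitting and scoring a model on them is left out.

```python
import random


def k_fold_indices(n_samples, k=5, seed=42):
    """Yield (train_indices, test_indices) pairs, one per fold."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k nearly equal-sized folds
    for i, test_idx in enumerate(folds):
        # Training indices are everything outside the current test fold.
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


splits = list(k_fold_indices(10, k=5))
for train_idx, test_idx in splits:
    print(len(train_idx), len(test_idx))  # 8 2 on every iteration
```

Across the five rounds, each of the ten samples lands in a test fold exactly once, so every data point contributes to both training and evaluation.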

Conclusion

The train-test split is an essential step in making sure your machine learning model can generalize and make accurate predictions on new, unseen data. Dividing the data into two subsets lets you train the model on one and evaluate it on the other, which gives an honest estimate of its accuracy. The importance of selecting the appropriate splitting criterion, however, cannot be overstated. Depending on the type of data and the problem you are trying to solve, some criteria are more suitable than others: a random split for data without special structure, a stratified split for imbalanced classes, a time-based split for time series, and K-fold cross-validation for small datasets. Choosing the right criterion helps you detect overfitting and judge how robust the model is to new data. In conclusion, applying the appropriate criterion in each situation leads to machine learning models that are more accurate and dependable.