Cost function in Logistic Regression in Machine Learning

Introduction

Logistic Regression is one of the simplest classification algorithms in Machine Learning. Instead of the mean squared error used in Linear Regression, it uses log loss (also called cross-entropy loss) as its loss function. Since we already have Linear Regression, why do we need Logistic Regression for classification, and why can't we use Linear Regression instead?

This article answers that question and explores the cost function used in Logistic Regression in detail.

Why do we need Logistic Regression and can't use Linear Regression?

In Linear Regression, we predict a continuous value. If we fit Linear Regression to the classification task, the line of best fit will look something like the diagram below.

According to the above graph, the fitted line produces values greater than 1 and less than 0. Such values do not make sense for classification, where we are only interested in a binary output of 0 or 1.
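To make this concrete, here is a minimal sketch that fits an ordinary least-squares line to binary labels. The toy data and the helper name `fit_line` are made up for illustration and are not part of the original article.

```python
# Toy data (hypothetical): one feature, binary labels.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]


def fit_line(xs, ys):
    """Return slope m and intercept c of the ordinary least-squares line."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    m = cov_xy / var_x
    c = mean_y - m * mean_x
    return m, c


m, c = fit_line(xs, ys)

# Predictions at the two ends of the training range.
low = m * xs[0] + c
high = m * xs[-1] + c
print("prediction at x=1:", low)   # falls below 0
print("prediction at x=8:", high)  # rises above 1
```

Even inside the range of the training data, the line returns values below 0 and above 1, so they cannot be read as probabilities.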

Thus we need the predicted values to lie between the lines Y=0 and Y=1, so the line above must be transformed to keep its values within 0 and 1. One such transformation is the sigmoid function, applied as shown below.

$$\mathrm{K=MX+c}$$

$$\mathrm{Y=F(K)}$$

$$\mathrm{F(K)=\frac{1}{1+e^{-K}}}$$

$$\mathrm{Y=\frac{1}{1+e^{-K}}}$$

The graph will now look as shown below.

The sigmoid function gives continuous values between 0 and 1, which can be interpreted as probabilities.
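The following short sketch evaluates the sigmoid at a few points. The function name `sigmoid` and the sign-based branching (used only to avoid overflow for large negative inputs) are choices made for this example, not something the article prescribes.

```python
import math


def sigmoid(k):
    """Map any real number k to a value strictly between 0 and 1."""
    # Branch on the sign so math.exp never receives a large positive argument.
    if k >= 0:
        return 1.0 / (1.0 + math.exp(-k))
    e = math.exp(k)
    return e / (1.0 + e)


for k in (-5.0, 0.0, 5.0):
    print(k, sigmoid(k))
```

Large negative inputs map close to 0, large positive inputs map close to 1, and an input of 0 maps to exactly 0.5.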

Log loss and Cost function for Logistic Regression

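One of the most popular metrics for evaluating classification models that output probabilities is log loss. For n training examples, where the superscript i indexes the example (it is not an exponent), it is defined as

$$\mathrm{F=-\sum_{i=1}^n\left[y^{i}\log(p_{\theta}(x^{i}))+(1-y^{i})\log(1-p_{\theta}(x^{i}))\right]}$$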
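As an illustration, the log loss formula above can be computed directly. This is a minimal sketch: the name `log_loss`, the example probabilities, and the `eps` clipping (added here only to avoid taking the logarithm of 0) are assumptions of this example.

```python
import math


def log_loss(y_true, p_pred, eps=1e-15):
    """Summed log loss for binary labels y_true and predicted probabilities p_pred."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        # Clip the probability so that log(0) can never occur (assumption of this sketch).
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total


labels = [1, 0, 1, 0]
good = [0.9, 0.1, 0.8, 0.2]  # probabilities that agree with the labels
bad = [0.1, 0.9, 0.2, 0.8]   # probabilities that contradict the labels

print("loss for good predictions:", log_loss(labels, good))
print("loss for bad predictions: ", log_loss(labels, bad))
```

Predictions that put high probability on the correct class yield a small loss, while confident wrong predictions are penalised heavily.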

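If we reused the squared-error cost of Linear Regression, the cost function would be written as

$$\mathrm{F(\theta)=\frac{1}{n}\sum_{i=1}^n\frac{1}{2}[p_{\theta}(x^{i})-y^{i}]^{2}}$$

For Logistic Regression, the prediction is the sigmoid function g applied to a linear combination of the inputs,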

$$\mathrm{p_{\theta}(x)=g(\theta^{T}x)}$$

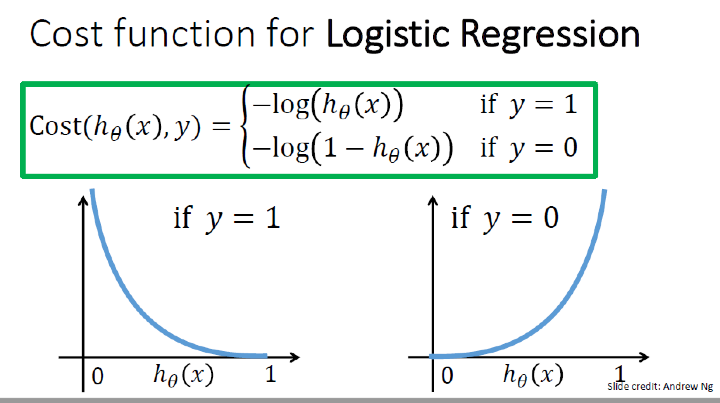

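Because g is non-linear, substituting it into the squared-error cost above gives a non-convex function of the parameters, on which gradient descent is not guaranteed to reach the global minimum. Logistic Regression therefore uses log loss as its cost function, summarized for a single example below.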

$$\mathrm{cost(p_{\theta}(x),y)=\left\{\begin{array}{ll}{-\log(p_{\theta}(x))} & {if\:y=1}\\ {-\log(1-p_{\theta}(x))} & {if\:y=0}\end{array}\right.}$$
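The difference between the two costs can be checked numerically. The sketch below assumes a single training example with input x = 1 and label y = 1, so the model's score is just the parameter theta. The function names and the chosen test points are hypothetical. It applies the midpoint test: a convex function never exceeds the average of its two endpoint values at the midpoint between them.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_cost(p, y):
    """Per-example log loss: -log(p) if y == 1, otherwise -log(1 - p)."""
    return -math.log(p) if y == 1 else -math.log(1.0 - p)


def squared_cost(p, y):
    """Per-example squared-error cost."""
    return 0.5 * (p - y) ** 2


def violates_midpoint_convexity(cost, y, theta_a, theta_b):
    """Return True if the cost at the midpoint exceeds the average of the endpoint costs."""
    def f(theta):
        return cost(sigmoid(theta), y)

    mid = 0.5 * (theta_a + theta_b)
    return f(mid) > 0.5 * (f(theta_a) + f(theta_b))


print("squared error violates convexity:",
      violates_midpoint_convexity(squared_cost, 1, -10.0, 0.0))
print("log loss violates convexity:     ",
      violates_midpoint_convexity(log_cost, 1, -10.0, 0.0))
```

A single violation is enough to show that the squared-error cost is not convex in theta. The absence of a violation at one pair of points does not prove convexity on its own, but it is consistent with the known result that log loss combined with the sigmoid is convex in the parameters.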

The gradient descent update equation becomes,

$$\mathrm{\theta_{j}:=\theta_{j}-\alpha \sum_{i=1}^n[p_{\theta}(x^{i})-y^{i}]x_{j}^{i}}$$

Here α is the learning rate, and the update is applied to every parameter simultaneously.
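The update above can be sketched in plain Python as follows. The toy data, the learning rate of 0.02, the number of iterations, and the names `predict` and `gradient_step` are all assumptions made for this example. The first feature of every example is a constant 1 so that the first parameter acts as the intercept.

```python
import math


def sigmoid(z):
    # Branch on the sign so math.exp never receives a large positive argument.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(theta, x):
    """Predicted probability: sigmoid of the dot product of theta and x."""
    return sigmoid(sum(t * xj for t, xj in zip(theta, x)))


def gradient_step(theta, X, y, alpha):
    """One batch update: each parameter moves against the summed (prediction - label) * feature."""
    errors = [predict(theta, x) - yi for x, yi in zip(X, y)]
    return [
        t - alpha * sum(e * x[j] for e, x in zip(errors, X))
        for j, t in enumerate(theta)
    ]


# Toy data (hypothetical). The classes overlap, so the parameters stay finite.
X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0], [1.0, 4.0], [1.0, 5.0], [1.0, 6.0]]
y = [0, 0, 1, 0, 1, 1]

theta = [0.0, 0.0]
for _ in range(5000):
    theta = gradient_step(theta, X, y, alpha=0.02)

print("learned parameters:", theta)
print("P(y=1 | x=1):", predict(theta, [1.0, 1.0]))
print("P(y=1 | x=6):", predict(theta, [1.0, 6.0]))
```

All parameters are computed from the same set of errors before any of them is overwritten, which is what makes the update simultaneous.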

Conclusion

Logistic Regression is one of the most fundamental classification algorithms. It passes a linear combination of the inputs through the sigmoid function to predict the probability of the outcome, a value between 0 and 1, and it uses log loss (cross-entropy loss) as its cost function.