
Cross-Entropy Cost Functions used in Classification in Machine Learning

In machine learning, the purpose of a regression task is to find a function that can reliably predict a continuous output from the data. A classification task, on the other hand, entails finding a function that can correctly assign input data to its classes. A model's accuracy is determined by how well it predicts the output values given the input values. The cost function, explored below, is a metric used to iteratively improve that accuracy during training.

What is a Cost Function?

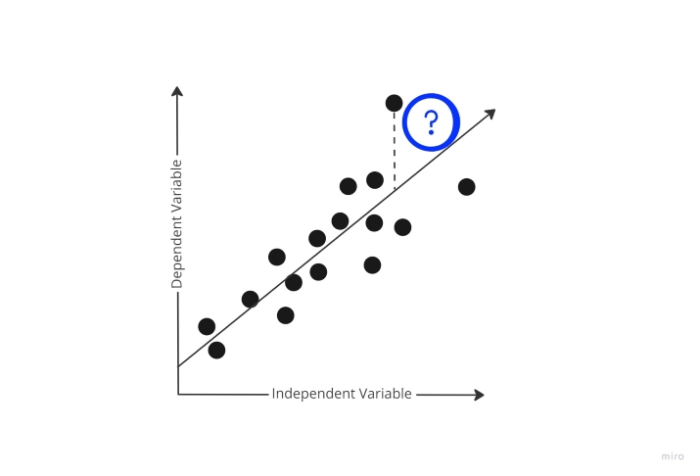

During the training phase, the model starts from randomly initialized weights and makes predictions on the training data. But how does the model learn how "far" off its predictions were? It needs this information so that gradient descent can adjust the weights accordingly in the next iteration over the training data.

This is where the cost function comes in. To determine how inaccurate the model's predictions were, the cost function compares the model's predicted outputs with the actual outputs.

With this quantitative measure of error, the model adjusts the weights of its parameters for the next training iteration in order to further reduce the error reported by the cost function. The goal of the training phase is to keep iterating until the weights minimize this error and it can no longer be meaningfully reduced. This is essentially an optimization problem.
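The loop described above (random starting weights, measure the error with a cost function, adjust the weights, repeat) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a prescribed implementation: it assumes a hypothetical one-feature logistic model (`predict`), a made-up toy dataset, and mean binary cross-entropy as the cost; the names `train`, `lr`, and `steps` are illustrative.

```python
import math
import random

# Hypothetical toy dataset: one input feature x and a binary label y (0 or 1).
xs = [0.5, 1.0, 1.5, 3.0, 3.5, 4.0]
ys = [0, 0, 0, 1, 1, 1]

def predict(w, b, x):
    """Hypothetical model: sigmoid of a linear score, read as P(y = 1 | x)."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))

def cost(w, b, eps=1e-12):
    """Mean binary cross-entropy between predicted and actual outputs."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = min(max(predict(w, b, x), eps), 1 - eps)  # keep log() finite
        total += -math.log(p) if y == 1 else -math.log(1 - p)
    return total / len(xs)

def train(steps=500, lr=0.5, seed=0):
    random.seed(seed)
    w, b = random.uniform(-1, 1), random.uniform(-1, 1)  # random starting weights
    history = []
    for _ in range(steps):
        history.append(cost(w, b))  # 1. how "far" off are the predictions?
        # 2. Gradient of the mean cross-entropy for this model: mean of (p - y) * x and (p - y).
        errors = [predict(w, b, x) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / len(xs)
        grad_b = sum(errors) / len(xs)
        # 3. Move the weights against the gradient to lower the cost next iteration.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, history

w, b, history = train()
print(f"cost at start: {history[0]:.3f}, cost at end: {history[-1]:.3f}")
```

Each entry of `history` is the cost measured before that iteration's update, so watching it fall shows the model getting less "far" off with every pass.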

Cost Function used in Classification

The cross-entropy loss metric is used to gauge how well a machine-learning classification model performs. The loss is a non-negative number: 0 corresponds to a perfect model, and the loss has no upper bound, growing without limit as the probability the model assigns to the correct class approaches 0. The goal is to get your model's loss as close to 0 as possible. Cross-entropy loss is sometimes equated with logistic loss (also called log loss, or binary cross-entropy loss), but the two are not always the same: log loss is the special case of cross-entropy for two classes.
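To see why the loss has a floor of 0 but no ceiling, the short Python sketch below evaluates -log(p), the cross-entropy contribution of a single sample when the model assigns probability p to its true class. The helper name `loss_for_true_class` is illustrative.

```python
import math

def loss_for_true_class(p):
    """Cross-entropy of one sample when the true class gets probability p (0 < p <= 1).

    Written as log(1 / p), which equals -log(p) but yields 0.0 rather than -0.0 at p = 1.
    """
    return math.log(1.0 / p)

for p in [1.0, 0.9, 0.5, 0.1, 0.001]:
    print(f"p = {p:<6} loss = {loss_for_true_class(p):.4f}")
```

A perfect prediction (p = 1) costs nothing, while a confident wrong prediction (p = 0.001) costs about 6.91; as p approaches 0 the loss keeps growing.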

Cross-entropy loss measures the difference between the probability distribution predicted by a machine-learning classification model and the true (expected) distribution. It keeps a probability for every possible outcome, so for a fair coin toss it would store 0.5 for heads and 0.5 for tails. In contrast, binary cross-entropy loss stores just one value: it would store only the 0.5 for one outcome and treat the probability of the other outcome as its complement (for example, if the first probability were 0.7, the second would be assumed to be 0.3). Like the general form, it uses a logarithm, hence the name "log loss."
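The difference in what is stored can be sketched in Python using the 0.7/0.3 example from the text. The function names are illustrative: the general version takes one probability per outcome, while the binary version takes a single probability p for class 1 and treats 1 - p as the probability of class 0. For the same prediction, both return -log(0.7).

```python
import math

def cross_entropy(y_true, p_pred):
    """General cross-entropy: one probability per possible outcome.

    y_true is a one-hot list (1 for the outcome that happened, 0 otherwise).
    """
    # Outcomes with y = 0 contribute nothing, so they are skipped (this also avoids log(0)).
    return -sum(y * math.log(p) for y, p in zip(y_true, p_pred) if y > 0)

def binary_cross_entropy(y, p):
    """Binary cross-entropy: only p = P(class 1) is stored; P(class 0) is taken as 1 - p."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

# Class 1 actually occurred; the model predicted P(class 0) = 0.3 and P(class 1) = 0.7.
general = cross_entropy([0, 1], [0.3, 0.7])
binary = binary_cross_entropy(1, 0.7)
print(general, binary)
```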

Binary cross-entropy loss (log loss) is used when there are only two outcomes; because it stores a single probability, it cannot represent three or more outcomes. For models with three or more classes, the general cross-entropy loss is widely used. When only the error on a single training sample is considered, the cost function is instead referred to as the "loss function."

Gradient Descent

Gradient descent is an approach for minimizing the model's error, as measured by the cost function. It is used to find the parameter values at which that error is smallest. You can think of gradient descent as the route you follow downhill on the cost surface to make the fewest mistakes. Because the error varies from one set of parameter values to another, at each step gradient descent moves in the direction in which the cost decreases most steeply (the negative gradient), so that resources are not wasted on detours.

At each step, gradient descent evaluates how the cost changes with respect to each parameter and updates the parameters in the direction that reduces it. Repeating this step makes the error smaller and smaller over time. With a suitably chosen learning rate, the process converges toward the parameter values with the least error, a (possibly local) minimum of the cost function.
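A bare-bones version of this procedure is sketched below in Python. It is only an illustration: the one-parameter cost J(w) = (w - 3)^2, its hand-derived gradient, the starting point, and the learning rate of 0.1 are all hypothetical choices, picked so the minimum (w = 3) is known in advance.

```python
def cost(w):
    """Hypothetical cost function with its minimum at w = 3."""
    return (w - 3) ** 2

def grad(w):
    """Derivative of the cost above: dJ/dw = 2 * (w - 3)."""
    return 2 * (w - 3)

def gradient_descent(grad_fn, start, learning_rate=0.1, steps=50):
    """Repeatedly step against the gradient, recording every visited point."""
    w = start
    path = [w]
    for _ in range(steps):
        w = w - learning_rate * grad_fn(w)  # move in the direction of steepest descent
        path.append(w)
    return w, path

w_final, path = gradient_descent(grad, start=-5.0)
print(f"w = {w_final:.4f}, cost = {cost(w_final):.8f}")
```

Each step shrinks the distance to the minimum by a factor of 1 - 2 * 0.1 = 0.8, so the cost falls at every iteration; a learning rate above 1.0 would make this particular example diverge instead.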

Types of Cost Function used in Classification

1. Binary Cross Entropy Cost Function

Binary cross-entropy is a special case of categorical cross-entropy in which there is just one output and it takes a binary value of 0 or 1, representing the negative and positive class respectively. Distinguishing dogs from cats is one example.

Let y be the actual output (0 or 1) and p the model's predicted probability that y = 1. The cross-entropy for a single data point D then reduces to:

Cross-entropy(D) = - y*log(p) when y = 1

Cross-entropy(D) = - (1-y)*log(1-p) when y = 0
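The two cases can be checked directly in Python. This sketch assumes that p is the model's predicted probability that y = 1; the function names are illustrative. The second function shows the common single-line form, -(y*log(p) + (1-y)*log(1-p)), in which the term for the case that did not occur is multiplied by 0 and drops out.

```python
import math

def sample_cross_entropy(y, p):
    """Cross-entropy of one data point D, written as the two separate cases."""
    if y == 1:
        return -y * math.log(p)            # case y = 1
    return -(1 - y) * math.log(1 - p)     # case y = 0

def combined_cross_entropy(y, p):
    """Same value as a single expression; one of the two terms is always zero."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

for y, p in [(1, 0.9), (1, 0.2), (0, 0.1), (0, 0.8)]:
    print(y, p, round(sample_cross_entropy(y, p), 4), round(combined_cross_entropy(y, p), 4))
```

A confident correct prediction (y = 1, p = 0.9) gives a small loss, while a confident wrong one (y = 0, p = 0.8) gives a much larger one.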

The error of the whole model in binary classification is the mean of this cross-entropy over all N training samples; this mean is what is called binary cross-entropy:

Binary Cross-Entropy = (Sum of Cross-Entropy for N data)/N
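Averaging over the N training samples can be sketched in plain Python as below. The labels and probabilities are made-up values, and clipping p to [eps, 1 - eps] is an added safeguard (not part of the formula above) that keeps log() finite when a prediction is exactly 0 or 1.

```python
import math

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Binary cross-entropy = (sum of per-sample cross-entropy for N data) / N."""
    if len(y_true) != len(p_pred) or not y_true:
        raise ValueError("need the same non-zero number of labels and predictions")
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # safeguard against log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

y_true = [1, 0, 1, 1, 0]               # hypothetical actual outputs
p_pred = [0.9, 0.2, 0.6, 0.95, 0.1]    # hypothetical predicted P(y = 1)
print(round(binary_cross_entropy(y_true, p_pred), 4))
```

For comparison, scikit-learn offers `sklearn.metrics.log_loss`, which also averages over samples by default.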

2. Multi-class Classification Cross Entropy Cost Function

This cost function is used for classification problems with several classes in which each input belongs to exactly one class. Let's now examine how the cross-entropy is calculated. Assume that, for a specific input D, the model outputs a probability distribution p = (p1, p2, ..., pn) over the n classes, and that the actual class is encoded as a one-hot vector y = (y1, y2, ..., yn), in which the entry for the true class is 1 and all others are 0.

Cross-entropy for that specific data D is then determined as

$$\text{Cross-entropy loss}(y, p) = -y^{\top}\log(p) = -\left(y_1\log(p_1) + y_2\log(p_2) + \cdots + y_n\log(p_n)\right)$$
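The formula can be sketched in Python for a single input D. The sketch assumes the true class is encoded as a one-hot list y and that p is the model's predicted distribution over the n classes (for example, a softmax output); the three-class values are hypothetical.

```python
import math

def categorical_cross_entropy(y_onehot, p):
    """-(y1*log(p1) + y2*log(p2) + ... + yn*log(pn)) for one input D."""
    if len(y_onehot) != len(p) or not math.isclose(sum(p), 1.0):
        raise ValueError("p must be a probability distribution over the same n classes as y")
    # Classes with y_i = 0 contribute nothing, so skip them (this also avoids log(0)).
    return -sum(y_i * math.log(p_i) for y_i, p_i in zip(y_onehot, p) if y_i != 0)

y = [0, 1, 0]          # hypothetical input whose true class is class 2
p = [0.2, 0.7, 0.1]    # hypothetical predicted probabilities for classes 1..3
print(round(categorical_cross_entropy(y, p), 4))
```

Because y is one-hot, only the true class's term survives, so the loss here is simply -log(0.7), about 0.357.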

Conclusion

In conclusion, the cost function serves as a monitoring tool for various algorithms and models: it quantifies the discrepancy between expected and actual results, and minimizing it guides the model's improvement.