Integrate BigQuery API

The BigQuery API lets developers use BigQuery's processing power and GoogleSQL data manipulation functions programmatically, which makes it well suited to recurring tasks.

The BigQuery API is a REST API, and Google provides client libraries for several languages, including Python, Java, Go, and Node.js.

Since Python is one of the most popular languages for data science and data analysis, this chapter will explore the BigQuery API within the context of Python.

BigQuery API Deployment Options

Just as developers can't deploy SQL directly from BigQuery Studio for production workflows, code that accesses the BigQuery API must be deployed through a relevant GCP product.

Deployment options include −

- Cloud Run

- Cloud Functions

- Virtual Machines

- Cloud Composer (Airflow)

BigQuery API Requires Authentication

Using the BigQuery API requires authentication −

- If running a script locally, it's possible to download a credential file (a JSON key) for the service account that accesses BigQuery and then set the GOOGLE_APPLICATION_CREDENTIALS environment variable to that file's path.

- If running BigQuery in a context connected to the cloud, like in a Vertex AI notebook, authentication is done automatically.

To avoid downloading a key file, GCP also supports OAuth 2.0 authentication flows for most applications.
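The local-script option above can be sketched roughly as follows. This is a minimal sketch, assuming the google-cloud-bigquery package is installed; the helper names configure_credentials and build_client and the key path are hypothetical, chosen only for illustration.

```python
import os

# Environment variable that Google's client libraries read to locate a key file.
CREDENTIALS_ENV_VAR = "GOOGLE_APPLICATION_CREDENTIALS"


def configure_credentials(key_path):
    """Point the client libraries at a downloaded service account key file.

    key_path is a hypothetical local path to the JSON key.
    """
    os.environ[CREDENTIALS_ENV_VAR] = key_path
    return os.environ[CREDENTIALS_ENV_VAR]


def build_client(project=None):
    """Create a BigQuery client using whatever credentials the environment provides.

    The import is deferred so this module loads even where the
    google-cloud-bigquery package is not installed.
    """
    from google.cloud import bigquery

    return bigquery.Client(project=project)


# Typical local usage (not executed here):
#   configure_credentials("/path/to/service-account-key.json")
#   client = build_client()
```

In a cloud-connected context such as a Vertex AI notebook, the configure_credentials step would normally be skipped and build_client() called on its own.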

Once authenticated, typical BigQuery API use cases include −

- Running a SQL script containing a CRUD operation for a given table.

- Retrieving project or dataset metadata to create monitoring frameworks.

- Running a SQL query to synthesize or enrich BigQuery data with data from another source.
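As an illustration of the metadata use case, the sketch below collects the table names in every dataset of a project. It assumes an already authenticated google.cloud.bigquery.Client is passed in; the function name summarize_datasets is hypothetical.

```python
def summarize_datasets(client):
    """Map each dataset ID in the client's project to a list of its table IDs.

    `client` is assumed to be an authenticated google.cloud.bigquery.Client.
    """
    summary = {}
    for dataset in client.list_datasets():
        # list_tables accepts the dataset item returned by list_datasets.
        tables = client.list_tables(dataset)
        summary[dataset.dataset_id] = [table.table_id for table in tables]
    return summary


# Typical usage (not executed here):
#   from google.cloud import bigquery
#   print(summarize_datasets(bigquery.Client()))
```

A dictionary like this could feed a simple monitoring check, for example alerting when an expected table is missing.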

The ".query()" Method

One of the most widely used BigQuery API methods is .query(). Paired with .to_dataframe(), which loads the query results into a pandas DataFrame, it offers a convenient way to query data and display it in a readable form.
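In text form, the pattern might look like the sketch below. It assumes an authenticated client, and that pandas is installed alongside google-cloud-bigquery (recent versions also need the db-dtypes package for to_dataframe()). The helper name query_to_dataframe and the sample query against a public dataset are illustrative choices, not taken from the original example.

```python
# Illustrative query against a BigQuery public dataset.
SAMPLE_SQL = """
SELECT name, SUM(number) AS total
FROM `bigquery-public-data.usa_names.usa_1910_2013`
GROUP BY name
ORDER BY total DESC
LIMIT 10
"""


def query_to_dataframe(client, sql):
    """Run a SQL query and return the results as a pandas DataFrame.

    `client` is assumed to be an authenticated google.cloud.bigquery.Client.
    """
    query_job = client.query(sql)      # submits the query job
    return query_job.to_dataframe()    # waits for completion and loads the rows


# Typical usage (not executed here):
#   from google.cloud import bigquery
#   df = query_to_dataframe(bigquery.Client(), SAMPLE_SQL)
#   print(df.head())
```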

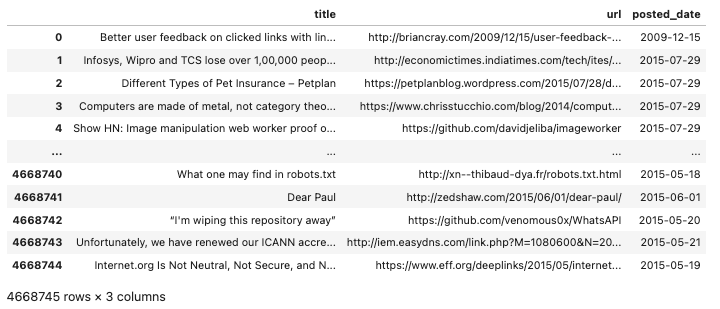

This query should fetch the following output −

The BigQuery API isn't a black box. In addition to logging (using a Google Cloud Logging client), developers can see real-time job information broken down at both a personal user and project level in the UI. To troubleshoot any failed jobs, this should be your first stop.
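The same job information can also be read through the client library. The sketch below lists recent jobs and keeps those that reported an error; it assumes an authenticated google.cloud.bigquery.Client, and the function name failed_jobs is hypothetical. Setting all_users=True requests jobs from every user in the project and needs the corresponding permission.

```python
def failed_jobs(client, max_results=20, all_users=False):
    """Return (job_id, error message) pairs for recent jobs that failed.

    `client` is assumed to be an authenticated google.cloud.bigquery.Client.
    """
    report = []
    for job in client.list_jobs(max_results=max_results, all_users=all_users):
        # error_result is None for successful jobs, otherwise a dict
        # that includes a human-readable "message".
        if job.error_result:
            report.append((job.job_id, job.error_result.get("message")))
    return report


# Typical usage (not executed here):
#   from google.cloud import bigquery
#   for job_id, message in failed_jobs(bigquery.Client()):
#       print(job_id, message)
```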