- BigQuery - Home

- BigQuery - Overview

- BigQuery - Initial Setup

- BigQuery vs Local SQL Engines

- BigQuery - Google Cloud Console

- BigQuery - Google Cloud Hierarchy

- What is Dremel?

- What is BigQuery Studio?

- BigQuery - Datasets

- BigQuery - Tables

- BigQuery - Views

- BigQuery - Create Table

- BigQuery - Basic Schema Design

- BigQuery - Alter Table

- BigQuery - Copy Table

- Delete and Recover Table

- BigQuery - Populate Table

- Standard SQL vs Legacy SQL

- BigQuery - Write First Query

- BigQuery - CRUD Operations

- Partitioning & Clustering

- BigQuery - Data Types

- BigQuery - Complex Data Types

- BigQuery - STRUCT Data Type

- BigQuery - ARRAY Data Type

- BigQuery - JSON Data Type

- BigQuery - Table Metadata

- BigQuery - User-defined Functions

- Connecting to External Sources

- Integrate Scheduled Queries

- Integrate BigQuery API

- BigQuery - Integrate Airflow

- Integrate Connected Sheets

- Integrate Data Transfers

- BigQuery - Materialized View

- BigQuery - Roles & Permissions

- BigQuery - Query Optimization

- BigQuery - BI Engine

- Monitoring Usage & Performance

- BigQuery - Data Warehouse

- Challenges & Best Practices

BigQuery - Integrate Airflow

Running a Python script to load a BigQuery table can be helpful for an individual job. However, when a developer needs to create several sequential tasks, isolated solutions aren't optimal. Therefore, it is necessary to think beyond simple execution. Orchestration is required.

BigQuery can be integrated with several popular orchestration solutions like Airflow and DBT. However, this tutorial will highlight Airflow.

Directed Acrylic Graphs (DAG)

Apache Airflow allows developers to create execution blocks called Directed Acrylic Graphs (DAG). Each DAG is comprised of many tasks.

Each task requires an operator. There are two important BigQuery-compatible operators −

- BigQueryCheck Operator

- BigQueryExecuteQuery Operator

BigQueryCheck Operator

The BigQueryCheckOperator allows developers to conduct upstream checks to determine whether or not data has been updated for the day.

If the table does not have an upload timestamp included in its schema, it is possible to query metadata (as discussed earlier).

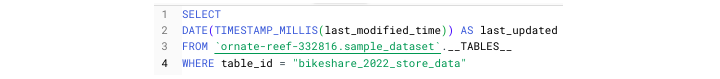

Developers can determine the time a table was last updated by running a version of this query −

BigQueryExecuteQuery Operator

To execute SQL scripts that depend on upstream data, SQL developers can use the BigQueryExecuteQuery operator to create a load job.

A deeper explanation of Airflow is beyond the scope of this tutorial, but GCP provides extensive documentation for those who would like to learn more.