- BigQuery - Home

- BigQuery - Overview

- BigQuery - Initial Setup

- BigQuery vs Local SQL Engines

- BigQuery - Google Cloud Console

- BigQuery - Google Cloud Hierarchy

- What is Dremel?

- What is BigQuery Studio?

- BigQuery - Datasets

- BigQuery - Tables

- BigQuery - Views

- BigQuery - Create Table

- BigQuery - Basic Schema Design

- BigQuery - Alter Table

- BigQuery - Copy Table

- Delete and Recover Table

- BigQuery - Populate Table

- Standard SQL vs Legacy SQL

- BigQuery - Write First Query

- BigQuery - CRUD Operations

- Partitioning & Clustering

- BigQuery - Data Types

- BigQuery - Complex Data Types

- BigQuery - STRUCT Data Type

- BigQuery - ARRAY Data Type

- BigQuery - JSON Data Type

- BigQuery - Table Metadata

- BigQuery - User-defined Functions

- Connecting to External Sources

- Integrate Scheduled Queries

- Integrate BigQuery API

- BigQuery - Integrate Airflow

- Integrate Connected Sheets

- Integrate Data Transfers

- BigQuery - Materialized View

- BigQuery - Roles & Permissions

- BigQuery - Query Optimization

- BigQuery - BI Engine

- Monitoring Usage & Performance

- BigQuery - Data Warehouse

- Challenges & Best Practices

BigQuery - Populate Table

When first learning BigQuery, one of the quickest ways to immediately use its functions and write SQL queries is to provide an existing data source. One of the easiest data sources to upload and begin working with is a static file.

File Types Accepted in BigQuery

BigQuery considers any table created from a file as an external table.

BigQuery accepts file inputs from either a local upload or cloud storage within GCP's Google Cloud Storage.

File types accepted include −

- CSV (comma separated value)

- JSONL (newline delimited JSON)

- Parquet

- Avro

- ORC

Just like creating an empty table, to populate data, it is necessary to specify a schema. As a reminder, the schema can either be inferred (auto-generated) or provided in the UI or as a JSON file.

Check if BigQuery Supports the Data Types

One of the challenges of working with external files, especially CSVs, is that BigQuery is particular about certain data types.

Before uploading a file, it is a good idea to check BigQuery supports the data types and adjust the input accordingly. For example, BigQuery is very particular about how it ingests timestamp data, preferring that each value have two integers rather than one, even for abbreviated dates.

How does BigQuery Avoid File Upload Errors?

Another challenge developers encounter when loading a file into any SQL table is the presence of special characters, like the newline character "\n." While it is always wise to remove these characters and sufficiently clean the data programmatically, BigQuery provides ways to filter out or entirely ignore lines that may result in a file upload error.

- First, BigQuery allows developers to specify an integer value representing how many errors to allow. This can be helpful if there is one particular bad row that doesn't contain any consequential data.

- Additionally, BigQuery provides a parameter for skipping header rows, including quoted newlines and allowing jagged or malformed rows.

Unfortunately, even with these options, the only way to determine if a file will upload is a bit of trial, error and iteration.

BigQuery Studio: Populate a Table from a SQL Statement

Depending on the situation, there are two ways to populate a table from "scratch", a.k.a. a simple SQL statement.

1. The CREATE OR REPLACE Command

The first, CREATE OR REPLACE, has already been covered. In this case, a developer is creating an entirely new table and must define the table schema in addition to the table content. Typically CREATE OR REPLACE is best used when aggregating or expanding upon data that already exists in a SQL table.

2. The INSERT Command

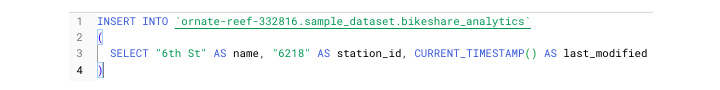

If you find yourself with the "shell" of a table, including a well-defined schema but no data added yet and you don't have a source like an external file or an API payload, then the way you add data is through the INSERT command.

In this case, to properly use INSERT, it's necessary to not just define the columns, types and modes, but also to provide the data being inserted.

Defining data within the confines of SQL involves supplying the value and column alias. For instance −

3. The UNION Command

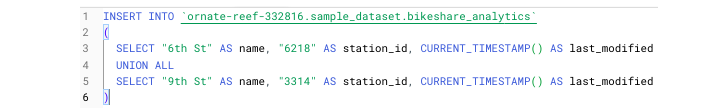

To add more than one row, use the UNION command. For those unfamiliar with UNION, it is similar to Pandas' concat and essentially "stacks" row entries on top of one another as opposed to joining on a common key.

There are two kinds of UNIONs −

- UNION ALL

- UNION DISTINCT

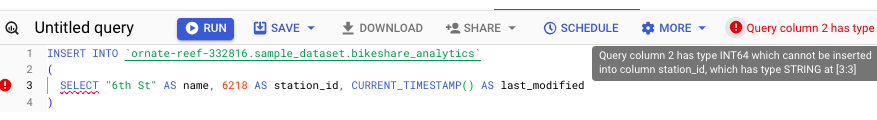

To ensure your data is properly inserted using UNION, it's necessary to ensure that data types agree with each other. For instance, since I provided a STRING value for station_id, I couldn't then change the type to an INTEGER for the following rows.

In professional environments, INSERT is not used to insert one row at a time. Instead, INSERT is commonly used to insert partitions of data, typically by date, i.e., the most recent day's data.

Populate a Table from a Connected Sheet

As is evident by now, querying in BigQuery is different from writing SQL in a local environment due in part to the ability of developers to synchronize BigQuery data with GCP's cloud-based tools. One of the most powerful and intuitive integrations is using BigQuery in combination with Google Sheets.

Exploring the dropdown of external table sources will reveal "Sheets" as a source developers can use to populate a table with data.

Google Sheet as a Primary Data Source

The reason using a Google Sheet as a primary data source is so useful is because, unlike a static CSV, Sheets are dynamic entities. This means that any changes made to the rows within the connected sheet will be reflected in the associated BigQuery tableall in real-time.

To connect a Google Sheet to a BigQuery table, follow the original create table process. Instead of selecting Create Table from Upload, you'll need to select "Drive" as the source. Even though Google Sheets are created in the Sheets UI, they live in Google Drive.

Like CSVs, connected sheets must have defined headers. However, unlike CSVs, developers can specify which columns to include and omit.

The syntax is similar to creating and executing Excel formulas. Simply write the column letter and row number. To select all rows after a given row, preface your column with an exclamation point.

Like: !A2:M

Using the same syntax, you can even select different tabs within a provided sheet. For example −

"Sheet2 !A2:M"

Like working with CSVs, you can specify which rows and headers to ignore and whether to allow quoted new lines or jagged rows.

An Important Caveat

DML statements like INSERT or DROP do not work on connected sheets. To omit a column, you would need to hide it in your sheet or specify this in your initial configuration.