Data Structure

Data Structure Networking

Networking RDBMS

RDBMS Operating System

Operating System Java

Java MS Excel

MS Excel iOS

iOS HTML

HTML CSS

CSS Android

Android Python

Python C Programming

C Programming C++

C++ C#

C# MongoDB

MongoDB MySQL

MySQL Javascript

Javascript PHP

PHP

- Selected Reading

- UPSC IAS Exams Notes

- Developer's Best Practices

- Questions and Answers

- Effective Resume Writing

- HR Interview Questions

- Computer Glossary

- Who is Who

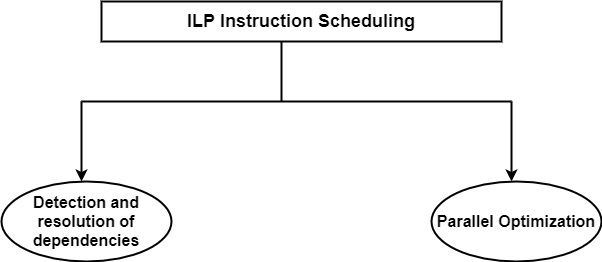

What are the approaches of Instruction Scheduling?

When instructions are processed in parallel, dependencies between them must be detected and resolved. Dependency detection and resolution can be discussed separately with respect to processor classes and to the processing tasks involved.

An instruction is resource-dependent on an issued instruction if it needs a hardware resource that is already being used by a previously issued instruction. For instance, if only a single non-pipelined division unit is available, as is usual in ILP-processors, then in a code sequence containing two division instructions the second division is resource-dependent on the first and cannot be executed in parallel with it.

Resource dependencies are constraints caused by limited resources such as execution units (EUs), buses, or buffers. They can reduce the degree of parallelism achievable at various stages of processing, including instruction decoding, issue, renaming, and execution.
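The resource-dependency test described above can be sketched as follows. This is a minimal illustration, not a processor model: the `Instr` class, the `resource_dependent` function, and unit names such as `"div"` are hypothetical, and every unit is assumed to be non-pipelined, so one copy serves only one in-flight instruction at a time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    text: str
    unit: str  # hypothetical name of the functional unit needed, e.g. "div"


def resource_dependent(candidate, issued, unit_counts):
    """True if every copy of the unit `candidate` needs is held by a previously
    issued instruction that is still executing (units assumed non-pipelined).
    A unit missing from `unit_counts` counts as zero available copies."""
    in_use = sum(1 for ins in issued if ins.unit == candidate.unit)
    return in_use >= unit_counts.get(candidate.unit, 0)
```

With a single divider (`{"div": 1}`), a second division checked while the first is still executing is reported as resource-dependent; with two dividers (`{"div": 2}`) it is not.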

Approaches of Instruction Scheduling

There are two basic approaches: static and dynamic. Static detection and resolution are accomplished by the compiler, which avoids dependencies by reordering the code. The output of the compiler is therefore reordered, dependency-free code. VLIW processors always expect dependency-free code, whereas pipelined and superscalar processors usually do not.
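A minimal sketch of the static approach is a greedy list scheduler that packs a straight-line block into bundles of mutually independent instructions, as a VLIW compiler might. It rests on simplifying assumptions: `Instr`, `depends_on`, and `schedule_static` are hypothetical names; the block has no branches (so control dependencies are ignored); every instruction takes one cycle; and the hypothetical `width` parameter is the only resource limit (unit types are not modeled).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    text: str
    dest: str    # register written
    srcs: tuple  # registers read


def depends_on(later, earlier):
    """True if `later` must be placed in a bundle after `earlier`."""
    return (earlier.dest in later.srcs      # read-after-write (true dependency)
            or later.dest in earlier.srcs   # write-after-read (anti-dependency)
            or later.dest == earlier.dest)  # write-after-write (output dependency)


def schedule_static(block, width):
    """Greedily place each instruction of `block` (in program order) into the
    earliest bundle that follows all instructions it depends on and still has
    a free slot. Returns a list of bundles, each a list of instruction texts."""
    placed = {}  # instruction index -> bundle number
    bundles = []
    for i, ins in enumerate(block):
        earliest = max((placed[j] + 1 for j in range(i)
                        if depends_on(ins, block[j])), default=0)
        b = earliest
        while b < len(bundles) and len(bundles[b]) >= width:
            b += 1  # bundle full: the slot limit pushes the instruction later
        while len(bundles) <= b:
            bundles.append([])
        bundles[b].append(ins.text)
        placed[i] = b
    return bundles
```

For example, with `width=2` the block `div r1,r2,r3; add r4,r1,r5; sub r6,r7,r8; mul r9,r6,r4` becomes three bundles: `div` together with `sub`, then `add`, then `mul`. The independent `sub` is moved ahead of `add`, which is the kind of reordering that turns sequential code into dependency-free code.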

In contrast, dynamic detection and resolution of dependencies are performed by the processor. If dependencies have to be detected in connection with instruction issue, the processor typically maintains two sliding windows.

The issue window contains all fetched instructions that are intended for issue in the next cycle, while instructions that are still executing and whose results have not yet been produced are kept in an execution window. In each cycle, every instruction in the issue window is checked for data, control, and resource dependencies with respect to the instructions in execution.
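The per-cycle check over the two windows can be sketched as below. It is a simplified model under stated assumptions: `Op`, `conflicts`, and `issuable` are hypothetical names; both windows are plain lists in program order; units are non-pipelined and counted per type; any unresolved branch (an op on the hypothetical `"branch"` unit) that is executing or held back blocks all later ops, as a crude stand-in for control dependencies; and data dependencies are checked conservatively (read-after-write, write-after-read, write-after-write). Ops that fail the check stay behind, and later ops are also checked against them, so skipping one op never lets a dependent op overtake it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Op:
    text: str
    unit: str             # hypothetical functional-unit name: "alu", "div", "branch", ...
    dest: Optional[str]   # register written, or None (e.g. a branch)
    srcs: tuple = ()      # registers read


def conflicts(op, other):
    """Conservative data-dependency test between two ops (RAW, WAR or WAW)."""
    raw = other.dest is not None and other.dest in op.srcs
    war = op.dest is not None and op.dest in other.srcs
    waw = op.dest is not None and op.dest == other.dest
    return raw or war or waw


def issuable(issue_window, exec_window, unit_counts):
    """Return the ops of the issue window that may issue in this cycle."""
    in_flight = list(exec_window)  # ops in execution, plus ops selected this cycle
    held_back = []                 # earlier issue-window ops that must wait
    selected = []
    for op in issue_window:        # issue window is kept in program order
        older = in_flight + held_back
        control = any(o.unit == "branch" for o in older)
        data = any(conflicts(op, o) for o in older)
        busy = sum(1 for o in in_flight if o.unit == op.unit)
        resource = busy >= unit_counts.get(op.unit, 0)
        if control or data or resource:
            held_back.append(op)
        else:
            selected.append(op)
            in_flight.append(op)
    return selected
```

With two ALUs, one divider, and an executing `div r1, r2, r3`, an issue window of `add r4, r1, r5`, `div r6, r7, r8`, and `sub r9, r7, r8` would let only the `sub` issue: the `add` waits on `r1` (data dependency) and the second `div` waits for the divider (resource dependency).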

Advantages of Dynamic Detection

It can handle cases in which dependencies are unknown at compile time.

It simplifies the compiler.

It allows code that was compiled with one pipeline in mind to run efficiently on a different pipeline.

The performance of an ILP-processor can be increased significantly by parallel code optimization. If dependencies are detected and resolved statically by a compiler, the compiler obviously has to be extended to perform parallel code optimization as well.

If dependency detection and resolution are dynamic, parallel code optimization still has to be carried out beforehand by the compiler, in connection with object code generation. Parallel optimization goes beyond traditional sequential code optimization: it is achieved by reordering the instruction sequence through code transformations suited to parallel execution.

In static scheduling supported by parallel code optimization, it is solely the compiler's task to detect and resolve data, control, and resource dependencies during code generation. The compiler also performs parallel code optimization; that is, it produces dependency-free, optimized code for parallel ILP-execution.