Discuss the Associative Mapping in Computer Architecture?

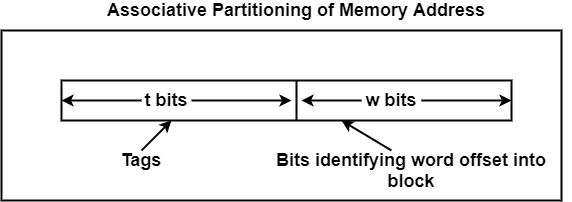

In associative mapping, any block of main memory can be placed in any line of the cache. The main memory address is split into two parts, a tag and a word ID, as shown in the figure. To check whether a block is stored in the cache, the tag is extracted from the memory address and compared against the tags of all the cache lines to see if the block is present.
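The address split and tag search described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 2-bit word ID (`WORD_BITS`), the function names `split_address` and `lookup`, and the representation of the cache as a plain list of tags are all hypothetical choices, not taken from the figure.

```python
WORD_BITS = 2  # assumption: 4 words per block, so the word ID is 2 bits wide


def split_address(address, word_bits=WORD_BITS):
    """Split a memory address into (tag, word_id) for associative mapping."""
    tag = address >> word_bits                   # upper bits identify the block
    word_id = address & ((1 << word_bits) - 1)   # lower bits select the word
    return tag, word_id


def lookup(cache_tags, address, word_bits=WORD_BITS):
    """Return the index of the line holding the block, or None on a miss.

    cache_tags is a list with one stored tag per line (None = empty line).
    This loop is only a sequential software stand-in for the tag comparison.
    """
    tag, _ = split_address(address, word_bits)
    for index, stored_tag in enumerate(cache_tags):
        if stored_tag == tag:
            return index
    return None
```

For example, with these assumptions the address `0b101101` splits into tag `0b1011` and word ID `0b01`, and `lookup([None, 0b1011, 7], 0b101101)` finds the block in line 1.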

Searching every line of the cache for a block may seem like a slow process, but it is not. Each line of the cache has its own compare circuitry, which can quickly determine whether or not the block is held in that line. Because all of the lines perform this comparison in parallel, the correct line is identified quickly.

This mapping technique is designed to solve a problem that exists with direct mapping, where two active blocks of memory can map to the same line of the cache. When this happens, neither block can stay in the cache for long, because each is quickly replaced by the competing block. This leads to a condition referred to as thrashing.

In thrashing, a line in the cache goes back and forth between two or more blocks, usually replacing a block before the processor has finished using it. Thrashing can be avoided by allowing a block of memory to map to any line of the cache.
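A small simulation can make the thrashing effect concrete. The sketch below is hypothetical: the function names, the 4-line cache size, and the alternating access pattern are assumptions chosen for illustration, and the associative version evicts the oldest block when full simply to keep the example short.

```python
def count_misses_direct(block_sequence, num_lines):
    """Count misses in a direct-mapped cache (line = block number mod num_lines)."""
    lines = [None] * num_lines
    misses = 0
    for block in block_sequence:
        index = block % num_lines
        if lines[index] != block:
            misses += 1
            lines[index] = block  # the competing block is overwritten
    return misses


def count_misses_associative(block_sequence, num_lines):
    """Count misses in a fully associative cache (a block may use any line)."""
    cached = []  # blocks currently held, oldest first
    misses = 0
    for block in block_sequence:
        if block not in cached:
            misses += 1
            if len(cached) == num_lines:
                cached.pop(0)  # assumption: evict the oldest block when full
            cached.append(block)
    return misses


# Blocks 0 and 4 both map to line 0 of a 4-line direct-mapped cache.
sequence = [0, 4] * 5
direct_misses = count_misses_direct(sequence, 4)
associative_misses = count_misses_associative(sequence, 4)
```

With this access pattern every one of the ten accesses misses in the direct-mapped cache, while the associative cache misses only on the first load of each block.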

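Because a block can be placed in any line, a replacement algorithm must choose which block to evict when the cache is full. There are several replacement algorithms, and none of them is universally better than the others. To achieve the fastest operation, each of these algorithms is implemented in hardware.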

- Least Recently Used (LRU) − This approach replaces the block that has gone the longest time without being accessed by the processor.

- First In First Out (FIFO) − This approach replaces the block that has been in the cache the longest.

- Least Frequently Used (LFU) − This approach replaces the block that has had the fewest hits since being loaded into the cache.

- Random − This approach randomly chooses a block to be replaced. Its performance is only slightly lower than that of LRU, FIFO, or LFU.
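The four policies listed above can be compared with a small software model. This is a minimal sketch, not how the hardware is built: the class name `AssociativeCache`, the per-line bookkeeping fields, and the use of an access counter as a clock are all assumptions made for illustration.

```python
import random


class AssociativeCache:
    """Toy fully associative cache with a selectable replacement policy."""

    def __init__(self, num_lines, policy="LRU", seed=None):
        self.num_lines = num_lines
        self.policy = policy
        self.lines = {}    # tag -> bookkeeping for the line holding that block
        self.clock = 0     # counts accesses; stands in for time
        self.rng = random.Random(seed)

    def _victim(self):
        """Pick the tag of the block to evict under the chosen policy."""
        tags = list(self.lines)
        if self.policy == "LRU":     # longest time since last access
            return min(tags, key=lambda t: self.lines[t]["last_used"])
        if self.policy == "FIFO":    # longest time in the cache
            return min(tags, key=lambda t: self.lines[t]["loaded"])
        if self.policy == "LFU":     # fewest hits since being loaded
            return min(tags, key=lambda t: self.lines[t]["hits"])
        if self.policy == "RANDOM":  # any block, chosen at random
            return self.rng.choice(tags)
        raise ValueError(f"unknown policy: {self.policy}")

    def access(self, tag):
        """Access a block by tag; return True on a hit, False on a miss."""
        self.clock += 1
        if tag in self.lines:
            entry = self.lines[tag]
            entry["last_used"] = self.clock
            entry["hits"] += 1
            return True
        if len(self.lines) == self.num_lines:
            del self.lines[self._victim()]
        self.lines[tag] = {"loaded": self.clock,
                           "last_used": self.clock,
                           "hits": 0}
        return False
```

As a usage example, take a 2-line cache and the access sequence 1, 2, 1, 3. When block 3 arrives, LRU and LFU both evict block 2 (block 1 was used more recently and has one hit), while FIFO evicts block 1 because it was loaded first.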