
CIFAR-10 Image Classification in TensorFlow

Image classification is an essential task in computer vision that involves recognizing and categorizing images based on their content. CIFAR-10 is a well-known dataset of 60,000 32×32 color images in 10 classes, with 6,000 images per class, split into 50,000 training images and 10,000 test images.

TensorFlow is a powerful open-source framework that provides a variety of tools and APIs for building and training machine learning models. It is widely used for deep learning and is backed by a large developer community. TensorFlow ships with Keras (tf.keras), a high-level API that makes it easy to build and train deep neural networks.

In this tutorial, we will explore how to perform image classification on CIFAR-10 using TensorFlow and Keras.

Loading the Data

The first step in any machine learning project is to prepare the data. CIFAR-10 can be downloaded with the built-in tf.keras.datasets module, which fetches the dataset on first use and caches it locally.

Let's start by importing the necessary modules:

```python
import tensorflow as tf
from tensorflow.keras.datasets import cifar10
```

Next, we load the CIFAR-10 dataset with the load_data() function from the cifar10 module:

```python
# Load the CIFAR-10 dataset
(train_images, train_labels), (test_images, test_labels) = cifar10.load_data()
```

This code loads the training and test images and their respective labels into four NumPy arrays. The train_images and test_images arrays contain the images themselves, while the train_labels and test_labels arrays contain the corresponding labels (i.e., integers from 0 to 9 representing the 10 classes).
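To make the array layout concrete, here is a minimal sketch that uses small random stand-in arrays (hypothetical data, not the real dataset) with the same shapes and dtypes that cifar10.load_data() returns: images of shape (N, 32, 32, 3) with dtype uint8, and labels of shape (N, 1). The extra trailing dimension on the labels is why the plotting code below indexes train_labels[i][0].

```python
import numpy as np

class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer',
               'dog', 'frog', 'horse', 'ship', 'truck']

# Hypothetical stand-ins shaped like the CIFAR-10 arrays
# (the real training split has 50,000 rows instead of 8)
rng = np.random.default_rng(seed=0)
images = rng.integers(0, 256, size=(8, 32, 32, 3)).astype(np.uint8)
labels = rng.integers(0, 10, size=(8, 1)).astype(np.uint8)

print(images.shape, images.dtype)  # (8, 32, 32, 3) uint8
print(labels.shape)                # (8, 1): one label per row, as a column

# Flatten the (N, 1) column into a 1-D vector of class indices
flat_labels = labels.ravel()
print(flat_labels.shape)           # (8,)

# Look up a human-readable name for each label
names = [class_names[i] for i in flat_labels]
print(names)
```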

It's always a good idea to visualize a few examples from the dataset to get a sense of what we're working with:

```python
import matplotlib.pyplot as plt
import numpy as np

# Class names in label order (index 0 = 'airplane', ..., 9 = 'truck')
class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer',
               'dog', 'frog', 'horse', 'ship', 'truck']

# Plot the first 25 training images in a 5x5 grid
plt.figure(figsize=(10, 10))
for i in range(25):
    plt.subplot(5, 5, i + 1)
    plt.xticks([])
    plt.yticks([])
    plt.grid(False)
    plt.imshow(train_images[i])  # RGB image, so no colormap is needed
    # Labels have shape (N, 1), hence the [0] to get the integer class index
    plt.xlabel(class_names[train_labels[i][0]])
plt.show()
```

This will display a grid of 25 images from the training set, along with their corresponding labels.

Preprocessing the Data

Before we can train a model on the CIFAR-10 dataset, we need to preprocess the data in two steps:

Normalize the Pixel Values

The pixel values in the images are integers from 0 to 255. Scaling them down to the range 0 to 1 keeps the network's inputs small and consistently scaled, which generally makes gradient-based training faster and more stable:

```python
# Scale pixel values from [0, 255] to [0, 1]
train_images, test_images = train_images / 255.0, test_images / 255.0
```
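A small NumPy sketch of what the division does to a uint8 array (the three pixel values are made up for illustration). Dividing by the Python float 255.0 produces float64; the arithmetic at the end shows why casting to float32 first, which is also Keras' default float type, halves the memory needed for the 50,000 training images.

```python
import numpy as np

# A hypothetical 1x1x3 "image" holding the extreme and middle pixel values
pixels = np.array([[[0, 128, 255]]], dtype=np.uint8)

scaled = pixels / 255.0
print(scaled.dtype)                # float64
print(scaled.min(), scaled.max())  # 0.0 1.0

# Casting first keeps the result in float32
scaled32 = pixels.astype(np.float32) / 255.0
print(scaled32.dtype)              # float32

# Memory for the full training set: 50,000 images of 32x32x3 values
num_values = 50_000 * 32 * 32 * 3
print(num_values * 8 / 1e6, "MB as float64")  # 1228.8 MB
print(num_values * 4 / 1e6, "MB as float32")  # 614.4 MB
```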

One-hot Encode the Labels

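The labels in the CIFAR-10 dataset are integers from 0 to 9. Because we will train with the categorical_crossentropy loss, which expects one-hot encoded targets, we convert each integer into a 10-element vector with a 1 at the class index and 0 elsewhere. Keras provides a convenient function for this (alternatively, the sparse_categorical_crossentropy loss accepts the integer labels directly):

```python
# Convert integer labels of shape (N, 1) to one-hot vectors of shape (N, 10)
train_labels = tf.keras.utils.to_categorical(train_labels, num_classes=10)
test_labels = tf.keras.utils.to_categorical(test_labels, num_classes=10)
```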
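For intuition, the NumPy sketch below builds the same kind of one-hot matrix by picking rows of an identity matrix. It illustrates the result, not how to_categorical is implemented internally; the four labels are hypothetical.

```python
import numpy as np

# Hypothetical integer labels in CIFAR-10's (N, 1) layout
labels = np.array([[6], [9], [9], [4]], dtype=np.uint8)

# Row k of the 10x10 identity matrix is the one-hot vector for class k
one_hot = np.eye(10, dtype=np.float32)[labels.ravel()]

print(one_hot.shape)   # (4, 10)
print(one_hot[0])      # 1.0 in position 6, zeros elsewhere

# argmax along each row recovers the original class index
recovered = one_hot.argmax(axis=1)
print(recovered)       # [6 9 9 4]
```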

Building the Model

Now that we have preprocessed the data, we can start building our model. We will use a convolutional neural network (CNN), which is a type of neural network that is particularly well-suited for image classification tasks.

Here's the architecture we will use for our CIFAR-10 model:

Convolutional Layers: We use three 3×3 convolutional layers with 32, 64 and 64 filters and ReLU activations, with a 2×2 max pooling layer after each of the first two. The convolutional layers learn features from the input images, while the max pooling layers downsample their output.

Flatten Layer: The output of the last convolutional layer is flattened into a 1D vector, which is passed to the fully connected layers.

Fully Connected Layer: A dense layer with 64 neurons and a ReLU activation combines the features learned by the convolutional layers.

Output Layer: Finally, a dense layer with 10 neurons (one for each class) and a softmax activation produces the final class probabilities.
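The softmax in the output layer turns the ten raw scores (logits) produced by the last layer into probabilities that are positive and sum to 1, without changing their ranking. A minimal NumPy sketch with made-up logits:

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    # Subtracting the max does not change the result but avoids overflow in exp
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exps = np.exp(shifted)
    return exps / np.sum(exps, axis=-1, keepdims=True)

# Hypothetical logits for one image, one score per CIFAR-10 class
logits = np.array([2.0, 1.0, 0.1, -1.0, 0.5, 0.0, -0.5, 0.3, 1.5, -2.0])
probs = softmax(logits)

print(probs.sum())        # 1.0 (up to floating-point rounding)
print(np.argmax(probs))   # 0: the largest logit keeps the largest probability
```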

Here's the code to build this model:

```python
# Define the CNN model
model = tf.keras.models.Sequential([
    # Feature extractor: 3x3 convolutions with 2x2 max pooling in between
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    # Classifier: flatten the feature maps, then fully connected layers
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(64, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')  # one probability per class
])
```
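The layer output shapes and parameter counts follow from simple arithmetic: a 3×3 convolution with Conv2D's default 'valid' padding shrinks each spatial side by 2, a 2×2 max pool halves it (rounding down), a Conv2D layer has (3·3·in_channels + 1)·filters weights, and a Dense layer has (inputs + 1)·units. The pure-Python sketch below walks through the model above; its total of 122,570 parameters should agree with what model.summary() reports.

```python
def conv_valid(size, kernel=3):
    # 'valid' padding, stride 1: output side = input side - kernel + 1
    return size - kernel + 1

def pool(size, window=2):
    # Non-overlapping pooling window; leftover rows/cols are dropped
    return size // window

side, channels, total = 32, 3, 0
for filters, pool_after in [(32, True), (64, True), (64, False)]:
    params = (3 * 3 * channels + 1) * filters   # kernel weights + one bias per filter
    side, channels = conv_valid(side), filters
    total += params
    print(f"Conv2D({filters}): {side}x{side}x{channels}, {params} params")
    if pool_after:
        side = pool(side)
        print(f"MaxPooling2D: {side}x{side}x{channels}")

flat = side * side * channels
print("Flatten:", flat)                          # 4 * 4 * 64 = 1024

for units in (64, 10):
    params = (flat + 1) * units                  # weights + biases
    total += params
    print(f"Dense({units}): {params} params")
    flat = units

print("Total params:", total)                    # 122570
```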

Compiling and Training the Model

Now that we have defined our model, we need to compile it and train it on the CIFAR-10 dataset. The compile() method specifies the optimizer, the loss function and the metrics to track during training:

```python
# Compile the model
model.compile(optimizer='adam',
              loss='categorical_crossentropy',
              metrics=['accuracy'])
```

We use the Adam optimizer, a popular variant of stochastic gradient descent (SGD) that adapts the step size for each parameter using running averages of the gradients and of their squares. The categorical_crossentropy loss is the standard choice for multi-class classification with one-hot labels. Finally, the accuracy metric is reported after each epoch so we can monitor performance; it is not used by the optimizer.
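Categorical cross-entropy for a single example is -Σ y_k log p_k, where y is the one-hot label and p the softmax output; because y has a single 1, this reduces to minus the log of the probability assigned to the true class. The NumPy sketch below uses made-up probabilities and also shows a useful reference point: a model that guesses uniformly over 10 classes has a loss of ln 10 ≈ 2.303, so the first-epoch training loss of about 1.77 in the log below is already better than chance. The clipping in the function is a common safeguard against log(0), assumed here for illustration.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean categorical cross-entropy over a batch of one-hot labels."""
    # Clip probabilities away from 0 and 1 so log() stays finite
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=-1)))

# One hypothetical example whose true class is index 2 ('bird')
y_true = np.zeros((1, 10))
y_true[0, 2] = 1.0
y_pred = np.full((1, 10), 0.3 / 9)   # 0.3 spread over the 9 wrong classes
y_pred[0, 2] = 0.7                   # 0.7 on the true class

print(categorical_crossentropy(y_true, y_pred))   # about 0.357, i.e. -ln(0.7)

# Uniform guessing over 10 classes
uniform = np.full((1, 10), 0.1)
print(categorical_crossentropy(y_true, uniform))  # about 2.303, i.e. ln(10)
```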

To train the model, we call the fit() method and pass in the training images and labels:

```python
# Train for 10 epochs, reporting metrics on the test set after each epoch
history = model.fit(train_images, train_labels, epochs=10,
                    validation_data=(test_images, test_labels))
```

In the above code, we train our model for 10 epochs on the training data and pass the test data as validation data, so the val_loss and val_accuracy values in the log are measured on the test set. The `fit()` method returns a `History` object that records the loss and accuracy values at each epoch.

Here is the training log printed by fit():

```text
Epoch 1/10
1563/1563 [==============================] - 55s 34ms/step - loss: 1.7739 - accuracy: 0.3845 - val_loss: 1.4289 - val_accuracy: 0.4986
Epoch 2/10
1563/1563 [==============================] - 62s 40ms/step - loss: 1.2955 - accuracy: 0.5384 - val_loss: 1.2574 - val_accuracy: 0.5585
Epoch 3/10
1563/1563 [==============================] - 57s 36ms/step - loss: 1.1365 - accuracy: 0.6024 - val_loss: 1.1261 - val_accuracy: 0.6079
Epoch 4/10
1563/1563 [==============================] - 56s 36ms/step - loss: 1.0434 - accuracy: 0.6355 - val_loss: 1.0228 - val_accuracy: 0.6490
Epoch 5/10
1563/1563 [==============================] - 57s 36ms/step - loss: 0.9579 - accuracy: 0.6663 - val_loss: 1.0293 - val_accuracy: 0.6466
Epoch 6/10
1563/1563 [==============================] - 56s 36ms/step - loss: 0.8967 - accuracy: 0.6868 - val_loss: 1.0676 - val_accuracy: 0.6463
Epoch 7/10
1563/1563 [==============================] - 50s 32ms/step - loss: 0.8372 - accuracy: 0.7088 - val_loss: 1.0286 - val_accuracy: 0.6571
Epoch 8/10
1563/1563 [==============================] - 56s 36ms/step - loss: 0.7923 - accuracy: 0.7266 - val_loss: 1.0569 - val_accuracy: 0.6498
Epoch 9/10
1563/1563 [==============================] - 50s 32ms/step - loss: 0.7490 - accuracy: 0.7413 - val_loss: 1.0367 - val_accuracy: 0.6585
Epoch 10/10
1563/1563 [==============================] - 59s 38ms/step - loss: 0.7065 - accuracy: 0.7548 - val_loss: 1.0404 - val_accuracy: 0.6713
```
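The History object's history attribute is a dict that maps each metric name ('loss', 'accuracy', 'val_loss', 'val_accuracy') to a list with one value per epoch. The sketch below rebuilds that dict by hand from the log above, so it runs without TensorFlow, and reads two things from it. First, 1563 steps per epoch is ceil(50,000 / 32), since fit() uses a default batch size of 32. Second, val_loss is lowest at epoch 4 while the training loss keeps falling, an early sign of overfitting.

```python
import math

# Values copied from the training log above; after a real run you would
# use history.history instead of this hand-built dict
history_dict = {
    "loss":         [1.7739, 1.2955, 1.1365, 1.0434, 0.9579, 0.8967, 0.8372, 0.7923, 0.7490, 0.7065],
    "accuracy":     [0.3845, 0.5384, 0.6024, 0.6355, 0.6663, 0.6868, 0.7088, 0.7266, 0.7413, 0.7548],
    "val_loss":     [1.4289, 1.2574, 1.1261, 1.0228, 1.0293, 1.0676, 1.0286, 1.0569, 1.0367, 1.0404],
    "val_accuracy": [0.4986, 0.5585, 0.6079, 0.6490, 0.6466, 0.6463, 0.6571, 0.6498, 0.6585, 0.6713],
}

# Steps per epoch: 50,000 training images in batches of 32 (the fit() default)
steps = math.ceil(50_000 / 32)
print("steps per epoch:", steps)   # 1563

# Epochs are 1-based in the log, list indices are 0-based
epochs = range(len(history_dict["loss"]))
best_val_loss_epoch = min(epochs, key=lambda i: history_dict["val_loss"][i]) + 1
best_val_acc_epoch = max(epochs, key=lambda i: history_dict["val_accuracy"][i]) + 1
final_gap = history_dict["accuracy"][-1] - history_dict["val_accuracy"][-1]

print("lowest val_loss at epoch", best_val_loss_epoch)       # 4
print("highest val_accuracy at epoch", best_val_acc_epoch)   # 10
print(f"train/val accuracy gap after epoch 10: {final_gap:.4f}")  # 0.0835
```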

Evaluating the Model

After training the model, we can evaluate its performance on the test set using the evaluate method

# Evaluate the model on the test set

test_loss, test_acc = model.evaluate(test_images, test_labels)

print('Test accuracy:', test_acc)

This will print the test accuracy of our model, which indicates how well it can classify images that it has never seen before.

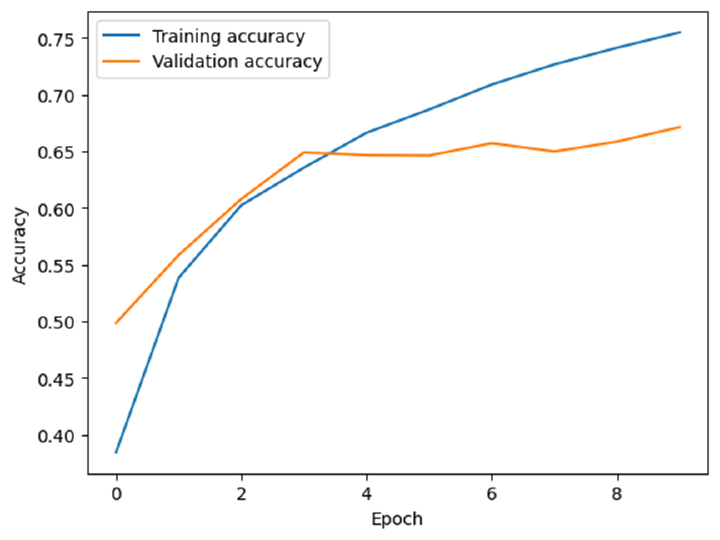

We can also visualize the training and validation accuracy over time using Matplotlib

# Plot the training and validation accuracy over time

plt.plot(history.history['accuracy'], label='accuracy')

plt.plot(history.history['val_accuracy'], label='val_accuracy')

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.ylim([0.5, 1])

plt.legend(loc='lower right')

plt.show()

Here's an example of what the accuracy curves might look like

313/313 [==============================] - 3s 8ms/step - loss: 1.0404 - accuracy: 0.6713 Test accuracy: 0.6712999939918518

This will display a plot of the training and validation accuracy over the 10 epochs of training. We can see that our model achieves a training accuracy of around 75% and a validation accuracy of around 67%, which is not bad considering the small size of the CIFAR-10 dataset.
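The accuracy that evaluate() reports for this model is the fraction of test images whose highest-probability class matches the true class. A minimal NumPy sketch on three made-up predictions; with the real model, y_prob would come from model.predict(test_images) and y_true would be the one-hot test_labels. The 313 steps in the evaluation output are likewise ceil(10,000 / 32) batches at the default batch size of 32.

```python
import math
import numpy as np

# Hypothetical softmax outputs for three images (each row sums to 1)
y_prob = np.array([
    [0.70, 0.10, 0.05, 0.05, 0.02, 0.02, 0.02, 0.02, 0.01, 0.01],  # predicts class 0
    [0.05, 0.80, 0.05, 0.02, 0.02, 0.02, 0.01, 0.01, 0.01, 0.01],  # predicts class 1
    [0.10, 0.10, 0.20, 0.30, 0.10, 0.05, 0.05, 0.05, 0.03, 0.02],  # predicts class 3
])
# One-hot true labels for classes 0, 1 and 2
y_true = np.eye(10)[[0, 1, 2]]

# Compare predicted and true class indices, then average
correct = np.argmax(y_prob, axis=1) == np.argmax(y_true, axis=1)
accuracy = correct.mean()
print(accuracy)                  # 2 of 3 correct, about 0.667

# Batches needed to evaluate the 10,000 test images at batch size 32
print(math.ceil(10_000 / 32))    # 313
```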

Making Predictions

After training and evaluating the model, we can use it to make predictions on new images. Here's an example of how to make a prediction:

```python
# Load a new image (replace the path with the location of your own image);
# JPEG files load as integers from 0 to 255
new_image = plt.imread(r'C:\Users\Leekha\Desktop\sparrow.jpg')

# Resize to the model's 32x32 input size and scale pixels to [0, 1],
# matching the preprocessing applied to the training images
new_image = tf.image.resize(new_image, (32, 32)) / 255.0

# Add a batch dimension: (32, 32, 3) -> (1, 32, 32, 3)
new_image = np.expand_dims(new_image, axis=0)

# Make a prediction: one probability per class
predictions = model.predict(new_image)

# Get the index of the most probable class and map it to its name
predicted_class_index = np.argmax(predictions)
predicted_class_name = class_names[predicted_class_index]

# Print the predicted class name
print('Predicted class:', predicted_class_name)
```

For the sparrow image, this gives the following prediction (the exact result can vary with the trained weights):

```text
1/1 [==============================] - 0s 32ms/step
Predicted class: bird
```

Let's make one more prediction from our trained model:

```python
# Load a new image (replace the path with the location of your own image);
# JPEG files load as integers from 0 to 255
new_image = plt.imread(r'C:\Users\Leekha\Desktop\car.jpg')

# Resize to the model's 32x32 input size and scale pixels to [0, 1],
# matching the preprocessing applied to the training images
new_image = tf.image.resize(new_image, (32, 32)) / 255.0

# Add a batch dimension: (32, 32, 3) -> (1, 32, 32, 3)
new_image = np.expand_dims(new_image, axis=0)

# Make a prediction: one probability per class
predictions = model.predict(new_image)

# Get the index of the most probable class and map it to its name
predicted_class_index = np.argmax(predictions)
predicted_class_name = class_names[predicted_class_index]

# Print the predicted class name
print('Predicted class:', predicted_class_name)
```

For the car image, this gives the following prediction (the exact result can vary with the trained weights):

```text
1/1 [==============================] - 0s 19ms/step
Predicted class: automobile
```

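In the above code blocks, we first load the new image with plt.imread and resize it to 32×32 pixels, the input size the model expects. Its pixel values must also be scaled to [0, 1] exactly as the training images were; otherwise the model receives inputs on a very different scale from the ones it learned from. We then add a leading batch dimension, because the model expects a batch of images with shape (batch_size, 32, 32, 3).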
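Two details of plt.imread matter here: it returns JPEG images as uint8 arrays with values from 0 to 255 but PNG images as floats in [0, 1], and a PNG may carry a fourth (alpha) channel. The helper below is a hypothetical sketch (the name to_unit_rgb is made up for this example) that brings either kind of array into the 3-channel, [0, 1] form the model was trained on.

```python
import numpy as np

def to_unit_rgb(img):
    """Hypothetical helper: drop any alpha channel and scale pixels to [0, 1]."""
    img = np.asarray(img)
    if img.ndim == 3 and img.shape[-1] == 4:
        img = img[..., :3]                       # RGBA -> RGB
    if np.issubdtype(img.dtype, np.integer):
        return img.astype(np.float32) / 255.0    # JPEG: 0..255 -> 0..1, as in training
    return img.astype(np.float32)                # PNG: already 0..1

# Made-up arrays standing in for what plt.imread returns
jpeg_like = np.full((48, 64, 3), 255, dtype=np.uint8)
png_like = np.full((48, 64, 4), 0.5, dtype=np.float32)

for img in (jpeg_like, png_like):
    out = to_unit_rgb(img)
    print(out.shape, out.dtype, out.max())
# (48, 64, 3) float32 1.0
# (48, 64, 3) float32 0.5
```

In the prediction code, such a helper would be called right after plt.imread and before tf.image.resize, because tf.image.resize returns float32 for any input dtype (with the default bilinear method), so the integer check would no longer tell JPEG and PNG data apart afterwards.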

Finally, we use the predict method of the model to get the predicted class probabilities for the image. We use np.argmax to find the index of the predicted class, and then look up the corresponding class name in the class_names list. The predicted class name is then printed to the console.
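Because predict() returns a probability for every class, it can be more informative to look at the few most likely classes than at the argmax alone. A sketch with a made-up probability vector standing in for predictions (shape (1, 10), as model.predict returns for a single image):

```python
import numpy as np

class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer',
               'dog', 'frog', 'horse', 'ship', 'truck']

# Hypothetical output of model.predict for one image: shape (1, 10)
predictions = np.array([[0.01, 0.02, 0.55, 0.06, 0.03, 0.04, 0.12, 0.07, 0.05, 0.05]])

# Indices of the three largest probabilities, most likely first
top3 = np.argsort(predictions[0])[::-1][:3]

for idx in top3:
    print(f"{class_names[idx]}: {predictions[0, idx]:.2f}")
# bird: 0.55
# frog: 0.12
# horse: 0.07
```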

Conclusion

In this article, we explored how to perform image classification on the CIFAR-10 dataset using TensorFlow and Keras. We built a convolutional neural network (CNN), trained it on CIFAR-10 and reached a test accuracy of around 67%. We also visualized the training and validation accuracy over time using Matplotlib and used the trained model to classify new images.