
A Beginner’s Guide to Image Classification using CNN (Python implementation)

Convolutional Neural Networks (CNNs) are a type of neural network specifically designed to process data with a grid-like topology, such as an image. A CNN is composed of convolutional and pooling layers, which extract features from the input data, followed by one or more fully connected layers, which classify those features.

The primary advantage of CNNs is that they are able to automatically learn the features that are most relevant for the task at hand, rather than having to rely on manual feature engineering. This makes them particularly well-suited for image classification tasks, where the features that are important for the classification may not be known a priori.

In this article, we will provide a detailed overview of CNNs, including their architecture and the key concepts behind them. We will then demonstrate how to implement a CNN in Python for image classification using the popular Keras library.

CNN Architecture

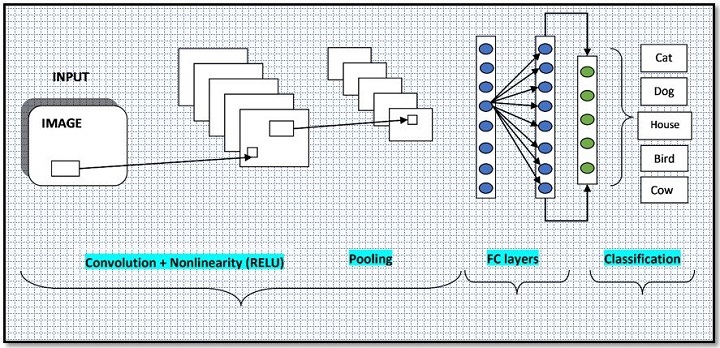

CNNs consist of a number of different layers, each of which performs a specific function. The most common types of layers in a CNN are convolutional layers, pooling layers, and fully connected layers.

Convolutional Layers

Convolutional layers are the primary building block of a CNN. They apply a convolution operation to the input, which involves sliding a small matrix of weights (called a kernel or filter) across the input and, at each position, computing the dot product between the kernel and the patch of input it currently covers. The result is a new feature map that records how strongly each local region of the input matches the pattern encoded by the kernel.
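To make the sliding dot product concrete, here is a minimal NumPy sketch of a single-channel convolution with no padding and a stride of 1. Like the convolution in deep learning libraries, it does not flip the kernel (strictly speaking, this is cross-correlation). The function name `conv2d_valid` is our own, not a library API.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and take dot products."""
    ih, iw = image.shape
    kh, kw = kernel.shape
    out = np.zeros((ih - kh + 1, iw - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            patch = image[i:i + kh, j:j + kw]    # region currently under the kernel
            out[i, j] = np.sum(patch * kernel)   # dot product of patch and kernel
    return out

image = np.arange(16, dtype=float).reshape(4, 4)
kernel = np.array([[1.0, 0.0],
                   [0.0, -1.0]])
print(conv2d_valid(image, kernel))
```

A 2×2 kernel on a 4×4 input yields a 3×3 feature map. Every entry here is -5, because this particular kernel computes `image[i, j] - image[i + 1, j + 1]`, and in this input that diagonal difference is the same everywhere.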

The parameters of a convolutional layer include the size of the kernel, the stride (i.e., the number of pixels the kernel moves at each step), and the padding (i.e., the number of pixels, usually zeros, added around the borders of the input so that the kernel can also be applied at the edges).

Pooling Layers

Pooling layers down-sample the feature maps produced by the convolutional layers. The most common types are max pooling and average pooling, which take the maximum and the average value, respectively, of each small window of the feature map.
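A minimal NumPy sketch of both pooling types, assuming non-overlapping 2×2 windows (the stride equals the window size, which is also the default for Keras's `MaxPooling2D`). The function `pool2d` is a hypothetical helper for illustration.

```python
import numpy as np

def pool2d(feature_map, size=2, mode="max"):
    """Non-overlapping pooling (stride == size) over a 2-D feature map."""
    h, w = feature_map.shape
    h_out, w_out = h // size, w // size          # leftover rows/cols are dropped
    trimmed = feature_map[:h_out * size, :w_out * size]
    # Group the map into (h_out, size, w_out, size) blocks, one per window.
    blocks = trimmed.reshape(h_out, size, w_out, size)
    if mode == "max":
        return blocks.max(axis=(1, 3))           # largest value in each window
    if mode == "average":
        return blocks.mean(axis=(1, 3))          # mean value of each window
    raise ValueError(f"unknown mode: {mode}")

fm = np.array([[1, 3, 2, 0],
               [4, 2, 1, 1],
               [0, 0, 5, 6],
               [1, 2, 7, 8]], dtype=float)
print(pool2d(fm, mode="max"))
print(pool2d(fm, mode="average"))
```

The 4×4 map shrinks to 2×2: max pooling keeps 4, 2, 2 and 8, while average pooling gives 2.5, 1.0, 0.75 and 6.5.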

Pooling layers reduce the spatial dimensions (width and height) of the feature maps. Pooling itself has no trainable weights, but the smaller maps reduce the computation and the number of parameters in the layers that follow, especially the fully connected layers, and this can help the model generalize.

Fully Connected Layers

Fully connected layers are used to classify the features extracted by the convolutional and pooling layers. They consist of a number of neurons, each of which is connected to every neuron in the previous layer. The output of the fully connected layer is a set of class scores, which can be used to predict the class label of the input data.
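The sketch below shows what a fully connected layer computes: a matrix product plus a bias, giving one score per class. It then applies softmax, which the model later in this article uses on its output layer, to turn the scores into probabilities. The 8 input features, 3 classes, and random weights are hypothetical placeholders.

```python
import numpy as np

def dense(x, weights, bias):
    """Fully connected layer: every input feature contributes to every output unit."""
    return x @ weights + bias

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    shifted = scores - np.max(scores)   # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

rng = np.random.default_rng(0)
features = rng.normal(size=8)           # hypothetical flattened feature vector
weights = rng.normal(size=(8, 3))       # hypothetical weights for 3 classes
bias = np.zeros(3)

scores = dense(features, weights, bias)
probs = softmax(scores)
print("class scores:", scores)
print("probabilities:", probs, "predicted class:", int(np.argmax(probs)))
```

Softmax preserves the ordering of the scores, so the predicted class is simply the one with the highest score.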

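A typical CNN therefore stacks these layers in sequence: one or more convolution and pooling stages extract features, and one or more fully connected layers at the end turn those features into class scores.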

Using CNN for Image Classification

A few key concepts are important for using CNNs effectively for image classification.

Filters and Kernels

As mentioned above, convolutional layers apply a kernel or filter to the input data to produce a transformed feature map. The size of the kernel determines the size of the region of the input data that is considered at each step of the convolution operation. For example, a 3x3 kernel considers a 3x3 region of the input data at each step.

The weights of the kernel are learned during the training process and are used to extract features from the input data. Different kernels can be used to extract different types of features, such as edges, corners, or textures.
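As an illustration of a kernel that responds to a specific feature, the sketch below applies a hand-picked Sobel-style vertical-edge kernel to a tiny synthetic image. This is an assumption for demonstration only: a trained CNN learns its kernel weights rather than using fixed ones like these. `conv2d_valid` is a hypothetical helper (stride 1, no padding).

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Stride-1, no-padding sliding dot product (no kernel flip)."""
    kh, kw = kernel.shape
    h, w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# A 5x6 image: dark (0) on the left half, bright (1) on the right half.
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# Hand-picked vertical-edge kernel; a CNN would learn weights like these.
vertical_edge = np.array([[-1.0, 0.0, 1.0],
                          [-2.0, 0.0, 2.0],
                          [-1.0, 0.0, 1.0]])

print(conv2d_valid(image, vertical_edge))
```

Every row of the 3×4 output is `[0, 4, 4, 0]`: the kernel responds strongly where its window straddles the dark-to-bright boundary and outputs 0 over flat regions.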

Stride

The stride determines the step size at which the kernel is applied to the input data. A larger stride results in a smaller output feature map, as the kernel is applied to fewer locations in the input data. A smaller stride, on the other hand, results in a larger output feature map, as the kernel is applied to more locations in the input data.
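The effect of stride on the output size follows the standard formula floor((n + 2p - k) / s) + 1, for an input of size n, kernel size k, padding p on each side, and stride s. A small helper (the name `conv_output_size` is our own) makes this concrete for a 28-pixel input and a 3×3 kernel:

```python
def conv_output_size(n, k, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension.

    n: input size, k: kernel size, stride: step size,
    padding: pixels added on each side of the input.
    """
    return (n + 2 * padding - k) // stride + 1

# 28-pixel input, 3x3 kernel, no padding: a larger stride gives a smaller map.
for s in (1, 2, 3):
    print(f"stride={s}: {conv_output_size(28, 3, stride=s)}")
```

This prints 26, 13 and 9 for strides 1, 2 and 3.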

Padding

Padding is the number of pixels (usually zeros) added to the borders of the input before the convolution is applied. It is often used so that the output feature map has the same width and height as the input, which lets you stack many convolutional layers without the feature maps shrinking at every layer.
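The sketch below shows zero padding preserving the spatial size. It assumes a 3×3 kernel and stride 1, where one ring of zeros ((3 - 1) / 2 = 1 pixel per side) is enough. This corresponds to what Keras calls `padding='same'` in that case. `conv2d_valid` is a hypothetical helper, and `np.pad` fills with zeros by default.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Stride-1, no-padding sliding dot product (no kernel flip)."""
    kh, kw = kernel.shape
    h, w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

image = np.arange(25, dtype=float).reshape(5, 5)
kernel = np.ones((3, 3)) / 9.0               # 3x3 averaging kernel

no_pad = conv2d_valid(image, kernel)         # output shrinks
padded_input = np.pad(image, pad_width=1)    # one ring of zeros around the border
same = conv2d_valid(padded_input, kernel)    # output keeps the input size

print(no_pad.shape)  # (3, 3)
print(same.shape)    # (5, 5)
```

The interior values agree in both cases. Only the border outputs differ, because there the kernel partly covers the added zeros.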

Pooling

Pooling is a down-sampling operation applied to the output of the convolutional layers. By shrinking the spatial dimensions of the data, it reduces the number of parameters in later layers and can improve generalization.

Implementing a CNN in Python for Image Classification

Now that we have a basic understanding of CNNs, let's see how we can implement a CNN in Python for image classification using the popular Keras library.

The first thing we need to do is install the required libraries. We will be using the following libraries:

NumPy: a library for working with arrays and matrices

Matplotlib: a library for creating plots and charts

Keras: a high-level library for building and training neural networks. The code below uses the Keras API that ships with TensorFlow (`tensorflow.keras`), so TensorFlow must be installed.

You can install these libraries using pip:

```
pip install numpy matplotlib tensorflow
```

Next, we need to import the libraries that we will be using:

```python
import numpy as np
import matplotlib.pyplot as plt
from tensorflow import keras
from tensorflow.keras.datasets import mnist
from tensorflow.keras.utils import to_categorical
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense, Dropout
```

We will be using the MNIST dataset for this example, which consists of 60,000 training images and 10,000 test images of handwritten digits. The images are 28×28 pixels, and each pixel is a grayscale value between 0 and 255. The goal is to classify each image into one of 10 classes (i.e., the digits 0-9).

The first thing we need to do is load the dataset and pre-process the data. We can do this using the following code:

```python
# Load the dataset
(X_train, y_train), (X_test, y_test) = mnist.load_data()

# Pre-process the data
X_train = X_train.astype(np.float32) / 255.0
X_test = X_test.astype(np.float32) / 255.0

# Reshape the data to add a channel dimension
X_train = np.expand_dims(X_train, axis=-1)
X_test = np.expand_dims(X_test, axis=-1)

# One-hot encode the labels
y_train = to_categorical(y_train, num_classes=10)
y_test = to_categorical(y_test, num_classes=10)
```
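To see what each pre-processing step does to the array shapes and values, here is a NumPy-only sketch on a small synthetic batch. The random images and the labels 7, 0 and 3 are hypothetical stand-ins for MNIST data. `np.eye(10)[labels]` produces the same one-hot layout as `to_categorical(labels, num_classes=10)`.

```python
import numpy as np

# Hypothetical stand-in for a tiny MNIST batch: 3 uint8 images of size 28x28.
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(3, 28, 28)).astype(np.uint8)
labels = np.array([7, 0, 3])

x = images.astype(np.float32) / 255.0     # scale pixel values into [0, 1]
x = np.expand_dims(x, axis=-1)            # (3, 28, 28) -> (3, 28, 28, 1): add channel axis
y = np.eye(10, dtype=np.float32)[labels]  # one row per label, 1.0 at the label's index

print(x.shape, x.min() >= 0.0, x.max() <= 1.0)
print(y[0])   # 1.0 at index 7, zeros elsewhere
```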

Next, we need to define the model. We will use a Sequential model, which lets us define the model as a sequence of layers. We will start with a convolutional layer with 32 filters of size 3×3, followed by a max pooling layer with a pool size of 2×2. We will then add a dropout layer with a rate of 0.25, which randomly drops 25% of its inputs during training to reduce overfitting.

We will then add a second convolutional layer with 64 filters of size 3×3, followed by another max pooling layer with a pool size of 2×2. We will again add a dropout layer with a rate of 0.25.
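Dropout is easy to sketch in NumPy. Keras's `Dropout` layer uses the "inverted" variant: during training, it zeroes each input with probability `rate` and scales the surviving inputs by 1 / (1 - rate), so the expected activation is unchanged. At inference time, it passes inputs through untouched. The function below is our own illustration, not the Keras implementation.

```python
import numpy as np

def dropout(x, rate, training, rng):
    """Inverted dropout: zero a fraction `rate` of units and rescale the rest."""
    if not training or rate == 0.0:
        return x                              # at inference time dropout does nothing
    keep = rng.random(x.shape) >= rate        # each unit kept with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(42)
activations = np.ones(10_000)
dropped = dropout(activations, rate=0.25, training=True, rng=rng)

print("fraction zeroed:", np.mean(dropped == 0.0))   # close to 0.25
print("mean activation:", dropped.mean())            # close to 1.0
```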

Finally, we will flatten the output of the second pooling layer and add a fully connected layer with 128 units, followed by the output layer with 10 units (one for each class).

We can define the model using the following code:

```python
# Define the model
model = Sequential()
model.add(Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=(28, 28, 1)))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(0.25))
model.add(Conv2D(64, kernel_size=(3, 3), activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(0.25))
model.add(Flatten())
model.add(Dense(128, activation='relu'))
model.add(Dense(10, activation='softmax'))
```
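As a sanity check on this architecture, the short script below traces the spatial size and the trainable-parameter count through each layer. It assumes the Keras defaults: stride 1 and `'valid'` padding for `Conv2D`, and a stride equal to the pool size for `MaxPooling2D`. Under those assumptions, the totals should agree with what `model.summary()` reports. The helper names are our own.

```python
def conv_out(n, k):
    return n - k + 1        # 'valid' padding, stride 1 (Conv2D defaults)

def pool_out(n, p):
    return n // p           # MaxPooling2D default: stride = pool size, 'valid'

size, channels = 28, 1
params = 0

# Conv2D(32, 3x3): 3*3*in_channels weights per filter plus one bias per filter
params += (3 * 3 * channels + 1) * 32
size, channels = conv_out(size, 3), 32       # 26x26x32
size = pool_out(size, 2)                     # 13x13x32 (Dropout keeps the shape)

# Conv2D(64, 3x3) on 32 input channels
params += (3 * 3 * channels + 1) * 64
size, channels = conv_out(size, 3), 64       # 11x11x64
size = pool_out(size, 2)                     # 5x5x64

flat = size * size * channels                # Flatten -> 1600 features
params += (flat + 1) * 128                   # Dense(128): weights + biases
params += (128 + 1) * 10                     # Dense(10): weights + biases

print("flattened features:", flat)           # 1600
print("trainable parameters:", params)       # 225034
```

Most of the parameters (204,928 of 225,034) sit in the first `Dense` layer. This is why the pooling layers, which shrink the map before `Flatten`, matter so much for model size.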

Next, we need to compile the model by specifying the loss function, optimizer, and metrics that we want to use. For this example, we will use the categorical cross-entropy loss, the Adam optimizer, and the accuracy metric.

```python
# Compile the model
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
```
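For intuition about the loss, here is a NumPy sketch of categorical cross-entropy: for each sample, it takes the negative log of the probability the model assigns to the true class, then averages over samples. The predictions are clipped to a small epsilon to avoid log(0), as framework implementations typically do. The example labels and predictions are made up for illustration.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over samples of -sum(y_true * log(y_pred))."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)   # avoid log(0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))

y_true = np.array([[0, 1, 0],
                   [1, 0, 0]], dtype=float)    # one-hot labels
y_pred = np.array([[0.1, 0.8, 0.1],
                   [0.5, 0.3, 0.2]])           # softmax outputs

loss = categorical_crossentropy(y_true, y_pred)
print(loss)   # equals (-ln 0.8 - ln 0.5) / 2
```

A confident, correct prediction gives a loss near 0, and the loss grows as the probability on the true class falls.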

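Finally, we can train the model by calling the fit method and specifying the training data, the number of epochs (i.e., the number of passes over the full training set), and the batch size (i.e., the number of samples per gradient update). Here, the test set is also passed as `validation_data`, so that its loss and accuracy are reported after every epoch. This keeps the example short. In practice, you would hold out a separate validation set (for example, with fit's `validation_split` argument) so that the test set is used only for the final evaluation.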

```python
# Train the model
history = model.fit(X_train, y_train, epochs=10, batch_size=32, validation_data=(X_test, y_test))
```

Here is the output after running the above code snippet:

```
Epoch 1/10
1875/1875 [==============================] - 38s 20ms/step - loss: 0.1680 - accuracy: 0.9481 - val_loss: 0.0556 - val_accuracy: 0.9823
Epoch 2/10
1875/1875 [==============================] - 42s 22ms/step - loss: 0.0608 - accuracy: 0.9811 - val_loss: 0.0374 - val_accuracy: 0.9880
Epoch 3/10
1875/1875 [==============================] - 42s 22ms/step - loss: 0.0470 - accuracy: 0.9854 - val_loss: 0.0292 - val_accuracy: 0.9903
Epoch 4/10
1875/1875 [==============================] - 44s 24ms/step - loss: 0.0370 - accuracy: 0.9889 - val_loss: 0.0260 - val_accuracy: 0.9908
Epoch 5/10
1875/1875 [==============================] - 43s 23ms/step - loss: 0.0311 - accuracy: 0.9903 - val_loss: 0.0246 - val_accuracy: 0.9913
Epoch 6/10
1875/1875 [==============================] - 43s 23ms/step - loss: 0.0267 - accuracy: 0.9911 - val_loss: 0.0278 - val_accuracy: 0.9910
Epoch 7/10
1875/1875 [==============================] - 40s 21ms/step - loss: 0.0233 - accuracy: 0.9923 - val_loss: 0.0261 - val_accuracy: 0.9926
Epoch 8/10
1875/1875 [==============================] - 41s 22ms/step - loss: 0.0193 - accuracy: 0.9939 - val_loss: 0.0268 - val_accuracy: 0.9917
Epoch 9/10
1875/1875 [==============================] - 41s 22ms/step - loss: 0.0188 - accuracy: 0.9941 - val_loss: 0.0252 - val_accuracy: 0.9916
Epoch 10/10
1875/1875 [==============================] - 41s 22ms/step - loss: 0.0169 - accuracy: 0.9945 - val_loss: 0.0314 - val_accuracy: 0.9909
```
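The 1875/1875 counter in each epoch line is the number of mini-batches processed per epoch. With 60,000 training images and `batch_size=32`, that is 60,000 / 32 = 1875 steps. The same arithmetic for the 10,000 test images gives 313 batches, with the last one only partly filled. This is the counter that `model.evaluate` shows, since its batch size also defaults to 32.

```python
import math

batch_size = 32
train_steps = math.ceil(60_000 / batch_size)   # training batches per epoch
test_steps = math.ceil(10_000 / batch_size)    # batches used when evaluating

print(train_steps, test_steps)   # 1875 313
```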

After training is complete, we can evaluate the model on the test data by calling the evaluate method:

```python
# Evaluate the model
loss, accuracy = model.evaluate(X_test, y_test)
print("Loss: ", loss)
print("Accuracy: ", accuracy)
```

Here is the output after running the above code snippet:

```
313/313 [==============================] - 1s 5ms/step - loss: 0.0314 - accuracy: 0.9909
Loss: 0.031392790377140045
Accuracy: 0.9908999800682068
```

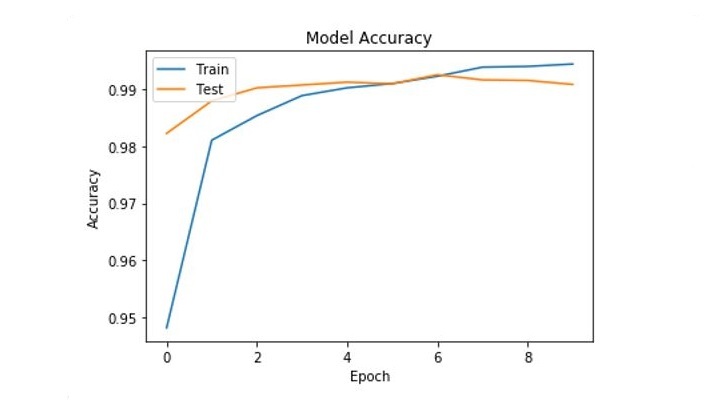

We can also plot the training and validation accuracy over the course of training by extracting the accuracy history from the history object:

```python
# Extract the accuracy history
acc = history.history['accuracy']
val_acc = history.history['val_accuracy']

# Plot the accuracy history
plt.plot(acc)
plt.plot(val_acc)
plt.title('Model Accuracy')
plt.ylabel('Accuracy')
plt.xlabel('Epoch')
plt.legend(['Train', 'Test'], loc='upper left')
plt.show()
```

This draws one line for the training accuracy and one for the validation accuracy at each epoch.

Improving the Performance

To further improve the performance of your CNN, you may want to consider the following tips:

Fine-tune the architecture: There are many ways to design a CNN, and the best architecture for your task depends on the characteristics of your data. You can experiment with different numbers of layers, kernel sizes, strides, and so on, to see what works best.

Use data augmentation: Data augmentation generates additional training data by applying transformations, such as small shifts, rotations, or zooms, to the existing images. This can improve generalization, especially when you have a limited amount of training data.

Use pre-trained models: Pre-trained models are CNNs that have already been trained on a large dataset, such as ImageNet, and can serve as a starting point for your own task. Transfer learning, which fine-tunes a pre-trained model on your own dataset, can shorten training considerably and improve accuracy, particularly when your dataset is small.

Optimize the hyperparameters: You can also improve performance by tuning hyperparameters such as the learning rate and batch size. Techniques like grid search or random search help you find a good combination for your task.

By following these tips and applying them to your own CNNs, you should be able to achieve good performance on a wide range of image classification tasks.
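As a concrete example of data augmentation for digit images like MNIST, here is a NumPy sketch that randomly shifts each image by a pixel or two. Horizontal flips are a poor fit here, because digits are not mirror-symmetric. The functions `shift_image` and `augment_batch` are hypothetical helpers written for this illustration. Recent Keras versions also provide built-in preprocessing layers, such as `RandomTranslation`, for the same purpose.

```python
import numpy as np

def shift_image(image, dy, dx):
    """Shift a 2-D image down by dy and right by dx pixels (negative values shift
    up/left). Pixels shifted in from outside the image are filled with 0."""
    h, w = image.shape
    pad = max(abs(dy), abs(dx))
    padded = np.pad(image, pad)              # zero border wide enough for the shift
    top, left = pad - dy, pad - dx
    return padded[top:top + h, left:left + w]

def augment_batch(images, max_shift=2, seed=None):
    """Return a copy of `images` (N, H, W) with each image randomly shifted."""
    rng = np.random.default_rng(seed)
    out = np.empty_like(images)
    for i, img in enumerate(images):
        dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
        out[i] = shift_image(img, int(dy), int(dx))
    return out

digit = np.zeros((5, 5))
digit[2, 2] = 1.0                               # a single bright pixel in the center
print(np.argwhere(shift_image(digit, 1, -1)))   # pixel moves to row 3, column 1
batch = augment_batch(np.stack([digit] * 4), max_shift=1, seed=0)
print(batch.shape)
```

Each call produces slightly different versions of the same images. Feeding these to the model during training exposes it to more variation than the original dataset contains.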

Conclusion

In this article, we provided a detailed overview of Convolutional Neural Networks (CNNs) and demonstrated how to implement a CNN in Python for image classification using the Keras library. We discussed the key concepts behind CNNs, including filters and kernels, stride, padding, and pooling, and explained how these concepts are used to extract features from the input data and classify it.

We also outlined several ways to improve a CNN's performance: fine-tuning the architecture and hyperparameters, using data augmentation to improve generalization, and starting from pre-trained models through transfer learning.

By applying the techniques outlined in this article, you should be able to achieve good performance on many image classification tasks. Overall, CNNs are a powerful tool for analyzing and classifying image data, and with the right approach they can be applied to a wide range of problems in computer vision and machine learning.