- Lucene - Home

- Lucene - Overview

- Lucene - Environment Setup

- Lucene - First Application

- Lucene - Indexing Classes

- Lucene - Searching Classes

- Lucene - Indexing Process

- Lucene - Search Operation

- Lucene - Sorting

Lucene - Indexing Operations

- Lucene - Indexing Operations

- Lucene - Add Document

- Lucene - Update Document

- Lucene - Delete Document

- Lucene - Field Options

Lucene - Query Programming

- Lucene - Query Programming

- Lucene - TermQuery

- Lucene - TermRangeQuery

- Lucene - PrefixQuery

- Lucene - BooleanQuery

- Lucene - PhraseQuery

- Lucene - WildCardQuery

- Lucene - FuzzyQuery

- Lucene - MatchAllDocsQuery

- Lucene - MatchNoDocsQuery

- Lucene - RegexpQuery

Lucene - Analysis

- Lucene - Analysis

- Lucene - WhitespaceAnalyzer

- Lucene - SimpleAnalyzer

- Lucene - StopAnalyzer

- Lucene - StandardAnalyzer

- Lucene - KeywordAnalyzer

- Lucene - CustomAnalyzer

- Lucene - EnglishAnalyzer

- Lucene - FrenchAnalyzer

- Lucene - SpanishAnalyzer

Lucene - Resources

Lucene - Quick Guide

Lucene - Overview

Lucene is a simple yet powerful Java-based Search library. It can be used in any application to add search capability to it. Lucene is an open-source project. It is scalable. This high-performance library is used to index and search virtually any kind of text. Lucene library provides the core operations which are required by any search application like Indexing and Searching.

How Search Application works?

A Search application performs all or a few of the following operations −

| Step | Title | Description |

|---|---|---|

| 1 | Acquire Raw Content |

The first step of any search application is to collect the target contents on which search application is to be conducted. |

| 2 | Build the document |

The next step is to build the document(s) from the raw content, which the search application can understand and interpret easily. |

| 3 | Analyze the document |

Before the indexing process starts, the document is to be analyzed as to which part of the text is a candidate to be indexed. This process is where the document is analyzed. |

| 4 | Indexing the document |

Once documents are built and analyzed, the next step is to index them so that this document can be retrieved based on certain keys instead of the entire content of the document. Indexing process is similar to indexes at the end of a book where common words are shown with their page numbers so that these words can be tracked quickly instead of searching the complete book. |

| 5 | User Interface for Search |

Once a database of indexes is ready then the application can make any search. To facilitate a user to make a search, the application must provide a user a mean or a user interface where a user can enter text and start the search process. |

| 6 | Build Query |

Once a user makes a request to search a text, the application should prepare a Query object using that text which can be used to inquire index database to get the relevant details. |

| 7 | Search Query |

Using a query object, the index database is then checked to get the relevant details and the content documents. |

| 8 | Render Results |

Once the result is received, the application should decide on how to show the results to the user using User Interface. How much information is to be shown at first look and so on. |

Apart from these basic operations, a search application can also provide administration user interface and help administrators of the application to control the level of search based on the user profiles. Analytics of search results is another important and advanced aspect of any search application.

Lucene's Role in Search Application

Lucene plays role in steps 2 to step 7 mentioned above and provides classes to do the required operations. In a nutshell, Lucene is the heart of any search application and provides vital operations pertaining to indexing and searching. Acquiring contents and displaying the results is left for the application part to handle.

In the next chapter, we will perform a simple Search application using Lucene Search library.

Lucene - Environment

This chapter will guide you on how to prepare a development environment to start your work with Lucene. It will also teach you how to set up JDK on your machine before you set up Apache Lucene −

Setup Java Development Kit (JDK)

You can download the latest version of SDK from Oracle's Java site − Java SE Downloads. You will find instructions for installing JDK in downloaded files, follow the given instructions to install and configure the setup. Finally set PATH and JAVA_HOME environment variables to refer to the directory that contains java and javac, typically java_install_dir/bin and java_install_dir respectively.

If you are running Windows and have installed the JDK in C:\jdk-24, you would have to put the following line in your C:\autoexec.bat file.

set PATH=C:\jdk-24;%PATH% set JAVA_HOME=C:\jdk-24

Alternatively, on Windows NT/2000/XP, you will have to right-click on My Computer, select Properties → Advanced → Environment Variables. Then, you will have to update the PATH value and click the OK button.

On Unix (Solaris, Linux, etc.), if the SDK is installed in /usr/local/jdk-24 and you use the C shell, you will have to put the following into your .cshrc file.

setenv PATH /usr/local/jdk-24/bin:$PATH setenv JAVA_HOME /usr/local/jdk-24

Alternatively, if you use an Integrated Development Environment (IDE) like Borland JBuilder, Eclipse, IntelliJ IDEA, or Sun ONE Studio, you will have to compile and run a simple program to confirm that the IDE knows where you have installed Java. Otherwise, you will have to carry out a proper setup as given in the document of the IDE.

Popular Java Editors

To write your Java programs, you need a text editor. There are many sophisticated IDEs available in the market. But for now, you can consider one of the following −

Notepad − On Windows machine, you can use any simple text editor like Notepad (Recommended for this tutorial), TextPad.

Netbeans − It is a Java IDE that is open-source and free, which can be downloaded from www.netbeans.org/index.html.

Eclipse − It is also a Java IDE developed by the eclipse open-source community and can be downloaded from www.eclipse.org.

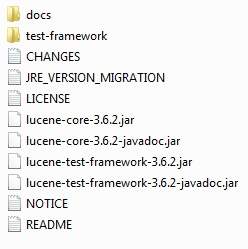

Step 3 - Setup Lucene Framework Libraries

If the startup is successful, then you can proceed to set up your Lucene framework. Following are the simple steps to download and install the framework on your machine.

https://downloads.apache.org/lucene/java/10.2.2/

Make a choice whether you want to install Lucene on Windows, or Unix and then proceed to the next step to download the .zip file for windows and .tz file for Unix.

Download the suitable version of Lucene framework binaries from https://downloads.apache.org/lucene/java/10.2.2/.

At the time of writing this tutorial, I downloaded lucene-10.2.2.tgz on my Windows machine and extracted to C:\lucene.

You will find all the Lucene libraries in the directory C:\lucene\modules. Make sure you set your CLASSPATH variable on this directory properly otherwise, you will face problem while running your application. If you are using Eclipse, then it is not required to set CLASSPATH because all the setting will be done through Eclipse.

Once you are done with this last step, you are ready to proceed for your first Example which you will see in the next chapter.

Lucene - First Application

In this chapter, we will learn the actual programming with Lucene Framework. Before you start writing your first example using Lucene framework, you have to make sure that you have set up your Lucene environment properly as explained in Lucene - Environment Setup tutorial. It is recommended you have the working knowledge of Eclipse IDE.

Let us now proceed by writing a simple Search Application which will print the number of search results found. We'll also see the list of indexes created during this process.

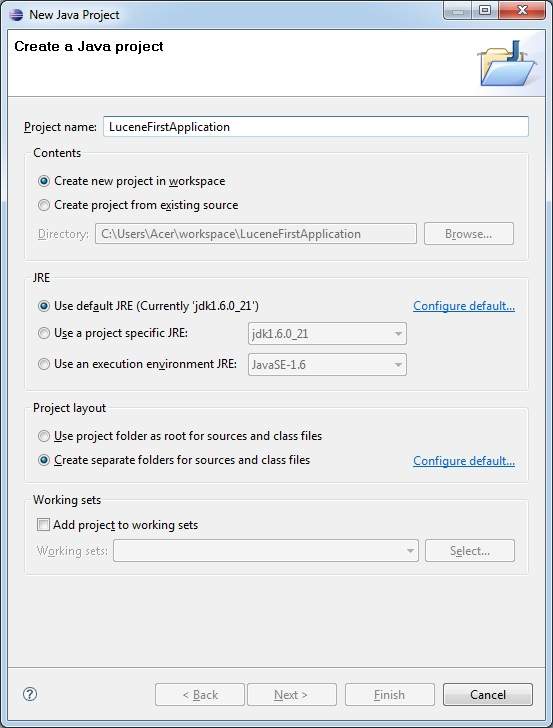

Step 1 - Create Java Project

The first step is to create a simple Java Project using Eclipse IDE. Follow the option File > New -> Project and finally select Java Project wizard from the wizard list. Now name your project as LuceneFirstApplication using the wizard window as follows −

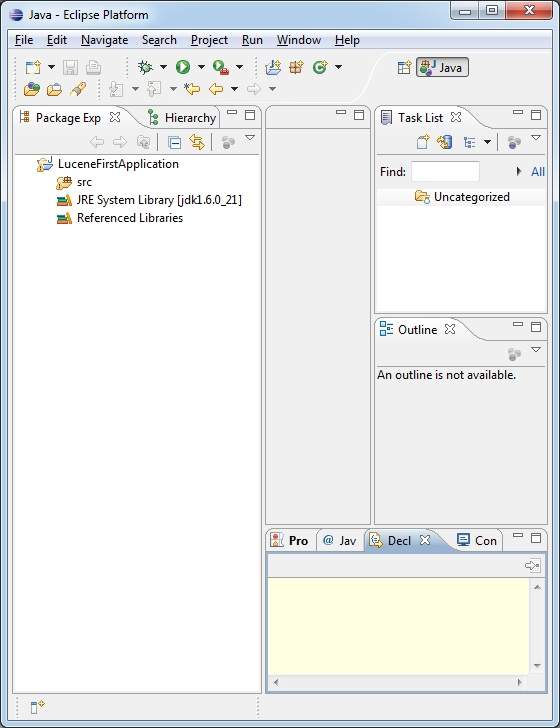

Once your project is created successfully, you will have following content in your Project Explorer −

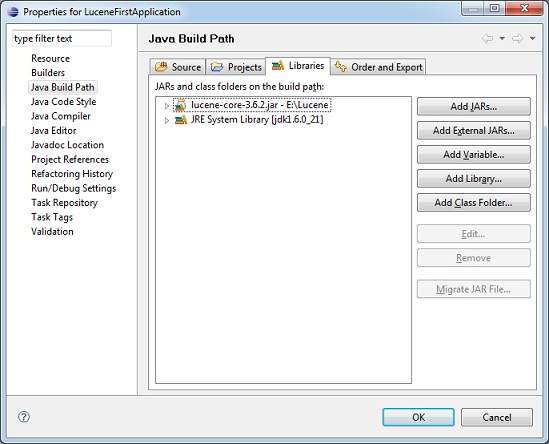

Step 2 - Add Required Libraries

Let us now add Lucene core Framework library in our project. To do this, right click on your project name LuceneFirstApplication and then follow the following option available in context menu: Build Path -> Configure Build Path to display the Java Build Path window as follows −

Now use Add External JARs button available under Libraries tab to add the following core JAR from the Lucene installation directory −

- lucene-core-10.2.2.jar

Step 3 - Create Source Files

Let us now create actual source files under the LuceneFirstApplication project. First we need to create a package called com.tutorialspoint.lucene. To do this, right-click on src in package explorer section and follow the option : New -> Package.

Next we will create LuceneTester.java and other java classes under the com.tutorialspoint.lucene package.

LuceneConstants.java

This class is used to provide various constants to be used across the sample application.

package com.tutorialspoint.lucene;

public class LuceneConstants {

public static final String CONTENTS = "contents";

public static final String FILE_NAME = "filename";

public static final String FILE_PATH = "filepath";

public static final int MAX_SEARCH = 10;

}

TextFileFilter.java

This class is used as a .txt file filter.

package com.tutorialspoint.lucene;

import java.io.File;

import java.io.FileFilter;

public class TextFileFilter implements FileFilter {

@Override

public boolean accept(File pathname) {

return pathname.getName().toLowerCase().endsWith(".txt");

}

}

Indexer.java

This class is used to index the raw data so that we can make it searchable using the Lucene library.

package com.tutorialspoint.lucene;

import java.io.File;

import java.io.FileFilter;

import java.io.FileReader;

import java.io.IOException;

import java.nio.file.Paths;

import org.apache.lucene.analysis.standard.StandardAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.document.Field;

import org.apache.lucene.document.StringField;

import org.apache.lucene.document.TextField;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.FSDirectory;

public class Indexer {

private IndexWriter writer;

public Indexer(String indexDirectoryPath) throws IOException {

//this directory will contain the indexes

Directory indexDirectory = FSDirectory.open(Paths.get(indexDirectoryPath));

StandardAnalyzer analyzer = new StandardAnalyzer();

IndexWriterConfig config = new IndexWriterConfig(analyzer);

writer = new IndexWriter(indexDirectory, config);

}

public void close() throws CorruptIndexException, IOException {

writer.close();

}

private Document getDocument(File file) throws IOException {

Document document = new Document();

//index file contents

Field contentField = new TextField(LuceneConstants.CONTENTS, new FileReader(file));

//index file name

Field fileNameField = new StringField(LuceneConstants.FILE_NAME,

file.getName(),Field.Store.YES);

//index file path

Field filePathField = new StringField(LuceneConstants.FILE_PATH,

file.getCanonicalPath(),Field.Store.YES);

document.add(contentField);

document.add(fileNameField);

document.add(filePathField);

return document;

}

private void indexFile(File file) throws IOException {

System.out.println("Indexing "+file.getCanonicalPath());

Document document = getDocument(file);

writer.addDocument(document);

}

public int createIndex(String dataDirPath, FileFilter filter)

throws IOException {

//get all files in the data directory

File[] files = new File(dataDirPath).listFiles();

for (File file : files) {

if(!file.isDirectory()

&& !file.isHidden()

&& file.exists()

&& file.canRead()

&& filter.accept(file)

){

indexFile(file);

}

}

return writer.getDocStats().numDocs;

}

}

Searcher.java

This class is used to search the indexes created by the Indexer to search the requested content.

package com.tutorialspoint.lucene;

import java.io.IOException;

import java.nio.file.Paths;

import java.text.ParseException;

import org.apache.lucene.analysis.standard.StandardAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.index.DirectoryReader;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.Query;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.TopDocs;

import org.apache.lucene.store.FSDirectory;

import org.apache.lucene.util.QueryBuilder;

public class Searcher {

IndexSearcher indexSearcher;

QueryBuilder queryBuilder;

Query query;

public Searcher(String indexDirectoryPath)

throws IOException {

DirectoryReader indexDirectory = DirectoryReader.open(FSDirectory.open(Paths.get(indexDirectoryPath)));

indexSearcher = new IndexSearcher(indexDirectory);

StandardAnalyzer analyzer = new StandardAnalyzer();

queryBuilder = new QueryBuilder(analyzer);

}

public TopDocs search( String searchQuery)

throws IOException, ParseException {

query = queryBuilder.createPhraseQuery(LuceneConstants.CONTENTS, searchQuery);

return indexSearcher.search(query, LuceneConstants.MAX_SEARCH);

}

public Document getDocument(ScoreDoc scoreDoc)

throws CorruptIndexException, IOException {

return indexSearcher.storedFields().document(scoreDoc.doc);

}

}

LuceneTester.java

This class is used to test the indexing and search capability of lucene library.

package com.tutorialspoint.lucene;

import java.io.IOException;

import java.text.ParseException;

import org.apache.lucene.document.Document;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.TopDocs;

public class LuceneTester {

String indexDir = "D:\\lucene\\Index";

String dataDir = "D:\\lucene\\Data";

Indexer indexer;

Searcher searcher;

public static void main(String[] args) {

LuceneTester tester;

try {

tester = new LuceneTester();

tester.createIndex();

tester.search("Mohan");

} catch (IOException e) {

e.printStackTrace();

} catch (ParseException e) {

e.printStackTrace();

}

}

private void createIndex() throws IOException {

indexer = new Indexer(indexDir);

int numIndexed;

long startTime = System.currentTimeMillis();

numIndexed = indexer.createIndex(dataDir, new TextFileFilter());

long endTime = System.currentTimeMillis();

indexer.close();

System.out.println(numIndexed+" File indexed, time taken: "

+(endTime-startTime)+" ms");

}

private void search(String searchQuery) throws IOException, ParseException {

searcher = new Searcher(indexDir);

long startTime = System.currentTimeMillis();

TopDocs hits = searcher.search(searchQuery);

long endTime = System.currentTimeMillis();

System.out.println(hits.totalHits +

" documents found. Time :" + (endTime - startTime));

for(ScoreDoc scoreDoc : hits.scoreDocs) {

Document doc = searcher.getDocument(scoreDoc);

System.out.println("File: "

+ doc.get(LuceneConstants.FILE_PATH));

}

}

}

Step 4 - Data & Index directory creation

We have used 10 text files from record1.txt to record10.txt containing names and other details of the students and put them in the directory D:\Lucene\Data. Test Data. An index directory path should be created as D:\Lucene\Index. After running this program, you can see the list of index files created in that folder.

Step 5 - Running the program

Once you are done with the creation of the source, the raw data, the data directory and the index directory, you are ready for compiling and running of your program. To do this, keep the LuceneTester.Java file tab active and use either the Run option available in the Eclipse IDE or use Ctrl + F11 to compile and run your LuceneTester application. If the application runs successfully, it will print the following message in Eclipse IDE's console −

Output

Sept 08, 2025 5:39:24 PM org.apache.lucene.internal.vectorization.VectorizationProvider lookup WARNING: Java vector incubator module is not readable. For optimal vector performance, pass '--add-modules jdk.incubator.vector' to enable Vector API. Indexing D:\lucene\Data\record1.txt Indexing D:\lucene\Data\record10.txt Indexing D:\lucene\Data\record2.txt Indexing D:\lucene\Data\record3.txt Indexing D:\lucene\Data\record4.txt Indexing D:\lucene\Data\record5.txt Indexing D:\lucene\Data\record6.txt Indexing D:\lucene\Data\record7.txt Indexing D:\lucene\Data\record8.txt Indexing D:\lucene\Data\record9.txt 10 File indexed, time taken: 88 ms 1 hits documents found. Time :22 File: D:\lucene\Data\record4.txt

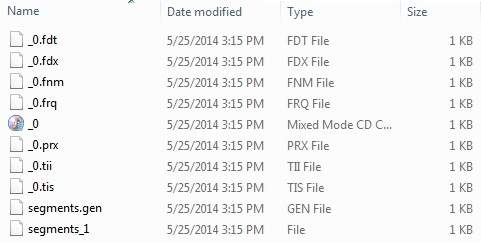

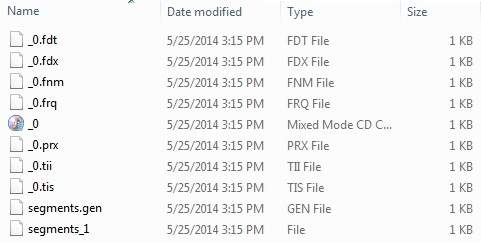

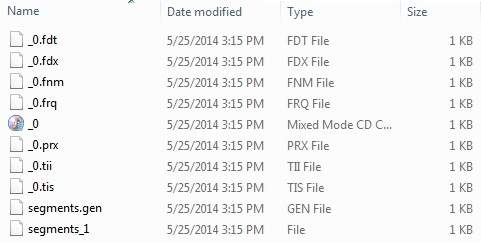

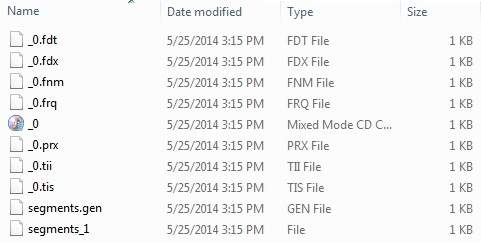

Once you've run the program successfully, you will have the following content in your index directory −

Lucene - Indexing Classes

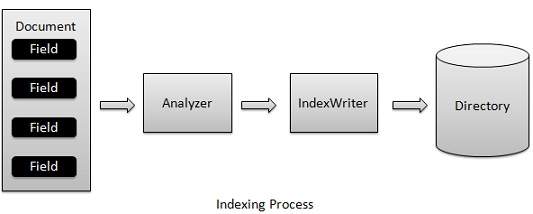

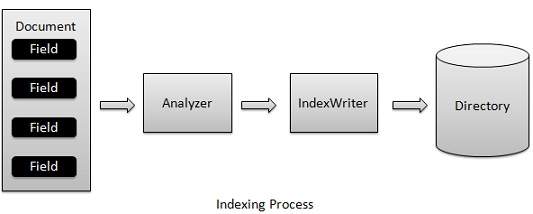

Indexing process is one of the core functionalities provided by Lucene. The following diagram illustrates the indexing process and the use of classes. IndexWriter is the most important and the core component of the indexing process.

We add Document(s) containing Field(s) to IndexWriter which analyzes the Document(s) using the Analyzer and then creates/open/edit indexes as required and store/update them in a Directory. IndexWriter is used to update or create indexes. It is not used to read indexes.

Indexing Classes

Following is a list of commonly-used classes during the indexing process.

| S.No. | Class & Description |

|---|---|

| 1 |

IndexWriter

This class acts as a core component which creates/updates indexes during the indexing process. |

| 2 |

Directory

This class represents the storage location of the indexes. |

| 3 |

Analyzer

This class is responsible to analyze a document and get the tokens/words from the text which is to be indexed. Without analysis done, IndexWriter cannot create index. |

| 4 |

Document

This class represents a virtual document with Fields where the Field is an object which can contain the physical document's contents, its meta data and so on. The Analyzer can understand a Document only. |

| 5 |

Field

This is the lowest unit or the starting point of the indexing process. It represents the key value pair relationship where a key is used to identify the value to be indexed. Let us assume a field used to represent contents of a document will have key as "contents" and the value may contain the part or all of the text or numeric content of the document. Lucene can index only text or numeric content only. |

| 6 |

TokenStream

TokenStream is an output of the analysis process and it comprises of a series of tokens. It is an abstract class. |

Lucene - Searching Classes

The process of Searching is again one of the core functionalities provided by Lucene. Its flow is similar to that of the indexing process. Basic search of Lucene can be made using the following classes which can also be termed as foundation classes for all search related operations.

Searching Classes

Following is a list of commonly-used classes during searching process.

| S.No. | Class & Description |

|---|---|

| 1 |

IndexSearcher

This class act as a core component which reads/searches indexes created after the indexing process. It takes directory instance pointing to the location containing the indexes. |

| 2 |

Term

This class is the lowest unit of searching. It is similar to Field in indexing process. |

| 3 |

Query

Query is an abstract class and contains various utility methods and is the parent of all types of queries that Lucene uses during search process. |

| 4 |

TermQuery

TermQuery is the most commonly-used query object and is the foundation of many complex queries that Lucene can make use of. |

| 5 |

TopDocs

TopDocs points to the top N search results which matches the search criteria. It is a simple container of pointers to point to documents which are the output of a search result. |

Lucene - Indexing Process

Indexing process is one of the core functionality provided by Lucene. Following diagram illustrates the indexing process and use of classes. IndexWriter is the most important and core component of the indexing process.

We add Document(s) containing Field(s) to IndexWriter which analyzes the Document(s) using the Analyzer and then creates/open/edit indexes as required and store/update them in a Directory. IndexWriter is used to update or create indexes. It is not used to read indexes.

Now we'll show you a step by step process to get a kick start in understanding of indexing process using a basic example.

Create a document

Create a method to get a lucene document from a text file.

Create various types of fields which are key value pairs containing keys as names and values as contents to be indexed.

Set field to be analyzed or not. In our case, only contents is to be analyzed as it can contain data such as a, am, are, an etc. which are not required in search operations.

Add the newly created fields to the document object and return it to the caller method.

private Document getDocument(File file) throws IOException {

Document document = new Document();

//index file contents

Field contentField = new TextField(LuceneConstants.CONTENTS, new FileReader(file));

//index file name

Field fileNameField = new StringField(LuceneConstants.FILE_NAME,

file.getName(),Field.Store.YES);

//index file path

Field filePathField = new StringField(LuceneConstants.FILE_PATH,

file.getCanonicalPath(),Field.Store.YES);

document.add(contentField);

document.add(fileNameField);

document.add(filePathField);

return document;

}

Create a IndexWriter

IndexWriter class acts as a core component which creates/updates indexes during indexing process. Follow these steps to create a IndexWriter −

Step 1 − Create object of IndexWriter.

Step 2 − Create a Lucene directory which should point to location where indexes are to be stored.

Step 3 − Initialize the IndexWriter object created with the index directory, a standard analyzer and other required/optional parameters.

private IndexWriter writer;

public Indexer(String indexDirectoryPath) throws IOException {

//this directory will contain the indexes

Directory indexDirectory = FSDirectory.open(Paths.get(indexDirectoryPath));

StandardAnalyzer analyzer = new StandardAnalyzer();

IndexWriterConfig config = new IndexWriterConfig(analyzer);

writer = new IndexWriter(indexDirectory, config);

}

Start Indexing Process

The following program shows how to start an indexing process −

private void indexFile(File file) throws IOException {

System.out.println("Indexing "+file.getCanonicalPath());

Document document = getDocument(file);

writer.addDocument(document);

}

Example Application

To test the indexing process, we need to create a Lucene application test.

| Step | Description |

|---|---|

| 1 | Create a project with a name LuceneFirstApplication under a package com.tutorialspoint.lucene as explained in the Lucene - First Application chapter. You can also use the project created in Lucene - First Application chapter as such for this chapter to understand the indexing process. |

| 2 | Create LuceneConstants.java,TextFileFilter.java and Indexer.java as explained in the Lucene - First Application chapter. Keep the rest of the files unchanged. |

| 3 | Create LuceneTester.java as mentioned below. |

| 4 | Clean and build the application to make sure the business logic is working as per the requirements. |

LuceneConstants.java

This class is used to provide various constants to be used across the sample application.

package com.tutorialspoint.lucene;

public class LuceneConstants {

public static final String CONTENTS = "contents";

public static final String FILE_NAME = "filename";

public static final String FILE_PATH = "filepath";

public static final int MAX_SEARCH = 10;

}

TextFileFilter.java

This class is used as a .txt file filter.

package com.tutorialspoint.lucene;

import java.io.File;

import java.io.FileFilter;

public class TextFileFilter implements FileFilter {

@Override

public boolean accept(File pathname) {

return pathname.getName().toLowerCase().endsWith(".txt");

}

}

Indexer.java

This class is used to index the raw data so that we can make it searchable using the Lucene library.

package com.tutorialspoint.lucene;

import java.io.File;

import java.io.FileFilter;

import java.io.FileReader;

import java.io.IOException;

import java.nio.file.Paths;

import org.apache.lucene.analysis.standard.StandardAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.document.Field;

import org.apache.lucene.document.StringField;

import org.apache.lucene.document.TextField;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.FSDirectory;

public class Indexer {

private IndexWriter writer;

public Indexer(String indexDirectoryPath) throws IOException {

//this directory will contain the indexes

Directory indexDirectory = FSDirectory.open(Paths.get(indexDirectoryPath));

StandardAnalyzer analyzer = new StandardAnalyzer();

IndexWriterConfig config = new IndexWriterConfig(analyzer);

writer = new IndexWriter(indexDirectory, config);

}

public void close() throws CorruptIndexException, IOException {

writer.close();

}

private Document getDocument(File file) throws IOException {

Document document = new Document();

//index file contents

Field contentField = new TextField(LuceneConstants.CONTENTS, new FileReader(file));

//index file name

Field fileNameField = new StringField(LuceneConstants.FILE_NAME,

file.getName(),Field.Store.YES);

//index file path

Field filePathField = new StringField(LuceneConstants.FILE_PATH,

file.getCanonicalPath(),Field.Store.YES);

document.add(contentField);

document.add(fileNameField);

document.add(filePathField);

return document;

}

private void indexFile(File file) throws IOException {

System.out.println("Indexing "+file.getCanonicalPath());

Document document = getDocument(file);

writer.addDocument(document);

}

public int createIndex(String dataDirPath, FileFilter filter)

throws IOException {

//get all files in the data directory

File[] files = new File(dataDirPath).listFiles();

for (File file : files) {

if(!file.isDirectory()

&& !file.isHidden()

&& file.exists()

&& file.canRead()

&& filter.accept(file)

){

indexFile(file);

}

}

return writer.getDocStats().numDocs;

}

}

LuceneTester.java

This class is used to test the indexing capability of the Lucene library.

package com.tutorialspoint.lucene;

import java.io.IOException;

public class LuceneTester {

String indexDir = "D:\\Lucene\\Index";

String dataDir = "D:\\Lucene\\Data";

Indexer indexer;

public static void main(String[] args) {

LuceneTester tester;

try {

tester = new LuceneTester();

tester.createIndex();

} catch (IOException e) {

e.printStackTrace();

}

}

private void createIndex() throws IOException {

indexer = new Indexer(indexDir);

int numIndexed;

long startTime = System.currentTimeMillis();

numIndexed = indexer.createIndex(dataDir, new TextFileFilter());

long endTime = System.currentTimeMillis();

indexer.close();

System.out.println(numIndexed+" File indexed, time taken: "

+(endTime-startTime)+" ms");

}

}

Data & Index Directory Creation

We have used 10 text files from record1.txt to record10.txt containing names and other details of the students and put them in the directory D:\Lucene\Data. Test Data. An index directory path should be created as D:\Lucene\Index. After running this program, you can see the list of index files created in that folder.

Running the Program

Once you are done with the creation of the source, the raw data, the data directory and the index directory, you can proceed by compiling and running your program. To do this, keep the LuceneTester.Java file tab active and use either the Run option available in the Eclipse IDE or use Ctrl + F11 to compile and run your LuceneTester application. If your application runs successfully, it will print the following message in Eclipse IDE's console −

Output

Indexing D:\Lucene\Data\record1.txt Indexing D:\Lucene\Data\record10.txt Indexing D:\Lucene\Data\record2.txt Indexing D:\Lucene\Data\record3.txt Indexing D:\Lucene\Data\record4.txt Indexing D:\Lucene\Data\record5.txt Indexing D:\Lucene\Data\record6.txt Indexing D:\Lucene\Data\record7.txt Indexing D:\Lucene\Data\record8.txt Indexing D:\Lucene\Data\record9.txt 10 File indexed, time taken: 109 ms

Once you've run the program successfully, you will have the following content in your index directory −

Lucene - Search Operation

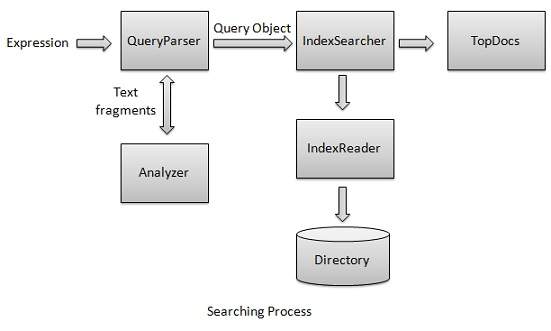

The process of searching is one of the core functionalities provided by Lucene. Following diagram illustrates the process and its use. IndexSearcher is one of the core components of the searching process.

We first create Directory(s) containing indexes and then pass it to IndexSearcher which opens the Directory using IndexReader. Then we create a Query with a Term and make a search using IndexSearcher by passing the Query to the searcher. IndexSearcher returns a TopDocs object which contains the search details along with document ID(s) of the Document which is the result of the search operation.

We will now show you a step-wise approach and help you understand the indexing process using a basic example.

Create a QueryBuilder

QueryBuilder class is used to build a query using the user entered input into Lucene understandable format query. Follow these steps to create a QueryBuilder −

Step 1 − Create object of QueryBuilder.

Step 2 − Initialize the QueryBuilder object created with a standard analyzer.

QueryBuilder queryBuilder;

public Searcher(String indexDirectoryPath) throws IOException {

StandardAnalyzer analyzer = new StandardAnalyzer();

queryBuilder = new QueryBuilder(analyzer);

}

Create a IndexSearcher

IndexSearcher class acts as a core component which searcher indexes created during indexing process. Follow these steps to create a IndexSearcher −

Step 1 − Create object of IndexSearcher.

Step 2 − Create a Lucene directory which should point to location where indexes are to be stored.

Step 3 − Initialize the IndexSearcher object created with the index directory.

IndexSearcher indexSearcher;

public Searcher(String indexDirectoryPath) throws IOException {

DirectoryReader indexDirectory = DirectoryReader.open(FSDirectory.open(Paths.get(indexDirectoryPath)));

indexSearcher = new IndexSearcher(indexDirectory);

}

Make search

Follow these steps to make search −

Step 1 − Create a Query object by parsing the search expression through QueryBuilder.

Step 2 − Make search by calling the IndexSearcher.search() method.

Query query;

public TopDocs search( String searchQuery) throws IOException, ParseException {

query = queryBuilder.createPhraseQuery(LuceneConstants.CONTENTS, searchQuery);

return indexSearcher.search(query, LuceneConstants.MAX_SEARCH);

}

Get the Document

The following program shows how to get the document.

public Document getDocument(ScoreDoc scoreDoc)

throws CorruptIndexException, IOException {

return indexSearcher.storedFields().document(scoreDoc.doc);

}

Example Application

Let us create a test Lucene application to test searching process.

| Step | Description |

|---|---|

| 1 | Create a project with a name LuceneFirstApplication under a package com.tutorialspoint.lucene as explained in the Lucene - First Application chapter. You can also use the project created in Lucene - First Application chapter as such for this chapter to understand the searching process. |

| 2 | Create LuceneConstants.java,TextFileFilter.java and Searcher.java as explained in the Lucene - First Application chapter. Keep the rest of the files unchanged. |

| 3 | Create LuceneTester.java as mentioned below. |

| 4 | Clean and Build the application to make sure business logic is working as per the requirements. |

LuceneConstants.java

This class is used to provide various constants to be used across the sample application.

package com.tutorialspoint.lucene;

public class LuceneConstants {

public static final String CONTENTS = "contents";

public static final String FILE_NAME = "filename";

public static final String FILE_PATH = "filepath";

public static final int MAX_SEARCH = 10;

}

TextFileFilter.java

This class is used as a .txt file filter.

package com.tutorialspoint.lucene;

import java.io.File;

import java.io.FileFilter;

public class TextFileFilter implements FileFilter {

@Override

public boolean accept(File pathname) {

return pathname.getName().toLowerCase().endsWith(".txt");

}

}

Searcher.java

This class is used to read the indexes made on raw data and searches data using the Lucene library.

package com.tutorialspoint.lucene;

import java.io.IOException;

import java.nio.file.Paths;

import java.text.ParseException;

import org.apache.lucene.analysis.standard.StandardAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.index.DirectoryReader;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.Query;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.TopDocs;

import org.apache.lucene.store.FSDirectory;

import org.apache.lucene.util.QueryBuilder;

public class Searcher {

IndexSearcher indexSearcher;

QueryBuilder queryBuilder;

Query query;

public Searcher(String indexDirectoryPath)

throws IOException {

DirectoryReader indexDirectory = DirectoryReader.open(FSDirectory.open(Paths.get(indexDirectoryPath)));

indexSearcher = new IndexSearcher(indexDirectory);

StandardAnalyzer analyzer = new StandardAnalyzer();

queryBuilder = new QueryBuilder(analyzer);

}

public TopDocs search( String searchQuery)

throws IOException, ParseException {

query = queryBuilder.createPhraseQuery(LuceneConstants.CONTENTS, searchQuery);

return indexSearcher.search(query, LuceneConstants.MAX_SEARCH);

}

public Document getDocument(ScoreDoc scoreDoc)

throws CorruptIndexException, IOException {

return indexSearcher.storedFields().document(scoreDoc.doc);

}

}

LuceneTester.java

This class is used to test the searching capability of the Lucene library.

package com.tutorialspoint.lucene;

import java.io.IOException;

import org.apache.lucene.document.Document;

import org.apache.lucene.queryParser.ParseException;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.TopDocs;

public class LuceneTester {

String indexDir = "D:\\Lucene\\Index";

String dataDir = "D:\\Lucene\\Data";

Searcher searcher;

public static void main(String[] args) {

LuceneTester tester;

try {

tester = new LuceneTester();

tester.search("Mohan");

} catch (IOException e) {

e.printStackTrace();

} catch (ParseException e) {

e.printStackTrace();

}

}

private void search(String searchQuery) throws IOException, ParseException {

searcher = new Searcher(indexDir);

long startTime = System.currentTimeMillis();

TopDocs hits = searcher.search(searchQuery);

long endTime = System.currentTimeMillis();

System.out.println(hits.totalHits +

" documents found. Time :" + (endTime - startTime) +" ms");

for(ScoreDoc scoreDoc : hits.scoreDocs) {

Document doc = searcher.getDocument(scoreDoc);

System.out.println("File: "+ doc.get(LuceneConstants.FILE_PATH));

}

}

}

Data & Index Directory Creation

We have used 10 text files named record1.txt to record10.txt containing names and other details of the students and put them in the directory D:\Lucene\Data. Test Data. An index directory path should be created as D:\Lucene\Index. After running the indexing program in the chapter Lucene - Indexing Process, you can see the list of index files created in that folder.

Running the Program

Once you are done with the creation of the source, the raw data, the data directory, the index directory and the indexes, you can proceed by compiling and running your program. To do this, keep LuceneTester.Java file tab active and use either the Run option available in the Eclipse IDE or use Ctrl + F11 to compile and run your LuceneTesterapplication. If your application runs successfully, it will print the following message in Eclipse IDE's console −

Output

1 hits documents found. Time :30 ms File: D:\lucene\Data\record4.txt

Lucene - Sorting

Lucene gives the search results by default sorted by relevance and which can be manipulated as required.

Sorting by Relevance is the default sorting mode used by Lucene. Lucene provides results by the most relevant hit at the top.

Steps to sort Search results

Step 1: Create Index for the item to be sorted.

Add SortedDocValuesField for the field to be sorted.

//index file name Field fileNameField = new Field(LuceneConstants.FILE_NAME, file.getName(),type); //sort file name Field sortedFileNameField = new SortedDocValuesField(LuceneConstants.FILE_NAME, new BytesRef(file.getName())); // add fields document.add(fileNameField); document.add(sortedFileNameField);

Step 2: Create SortField and Sort Objects

Create Sort Object for the field to be searched.

// Sort by a string field SortField fileNameSort = new SortField(LuceneConstants.FILE_NAME, SortField.Type.STRING); Sort sort = new Sort(fileNameSort);

Step 3: Search using Sort Object

// sort and return search results return indexSearcher.search(query, LuceneConstants.MAX_SEARCH, sort);

Example Application

To test sorting by relevance, let us create a test Lucene application.

| Step | Description |

|---|---|

| 1 | Create a project with a name LuceneFirstApplication under a package com.tutorialspoint.lucene as explained in the Lucene - First Application chapter. You can also use the project created in Lucene - First Application chapter as such for this chapter to understand the searching process. |

| 2 | Create LuceneConstants.java,TextFileFilter.java, Indexer.java and Searcher.java as explained in the Lucene - First Application chapter. Keep the rest of the files unchanged. |

| 3 | Create LuceneTester.java as mentioned below. |

| 4 | Clean and Build the application to make sure business logic is working as per the requirements. |

LuceneConstants.java

This class is used to provide various constants to be used across the sample application.

package com.tutorialspoint.lucene;

public class LuceneConstants {

public static final String CONTENTS = "contents";

public static final String FILE_NAME = "filename";

public static final String FILE_PATH = "filepath";

public static final int MAX_SEARCH = 10;

}

TextFileFilter.java

This class is used as a .txt file filter.

package com.tutorialspoint.lucene;

import java.io.File;

import java.io.FileFilter;

public class TextFileFilter implements FileFilter {

@Override

public boolean accept(File pathname) {

return pathname.getName().toLowerCase().endsWith(".txt");

}

}

Indexer.java

This class is used to create index using lucene library.

package com.tutorialspoint.lucene;

import java.io.BufferedReader;

import java.io.File;

import java.io.FileFilter;

import java.io.FileReader;

import java.io.IOException;

import java.nio.file.Paths;

import org.apache.lucene.analysis.standard.StandardAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.document.Field;

import org.apache.lucene.document.FieldType;

import org.apache.lucene.document.SortedDocValuesField;

import org.apache.lucene.document.StringField;

import org.apache.lucene.document.TextField;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.index.DocValuesType;

import org.apache.lucene.index.IndexOptions;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.index.Term;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.FSDirectory;

import org.apache.lucene.util.BytesRef;

public class Indexer {

private IndexWriter writer;

public Indexer(String indexDirectoryPath) throws IOException {

//this directory will contain the indexes

Directory indexDirectory = FSDirectory.open(Paths.get(indexDirectoryPath));

StandardAnalyzer analyzer = new StandardAnalyzer();

IndexWriterConfig config = new IndexWriterConfig(analyzer);

writer = new IndexWriter(indexDirectory, config);

}

public void close() throws CorruptIndexException, IOException {

writer.close();

}

private Document getDocument(File file) throws IOException {

Document document = new Document();

//index file contents

Field contentField = new TextField(LuceneConstants.CONTENTS,

new FileReader(file));

FieldType type = new FieldType();

type.setStored(true);

type.setTokenized(false);

type.setIndexOptions(IndexOptions.DOCS);

type.setOmitNorms(true);

//index file name

Field fileNameField = new Field(LuceneConstants.FILE_NAME,

file.getName(),type);

//sort file name

Field sortedFileNameField = new SortedDocValuesField(LuceneConstants.FILE_NAME, new BytesRef(file.getName()));

//index file path

Field filePathField = new Field(LuceneConstants.FILE_PATH,

file.getCanonicalPath(),type);

document.add(contentField);

document.add(fileNameField);

document.add(sortedFileNameField);

document.add(filePathField);

return document;

}

private void indexFile(File file) throws IOException {

System.out.println("Indexing "+file.getCanonicalPath());

Document document = getDocument(file);

writer.addDocument(document);

}

public int createIndex(String dataDirPath, FileFilter filter)

throws IOException {

//get all files in the data directory

File[] files = new File(dataDirPath).listFiles();

for (File file : files) {

if(!file.isDirectory()

&& !file.isHidden()

&& file.exists()

&& file.canRead()

&& filter.accept(file)

){

indexFile(file);

}

}

return writer.getDocStats().numDocs;

}

}

Searcher.java

This class is used to read the indexes made on raw data and searches data using the Lucene library.

package com.tutorialspoint.lucene;

import java.io.IOException;

import java.nio.file.Paths;

import java.text.ParseException;

import org.apache.lucene.analysis.standard.StandardAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.index.DirectoryReader;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.Query;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.Sort;

import org.apache.lucene.search.SortField;

import org.apache.lucene.search.TopDocs;

import org.apache.lucene.store.FSDirectory;

import org.apache.lucene.util.QueryBuilder;

public class Searcher {

IndexSearcher indexSearcher;

QueryBuilder queryBuilder;

Query query;

public Searcher(String indexDirectoryPath)

throws IOException {

DirectoryReader indexDirectory = DirectoryReader.open(FSDirectory.open(Paths.get(indexDirectoryPath)));

indexSearcher = new IndexSearcher(indexDirectory);

StandardAnalyzer analyzer = new StandardAnalyzer();

queryBuilder = new QueryBuilder(analyzer);

}

public TopDocs search(Query query) throws IOException, ParseException {

// Sort by a string field

SortField fileNameSort = new SortField(LuceneConstants.FILE_NAME, SortField.Type.STRING);

Sort sort = new Sort(fileNameSort);

return indexSearcher.search(query, LuceneConstants.MAX_SEARCH, sort);

}

public Document getDocument(ScoreDoc scoreDoc)

throws CorruptIndexException, IOException {

return indexSearcher.storedFields().document(scoreDoc.doc);

}

}

LuceneTester.java

This class is used to test the searching capability of the Lucene library.

package com.tutorialspoint.lucene;

import java.io.IOException;

import java.text.ParseException;

import org.apache.lucene.document.Document;

import org.apache.lucene.index.Term;

import org.apache.lucene.search.Query;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.TopDocs;

import org.apache.lucene.search.WildcardQuery;

public class LuceneTester {

String indexDir = "D:\\lucene\\Index";

String dataDir = "D:\\lucene\\Data";

Indxer indexer;

Searcher searcher;

public static void main(String[] args) {

LuceneTester tester;

try {

tester = new LuceneTester();

tester.createIndex();

tester.searchUsingWildCardQuery("record1*");

} catch (IOException e) {

e.printStackTrace();

} catch (ParseException e) {

e.printStackTrace();

}

}

private void createIndex() throws IOException {

indexer = new Indexer(indexDir);

int numIndexed;

long startTime = System.currentTimeMillis();

numIndexed = indexer.createIndex(dataDir, new TextFileFilter());

long endTime = System.currentTimeMillis();

indexer.close();

System.out.println(numIndexed+" File indexed, time taken: "

+(endTime-startTime)+" ms");

}

private void searchUsingWildCardQuery(String searchQuery)

throws IOException, ParseException {

searcher = new Searcher(indexDir);

long startTime = System.currentTimeMillis();

//create a term to search file name

Term term = new Term(LuceneConstants.FILE_NAME, searchQuery);

//create the term query object

Query query = new WildcardQuery(term);

//do the search

TopDocs hits = searcher.search(query);

long endTime = System.currentTimeMillis();

System.out.println(hits.totalHits +

" documents found. Time :" + (endTime - startTime) + "ms");

for(ScoreDoc scoreDoc : hits.scoreDocs) {

Document doc = searcher.getDocument(scoreDoc);

System.out.println("File: "+ doc.get(LuceneConstants.FILE_PATH));

}

}

}

Data & Index Directory Creation

We have used 10 text files from record1.txt to record10.txt containing names and other details of the students and put them in the directory D:\Lucene\Data. Test Data. An index directory path should be created as D:\Lucene\Index. Before running this program, delete any list of index files present in that folder.

Running the Program

Once you are done with the creation of the source, the raw data, the data directory, the index directory and the indexes, you can compile and run your program. To do this, Keep the LuceneTester.Java file tab active and use either the Run option available in the Eclipse IDE or use Ctrl + F11 to compile and run your LuceneTester application. If your application runs successfully, it will print the following message in Eclipse IDE's console −

Indexing D:\lucene\Data\record1.txt Indexing D:\lucene\Data\record10.txt Indexing D:\lucene\Data\record2.txt Indexing D:\lucene\Data\record3.txt Indexing D:\lucene\Data\record4.txt Indexing D:\lucene\Data\record5.txt Indexing D:\lucene\Data\record6.txt Indexing D:\lucene\Data\record7.txt Indexing D:\lucene\Data\record8.txt Indexing D:\lucene\Data\record9.txt 10 File indexed, time taken: 63 ms 2 hits documents found. Time :69ms File: D:\lucene\Data\record1.txt File: D:\lucene\Data\record10.txt

Lucene - Indexing Operations

In this chapter, we'll discuss the four major operations of indexing. These operations are useful at various times and are used throughout of a software search application.

Indexing Operations

Following is a list of commonly-used operations during indexing process.

| S.No. | Operation & Description |

|---|---|

| 1 |

Add Document

This operation is used in the initial stage of the indexing process to create the indexes on the newly available content. |

| 2 |

Update Document

This operation is used to update indexes to reflect the changes in the updated contents. It is similar to recreating the index. |

| 3 |

Delete Document

This operation is used to update indexes to exclude the documents which are not required to be indexed/searched. |

| 4 |

Field Options

Field options specify a way or control the ways in which the contents of a field are to be made searchable. |

Lucene - Add Document Operation

Add document is one of the core operations of the indexing process.

We add Document(s) containing Field(s) to IndexWriter where IndexWriter is used to update or create indexes.

We will now show you a step-wise approach and help you understand how to add a document using a basic example.

Add a document to an index

Follow these steps to add a document to an index −

Step 1 − Create a method to get a Lucene document from a text file.

Step 2 − Create various fields which are key value pairs containing keys as names and values as contents to be indexed.

Step 3 − Set field to be analyzed or not. In our case, only the content is to be analyzed as it can contain data such as a, am, are, an etc. which are not required in search operations.

Step 4 − Add the newly-created fields to the document object and return it to the caller method.

private Document getDocument(File file) throws IOException {

Document document = new Document();

//index file contents

Field contentField = new TextField(LuceneConstants.CONTENTS, new FileReader(file));

//index file name

Field fileNameField = new StringField(LuceneConstants.FILE_NAME,

file.getName(),Field.Store.YES);

//index file path

Field filePathField = new StringField(LuceneConstants.FILE_PATH,

file.getCanonicalPath(),Field.Store.YES);

document.add(contentField);

document.add(fileNameField);

document.add(filePathField);

return document;

}

Create a IndexWriter

IndexWriter class acts as a core component which creates/updates indexes during the indexing process.

Follow these steps to create a IndexWriter −

Step 1 − Create object of IndexWriter.

Step 2 − Create a Lucene directory which should point to location where indexes are to be stored.

Initialize the IndexWriter object created with the index directory, a standard analyzer having version information and other required/optional parameters.

private IndexWriter writer;

public Indexer(String indexDirectoryPath) throws IOException {

//this directory will contain the indexes

Directory indexDirectory = FSDirectory.open(Paths.get(indexDirectoryPath));

StandardAnalyzer analyzer = new StandardAnalyzer();

IndexWriterConfig config = new IndexWriterConfig(analyzer);

writer = new IndexWriter(indexDirectory, config);

}

Add Document and Start Indexing Process

Following two are the ways to add the document.

addDocument(Document) − Adds the document using the default analyzer (specified when the index writer is created.)

addDocument(Document,Analyzer) − Adds the document using the provided analyzer.

private void indexFile(File file) throws IOException {

System.out.println("Indexing "+file.getCanonicalPath());

Document document = getDocument(file);

writer.addDocument(document);

}

Example Application

To test the indexing process, we need to create Lucene application test.

| Step | Description |

|---|---|

| 1 | Create a project with a name LuceneFirstApplication under a package com.tutorialspoint.lucene as explained in the Lucene - First Application chapter. To understand the indexing process, you can also use the project created in Lucene - First Application chapter as such for this chapter. |

| 2 | Create LuceneConstants.java,TextFileFilter.java and Indexer.java as explained in the Lucene - First Application chapter. Keep the rest of the files unchanged. |

| 3 | Create LuceneTester.java as mentioned below. |

| 4 | Clean and Build the application to make sure business logic is working as per the requirements. |

LuceneConstants.java

This class is used to provide various constants to be used across the sample application.

package com.tutorialspoint.lucene;

public class LuceneConstants {

public static final String CONTENTS = "contents";

public static final String FILE_NAME = "filename";

public static final String FILE_PATH = "filepath";

public static final int MAX_SEARCH = 10;

}

TextFileFilter.java

This class is used as a .txt file filter.

package com.tutorialspoint.lucene;

import java.io.File;

import java.io.FileFilter;

public class TextFileFilter implements FileFilter {

@Override

public boolean accept(File pathname) {

return pathname.getName().toLowerCase().endsWith(".txt");

}

}

Indexer.java

This class is used to index the raw data so that we can make it searchable using the Lucene library.

package com.tutorialspoint.lucene;

import java.io.File;

import java.io.FileFilter;

import java.io.FileReader;

import java.io.IOException;

import java.nio.file.Paths;

import org.apache.lucene.analysis.standard.StandardAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.document.Field;

import org.apache.lucene.document.StringField;

import org.apache.lucene.document.TextField;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.FSDirectory;

public class Indexer {

private IndexWriter writer;

public Indexer(String indexDirectoryPath) throws IOException {

//this directory will contain the indexes

Directory indexDirectory = FSDirectory.open(Paths.get(indexDirectoryPath));

StandardAnalyzer analyzer = new StandardAnalyzer();

IndexWriterConfig config = new IndexWriterConfig(analyzer);

writer = new IndexWriter(indexDirectory, config);

}

public void close() throws CorruptIndexException, IOException {

writer.close();

}

private Document getDocument(File file) throws IOException {

Document document = new Document();

//index file contents

Field contentField = new TextField(LuceneConstants.CONTENTS, new FileReader(file));

//index file name

Field fileNameField = new StringField(LuceneConstants.FILE_NAME,

file.getName(),Field.Store.YES);

//index file path

Field filePathField = new StringField(LuceneConstants.FILE_PATH,

file.getCanonicalPath(),Field.Store.YES);

document.add(contentField);

document.add(fileNameField);

document.add(filePathField);

return document;

}

private void indexFile(File file) throws IOException {

System.out.println("Indexing "+file.getCanonicalPath());

Document document = getDocument(file);

writer.addDocument(document);

}

public int createIndex(String dataDirPath, FileFilter filter)

throws IOException {

//get all files in the data directory

File[] files = new File(dataDirPath).listFiles();

for (File file : files) {

if(!file.isDirectory()

&& !file.isHidden()

&& file.exists()

&& file.canRead()

&& filter.accept(file)

){

indexFile(file);

}

}

return writer.getDocStats().numDocs;

}

}

LuceneTester.java

This class is used to test the indexing capability of the Lucene library.

package com.tutorialspoint.lucene;

import java.io.IOException;

public class LuceneTester {

String indexDir = "D:\\Lucene\\Index";

String dataDir = "D:\\Lucene\\Data";

Indexer indexer;

public static void main(String[] args) {

LuceneTester tester;

try {

tester = new LuceneTester();

tester.createIndex();

} catch (IOException e) {

e.printStackTrace();

}

}

private void createIndex() throws IOException {

indexer = new Indexer(indexDir);

int numIndexed;

long startTime = System.currentTimeMillis();

numIndexed = indexer.createIndex(dataDir, new TextFileFilter());

long endTime = System.currentTimeMillis();

indexer.close();

System.out.println(numIndexed+" File indexed, time taken: "

+(endTime-startTime)+" ms");

}

}

Data & Index Directory Creation

We have used 10 text files from record1.txt to record10.txt containing names and other details of the students and put them in the directory D:\Lucene\Data. Test Data. An index directory path should be created as D:\Lucene\Index. After running this program, you can see the list of index files created in that folder.

Running the Program

Once you are done with the creation of the source, creating the raw data, data directory and index directory, you are ready for this step which is compiling and running your program. To do this, keep LuceneTester.Java file tab active and use either Run option available in the Eclipse IDE or use Ctrl + F11 to compile and run your LuceneTester application. If your application runs successfully, it will print the following message in Eclipse IDE's console −

Indexing D:\lucene\Data\record1.txt Indexing D:\lucene\Data\record10.txt Indexing D:\lucene\Data\record2.txt Indexing D:\lucene\Data\record3.txt Indexing D:\lucene\Data\record4.txt Indexing D:\lucene\Data\record5.txt Indexing D:\lucene\Data\record6.txt Indexing D:\lucene\Data\record7.txt Indexing D:\lucene\Data\record8.txt Indexing D:\lucene\Data\record9.txt 10 File indexed, time taken: 88 ms

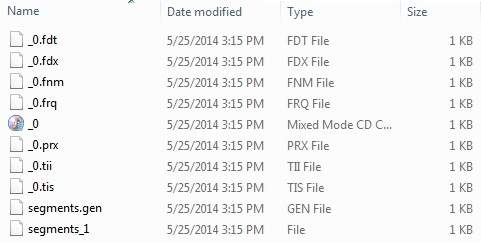

Once you've run the program successfully, you will have following content in your index directory −

Lucene - Update Document Operation

Update document is another important operation as part of indexing process. This operation is used when already indexed contents are updated and indexes become invalid. This operation is also known as re-indexing.

We update Document(s) containing Field(s) to IndexWriter where IndexWriter is used to update indexes.

We will now show you a step-wise approach and help you understand how to update document using a basic example.

Update a Document to an Index

Follow this step to update a document to an index −

Step 1 − Create a method to update a Lucene document from an updated text file.

private void updateDocument(File file) throws IOException {

Document document = new Document();

String contents = "Updated Contents : ";

try(BufferedReader reader = new BufferedReader(new FileReader(file))) {

String line;

while((line = reader.readLine()) != null) {

contents += line;

}

}

//update indexes for file contents

writer.updateDocument(new Term

(LuceneConstants.CONTENTS,

contents),document);

}

Create an IndexWriter

Follow these steps to create an IndexWriter −

Step 1 − IndexWriter class acts as a core component which creates/updates indexes during the indexing process.

Step 2 − Create object of IndexWriter.

Step 3 − Create a Lucene directory which should point to location where indexes are to be stored.

Step 4 − Initialize the IndexWriter object created with the index directory, a standard analyzer having version information and other required/optional parameters.

private IndexWriter writer;

public Indexer(String indexDirectoryPath) throws IOException {

//this directory will contain the indexes

Directory indexDirectory = FSDirectory.open(Paths.get(indexDirectoryPath));

StandardAnalyzer analyzer = new StandardAnalyzer();

IndexWriterConfig config = new IndexWriterConfig(analyzer);

writer = new IndexWriter(indexDirectory, config);

}

Update document and start reindexing process

Following are the two ways to update the document.

updateDocument(Term, Document) − Delete the document containing the term and add the document using the default analyzer (specified when index writer is created).

updateDocument(Term, Document,Analyzer) − Delete the document containing the term and add the document using the provided analyzer.

private void indexFile(File file) throws IOException {

System.out.println("Updating index for "+file.getCanonicalPath());

updateDocument(file);

}

Example Application

To test the indexing process, let us create a Lucene application test.

| Step | Description |

|---|---|

| 1 | Create a project with a name LuceneFirstApplication under a package com.tutorialspoint.lucene as explained in the Lucene - First Application chapter. You can also use the project created in Lucene - First Application chapter as such for this chapter to understand the indexing process. |

| 2 | Create LuceneConstants.java,TextFileFilter.java and Indexer.java as explained in the Lucene - First Application chapter. Keep the rest of the files unchanged. |

| 3 | Create LuceneTester.java as mentioned below. |

| 4 | Clean and Build the application to make sure business logic is working as per the requirements. |

LuceneConstants.java

This class is used to provide various constants to be used across the sample application.

package com.tutorialspoint.lucene;

public class LuceneConstants {

public static final String CONTENTS = "contents";

public static final String FILE_NAME = "filename";

public static final String FILE_PATH = "filepath";

public static final int MAX_SEARCH = 10;

}

TextFileFilter.java

This class is used as a .txt file filter.

package com.tutorialspoint.lucene;

import java.io.File;

import java.io.FileFilter;

public class TextFileFilter implements FileFilter {

@Override

public boolean accept(File pathname) {

return pathname.getName().toLowerCase().endsWith(".txt");

}

}

Indexer.java

This class is used to index the raw data so that we can make it searchable using the Lucene library.

package com.tutorialspoint.lucene;

import java.io.BufferedReader;

import java.io.File;

import java.io.FileFilter;

import java.io.FileReader;

import java.io.IOException;

import java.nio.file.Paths;

import org.apache.lucene.analysis.standard.StandardAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.index.Term;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.FSDirectory;

public class Indexer {

private IndexWriter writer;

public Indexer(String indexDirectoryPath) throws IOException {

//this directory will contain the indexes

Directory indexDirectory = FSDirectory.open(Paths.get(indexDirectoryPath));

StandardAnalyzer analyzer = new StandardAnalyzer();

IndexWriterConfig config = new IndexWriterConfig(analyzer);

writer = new IndexWriter(indexDirectory, config);

}

public void close() throws CorruptIndexException, IOException {

writer.close();

}

private void indexFile(File file) throws IOException {

System.out.println("Updating index for "+file.getCanonicalPath());

updateDocument(file);

}

private void updateDocument(File file) throws IOException {

Document document = new Document();

String contents = "Updated Contents : ";

try(BufferedReader reader = new BufferedReader(new FileReader(file))) {

String line;

while((line = reader.readLine()) != null) {

contents += line;

}

}

//update indexes for file contents

writer.updateDocument(new Term

(LuceneConstants.CONTENTS,

contents),document);

}

public int createIndex(String dataDirPath, FileFilter filter)

throws IOException {

//get all files in the data directory

File[] files = new File(dataDirPath).listFiles();

for (File file : files) {

if(!file.isDirectory()

&& !file.isHidden()

&& file.exists()

&& file.canRead()

&& filter.accept(file)

){

indexFile(file);

}

}

return writer.getDocStats().numDocs;

}

}

LuceneTester.java

This class is used to test the indexing capability of the Lucene library.

package com.tutorialspoint.lucene;

import java.io.IOException;

public class LuceneTester {

String indexDir = "D:\\Lucene\\Index";

String dataDir = "D:\\Lucene\\Data";

Indexer indexer;

public static void main(String[] args) {

LuceneTester tester;

try {

tester = new LuceneTester();

tester.createIndex();

} catch (IOException e) {

e.printStackTrace();

}

}

private void createIndex() throws IOException {

indexer = new Indexer(indexDir);

int numIndexed;

long startTime = System.currentTimeMillis();

numIndexed = indexer.createIndex(dataDir, new TextFileFilter());

long endTime = System.currentTimeMillis();

indexer.close();

}

}

Data & Index Directory Creation

Here, we have used 10 text files from record1.txt to record10.txt containing names and other details of the students and put them in the directory D:\Lucene\Data. Test Data. An index directory path should be created as D:\Lucene\Index. After running this program, you can see the list of index files created in that folder.

Running the Program

Once you are done with the creation of the source, the raw data, the data directory and the index directory, you can proceed with the compiling and running of your program. To do this, keep the LuceneTester.Java file tab active and use either the Run option available in the Eclipse IDE or use Ctrl + F11 to compile and run your LuceneTester application. If your application runs successfully, it will print the following message in Eclipse IDE's console −

Output

Updating index for D:\lucene\Data\record1.txt Updating index for D:\lucene\Data\record10.txt Updating index for D:\lucene\Data\record2.txt Updating index for D:\lucene\Data\record3.txt Updating index for D:\lucene\Data\record4.txt Updating index for D:\lucene\Data\record5.txt Updating index for D:\lucene\Data\record6.txt Updating index for D:\lucene\Data\record7.txt Updating index for D:\lucene\Data\record8.txt Updating index for D:\lucene\Data\record9.txt 10 File indexed, time taken: 50 ms

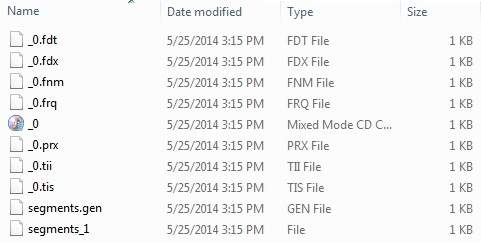

Once you've run the above program successfully, you will have the following content in your index directory −

Lucene - Delete Document Operation

Delete document is another important operation of the indexing process. This operation is used when already indexed contents are updated and indexes become invalid or indexes become very large in size, then in order to reduce the size and update the index, delete operations are carried out.

We delete Document(s) containing Field(s) to IndexWriter where IndexWriter is used to update indexes.

We will now show you a step-wise approach and make you understand how to delete a document using a basic example.

Delete a document from an index

Follow these steps to delete a document from an index −

Step 1 − Create a method to delete a Lucene document of an obsolete text file.

private void deleteDocument(File file) throws IOException {

//delete indexes for a file

writer.deleteDocument(new Term(LuceneConstants.FILE_NAME,file.getName()));

writer.commit();

}

Create an IndexWriter

IndexWriter class acts as a core component which creates/updates indexes during the indexing process.

Follow these steps to create an IndexWriter −

Step 1 − Create object of IndexWriter.

Step 2 − Create a Lucene directory which should point to a location where indexes are to be stored.

Step 3 − Initialize the IndexWriter object created with the index directory, a standard analyzer having the version information and other required/optional parameters.

private IndexWriter writer;

public Indexer(String indexDirectoryPath) throws IOException {

//this directory will contain the indexes

Directory indexDirectory = FSDirectory.open(Paths.get(indexDirectoryPath));

StandardAnalyzer analyzer = new StandardAnalyzer();

IndexWriterConfig config = new IndexWriterConfig(analyzer);

writer = new IndexWriter(indexDirectory, config);

}

Delete Document and Start Reindexing Process

Following are the ways to delete the document.

deleteDocuments(Term) − Delete all the documents containing the term.

deleteDocuments(Term[]) − Delete all the documents containing any of the terms in the array.

deleteDocuments(Query) − Delete all the documents matching the query.

deleteDocuments(Query[]) − Delete all the documents matching the query in the array.

deleteAll() − Delete all the documents.

private void indexFile(File file) throws IOException {

System.out.println("Deleting index for "+file.getCanonicalPath());

deleteDocument(file);

}

Example Application

To test the indexing process, let us create a Lucene application test.

| Step | Description |

|---|---|

| 1 | Create a project with a name LuceneFirstApplication under a package com.tutorialspoint.lucene as explained in the Lucene - First Application chapter. You can also use the project created in Lucene - First Application chapter as such for this chapter to understand the indexing process. |

| 2 | Create LuceneConstants.java,TextFileFilter.java and Indexer.java as explained in the Lucene - First Application chapter. Keep the rest of the files unchanged. |

| 3 | Create LuceneTester.java as mentioned below. |

| 4 | Clean and Build the application to make sure business logic is working as per the requirements. |

LuceneConstants.java

This class provides various constants that can be used across the sample application.

package com.tutorialspoint.lucene;

public class LuceneConstants {

public static final String CONTENTS = "contents";

public static final String FILE_NAME = "filename";

public static final String FILE_PATH = "filepath";

public static final int MAX_SEARCH = 10;

}

TextFileFilter.java

This class is used as a .txt file filter.

package com.tutorialspoint.lucene;

import java.io.File;

import java.io.FileFilter;

public class TextFileFilter implements FileFilter {

@Override

public boolean accept(File pathname) {

return pathname.getName().toLowerCase().endsWith(".txt");

}

}

Indexer.java

This class is used to index the raw data thereby, making it searchable using the Lucene library.

package com.tutorialspoint.lucene;

import java.io.File;

import java.io.FileFilter;

import java.io.IOException;

import java.nio.file.Paths;

import org.apache.lucene.analysis.standard.StandardAnalyzer;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.index.Term;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.FSDirectory;

public class Indexer {

private IndexWriter writer;

public Indexer(String indexDirectoryPath) throws IOException {

//this directory will contain the indexes

Directory indexDirectory = FSDirectory.open(Paths.get(indexDirectoryPath));

StandardAnalyzer analyzer = new StandardAnalyzer();

IndexWriterConfig config = new IndexWriterConfig(analyzer);

writer = new IndexWriter(indexDirectory, config);

}

public void close() throws CorruptIndexException, IOException {

writer.close();

}

private void deleteDocument(File file) throws IOException {

//delete indexes for a file

writer.deleteDocuments(

new Term(LuceneConstants.FILE_NAME,file.getName()));

writer.commit();

}

private void indexFile(File file) throws IOException {

System.out.println("Deleting index: "+file.getCanonicalPath());

deleteDocument(file);

}

public int createIndex(String dataDirPath, FileFilter filter)

throws IOException {

//get all files in the data directory

File[] files = new File(dataDirPath).listFiles();

for (File file : files) {

if(!file.isDirectory()

&& !file.isHidden()

&& file.exists()

&& file.canRead()

&& filter.accept(file)

){

indexFile(file);

}

}

return writer.getDocStats().numDocs;

}

}

LuceneTester.java

This class is used to test the indexing capability of the Lucene library.

package com.tutorialspoint.lucene;

import java.io.IOException;

public class LuceneTester {

String indexDir = "D:\\Lucene\\Index";

String dataDir = "D:\\Lucene\\Data";

Indexer indexer;

public static void main(String[] args) {

LuceneTester tester;

try {

tester = new LuceneTester();

tester.createIndex();

} catch (IOException e) {

e.printStackTrace();

}

}

private void createIndex() throws IOException {

indexer = new Indexer(indexDir);

int numIndexed;

long startTime = System.currentTimeMillis();

numIndexed = indexer.createIndex(dataDir, new TextFileFilter());

long endTime = System.currentTimeMillis();

indexer.close();

}

}

Data & Index Directory Creation

Weve used 10 text files from record1.txt to record10.txt containing names and other details of the students and put them in the directory D:\Lucene\Data. Test Data. An index directory path should be created as D:\Lucene\Index. After running this program, you can see the list of index files created in that folder.

Running the Program

Once you are done with the creation of the source, the raw data, the data directory and the index directory, you can compile and run your program. To do this, keep the LuceneTester.Java file tab active and use either the Run option available in the Eclipse IDE or use Ctrl + F11 to compile and run your LuceneTester application. If your application runs successfully, it will print the following message in Eclipse IDE's console −

Output

Deleting index: D:\lucene\Data\record1.txt Deleting index: D:\lucene\Data\record10.txt Deleting index: D:\lucene\Data\record2.txt Deleting index: D:\lucene\Data\record3.txt Deleting index: D:\lucene\Data\record4.txt Deleting index: D:\lucene\Data\record5.txt Deleting index: D:\lucene\Data\record6.txt Deleting index: D:\lucene\Data\record7.txt Deleting index: D:\lucene\Data\record8.txt Deleting index: D:\lucene\Data\record9.txt 10 File indexed, time taken: 325 ms