
How to Create Google Lens Application in Android?

The ability to extract and analyse text from photos or video feeds can considerably improve an Android application's functionality and user experience. Google Lens, an image recognition and augmented reality technology developed by Google, is a well-known example of this capability. Duplicating the whole functionality of Google Lens is a difficult endeavour, but we can explore a simpler implementation that focuses on text recognition in an Android app using the Google Vision API.

Methods Used

- Text Recognition using Google Vision API in Android

Text Recognition using Google Vision API in Android

Algorithm

Step 1: Set up the layout.

- Design the layout for your activity. Create a layout file that includes a SurfaceView for the camera preview and a TextView to display the recognised text.

Step 2: Create the activity.

- Create a new activity class; let's name it GoogleLensActivity.

- In the onCreate() method, initialise the camera and start the camera preview on the SurfaceView.

- Set up a camera callback to capture images when the camera preview is available.

Step 3: Display the recognised text.

- Implement the Detector.Processor<TextBlock> interface and attach it to the TextRecognizer to handle the text recognition process.

- In the receiveDetections() method of the processor, update the TextView with the text recognised in the camera image.

Step 4: Update the layout and manifest.

- Update the layout XML file to include the necessary views (SurfaceView and TextView).

- Add the camera and internet permissions to the app's AndroidManifest.xml file.

Note that the sample program below follows a simpler variant of these steps: it captures a still image through the device's camera app, shows it in an ImageView, labels it with the Firebase ML Kit on-device image labeler, and lists web search results for the first label in a RecyclerView.
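The callback flow of Step 3 can be modelled in plain Java, which may make it easier to see before wiring in the Android classes. This is only a sketch: DetectionProcessor, FakeTextView and RecognisedTextPresenter are hypothetical stand-ins invented for this illustration, not part of the Vision API, and the recognised blocks are represented as plain strings.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for the processor callback; not the real Android interface.
interface DetectionProcessor<T> {
    void receiveDetections(List<T> detections);
}

// Hypothetical stand-in for a TextView: it only remembers the last text set.
class FakeTextView {
    private String text = "";

    void setText(String text) {
        this.text = text;
    }

    String getText() {
        return text;
    }
}

// Joins the recognised blocks, one per line, and pushes them to the view.
class RecognisedTextPresenter implements DetectionProcessor<String> {
    private final FakeTextView target;

    RecognisedTextPresenter(FakeTextView target) {
        this.target = target;
    }

    @Override
    public void receiveDetections(List<String> detections) {
        if (detections.isEmpty()) {
            return; // keep the previous text when a frame contains no text
        }
        target.setText(String.join("\n", detections));
    }
}

public class ProcessorDemo {
    public static void main(String[] args) {
        FakeTextView view = new FakeTextView();
        RecognisedTextPresenter presenter = new RecognisedTextPresenter(view);

        // Simulate one camera frame in which two text blocks were recognised.
        presenter.receiveDetections(Arrays.asList("Hello", "World"));
        System.out.println(view.getText());
    }
}
```

In an Android app the detection callback typically runs off the main thread, so the TextView update is usually posted to the UI thread (for example with View.post()) rather than called directly as in this model.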

XML Code

<!-- declares that the app uses the camera -->
<uses-feature
    android:name="android.hardware.camera"
    android:required="true" />

<!-- permission for internet access -->
<uses-permission android:name="android.permission.INTERNET" />

<!-- goes inside the application element, so that the on-device
     image labelling model is downloaded when the app is installed -->
<meta-data
    android:name="com.google.firebase.ml.vision.DEPENDENCIES"
    android:value="label" />

XML Program (AndroidManifest.xml)

<?xml version="1.0" encoding="utf-8"?>
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.example.googlelens">

    <!-- declares that the app uses the camera -->
    <uses-feature
        android:name="android.hardware.camera"
        android:required="true" />

    <!-- permission for internet access -->
    <uses-permission android:name="android.permission.INTERNET" />

    <!-- on Android 11 and above, lets resolveActivity() see the camera app -->
    <queries>
        <intent>
            <action android:name="android.media.action.IMAGE_CAPTURE" />
        </intent>
    </queries>

    <application
        android:allowBackup="true"
        android:icon="@mipmap/ic_launcher"
        android:label="@string/app_name"
        android:roundIcon="@mipmap/ic_launcher_round"
        android:supportsRtl="true"
        android:theme="@style/Theme.GoogleLens">

        <!-- android:exported is required on Android 12 and above
             for activities that declare an intent filter -->
        <activity
            android:name=".MainActivity"
            android:exported="true">
            <intent-filter>
                <action android:name="android.intent.action.MAIN" />
                <category android:name="android.intent.category.LAUNCHER" />
            </intent-filter>
        </activity>

        <!-- downloads the on-device image labelling model at install time -->
        <meta-data
            android:name="com.google.firebase.ml.vision.DEPENDENCIES"
            android:value="label" />
    </application>
</manifest>

XML Program (activity_main.xml)

<?xml version="1.0" encoding="utf-8"?>

<RelativeLayout

xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".MainActivity">

<!--image view for displaying our image-->

<ImageView

android:id="@+id/image"

android:layout_width="match_parent"

android:layout_height="300dp"

android:layout_alignParentTop="true"

android:layout_centerHorizontal="true"

android:layout_marginTop="10dp"

android:scaleType="centerCrop" />

<LinearLayout

android:id="@+id/idLLButtons"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:layout_below="@id/image"

android:orientation="horizontal">

<!--button for capturing our image-->

<Button

android:id="@+id/snapbtn"

android:layout_width="0dp"

android:layout_height="wrap_content"

android:layout_gravity="center"

android:layout_margin="5dp"

android:layout_marginTop="30dp"

android:layout_weight="1"

android:lines="2"

android:text="Snap"

android:textAllCaps="false"

android:textSize="18sp"

android:textStyle="bold" />

<!--button for detecting the objects-->

<Button

android:id="@+id/idBtnSearchResuts"

android:layout_width="0dp"

android:layout_height="wrap_content"

android:layout_gravity="center"

android:layout_margin="5dp"

android:layout_marginTop="30dp"

android:layout_weight="1"

android:lines="2"

android:text="Get Search Results"

android:textAllCaps="false"

android:textSize="18sp"

android:textStyle="bold" />

</LinearLayout>

<!--recycler view for displaying the list of result-->

<androidx.recyclerview.widget.RecyclerView

android:id="@+id/idRVSearchResults"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:layout_below="@id/idLLButtons" />

</RelativeLayout>

Java Program (MainActivity.java)

import android.content.Intent;

import android.graphics.Bitmap;

import android.os.Bundle;

import android.provider.MediaStore;

import android.view.View;

import android.widget.Button;

import android.widget.ImageView;

import android.widget.Toast;

import androidx.annotation.NonNull;

import androidx.appcompat.app.AppCompatActivity;

import androidx.recyclerview.widget.LinearLayoutManager;

import androidx.recyclerview.widget.RecyclerView;

import com.android.volley.Request;

import com.android.volley.RequestQueue;

import com.android.volley.Response;

import com.android.volley.VolleyError;

import com.android.volley.toolbox.JsonObjectRequest;

import com.android.volley.toolbox.Volley;

import com.google.android.gms.tasks.OnFailureListener;

import com.google.android.gms.tasks.OnSuccessListener;

import com.google.firebase.ml.vision.FirebaseVision;

import com.google.firebase.ml.vision.common.FirebaseVisionImage;

import com.google.firebase.ml.vision.label.FirebaseVisionImageLabel;

import com.google.firebase.ml.vision.label.FirebaseVisionImageLabeler;

import org.json.JSONArray;

import org.json.JSONException;

import org.json.JSONObject;

import java.util.ArrayList;

import java.util.List;

public class MainActivity extends AppCompatActivity {

static final int REQUEST_IMAGE_CAPTURE = 1;

// variables for our image view, image bitmap,

// buttons, recycler view, adapter and array list.

private ImageView img;

private Button snap, searchResultsBtn;

private Bitmap imageBitmap;

private RecyclerView resultRV;

private SearchResultsRVAdapter searchResultsRVAdapter;

private ArrayList<DataModal> dataModalArrayList;

private String title, link, displayed_link, snippet;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

// initializing all our variables for views

img = (ImageView) findViewById(R.id.image);

snap = (Button) findViewById(R.id.snapbtn);

searchResultsBtn = findViewById(R.id.idBtnSearchResuts);

resultRV = findViewById(R.id.idRVSearchResults);

// initializing our array list

dataModalArrayList = new ArrayList<>();

// initializing our adapter class.

searchResultsRVAdapter = new SearchResultsRVAdapter(dataModalArrayList, MainActivity.this);

// layout manager for our recycler view.

LinearLayoutManager manager = new LinearLayoutManager(MainActivity.this, LinearLayoutManager.HORIZONTAL, false);

// on below line we are setting layout manager

// and adapter to our recycler view.

resultRV.setLayoutManager(manager);

resultRV.setAdapter(searchResultsRVAdapter);

// adding on click listener for our snap button.

snap.setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View v) {

// calling a method to capture an image.

dispatchTakePictureIntent();

}

});

// adding on click listener for our button to search data.

searchResultsBtn.setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View v) {

// calling a method to get search results.

getResults();

}

});

}

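private void getResults() {

// nothing to label until the user has captured an image.

if (imageBitmap == null) {

Toast.makeText(MainActivity.this, "Please capture an image first..", Toast.LENGTH_SHORT).show();

return;

}

// wrapping the captured image bitmap in a firebase vision image.

FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(imageBitmap);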

// on below line we are creating a labeler for our image bitmap and

// creating a variable for our firebase vision image labeler.

FirebaseVisionImageLabeler labeler = FirebaseVision.getInstance().getOnDeviceImageLabeler();

// calling a method to process an image and adding on success listener method to it.

labeler.processImage(image).addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionImageLabel>>() {

@Override

public void onSuccess(List<FirebaseVisionImageLabel> firebaseVisionImageLabels) {

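// the labeler may return no labels, so we check before reading the first one.

if (firebaseVisionImageLabels.isEmpty()) {

Toast.makeText(MainActivity.this, "No labels detected for the image..", Toast.LENGTH_SHORT).show();

return;

}

String searchQuery = firebaseVisionImageLabels.get(0).getText();

searchData(searchQuery);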

}

}).addOnFailureListener(new OnFailureListener() {

@Override

public void onFailure(@NonNull Exception e) {

// displaying error message.

Toast.makeText(MainActivity.this, "Fail to detect image..", Toast.LENGTH_SHORT).show();

}

});

}

private void searchData(String searchQuery) {

String apiKey = "Enter your API key here";

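// encoding the query so that labels containing spaces or symbols still form a valid url.

String url = "https://serpapi.com/search.json?q=" + android.net.Uri.encode(searchQuery.trim()) + "&location=Delhi,India&hl=en&gl=us&google_domain=google.com&api_key=" + apiKey;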

// creating a new variable for our request queue

RequestQueue queue = Volley.newRequestQueue(MainActivity.this);

JsonObjectRequest jsonObjectRequest = new JsonObjectRequest(Request.Method.GET, url, null, new Response.Listener<JSONObject>() {

@Override

public void onResponse(JSONObject response) {

try {

// on below line we are extracting data from our json.

JSONArray organicResultsArray = response.getJSONArray("organic_results");

// clearing the results of any previous search.

dataModalArrayList.clear();

for (int i = 0; i < organicResultsArray.length(); i++) {

JSONObject organicObj = organicResultsArray.getJSONObject(i);

// optString returns an empty string for a missing key, so a value

// from the previous result is never carried over to this one.

title = organicObj.optString("title");

link = organicObj.optString("link");

displayed_link = organicObj.optString("displayed_link");

snippet = organicObj.optString("snippet");

// on below line we are adding data to our array list.

dataModalArrayList.add(new DataModal(title, link, displayed_link, snippet));

}

// notifying our adapter class

// on data change in array list.

searchResultsRVAdapter.notifyDataSetChanged();

} catch (JSONException e) {

e.printStackTrace();

}

}

}, new Response.ErrorListener() {

@Override

public void onErrorResponse(VolleyError error) {

// displaying error message.

Toast.makeText(MainActivity.this, "No Result found for the search query..", Toast.LENGTH_SHORT).show();

}

});

// adding json object request to our queue.

queue.add(jsonObjectRequest);

}

// method to capture image.

private void dispatchTakePictureIntent() {

// inside this method we are calling an implicit intent to capture an image.

Intent takePictureIntent = new Intent(MediaStore.ACTION_IMAGE_CAPTURE);

if (takePictureIntent.resolveActivity(getPackageManager()) != null) {

// calling a start activity for result when image is captured.

startActivityForResult(takePictureIntent, REQUEST_IMAGE_CAPTURE);

}

}

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data) {

super.onActivityResult(requestCode, resultCode, data);

// inside on activity result method we are

// setting our image to our image view from bitmap.

if (requestCode == REQUEST_IMAGE_CAPTURE && resultCode == RESULT_OK && data != null && data.getExtras() != null) {

Bundle extras = data.getExtras();

imageBitmap = (Bitmap) extras.get("data");

// on below line we are setting our

// bitmap to our image view.

img.setImageBitmap(imageBitmap);

}

}

}
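MainActivity refers to a DataModal class and a SearchResultsRVAdapter class, neither of which is listed in this article. A minimal sketch of what DataModal might look like is given below; the field and getter names are assumptions chosen to match the four strings passed to its constructor in searchData(), not the article's original class.

```java
// Sketch of the model class that MainActivity passes search results into.
// The field and getter names are assumptions; only the constructor's
// four String arguments are implied by the program above.
public class DataModal {

    private final String title;
    private final String link;
    private final String displayedLink;
    private final String snippet;

    public DataModal(String title, String link, String displayedLink, String snippet) {
        this.title = title;
        this.link = link;
        this.displayedLink = displayedLink;
        this.snippet = snippet;
    }

    // Title of the search result.
    public String getTitle() {
        return title;
    }

    // Full URL of the search result.
    public String getLink() {
        return link;
    }

    // Shortened URL as shown on the results page.
    public String getDisplayedLink() {
        return displayedLink;
    }

    // Short description shown under the title.
    public String getSnippet() {
        return snippet;
    }
}
```

The adapter would then read these getters when binding each row of the RecyclerView.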

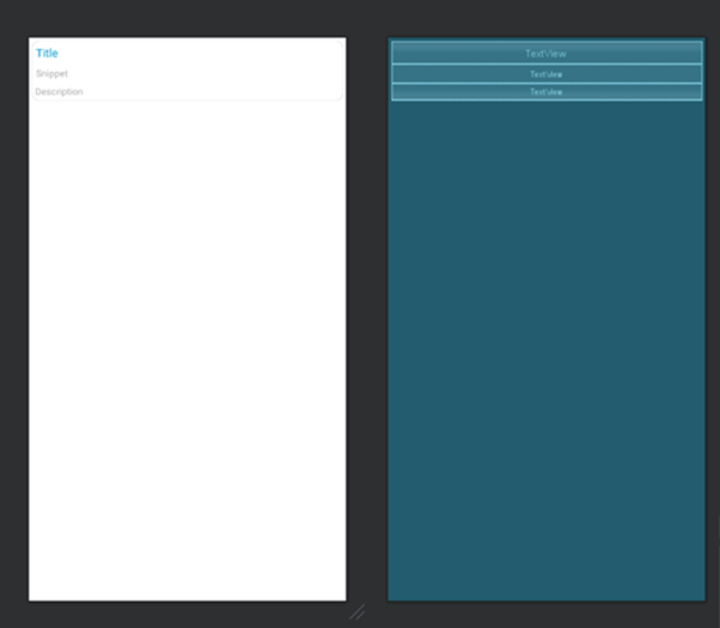

Output

Conclusion

This article serves as an introduction to text recognition using the Google Vision API, with plenty of room for modification and extension based on your own application's requirements. You can extend the functionality with capabilities such as language translation, barcode scanning, and object detection. Text recognition opens up a world of possibilities: it lets you build apps that extract and interpret text from photographs, assist visually impaired users, automate data entry, and much more.