ELK Stack Tutorial: Get Started with Elasticsearch, Logstash, Kibana, & Beats

The ELK Stack, also known as the Elastic Stack, is one of the best tool sets for collecting, searching, and visualizing log data. The name comes from its three open-source components: E for Elasticsearch, L for Logstash, and K for Kibana.

ELK Stack tools are used to process and analyze large amounts of data in real time. Beats complements them as an open platform for single-purpose data shippers, which send data from hundreds or thousands of systems and machines to Elasticsearch or Logstash.

The ELK Stack is widely used in industries such as healthcare, finance, and IT for troubleshooting, monitoring, and log analysis. This blog covers the ELK Stack in detail and shows how to set it up on Linux.

ELK Stack Tutorial: Elasticsearch, Logstash, Kibana, & Beats

As mentioned above, the ELK Stack is a combination of different tools, so let's go through them one by one:

- Elasticsearch: Elasticsearch is a searchable, scalable database for log files that allows fast and efficient data retrieval. It is mainly used for indexing and storing data.

- Logstash: Logstash acts as a data processing pipeline. It collects logs and other data from various sources, including applications, servers, and devices, then parses and transforms the data and sends it to Elasticsearch.

- Kibana: Kibana is a visualization tool that uses a web browser interface to organize and display data. It is highly configurable, and it lets you create reports and interactive dashboards for exploring and analyzing the data stored in Elasticsearch.

- Beats: Beats are lightweight data shippers, small data collection applications that are each dedicated to a single task, such as reading files on a server and sending them out. For example, Packetbeat analyzes network traffic, and Filebeat collects log files.

Note: Some users skip Beats altogether and use Logstash directly, while others connect Beats directly to Elasticsearch.

Features of ELK Stack

- Replicated, sharded, searchable store for JSON documents

- NRT (near real-time) search

- Full-text search

- Geolocation and multi-language support

- REST API web interface with JSON output

How to Install ELK Stack?

You can install ELK locally, in the cloud, with Docker, or through configuration management systems such as Chef, Puppet, and Ansible. Download the packages that match the Linux distribution you are using on your device.

Prerequisites to Install ELK Stack

Let's use Ubuntu to show how to install the ELK Stack:

Step 1: Update the Repositories

First, refresh the package lists so that the latest updates are available:

sudo apt update

Step 2: Install Java (required for Elasticsearch and Logstash)

Logstash requires Java 8 or Java 11 on the system, so install Java first with the following command:

sudo apt install default-jre

Step 3: Install Nginx

Kibana is normally reachable only on localhost, so Nginx is configured as a reverse proxy to make it publicly accessible. Run the following command to install Nginx:

sudo apt install nginx -y

To check whether Nginx is installed, open a browser and enter your server's IP address. If Nginx was installed successfully, you will see its default welcome page.

Once you are done, it is time to set up all the components from apt. You can also download these components directly from the official website. Here we will install each component of the ELK Stack separately on Ubuntu 22.04.

How to Install Elasticsearch

Step 1: Add Elasticsearch Key

To install Elasticsearch, first add the Elasticsearch signing key with the following command:

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

Note: If you have already installed the above packages, you can skip the above step.

Now install the apt-transport-https package with the following command:

sudo apt-get update && sudo apt-get install apt-transport-https

Next, run the following command in the terminal to add the repository definition to your system:

echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-7.x.list

Alternatively, to install the version of Elasticsearch that contains only features licensed under Apache 2.0, add the OSS repository instead of the one above:

echo "deb https://artifacts.elastic.co/packages/oss-7.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-7.x.list

Step 2: Install the Elasticsearch

Now, update the repositories of the system and then install Elasticsearch:

sudo apt-get update && sudo apt-get install elasticsearch

Step 3: Configure the Elasticsearch

Now configure Elasticsearch. Its configuration file holds network settings such as the host and port, along with details such as log file paths and memory settings. Open the configuration file with the following command so that you can add your private IP address:

sudo nano /etc/elasticsearch/elasticsearch.yml

Note: You can use any editor to open the above configuration file.

Uncomment the network settings in the configuration file (the network.host line and, if you want to set the port explicitly, http.port) and set network.host to your local host, for example 192.168.0.1.

network.host: <local host name>

As you are configuring a single-node cluster here, go to the 'Discovery' section and add the following line:

discovery.type: single-node

The JVM heap size is set to 1GB by default. As a rule, the heap size should be no more than half of your total memory. Open the following file to set the heap size:

sudo nano /etc/elasticsearch/jvm.options

Find the lines starting with -Xms (minimum) and -Xmx (maximum) and set both to 512MB:

-Xms512m

-Xmx512m
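The half-of-memory rule can be turned into a quick calculation. The following is a minimal sketch rather than part of the official setup: `recommended_heap_mb` is a hypothetical helper name, and reading the total memory from /proc/meminfo assumes a Linux host. The result is an upper bound, so a smaller value such as the 512MB used here is also valid.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print half of the given total memory (in MB),
# which is the upper bound for the heap size suggested in the text.
recommended_heap_mb() {
  local total_mb=$1
  echo $(( total_mb / 2 ))
}

# On Linux, MemTotal in /proc/meminfo is reported in kB.
if [ -r /proc/meminfo ]; then
  total_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  heap_mb=$(recommended_heap_mb $(( ${total_kb:-0} / 1024 )))
  # Print the two settings in the form used in jvm.options.
  printf -- '-Xms%sm\n-Xmx%sm\n' "$heap_mb" "$heap_mb"
fi
```

Setting -Xms and -Xmx to the same value, as the tutorial does, keeps the heap from being resized while Elasticsearch is running.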

Step 4: Run the Elasticsearch

After completing the configuration, start and enable the Elasticsearch service with the following systemctl commands:

sudo systemctl start elasticsearch

sudo systemctl enable elasticsearch

After that, check the status of Elasticsearch:

sudo systemctl status elasticsearch

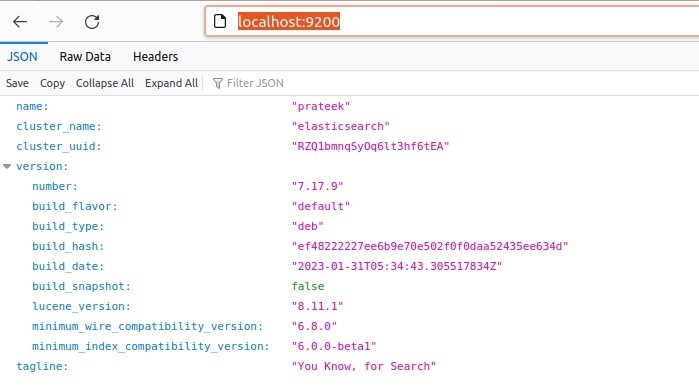

Step 5: Verify the Installed Elasticsearch

To verify that Elasticsearch is running, open the following URL in your browser:

http://localhost:9200

You can also confirm this with the following curl command in the terminal:

curl -X GET "localhost:9200"

If the response is a JSON document with details such as the node name, cluster name, and version, Elasticsearch is working correctly.
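Elasticsearch can take a little while to answer requests after the service starts, so a check made too early may fail even when the installation is fine. The sketch below polls the URL until it responds. `wait_for_http` is a hypothetical helper name, and the attempt count and delay are arbitrary defaults, not values from the Elastic documentation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a URL until it answers successfully or the attempts run out.
# Usage: wait_for_http URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_http() {
  local url=$1 attempts=${2:-30} delay=${3:-2} i
  for (( i = 1; i <= attempts; i++ )); do
    # -f: treat HTTP errors as failures, -s: silent, -o /dev/null: discard the body
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      return 0
    fi
    # Pause between attempts, but not after the last one.
    (( i < attempts )) && sleep "$delay"
  done
  return 1
}

# Example (not run automatically):
#   wait_for_http "http://localhost:9200" && curl -X GET "localhost:9200"
```

The function returns a non-zero status if the URL never answers, so it can be chained with `&&` as in the commented example.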

How to Install Kibana?

Step 1: Install Kibana

Kibana can be installed from the same repository with the following command:

sudo apt install kibana

Step 2: Configure Kibana

Run the below command to open the configuration file of Kibana.

sudo nano /etc/kibana/kibana.yml

Delete the '#' sign at the start of the following lines to uncomment them:

server.port: 5601

server.host: "localhost"

elasticsearch.hosts: ["http://localhost:9200"]

Next, an Nginx server block will route incoming traffic to Kibana on localhost. For this, create a new site file in the sites-available directory. In this example, the file is named 'file.com':

sudo nano /etc/nginx/sites-available/file.com

Copy the following lines into the file:

server {

listen 80;

server_name file.com;

auth_basic "Restricted Access";

auth_basic_user_file /etc/nginx/htpasswd.users;

location / {

proxy_pass http://localhost:5601;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection 'upgrade';

proxy_set_header Host $host;

proxy_cache_bypass $http_upgrade;

}

}

The above server block listens on port 80 and forwards all incoming traffic to localhost:5601, where Kibana is listening. It also authenticates every request against the Nginx user list in /etc/nginx/htpasswd.users.
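The server block references /etc/nginx/htpasswd.users, which has to contain at least one user before Nginx can authenticate anyone. One way to create an entry, sketched here under assumptions, is the OpenSSL `passwd` command with the Apache `apr1` scheme. The helper name `htpasswd_entry`, the user name `kibanaadmin`, and the password are placeholders, not values from this tutorial.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one "user:hash" line in the format
# accepted by the Nginx auth_basic_user_file directive.
htpasswd_entry() {
  local user=$1 password=$2
  # Feed the password on stdin so it is not passed as an openssl argument.
  printf '%s:%s\n' "$user" "$(printf '%s\n' "$password" | openssl passwd -apr1 -stdin)"
}

# Example (not run automatically; user name and password are placeholders):
#   htpasswd_entry kibanaadmin 'change-me' | sudo tee -a /etc/nginx/htpasswd.users
```

The hash is salted, so the output differs on every run even for the same password.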

Create the symbolic link using the following command to enable the newly created site.

sudo ln -s /etc/nginx/sites-available/file.com /etc/nginx/sites-enabled/file.com

Next, check the configuration for syntax errors using the following command:

sudo nginx -t

A default site is also present in the Nginx sites-enabled directory, and Nginx loads it by default. Open the main Nginx configuration file and make sure it includes the newly created 'file.com' site:

sudo nano /etc/nginx/nginx.conf

Finally, save the configuration file and restart the Nginx service.

Step 3: Start and Enable the Kibana

Start and enable Kibana using the following systemctl commands:

sudo systemctl start kibana

sudo systemctl enable kibana

To check whether Kibana is running, check its status with the following command:

sudo systemctl status kibana

Now, you can open up Kibana in your browser with http://localhost:5601.

Note: If the UFW firewall is enabled, you must allow traffic on port 5601 to access the Kibana dashboard.

sudo ufw allow 5601/tcp

Installing Logstash

Step 1: Install Logstash

Logstash is a highly customizable component of the ELK Stack. You can install it directly from the repository with the following command:

sudo apt install logstash

Step 2: Configure Logstash

Logstash keeps its custom configuration files in /etc/logstash/conf.d/. There you can configure its input, filter, and output pipelines according to your requirements.
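As an illustration of what such a pipeline file can look like, the sketch below writes a minimal configuration with one input and one output. It is an example under assumptions, not part of the setup in this tutorial: the file name is arbitrary, and the pipeline is only useful if a Beat is configured to send to Logstash on port 5044 rather than directly to Elasticsearch.

```shell
#!/usr/bin/env bash
# Write an example pipeline to a local file first; the file name is arbitrary.
conf_file="./beats-example.conf"

cat > "$conf_file" <<'EOF'
# Input: accept events from Beats shippers (5044 is the conventional Beats port).
input {
  beats {
    port => 5044
  }
}

# Output: index events into the local Elasticsearch node.
output {
  elasticsearch {
    hosts => ["http://localhost:9200"]
  }
}
EOF

echo "Wrote $conf_file"
# To use it, copy the file into the Logstash configuration directory:
#   sudo cp "$conf_file" /etc/logstash/conf.d/
```

A filter section can be added between the input and output blocks when the events need parsing or enrichment.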

Step 3: Start and Enable Logstash

To start and enable Logstash, run the following commands:

sudo systemctl start logstash

sudo systemctl enable logstash

You can check whether Logstash is active using the following command:

sudo systemctl status logstash

Installing Beats

Most Beats shippers are installed in much the same way as the other components, so let's look at Metricbeat as an example.

Metricbeat sends system-level metrics such as memory, CPU usage, etc. Here we will send data directly to Elasticsearch.

Step 1: Install Metricbeat

First, install Metricbeat on the server using the apt command:

sudo apt install metricbeat

Step 2: Configure the Metricbeat

Open the metricbeat.yml file in any editor and search for the Kibana section. Then uncomment the host line by removing the '#' symbol:

sudo nano /etc/metricbeat/metricbeat.yml

host: "localhost:5601"

Similarly, uncomment the hosts line in the Elasticsearch output section, which also points to localhost. We will keep the rest of the settings at their defaults.

hosts: ["localhost:9200"]

Verify the configuration by running the following command:

sudo metricbeat test output

If the output shows no errors, Metricbeat can communicate with Elasticsearch. If the module you need, such as the system module, is not enabled, you can enable it with the following command:

sudo metricbeat modules enable <module_name>

To see each module's configuration files, go to the Metricbeat configuration directory; they are all in its modules.d directory. For example, to open the system.yml module file, use the following command:

sudo nano /etc/metricbeat/modules.d/system.yml

You can change this configuration file, but we will keep the defaults here. Kibana uses dashboards to visualize the data, and Metricbeat ships with default dashboards for its modules. Load the default Metricbeat dashboards with the following command:

sudo metricbeat setup

Step 3: Start and Enable the Metricbeat

Start Metricbeat using the following command:

sudo systemctl start metricbeat

Check the current status of Metricbeat.

sudo systemctl status metricbeat

Metricbeat now starts monitoring the server and creates an Elasticsearch index, which you can also define in Kibana.
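With all four components installed, it can be handy to check their service states in one pass. The following is a small sketch: `service_state` is a hypothetical helper name, and it falls back to "unknown" on systems where systemctl is missing or systemd is not running.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the systemd state of a service,
# or "unknown" if the state cannot be determined.
service_state() {
  local state
  state=$(systemctl is-active "$1" 2>/dev/null) || true
  echo "${state:-unknown}"
}

# Print one line per ELK component installed in this tutorial.
for svc in elasticsearch kibana logstash metricbeat; do
  printf '%-15s %s\n' "$svc" "$(service_state "$svc")"
done
```

Any service that does not report "active" can then be inspected in more detail with the systemctl status commands shown earlier.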

Pros and Cons of ELK Stack

The Elastic Stack comes with certain benefits and drawbacks.

| Pros | Cons |
|---|---|
| Free to get started | Complex Management Requirements |
| Official Clients in Multiple Programming Languages | Scaling Challenges |
| Real-Time Data Analysis & Visualization | Data Retention Tradeoffs |
| Centralized Logging Capabilities | Stability & Uptime Issues |
| Multiple Hosting Options | High Cost of Ownership |

Uses of ELK Stack

Real-time log monitoring across various sources opens up a number of creative use cases for the Elastic Stack, such as the following:

- Error detection in new applications: When you build a new application, the ELK Stack surfaces its errors from the logs. This lets you fix errors and bugs as early as possible and improve the design of your application.

- Traffic monitoring: It helps monitor website traffic data and shows whether your server is overloaded. The data that the ELK Stack produces helps users make sound business decisions and solve problems.

- Web scraping: The ELK Stack can collect, index, and search unstructured data from various sources, making it simple to gather and visualize web-scraped information.

- E-commerce solutions: Faster responses, aggregation, indexing, and full-text search give a better user experience. Visually monitoring search behavior and trends in Elasticsearch helps to enhance trend analysis.

- Security monitoring: Server monitoring is one of the important security applications of the ELK Stack. A real-time warning system helps detect server attacks by flagging unusual requests as soon as they are noticed, which minimizes the damage they cause.

Wrapping Up

In this blog, we have covered the ELK Stack in detail. As mentioned earlier, the ELK Stack is built from open-source tools, so you can get started without paying anything. We have also described a step-by-step approach to setting up the ELK Stack, including all its related tools.