Boosting in Machine Learning | Boosting and AdaBoost

Introduction

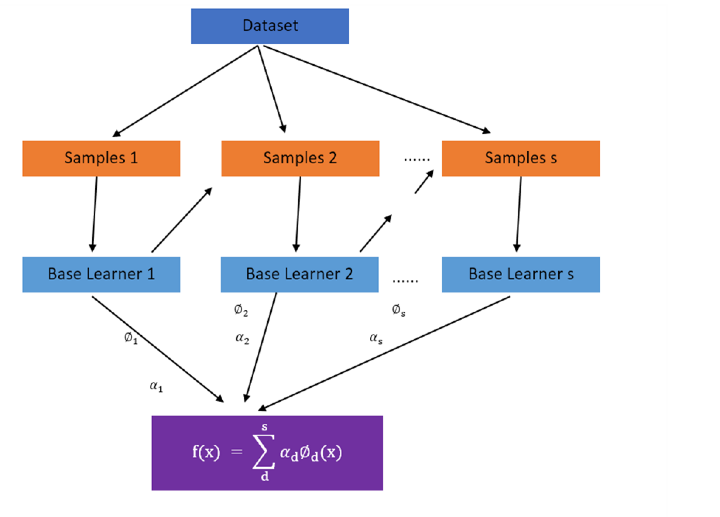

Boosting is a class of ensemble modeling algorithms that builds a strong model from several weak models. In boosting, the models are trained in series rather than independently. First, a single model is trained on the original training data. A second model is then trained on the errors produced by the first and tries to correct them. This process repeats, adding new models until the predictions on the training data have no errors or a maximum number of models has been reached.
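The sequential idea can be sketched in a few lines of NumPy. This is a minimal illustration, not AdaBoost: it assumes a regression task and the residual-fitting variant of boosting (the approach gradient boosting uses with squared loss), in which each new one-split model (a "stump") is fitted to the errors left by the models before it. The helper names `fit_stump` and `boost`, the `learning_rate` shrinkage factor, and the toy sine data are illustrative choices.

```python
import numpy as np

def fit_stump(x, r):
    """Fit a one-split regression stump to residuals r on a 1-D feature x (least squares)."""
    best = None
    for t in np.unique(x)[:-1]:  # the largest value would leave the right side empty
        left_mean, right_mean = r[x <= t].mean(), r[x > t].mean()
        pred = np.where(x <= t, left_mean, right_mean)
        sse = np.sum((r - pred) ** 2)
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return lambda z: np.where(z <= t, left_mean, right_mean)

def boost(x, y, n_models=50, learning_rate=0.5):
    """Add stumps one after another, each fitted to the current residual errors."""
    models = []
    pred = np.zeros_like(y, dtype=float)
    for _ in range(n_models):
        residual = y - pred                     # errors left by the models so far
        stump = fit_stump(x, residual)          # the new model learns those errors
        models.append(stump)
        pred = pred + learning_rate * stump(x)  # apply a partial correction
    return models, pred

x = np.linspace(0, 6, 60)
y = np.sin(x)
models, pred = boost(x, y)
mse = np.mean((y - pred) ** 2)
print(f"{len(models)} models, training MSE {mse:.4f}")
```

Each round lowers the training error a little; a `learning_rate` below 1 makes every correction deliberately partial, so no single model dominates.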

Boosting Technique

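Boosting primarily reduces bias in the model. However, boosting algorithms can overfit, because each new learner concentrates on the points that are still misclassified, including noisy ones. To tackle overfitting, hyperparameter tuning (for example, of the number of learners and the learning rate) is of prime importance in boosting.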

Examples of boosting are AdaBoost, XGBoost, and CatBoost.
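Because hyperparameter tuning matters so much for boosting, a common approach is a cross-validated grid search over the number of learners and the learning rate. The sketch below assumes scikit-learn's `GridSearchCV` and `AdaBoostClassifier`; the grid values and the synthetic dataset are arbitrary illustrative choices, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic data; a fixed random_state makes the search repeatable
X, y = make_classification(n_samples=500, n_features=10, random_state=0)

param_grid = {
    "n_estimators": [25, 50, 100],     # how many weak learners are chained
    "learning_rate": [0.1, 0.5, 1.0],  # weight applied to each learner at each round
}
search = GridSearchCV(AdaBoostClassifier(random_state=0), param_grid, cv=5)
search.fit(X, y)

print("best parameters:", search.best_params_)
print("mean cross-validated accuracy:", round(search.best_score_, 3))
```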

What advantages do boosting methods offer?

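Boosting can increase accuracy because the strength of many weak learners is combined into a better model. In regression, the weak learners' predictions are combined, for example by a weighted average; in classification, they are combined by a weighted majority vote.

Boosting can also resist overfitting in practice, because the weights are adjusted step by step to minimize errors, although, as noted above, this depends on tuning hyperparameters such as the number of learners.

Each stage of a boosting ensemble is a simple decision process (for example, a one-split decision tree), so the individual stages are easy to inspect, even though the full ensemble is harder to interpret than a single tree.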
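The two ways of combining weak learners described above can be sketched in plain Python. The function names and the learner weights are hypothetical; in AdaBoost the weights would come from each learner's training error.

```python
from collections import defaultdict

def weighted_vote(predictions, weights):
    """Classification: return the label with the largest total learner weight."""
    totals = defaultdict(float)
    for label, w in zip(predictions, weights):
        totals[label] += w
    return max(totals, key=totals.get)

def weighted_average(predictions, weights):
    """Regression: weight-normalized average of the learners' numeric predictions."""
    return sum(p * w for p, w in zip(predictions, weights)) / sum(weights)

# Three hypothetical weak learners with made-up weights
print(weighted_vote(["cat", "dog", "dog"], [0.9, 0.3, 0.4]))  # cat (0.9 beats 0.3 + 0.4)
print(weighted_average([2.0, 3.0, 4.0], [0.5, 0.25, 0.25]))    # 2.75
```

Real implementations differ in the details: scikit-learn's `AdaBoostRegressor`, for instance, combines its learners with a weighted median rather than a weighted mean.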

In this article, we look at the AdaBoost boosting technique in detail.

AdaBoost Ensemble Technique

AdaBoost, short for Adaptive Boosting, tries to correct the errors produced by the predecessor model. At each step, it concentrates more on the training data points that the previous model fit poorly.

A series of weak learners is trained on differently weighted versions of the training data. Initially, each observation is given equal weight and the first learner is fitted to the training data. After the first learner has been fitted, the observations it predicted incorrectly receive higher weights. This iterative process continues, adding learners until the training error stops improving or a maximum number of learners is reached.
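For binary classification with labels y_i in {-1, +1} and N training points, the reweighting can be written as in the textbook (discrete) AdaBoost formulation, where h_t is the weak learner fitted in round t, ε_t its weighted error, α_t its vote weight, Z_t a normalizing constant that makes the new weights sum to 1, and T the number of rounds. Library implementations, such as the multi-class SAMME variant, differ in their details.

```latex
\begin{aligned}
w_i^{(1)} &= \frac{1}{N}, \qquad i = 1, \dots, N \\
\varepsilon_t &= \frac{\sum_{i=1}^{N} w_i^{(t)} \, \mathbf{1}\left[h_t(x_i) \neq y_i\right]}{\sum_{i=1}^{N} w_i^{(t)}} \\
\alpha_t &= \frac{1}{2} \ln \frac{1 - \varepsilon_t}{\varepsilon_t} \\
w_i^{(t+1)} &= \frac{w_i^{(t)} \exp\left(-\alpha_t \, y_i \, h_t(x_i)\right)}{Z_t} \\
H(x) &= \operatorname{sign}\left( \sum_{t=1}^{T} \alpha_t \, h_t(x) \right)
\end{aligned}
```

For example, a learner with weighted error ε_t = 0.2 gets α_t = ½ ln(0.8 / 0.2) = ln 2 ≈ 0.693. Before normalization, each misclassified point's weight is multiplied by e^0.693 = 2 and each correctly classified point's weight by e^-0.693 = 0.5, so the misclassified points (0.2 × 2 = 0.4) and the correct ones (0.8 × 0.5 = 0.4) end up carrying equal total weight in the next round.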

AdaBoost is most commonly used with decision trees for classification problems, but it can also be used for regression.
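A minimal regression sketch, assuming scikit-learn's `AdaBoostRegressor` with its default weak learner (a regression tree of depth 3); the noisy sine data is made up for illustration.

```python
import numpy as np
from sklearn.ensemble import AdaBoostRegressor

# Noisy sine curve as a made-up regression target
rng = np.random.RandomState(0)
X = np.sort(5 * rng.rand(200, 1), axis=0)
y = np.sin(X).ravel() + 0.1 * rng.randn(200)

# With no estimator given, each weak learner is a depth-3 regression tree
reg = AdaBoostRegressor(n_estimators=100, random_state=0)
reg.fit(X, y)
print("training R^2:", round(reg.score(X, y), 3))
```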

As an example, consider AdaBoost with decision trees. First, a decision tree is trained on the initial training data. The weights of the points it misclassifies are then increased, and a second decision tree classifier is trained using the updated weights. This is repeated iteratively: each new model is trained on the weights boosted by the previous one, and AdaBoost adds learners sequentially to produce better results.
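The decision-tree procedure just described can be sketched from scratch with NumPy, using one-split trees ("decision stumps") as the weak learners. This is a minimal sketch of classic binary (discrete) AdaBoost, assuming labels in {-1, +1}; the helper names, the exhaustive stump search, and the circular toy dataset are illustrative choices, and scikit-learn's own implementation differs in its details.

```python
import numpy as np

def stump_predict(X, feature, threshold, polarity):
    """A decision stump: +1 on one side of the threshold, -1 on the other."""
    return np.where(polarity * (X[:, feature] - threshold) > 0, 1, -1)

def fit_weighted_stump(X, y, w):
    """Try every (feature, threshold, polarity) and keep the lowest weighted error."""
    best_err, best_params = np.inf, None
    for feature in range(X.shape[1]):
        for threshold in np.unique(X[:, feature]):
            for polarity in (1, -1):
                pred = stump_predict(X, feature, threshold, polarity)
                err = w[pred != y].sum()
                if err < best_err:
                    best_err, best_params = err, (feature, threshold, polarity)
    return best_err, best_params

def adaboost(X, y, n_rounds=20):
    w = np.full(len(y), 1.0 / len(y))  # equal weight for every observation
    ensemble = []
    for _ in range(n_rounds):
        err, params = fit_weighted_stump(X, y, w)
        err = np.clip(err / w.sum(), 1e-10, 1 - 1e-10)  # weighted error rate
        alpha = 0.5 * np.log((1 - err) / err)           # this stump's vote weight
        pred = stump_predict(X, *params)
        w = w * np.exp(-alpha * y * pred)  # misclassified points (y * pred = -1) gain weight
        w = w / w.sum()                    # renormalize so the weights sum to 1
        ensemble.append((alpha, params))
    return ensemble

def adaboost_predict(ensemble, X):
    """Weighted vote of all stumps: the sign of the alpha-weighted sum."""
    score = sum(alpha * stump_predict(X, *params) for alpha, params in ensemble)
    return np.where(score >= 0, 1, -1)

# Toy data: points inside a circle are +1, outside are -1 (no single stump separates them)
rng = np.random.RandomState(0)
X = rng.uniform(-1, 1, size=(200, 2))
y = np.where(X[:, 0] ** 2 + X[:, 1] ** 2 < 0.5, 1, -1)

ensemble = adaboost(X, y, n_rounds=20)
print("training accuracy:", np.mean(adaboost_predict(ensemble, X) == y))
```

Note how each round needs the weights `w` produced by the round before it, which is exactly why the rounds run one after another.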

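A disadvantage of this algorithm is that training cannot be parallelized across learners, because each learner depends on the sample weights produced by the one before it.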

Working steps of AdaBoost

Assign equal weights to each observation.

Fit a model on the initial subset of the data.

Make predictions on the entire dataset.

Compute the error from the deviation of the predicted values from the actual values.

Train the next model, assigning higher weights to the misclassified points with higher errors.

Repeat this process until the error value no longer changes or the maximum number of models is reached.
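One way to apply the "stop when the error no longer changes" rule in practice is to fit with a generous cap on the number of learners and then inspect the error after each stage. The sketch below assumes scikit-learn's `AdaBoostClassifier.staged_score`, which yields the score after each boosting iteration; the dataset, the 200-learner cap, and picking the stage with the best validation accuracy are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.25, random_state=0)

# Fit with a generous cap on the number of learners
clf = AdaBoostClassifier(n_estimators=200, random_state=0)
clf.fit(X_train, y_train)

# staged_score yields the validation accuracy after 1, 2, ... learners
val_scores = np.array(list(clf.staged_score(X_val, y_val)))
best_n = int(np.argmax(val_scores)) + 1
print(f"{len(val_scores)} stages, best validation accuracy {val_scores.max():.3f} with {best_n} learners")
```

The model can then be refit with `n_estimators=best_n`; `staged_score` can yield fewer stages than the cap if boosting terminates early.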

How to best prepare data for AdaBoost?

The training data should be of high quality, since AdaBoost concentrates on correcting misclassifications: mislabeled examples keep being misclassified and therefore keep gaining weight.

Outliers should be removed from the training data; otherwise the algorithm may spend its effort trying to correct unrealistic errors.

The training data should be free of unnecessary noise, which degrades data quality in general.
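One common way to drop outliers before boosting is Tukey's interquartile-range (IQR) rule. This NumPy sketch, with the hypothetical helper `drop_outliers_iqr` and a made-up five-row dataset, removes any row in which some feature falls outside [Q1 - 1.5 * IQR, Q3 + 1.5 * IQR]; the factor 1.5 is a conventional default, not something AdaBoost requires.

```python
import numpy as np

def drop_outliers_iqr(X, y, k=1.5):
    """Keep only rows whose every feature lies within [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, q3 = np.percentile(X, [25, 75], axis=0)  # per-feature quartiles
    iqr = q3 - q1
    inside = (X >= q1 - k * iqr) & (X <= q3 + k * iqr)
    keep = inside.all(axis=1)                    # keep a row only if no feature is extreme
    return X[keep], y[keep]

# Made-up data: the last row has an extreme value in the first feature
X = np.array([[1.0, 2.0], [1.1, 2.5], [0.9, 3.0], [1.0, 3.5], [50.0, 2.0]])
y = np.array([0, 1, 0, 1, 0])

X_clean, y_clean = drop_outliers_iqr(X, y)
print(X_clean.shape)  # (4, 2)
```

With a feature whose IQR is zero, every value that differs from the quartiles is rejected, so inspect the bounds before filtering real data.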

Example

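```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier

# Synthetic binary classification data: 2000 samples with 30 features.
# No random_state is set, so the data (and the predicted class) can vary between runs.
train_X, train_y = make_classification(n_samples=2000, n_features=30, n_informative=25, n_redundant=5)

# Default AdaBoostClassifier: 50 boosting rounds of depth-1 decision trees
clf = AdaBoostClassifier()
clf.fit(train_X, train_y)

# One new observation with 30 feature values, matching the training data
test_rowdata = [[-2.56789,1.9012436,0.0490456,-0.945678,-3.545673,1.945555,-7.746789,-2.4566667,-1.845677896,-1.6778994,2.336788043,-4.305666617,0.466641,-1.2866634,-10.6777077,-0.766663,-3.5556621,2.045456,0.055673,0.94545456,0.5677,-1.4567,4.3333,3.89898,1.56565,-0.56565,-0.45454,4.33535,6.34343,-4.42424]]
y_hat = clf.predict(test_rowdata)
print('Class predicted %d' % y_hat[0])
```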

Output

Class predicted 0

Conclusion

Boosting uses multiple weak learners to improve the final accuracy and produce better results. It helps reduce bias in the models and, with appropriate tuning, can resist overfitting. AdaBoost is a boosting technique built around reweighting: misclassified points receive higher weights, so the learners added over the iterative process focus on improving them.