XGBoost - History & Architecture

XGBoost, or Extreme Gradient Boosting, is a machine learning method that uses a gradient boosting framework. It offers features like regularization to prevent over-fitting, built-in handling of missing data, and a customizable design that allows users to define their own optimization objectives and evaluation criteria.

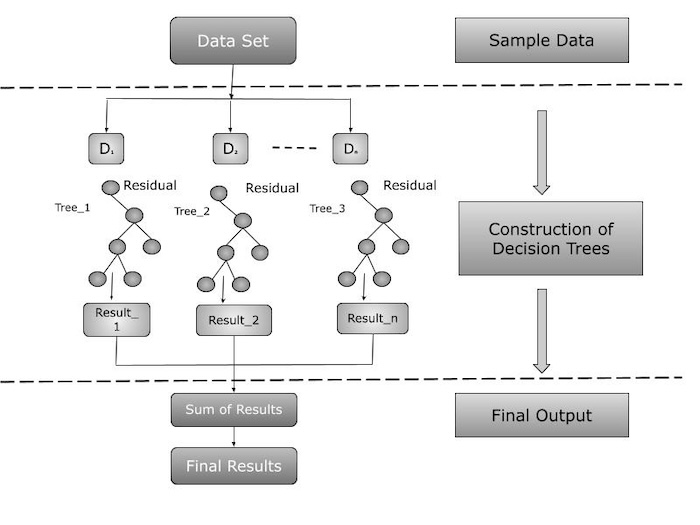

Architecture of XGBoost

The architecture of XGBoost stands out for its scalable and efficient implementation of gradient boosted decision trees. Regularization, built-in handling of missing values, and support for user-defined objectives and evaluation criteria all contribute to the predictive model's robustness and accuracy.

Its architecture can be broadly divided into two main components −

Sequential Learning

XGBoost commonly uses decision trees as its base learners. Each subsequent tree is fitted to the errors of the trees before it, so it concentrates on the data points that the current ensemble predicts poorly. The approach works like gradient descent: each new tree is fitted to the gradient of the loss function with respect to the current predictions, and its leaf weights are chosen to reduce that loss.
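The sequential idea can be illustrated with a minimal sketch in plain Python. It is not XGBoost's implementation: it assumes squared-error loss (where the negative gradient is simply the residual), one-split trees (stumps), a single feature, and made-up data. The function names `fit_stump` and `boost` are hypothetical helpers introduced only for this illustration.

```python
# Minimal sketch of sequential boosting with squared-error loss.
# Illustrative only: this is NOT how XGBoost is implemented internally.

def fit_stump(xs, residuals):
    """Find the one-split tree (stump) that best fits the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # keep the right side non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_value = sum(left) / len(left)
        right_value = sum(right) / len(right)
        sse = (sum((r - left_value) ** 2 for r in left)
               + sum((r - right_value) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_value, right_value)
    return best[1], best[2], best[3]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def mean_squared_error(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


def boost(xs, ys, n_rounds=5, learning_rate=0.5):
    base = sum(ys) / len(ys)          # start from the mean prediction
    preds = [base] * len(ys)
    stumps = []
    losses = [mean_squared_error(ys, preds)]
    for _ in range(n_rounds):
        # For squared error, the negative gradient is the residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Each new tree corrects the current ensemble's errors.
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
        losses.append(mean_squared_error(ys, preds))
    return base, stumps, losses


# Made-up toy data for illustration.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [1.2, 1.9, 3.1, 3.9, 5.2, 5.8]
base, stumps, losses = boost(xs, ys)
print(losses)  # training loss after each round
```

Each round lowers the training loss because the new stump is fitted to what the previous rounds got wrong. XGBoost applies the same principle, with the addition of second-order gradient information and regularization.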

Ensemble

XGBoost generates an ensemble of decision trees and combines their predictions to improve overall accuracy. The final prediction is the sum of all the trees' outputs, with each tree's contribution scaled down by the learning rate. For classification, this sum is then converted into a probability.
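As a rough sketch of how an additive tree ensemble produces a prediction, the example below sums the outputs of three hand-written "trees". The trees, feature names, thresholds, and the `ensemble_margin` helper are all hypothetical and chosen only for illustration. The sketch assumes a binary classification setting in which the raw sum is passed through the logistic function.

```python
import math

# Hypothetical hand-written "trees": each maps a row to a raw score.
def tree_1(row):
    return 0.8 if row["age"] > 30 else -0.6

def tree_2(row):
    return 0.4 if row["income"] > 50_000 else -0.2

def tree_3(row):
    return 0.1 if row["age"] > 50 else -0.1


def ensemble_margin(row, trees, learning_rate=0.3, base_margin=0.0):
    """Additive ensemble: base margin plus the shrunken output of every tree."""
    return base_margin + sum(learning_rate * tree(row) for tree in trees)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


trees = [tree_1, tree_2, tree_3]
row = {"age": 42, "income": 65_000}

margin = ensemble_margin(row, trees)  # raw additive score
probability = sigmoid(margin)         # logistic link for binary classification
print(margin, probability)
```

No single tree decides the outcome. Each one nudges the raw score up or down, and the learning rate controls how large each nudge is.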

Key Features of XGBoost Architecture

Here is an overview of the main features of the XGBoost architecture −

Regularization: XGBoost uses regularization techniques to avoid over-fitting.

Parallel Processing: It employs parallel processing to accelerate training.

Flexibility: It can handle regression, classification, and ranking problems.

High Performance: XGBoost has consistently performed well in a wide range of machine learning competitions.

XGBoost Learning Type

XGBoost is primarily a supervised learning method, which means it learns from labeled data. The model is trained on a dataset that contains both input features and output labels. This training helps the model learn the relationship between inputs and outputs, allowing it to make predictions or classifications on previously unseen data.

XGBoost excels at dealing with structured data and is commonly used for regression (predicting continuous values) and classification (predicting discrete labels).

XGBoost Algorithmic Approach

XGBoost's algorithmic foundation is based on tree-based techniques, mainly gradient boosting. Gradient boosting is an ensemble technique that builds multiple decision trees in sequence, with each tree trying to correct the weaknesses of the previous ones. This creates a strong learner from a large number of weak learners, improving the model's overall accuracy and robustness.

XGBoost is known for building regularization into its gradient boosting framework. Regularization (both L1 and L2) is used to prevent over-fitting and improve the model's ability to generalize to new data. Also, XGBoost optimizes a loss function during the tree building process, which is crucial for effective learning in regression and classification tasks.
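To make the effect of L1 and L2 regularization concrete, the sketch below computes the weight of a single tree leaf from the sums of first-order gradients and second-order gradients (Hessians) of the samples in that leaf. It assumes the standard second-order regularized objective for one leaf, G·w + ½(H + λ)·w² + α·|w|. The function name `leaf_weight` is a hypothetical helper, and the parameter names `reg_lambda` and `reg_alpha` are borrowed from XGBoost's scikit-learn interface. Treat it as an illustration of the formula, not as XGBoost's actual code.

```python
def leaf_weight(grad_sum, hess_sum, reg_lambda=1.0, reg_alpha=0.0):
    """Leaf weight that minimizes G*w + 0.5*(H + lambda)*w**2 + alpha*|w|."""
    # L1 (alpha): soft-threshold the gradient sum toward zero.
    if grad_sum > reg_alpha:
        shrunk = grad_sum - reg_alpha
    elif grad_sum < -reg_alpha:
        shrunk = grad_sum + reg_alpha
    else:
        return 0.0  # the gradient signal is too weak; the leaf outputs zero
    # L2 (lambda): a larger denominator pulls the weight toward zero.
    return -shrunk / (hess_sum + reg_lambda)


# Made-up gradient statistics for one leaf.
print(leaf_weight(-10.0, 4.0, reg_lambda=0.0))                  # no regularization
print(leaf_weight(-10.0, 4.0, reg_lambda=1.0))                  # L2 only
print(leaf_weight(-10.0, 4.0, reg_lambda=1.0, reg_alpha=2.0))   # L1 and L2
print(leaf_weight(-1.5, 4.0, reg_lambda=1.0, reg_alpha=2.0))    # L1 zeroes the leaf
```

Both penalties shrink leaf weights, which makes individual trees more conservative. L2 shrinks every weight proportionally, while L1 can set weakly supported leaf weights exactly to zero.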

XGBoost's effectiveness and popularity across many types of machine learning applications can be attributed to its combination of supervised learning with a sophisticated tree-based gradient boosting strategy, enhanced by regularization methods.

Summary

XGBoost is a powerful machine learning algorithm that improves predictions by generating and combining several decision trees. It became popular in the mid-2010s after winning several competitions. XGBoost works well with structured data, can solve regression and classification problems, and uses regularization methods to avoid over-fitting.