
Types Of Activation Functions in ANN

In this article we will learn what an ANN is and look at the most commonly used activation functions, with a Python program for each one. Before diving into the types, let's first see what an ANN is.

ANN

An artificial neural network (ANN) is a machine learning model loosely inspired by the biological neural networks of the human brain. It is built from interconnected nodes, called neurons. Each neuron receives signals from the neurons connected to it, processes them, and passes the result on to the next neurons, much as neurons in the brain are connected to one another.

There are three types of layers in an ANN:

Input layer

This layer is where we feed the data into the neural network. The number of neurons in this layer is equal to the number of features in the data.

Hidden Layer

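This layer is called hidden because its values are neither supplied as input nor returned as output: it sits between the input layer and the output layer, and it is where the data is processed. A network can have one or more hidden layers, and the output of the last hidden layer is passed to the output layer.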

Output Layer

This layer is where the output of the network is produced.

The neurons in adjacent layers are connected by weights. During training the weights are adjusted so that the network learns to produce the desired output.
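To make "adjusting the weights" concrete, here is a minimal sketch of a single gradient-descent step for one linear neuron (no activation function) trained with mean squared error. The toy data, the learning rate of 0.1 and the variable names are hypothetical choices for illustration; real networks repeat this kind of update over many steps and across all layers using backpropagation.

```python
import numpy as np

# Hypothetical toy data: 4 samples with 2 features each, and their targets.
X = np.array([[0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [2.0, 1.0]])
y = np.array([1.0, 1.0, 2.0, 3.0])

rng = np.random.default_rng(0)
w = rng.normal(size=2)  # one weight per input feature, randomly initialised
b = 0.0                 # bias term
lr = 0.1                # learning rate (assumed value)

def mse(w, b):
    """Mean squared error of the linear neuron w.x + b on the toy data."""
    return np.mean((X @ w + b - y) ** 2)

loss_before = mse(w, b)

# One gradient-descent step: move w and b against the gradient of the loss.
error = X @ w + b - y               # prediction error for each sample
grad_w = 2 * X.T @ error / len(y)   # dLoss/dw
grad_b = 2 * np.mean(error)         # dLoss/db
w = w - lr * grad_w
b = b - lr * grad_b

loss_after = mse(w, b)
print(loss_before, loss_after)
```

With a small enough learning rate, each step lowers the loss a little; training simply repeats such steps until the loss stops improving.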

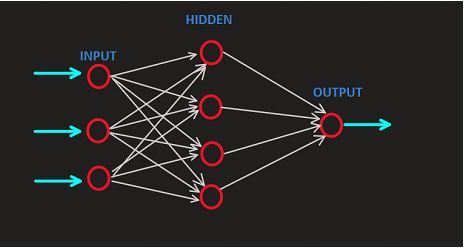

Representation of an ANN

The diagram above represents a neural network. The first layer is the input layer, which receives the data we provide to the model and passes it on to the hidden layer that follows it. The hidden layer processes the data, and its result is passed to the output layer, which produces the output of the network. Each connection carries a weight that represents how strongly the corresponding input value influences the receiving neuron.
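The flow of data through the three layers can be sketched as a forward pass in NumPy. This is a minimal illustration under assumed sizes (3 input features, 4 hidden neurons, 2 outputs) with random weights; the hidden layer uses tanh, one of the activation functions described below, chosen here only as a placeholder, and the output layer is left linear.

```python
import numpy as np

rng = np.random.default_rng(42)

n_features, n_hidden, n_outputs = 3, 4, 2   # assumed layer sizes

# Weight matrices and bias vectors connecting consecutive layers.
W1 = rng.normal(size=(n_hidden, n_features))   # input -> hidden
b1 = np.zeros(n_hidden)
W2 = rng.normal(size=(n_outputs, n_hidden))    # hidden -> output
b2 = np.zeros(n_outputs)

x = np.array([0.5, -1.2, 3.0])   # one sample: one value per input neuron

# Each hidden neuron computes a weighted sum of all inputs plus its bias,
# then applies an activation function (tanh as a placeholder).
hidden = np.tanh(W1 @ x + b1)

# The output layer does the same with the hidden values as its inputs
# (no activation here, i.e. a linear output).
output = W2 @ hidden + b2

print(hidden.shape, output.shape)  # (4,) (2,)
```

Each row of `W1` holds the weights of one hidden neuron, which is why the number of columns must match the number of input features.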

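Types of Activation Functions in ANN

An activation function is applied to the weighted sum of a neuron's inputs (plus its bias) and determines the value the neuron passes on. Without a non-linear activation, a stack of layers would collapse into a single linear transformation, so activation functions are what let an ANN learn non-linear relationships. There are many activation functions; below we look at the most commonly used ones.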

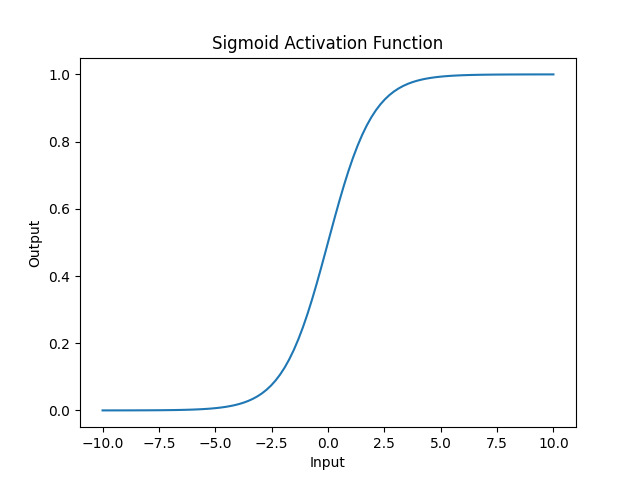

Sigmoid Activation Function

The sigmoid is a non-linear activation function that maps any input to a value between 0 and 1, which can be interpreted as a probability. For this reason it is commonly used in the output layer for binary classification problems.

Example

```python
import numpy as np
import matplotlib.pyplot as plt

def sigmoid_Activation_fun(inp):
    return 1 / (1 + np.exp(-inp))

inp = np.linspace(-10, 10, 100)

# applying sigmoid function
out = sigmoid_Activation_fun(inp)

plt.plot(inp, out)
plt.xlabel('Input')
plt.ylabel('Output')
plt.title('Sigmoid Activation Function')
plt.show()
```

Output

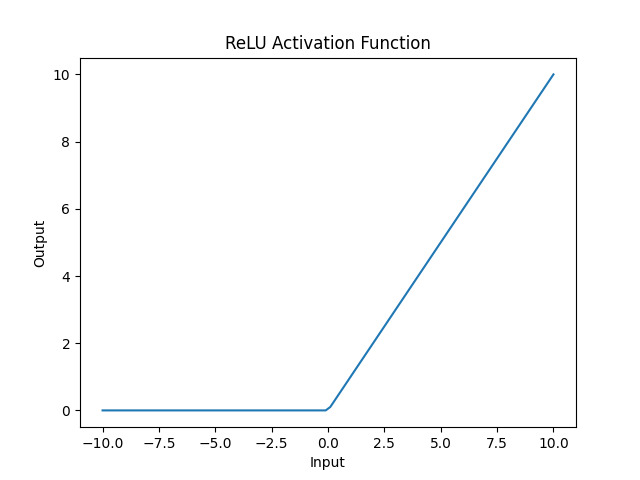
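Training relies on the derivative of the activation function, and for the sigmoid it has the convenient form s(z) * (1 - s(z)). The sketch below (function names are illustrative) computes it and shows that it never exceeds 0.25 and becomes tiny for large positive or negative inputs. This is why gradients passing through many sigmoid layers tend to shrink, the vanishing gradient problem mentioned in the next section.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def sigmoid_grad(z):
    # Derivative of the sigmoid: s(z) * (1 - s(z)), largest (0.25) at z = 0
    s = sigmoid(z)
    return s * (1 - s)

z = np.array([-10.0, -2.0, 0.0, 2.0, 10.0])
print(sigmoid(z))
print(sigmoid_grad(z))
```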

Rectified Linear Unit (ReLU)

ReLU is one of the most widely used activation functions. It introduces non-linearity by mapping negative values to 0 and leaving positive values unchanged. It is cheap to compute, and because its gradient is 1 for every positive input, it suffers much less from the vanishing gradient problem than sigmoid and tanh, which often lets deep networks train faster. Its main drawback is that a neuron whose inputs stay negative always outputs 0 and receives no gradient, the so-called "dying ReLU" problem.

Example

```python
import numpy as np
import matplotlib.pyplot as plt

def relu_activation_fun(inp):
    return np.maximum(0, inp)

inp = np.linspace(-10, 10, 100)

# Applying the ReLU
out = relu_activation_fun(inp)

plt.plot(inp, out)
plt.xlabel('Input')
plt.ylabel('Output')
plt.title('ReLU Activation Function')
plt.show()
```

Output

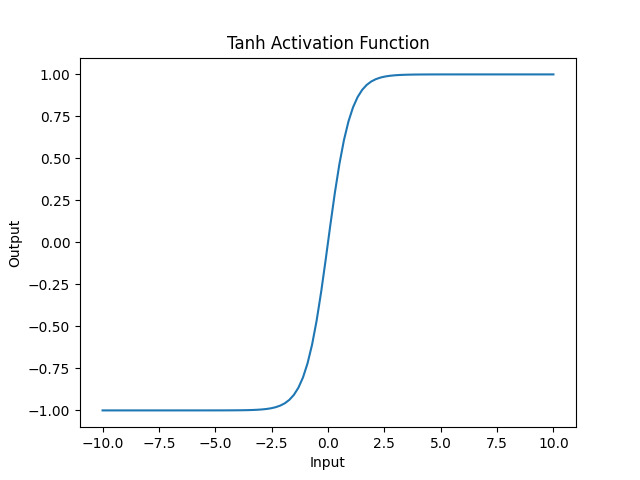
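Why ReLU helps with vanishing gradients can be seen in a deliberately simplified sketch: backpropagation multiplies one activation derivative per layer, so here we compare the product of 10 ReLU derivatives with the product of 10 sigmoid derivatives. The depth of 10 and the pre-activation value of 1.0 are arbitrary assumptions, and the weights, which also enter the real product, are ignored.

```python
import numpy as np

def relu_grad(z):
    # Derivative of ReLU: 1 where z > 0, 0 elsewhere
    # (the value at exactly z = 0 is a convention; 0 is used here).
    return (z > 0).astype(float)

def sigmoid_grad(z):
    s = 1 / (1 + np.exp(-z))
    return s * (1 - s)

depth = 10  # hypothetical number of stacked layers
z = 1.0     # assume every layer sees the same positive pre-activation

# Ignoring the weights, the gradient reaching the first layer contains
# one activation derivative per layer, multiplied together.
relu_chain = relu_grad(np.array([z]))[0] ** depth
sigmoid_chain = sigmoid_grad(np.array([z]))[0] ** depth
print(relu_chain, sigmoid_chain)
```

The ReLU product stays at 1, while the sigmoid product drops below one in a million. The zero derivative for negative inputs is also what causes the dying ReLU problem.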

Hyperbolic Tangent (Tanh)

The hyperbolic tangent function, also called the tanh function, has an S-shaped curve like the sigmoid function, but it maps input values to the range -1 to 1 and is centred at zero. This activation function is mostly used in the hidden layers of an ANN.

Example

```python
import numpy as np
import matplotlib.pyplot as plt

def Hyperbolic_tanh_fun(inp):
    return np.tanh(inp)

inp = np.linspace(-10, 10, 100)

# Applying tanh activation function
out = Hyperbolic_tanh_fun(inp)

plt.plot(inp, out)
plt.xlabel('Input')
plt.ylabel('Output')
plt.title('Tanh Activation Function')
plt.show()
```

Output

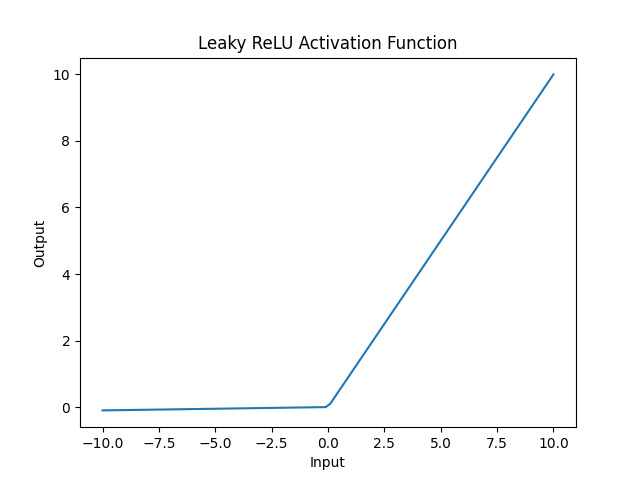
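The similarity between tanh and sigmoid is exact: tanh is a rescaled and shifted sigmoid, tanh(z) = 2 * sigmoid(2z) - 1. The sketch below checks this identity numerically and also shows the practical difference, namely that tanh outputs for symmetric inputs average to zero while sigmoid outputs average to 0.5. The helper name `sigmoid` is illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

z = np.linspace(-5, 5, 11)

# tanh is a rescaled, shifted sigmoid: tanh(z) = 2 * sigmoid(2z) - 1
print(np.allclose(np.tanh(z), 2 * sigmoid(2 * z) - 1))  # True

# tanh is zero-centred (an odd function), sigmoid is centred at 0.5
print(np.tanh(z).mean(), sigmoid(z).mean())
```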

Leaky ReLU

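Leaky ReLU is a modified version of the ReLU function. Where ReLU sets every negative input to 0, leaky ReLU multiplies negative inputs by a small slope (alpha, 0.01 in the example below), so they produce a small negative output instead. Because the gradient for negative inputs is no longer zero, this modification can help avoid the dying ReLU problem.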

Example

```python
import numpy as np
import matplotlib.pyplot as plt

def leaky_relu(inp, alpha=0.01):
    return np.where(inp >= 0, inp, alpha * inp)

inp = np.linspace(-10, 10, 100)

# Applying leaky ReLU activation function
out = leaky_relu(inp)

plt.plot(inp, out)
plt.xlabel('Input')
plt.ylabel('Output')
plt.title('Leaky ReLU Activation Function')
plt.show()
```

Output

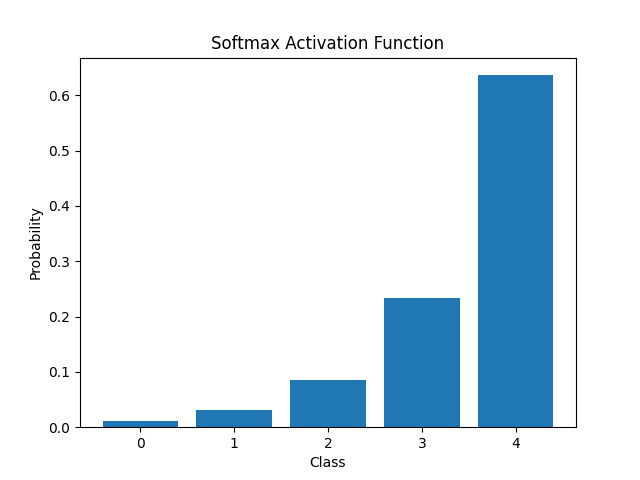
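The difference between ReLU and leaky ReLU shows up most clearly in their derivatives. In this sketch (function names are illustrative, and the default alpha of 0.01 matches the example above) ReLU's gradient is 0 for every negative input, so such a neuron gets no weight update, while leaky ReLU still passes back a gradient of alpha.

```python
import numpy as np

def relu_grad(z):
    # ReLU slope: 1 for positive inputs, 0 otherwise
    return np.where(z > 0, 1.0, 0.0)

def leaky_relu_grad(z, alpha=0.01):
    # Leaky ReLU slope: 1 for non-negative inputs, alpha for negative ones
    return np.where(z >= 0, 1.0, alpha)

z = np.array([-5.0, -0.5, 0.5, 5.0])
print(relu_grad(z))
print(leaky_relu_grad(z))
```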

Softmax Activation Function

The softmax activation function converts a vector of raw scores into a probability distribution: every output lies between 0 and 1, and the outputs sum to 1. This activation function is mostly used in the output layer of multi-class classification problems.
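A few properties of softmax are easy to check in code. The sketch below is one common way to write it, assuming inputs arranged as a batch of score vectors: it subtracts the maximum score before exponentiating, which leaves the result unchanged (softmax is shift-invariant) but prevents `np.exp` from overflowing on large scores. It also shows that for two classes with scores [z, 0], softmax reduces to the sigmoid, which links it to binary classification.

```python
import numpy as np

def softmax(z, axis=-1):
    # Subtracting the max does not change the result (softmax is
    # shift-invariant) but keeps np.exp from overflowing.
    shifted = z - np.max(z, axis=axis, keepdims=True)
    exps = np.exp(shifted)
    return exps / np.sum(exps, axis=axis, keepdims=True)

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

# A batch of 2 samples with 3 class scores each; the second row would
# overflow np.exp without the max subtraction.
logits = np.array([[1.0, 2.0, 3.0],
                   [1000.0, 1001.0, 1002.0]])
probs = softmax(logits)
print(probs.sum(axis=1))

# With two classes whose scores are [z, 0], softmax reduces to sigmoid(z)
z = 1.5
print(softmax(np.array([z, 0.0]))[0], sigmoid(z))
```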

Example

```python
import numpy as np
import matplotlib.pyplot as plt

def softmax_activation_function(inp):
    exps = np.exp(inp)
    return exps / np.sum(exps)

inp = np.array([1, 2, 3, 4, 5])

# Applying softmax activation function
out = softmax_activation_function(inp)

plt.bar(range(len(inp)), out)
plt.xlabel('Class')
plt.ylabel('Probability')
plt.xticks(range(len(inp)))
plt.title('Softmax Activation Function')
plt.show()
```

Output

We usually choose the activation function based on the problem we want to solve and on where in the network it is used. In the output layer, sigmoid is typical for binary classification, softmax for multi-class classification, and a linear (identity) output for regression. In the hidden layers, ReLU and its variants are a common default, with tanh as an alternative.
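These pairings can be sketched as a small two-layer network whose hidden layer always uses ReLU while the output activation depends on the task. The dictionary `OUTPUT_ACTIVATIONS`, the `forward` function, the layer sizes and the random weights are all hypothetical, and the pairings are common conventions rather than fixed rules.

```python
import numpy as np

def relu(z):
    return np.maximum(0, z)

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def softmax(z):
    exps = np.exp(z - np.max(z))
    return exps / np.sum(exps)

# Common (not mandatory) pairings of task and output-layer activation.
OUTPUT_ACTIVATIONS = {
    "binary_classification": sigmoid,       # one probability in (0, 1)
    "multiclass_classification": softmax,   # probabilities summing to 1
    "regression": lambda z: z,              # identity: unbounded real values
}
N_OUTPUTS = {"binary_classification": 1,
             "multiclass_classification": 3,
             "regression": 1}

def forward(x, W1, b1, W2, b2, task):
    """Hypothetical network: ReLU hidden layer, task-specific output layer."""
    hidden = relu(W1 @ x + b1)
    return OUTPUT_ACTIVATIONS[task](W2 @ hidden + b2)

rng = np.random.default_rng(0)
x = rng.normal(size=4)                           # one sample with 4 features
W1, b1 = rng.normal(size=(8, 4)), np.zeros(8)    # 8 hidden neurons

results = {}
for task, k in N_OUTPUTS.items():
    W2, b2 = rng.normal(size=(k, 8)), np.zeros(k)
    results[task] = forward(x, W1, b1, W2, b2, task)
    print(task, results[task])
```

Only the last step of `forward` changes between tasks, which mirrors how the output activation is chosen to match the kind of output the problem needs.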

Conclusion

In this article we learned what an ANN is and looked at the most commonly used activation functions: sigmoid, ReLU, tanh, leaky ReLU and softmax, along with where each is typically used. Every activation function has its own output range, gradient behaviour and typical use, so understanding these properties helps us choose suitable functions when building artificial neural network models.