Signals Analysis

Analogy Between Vectors and Signals

There is a perfect analogy between vectors and signals.

Vector

A vector has both magnitude and direction. A vector is denoted in boldface type and its magnitude in lightface type.

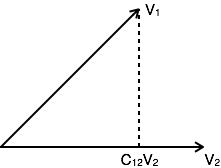

Example: V is a vector with magnitude V. Consider two vectors V1 and V2 as shown in the diagram. Let the component of V1 along V2 be given by C12V2. The component of V1 along V2 can be obtained by dropping a perpendicular from the end of V1 onto V2, as shown in the diagram.

The vector V1 can be expressed in terms of the vector V2 as

V1= C12V2 + Ve

Where Ve is the error vector.

But this is not the only way of expressing vector V1 in terms of V2. The alternate possibilities are:

V1 = C1V2 + Ve1

V1 = C2V2 + Ve2

The error vector is smallest when the component is obtained by the perpendicular projection, that is, for the component C12V2. If C12 = 0, then the two vectors are said to be orthogonal.

Dot Product of Two Vectors

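$$\mathbf{V_1} \cdot \mathbf{V_2} = V_1 V_2 \cos\theta$$

where θ is the angle between V1 and V2. The dot product is commutative:

$$\mathbf{V_1} \cdot \mathbf{V_2} = \mathbf{V_2} \cdot \mathbf{V_1}$$

The component of V1 along V2 = $V_1 \cos\theta = {\mathbf{V_1} \cdot \mathbf{V_2} \over V_2}$

From the diagram, the component of V1 along V2 = $C_{12} V_2$

$${\mathbf{V_1} \cdot \mathbf{V_2} \over V_2} = C_{12}\,V_2$$

$$ \Rightarrow C_{12} = {\mathbf{V_1} \cdot \mathbf{V_2} \over V_2^2}$$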
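The projection of one vector onto another can be checked numerically. The following is a minimal sketch in plain Python; the helper names `dot` and `component` and the two example vectors are illustrative assumptions, not part of the original text. It computes C12 as the dot product divided by the squared magnitude of V2 and confirms that the resulting error vector is perpendicular to V2.

```python
def dot(a, b):
    """Dot product of two equal-length vectors given as sequences."""
    return sum(x * y for x, y in zip(a, b))

def component(v1, v2):
    """Return C12, the coefficient of v1 along v2: (v1 . v2) / |v2|^2."""
    return dot(v1, v2) / dot(v2, v2)

# Hypothetical example vectors (assumed for illustration only).
v1 = (3.0, 4.0)
v2 = (2.0, 0.0)

c12 = component(v1, v2)
# Error vector Ve = V1 - C12 * V2
ve = tuple(a - c12 * b for a, b in zip(v1, v2))

print(c12)          # 1.5
print(ve)           # (0.0, 4.0)
print(dot(ve, v2))  # 0.0, so the error vector is orthogonal to V2
```

Any other choice of coefficient leaves an error vector that is not perpendicular to V2 and is therefore longer.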

Signal

The concept of orthogonality can be applied to signals. Let us consider two signals f1(t) and f2(t). Similar to vectors, you can approximate f1(t) in terms of f2(t) as

f1(t) = C12 f2(t) + fe(t) for (t1 < t < t2)

$ \Rightarrow $ fe(t) = f1(t) - C12 f2(t)

One possible measure of the error is its average over the interval t1 to t2.

$${1 \over {t_2 - t_1}} \int_{t_1}^{t_2} [f_e (t)] dt$$

$$= {1 \over {t_2 - t_1}} \int_{t_1}^{t_2} [f_1(t) - C_{12}f_2(t)]dt $$

However, this average is a poor measure, because large positive and negative errors can cancel each other. This is corrected by averaging the square of the error function.

$\varepsilon = {1 \over {t_2 - t_1}} \int_{t_1}^{t_2} [f_e (t)]^2 dt$

$\Rightarrow \varepsilon = {1 \over {t_2 - t_1}} \int_{t_1}^{t_2} [f_1 (t) - C_{12}f_2(t)]^2 dt $

Where ε is the mean square value of the error signal. To find the value of C12 which minimizes the error, set ${d\varepsilon \over dC_{12} } = 0 $

$\Rightarrow {d \over dC_{12} } [ {1 \over t_2 - t_1 } \int_{t_1}^{t_2} [f_1 (t) - C_{12} f_2 (t)]^2 dt]= 0 $

$\Rightarrow {1 \over {t_2 - t_1}} \int_{t_1}^{t_2} [ {d \over dC_{12} } f_{1}^2(t) - {d \over dC_{12} } 2f_1(t)C_{12}f_2(t)+ {d \over dC_{12} } f_{2}^{2} (t) C_{12}^2 ] dt =0 $

The derivatives of the terms which do not contain C12 are zero.

$\Rightarrow \int_{t_1}^{t_2} - 2f_1(t) f_2(t) dt + 2C_{12}\int_{t_1}^{t_2}[f_{2}^{2} (t)]dt = 0 $

Solving for C12 gives $C_{12} = {{\int_{t_1}^{t_2}f_1(t)f_2(t)dt } \over {\int_{t_1}^{t_2} f_{2}^{2} (t)dt }} $. If this component is zero, then the two signals are said to be orthogonal.

Put C12 = 0 to get condition for orthogonality.

0 = $ {{\int_{t_1}^{t_2}f_1(t)f_2(t)dt } \over {\int_{t_1}^{t_2} f_{2}^{2} (t)dt }} $

$$ \int_{t_1}^{t_2} f_1 (t)f_2(t) dt = 0 $$
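The formula for C12 and the orthogonality condition can be tried out numerically. The sketch below is an illustration under stated assumptions: the test signal `square` (a square wave on 0 to 2π), the midpoint-rule helper `integrate`, and the function name `component` are all hypothetical choices, not taken from the text. It approximates a square wave by sin t and also checks that sin t and cos t are orthogonal over one period.

```python
import math

def integrate(g, t1, t2, n=20000):
    """Midpoint-rule approximation of the integral of g over [t1, t2]."""
    h = (t2 - t1) / n
    return h * sum(g(t1 + (i + 0.5) * h) for i in range(n))

def component(f1, f2, t1, t2):
    """C12 = integral(f1 * f2) / integral(f2^2) over [t1, t2]."""
    num = integrate(lambda t: f1(t) * f2(t), t1, t2)
    den = integrate(lambda t: f2(t) ** 2, t1, t2)
    return num / den

def square(t):
    """Assumed test signal: +1 on (0, pi), -1 on (pi, 2*pi)."""
    return 1.0 if t < math.pi else -1.0

# Best approximation of the square wave by a single sine: C12 = 4/pi.
c12 = component(square, math.sin, 0.0, 2 * math.pi)

# sin t and cos t are orthogonal over one period, so C12 is zero.
c_orth = component(math.sin, math.cos, 0.0, 2 * math.pi)

print(c12)     # close to 4/pi, about 1.2732
print(c_orth)  # close to 0
```

For this assumed square wave the integrals can also be done by hand: the numerator is 4 and the denominator is π, which agrees with the numerical result.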

Orthogonal Vector Space

A complete set of orthogonal vectors is referred to as an orthogonal vector space. Consider a three-dimensional vector space as shown below:

Consider a vector A at a point (X1, Y1, Z1). Consider three unit vectors (VX, VY, VZ) in the directions of the X, Y, and Z axes respectively. Since these unit vectors are mutually orthogonal, they satisfy

$$V_X. V_X= V_Y. V_Y= V_Z. V_Z = 1 $$

$$V_X. V_Y= V_Y. V_Z= V_Z. V_X = 0 $$

You can write above conditions as

$$V_a . V_b = \left\{ \begin{array}{l l} 1 & \quad a = b \\ 0 & \quad a \neq b \end{array} \right. $$

The vector A can be represented in terms of its components and unit vectors as

$A = X_1 V_X + Y_1 V_Y + Z_1 V_Z \quad (1) $

Any vector in this three-dimensional space can be represented in terms of these three unit vectors only.

If you consider n-dimensional space, then any vector A in that space can be represented as

$ A = X_1 V_X + Y_1 V_Y + Z_1 V_Z+...+ N_1V_N \quad (2) $

Since the magnitude of each unit vector is unity, for any vector A:

The component of A along the X axis = A.VX

The component of A along the Y axis = A.VY

The component of A along the Z axis = A.VZ

Similarly, for n-dimensional space, the component of A along some G axis

$= A \cdot V_G \quad (3)$

Substitute equation (2) in equation (3).

$\Rightarrow A \cdot V_G = (X_1 V_X + Y_1 V_Y + Z_1 V_Z +...+G_1 V_G+...+ N_1V_N) \cdot V_G$

$= X_1 V_X \cdot V_G + Y_1 V_Y \cdot V_G + Z_1 V_Z \cdot V_G +...+ G_1V_G \cdot V_G+...+ N_1V_N \cdot V_G$

$= G_1 \,\,\,\,\, \text{since } V_G \cdot V_G=1 \text{ and all other dot products are zero}$

If the basis vectors are not of unit length, i.e. $V_G \cdot V_G = K \neq 1$, then

$A \cdot V_G = G_1V_G \cdot V_G= G_1K$

$\Rightarrow G_1 = {(A \cdot V_G) \over K}$
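As a small worked illustration of the last result, the sketch below expands a vector in an orthogonal basis whose vectors are not of unit length, dividing each dot product by K. The basis, the vector `a`, and the helper names are hypothetical values chosen for the example.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def components(a, basis):
    """Component of a along each basis vector v: (a . v) / K, with K = v . v."""
    return [dot(a, v) / dot(v, v) for v in basis]

# Assumed orthogonal (but not unit-length) basis of 3-D space.
basis = [(2.0, 0.0, 0.0), (0.0, 1.0, 1.0), (0.0, 1.0, -1.0)]
a = (4.0, 3.0, 1.0)

coeffs = components(a, basis)

# Rebuild the vector from its components to confirm the expansion.
rebuilt = [sum(c * v[i] for c, v in zip(coeffs, basis)) for i in range(3)]

print(coeffs)   # [2.0, 2.0, 1.0]
print(rebuilt)  # [4.0, 3.0, 1.0]
```

Because the basis vectors are mutually orthogonal, each component can be found independently of the others.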

Orthogonal Signal Space

Let us consider a set of n mutually orthogonal functions x1(t), x2(t)... xn(t) over the interval t1 to t2. As these functions are orthogonal to each other, any two signals xj(t), xk(t) have to satisfy the orthogonality condition. i.e.

$$\int_{t_1}^{t_2} x_j(t)x_k(t)dt = 0 \,\,\, \text{where}\, j \neq k$$

$$\text{Let } \int_{t_1}^{t_2}x_{k}^{2}(t)dt = K_k $$

A function f(t) can be approximated in this orthogonal signal space by adding its components along the mutually orthogonal signals, i.e.

$\,\,\,f(t) = C_1x_1(t) + C_2x_2(t) + ... + C_nx_n(t) + f_e(t) $

$\quad\quad=\Sigma_{r=1}^{n} C_rx_r (t) + f_e(t) $

$\Rightarrow f_e(t) = f(t) - \Sigma_{r=1}^n C_rx_r (t) $

Mean square error $ \varepsilon = {1 \over t_2 - t_1 } \int_{t_1}^{t_2} [ f_e(t)]^2 dt$

$$ = {1 \over t_2 - t_1 } \int_{t_1}^{t_2} [ f(t) - \sum_{r=1}^{n} C_rx_r(t) ]^2 dt $$

The components which minimize the mean square error can be found from

$$ {d\varepsilon \over dC_1} = {d\varepsilon \over dC_2} = ... = {d\varepsilon \over dC_n} = 0 $$

Let us consider ${d\varepsilon \over dC_k} = 0 $ for one coefficient $C_k$.

$${d \over dC_k}[ {1 \over t_2 - t_1} \int_{t_1}^{t_2} [ f(t) - \Sigma_{r=1}^n C_rx_r(t)]^2 dt] = 0 $$

The derivatives of all terms that do not contain Ck are zero, and the cross terms containing $x_j(t)x_k(t)$ with $j \neq k$ integrate to zero by orthogonality. Only the r = k terms of the summation remain:

$$\int_{t_1}^{t_2} - 2 f(t)x_k(t)dt + 2C_k \int_{t_1}^{t_2} [x_k^2 (t)] dt=0 $$

$$\Rightarrow C_k = {{\int_{t_1}^{t_2}f(t)x_k(t)dt} \over {\int_{t_1}^{t_2} x_k^2 (t)dt}} $$

$$\Rightarrow \int_{t_1}^{t_2} f(t)x_k(t)dt = C_kK_k $$
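The coefficient formula can be applied to a whole orthogonal set at once. The sketch below is an illustration only: it assumes the set x_k(t) = sin(kt) for k = 1 to 5 on the interval 0 to 2π and a hypothetical square-wave test signal, and it uses a simple midpoint rule for the integrals.

```python
import math

def integrate(g, t1, t2, n=20000):
    """Midpoint-rule approximation of the integral of g over [t1, t2]."""
    h = (t2 - t1) / n
    return h * sum(g(t1 + (i + 0.5) * h) for i in range(n))

def coefficients(f, basis, t1, t2):
    """C_k = integral(f * x_k) / K_k, with K_k = integral(x_k^2)."""
    out = []
    for x in basis:
        num = integrate(lambda t: f(t) * x(t), t1, t2)
        k_k = integrate(lambda t: x(t) ** 2, t1, t2)
        out.append(num / k_k)
    return out

def square(t):
    """Assumed test signal: +1 on (0, pi), -1 on (pi, 2*pi)."""
    return 1.0 if t < math.pi else -1.0

# Assumed orthogonal set: x_k(t) = sin(k t), k = 1..5, on (0, 2*pi).
basis = [lambda t, k=k: math.sin(k * t) for k in range(1, 6)]

c = coefficients(square, basis, 0.0, 2 * math.pi)
for k, c_k in enumerate(c, start=1):
    print(k, round(c_k, 4))
```

For this particular signal the odd-numbered coefficients come out close to 4/(kπ) and the even-numbered ones close to zero, which matches the hand calculation.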

Mean Square Error

The average of the square of the error function fe(t) is called the mean square error. It is denoted by ε (epsilon).

$\varepsilon = {1 \over t_2 - t_1 } \int_{t_1}^{t_2} [f_e (t)]^2dt$

$\,\,\,\,= {1 \over t_2 - t_1 } \int_{t_1}^{t_2} [f (t) - \Sigma_{r=1}^n C_rx_r(t)]^2 dt $

$\,\,\,\,= {1 \over t_2 - t_1 } [ \int_{t_1}^{t_2} [f^2 (t) ]dt + \Sigma_{r=1}^{n} C_r^2 \int_{t_1}^{t_2} x_r^2 (t) dt - 2 \Sigma_{r=1}^{n} C_r \int_{t_1}^{t_2} x_r (t)f(t)dt ]$

You know that $C_{r}^{2} \int_{t_1}^{t_2} x_r^2 (t)dt = C_r \int_{t_1}^{t_2} x_r (t)f(t)dt = C_r^2 K_r $

$\varepsilon = {1 \over t_2 - t_1 } [ \int_{t_1}^{t_2} [f^2 (t)] dt + \Sigma_{r=1}^{n} C_r^2 K_r - 2 \Sigma_{r=1}^{n} C_r^2 K_r] $

$\,\,\,\,= {1 \over t_2 - t_1 } [\int_{t_1}^{t_2} [f^2 (t)] dt - \Sigma_{r=1}^{n} C_r^2 K_r ] $

$\, \therefore \varepsilon = {1 \over t_2 - t_1 } [\int_{t_1}^{t_2} [f^2 (t)] dt - (C_1^2 K_1 + C_2^2 K_2 + ... + C_n^2 K_n)] $

The above equation is used to evaluate the mean square error.
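To see the mean square error formula at work, the sketch below evaluates it two ways for an n-term approximation: once from the closed form (signal energy minus the sum of C_r² K_r) and once by directly averaging the squared error. The square-wave test signal, the sine basis, the interval 0 to 2π, and all function names are assumptions made for the example.

```python
import math

T1, T2 = 0.0, 2 * math.pi

def integrate(g, t1=T1, t2=T2, n=20000):
    """Midpoint-rule approximation of the integral of g over [t1, t2]."""
    h = (t2 - t1) / n
    return h * sum(g(t1 + (i + 0.5) * h) for i in range(n))

def square(t):
    """Assumed test signal: +1 on (0, pi), -1 on (pi, 2*pi)."""
    return 1.0 if t < math.pi else -1.0

def terms(n):
    """Return (C_r, K_r) for the assumed basis x_r(t) = sin(r t), r = 1..n."""
    out = []
    for r in range(1, n + 1):
        k_r = integrate(lambda t: math.sin(r * t) ** 2)
        c_r = integrate(lambda t: square(t) * math.sin(r * t)) / k_r
        out.append((c_r, k_r))
    return out

def mse_formula(n):
    """epsilon = [integral(f^2) - sum(C_r^2 K_r)] / (t2 - t1)."""
    energy = integrate(lambda t: square(t) ** 2)
    return (energy - sum(c * c * k for c, k in terms(n))) / (T2 - T1)

def mse_direct(n):
    """epsilon computed directly as the average of f_e(t)^2."""
    cs = [c for c, _ in terms(n)]
    def err_sq(t):
        approx = sum(c * math.sin((r + 1) * t) for r, c in enumerate(cs))
        return (square(t) - approx) ** 2
    return integrate(err_sq) / (T2 - T1)

for n in (1, 3, 5):
    print(n, round(mse_formula(n), 5), round(mse_direct(n), 5))
```

The two columns should agree, and the error should fall as more orthogonal components are included. For n = 1 the hand calculation gives 1 - 8/π², about 0.1894.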

Closed and Complete Set of Orthogonal Functions

Let us consider a set of n mutually orthogonal functions x1(t), x2(t)...xn(t) over the interval t1 to t2. This set is called closed and complete when there exists no nonzero function f(t) satisfying the condition $\int_{t_1}^{t_2} f(t)x_k(t)dt = 0 $ for every k.

If a nonzero function f(t) does satisfy the equation $\int_{t_1}^{t_2} f(t)x_k(t)dt=0 \,\, \text{for}\, k = 1,2,..$ then f(t) is orthogonal to each and every function of the orthogonal set. The set is incomplete without f(t). It becomes a closed and complete set when f(t) is included.

f(t) can be approximated with this orthogonal set by adding the components along mutually orthogonal signals i.e.

$$f(t) = C_1 x_1(t) + C_2 x_2(t) + ... + C_n x_n(t) + f_e(t) $$

If the infinite series $C_1 x_1(t) + C_2 x_2(t) + ... + C_n x_n(t) + ...$ converges to f(t), then the mean square error is zero.

Orthogonality in Complex Functions

If f1(t) and f2(t) are two complex functions, then f1(t) can be approximated in terms of f2(t) as

$f_1(t) \approx C_{12}f_2(t)$

where the mean square error is minimized by $C_{12} = {{\int_{t_1}^{t_2} f_1(t)f_2^*(t)dt} \over { \int_{t_1}^{t_2} |f_2(t)|^2 dt}} $

and $f_2^* (t)$ is the complex conjugate of f2(t).

If f1(t) and f2(t) are orthogonal, then C12 = 0

$$ {\int_{t_1}^{t_2} f_1 (t) f_2^*(t) dt \over \int_{t_1}^{t_2} |f_2 (t) |^2 dt} = 0 $$

$$\Rightarrow \int_{t_1}^{t_2} f_1 (t) f_2^* (t) dt = 0$$

The above equation represents orthogonality condition in complex functions.
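The complex condition can be illustrated with complex exponentials, which are a standard example of an orthogonal set over one period. The sketch below is an assumption-laden illustration: the functions `expo`, `inner`, and `component`, the interval 0 to 2π, and the scale factor 5 are all hypothetical choices for the example.

```python
import cmath
import math

def integrate(g, t1, t2, n=20000):
    """Midpoint-rule approximation of the (complex) integral of g over [t1, t2]."""
    h = (t2 - t1) / n
    return h * sum(g(t1 + (i + 0.5) * h) for i in range(n))

def inner(f1, f2, t1, t2):
    """Integral of f1(t) times the complex conjugate of f2(t)."""
    return integrate(lambda t: f1(t) * f2(t).conjugate(), t1, t2)

def component(f1, f2, t1, t2):
    """C12 = integral(f1 * conj(f2)) / integral(|f2|^2)."""
    return inner(f1, f2, t1, t2) / inner(f2, f2, t1, t2).real

def expo(m):
    """Assumed test function e^{j m t}."""
    return lambda t: cmath.exp(1j * m * t)

t1, t2 = 0.0, 2 * math.pi

ip = inner(expo(2), expo(3), t1, t2)      # different frequencies
norm = inner(expo(3), expo(3), t1, t2)    # same frequency
c = component(lambda t: 5 * expo(3)(t), expo(3), t1, t2)

print(abs(ip))    # close to 0, so the two functions are orthogonal
print(norm.real)  # close to 2*pi
print(c)          # close to 5
```

Omitting the conjugate would give a nonzero "norm" of zero for e^{j3t} with itself over this interval, which is why the conjugate is needed in the complex case.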