Predicting Customer Churn in Python

Every business depends on customer loyalty. Repeat business from customers is one of the cornerstones of profitability, so it is important to know why customers leave. Customers leaving a business is known as customer churn. By looking at past trends we can judge which factors influence churn and predict whether a particular customer is likely to leave. In this article we use a machine learning algorithm to study past churn data and then identify which customers are likely to churn.

Data Preparation

As an example, we consider the customer churn data of a telecom company. The source data is available on Kaggle, and the URL for downloading it is given in the comments of the program below. We use the pandas library to load the CSV file into the Python program and look at some sample rows.

Example 1

import pandas as pd

#Loading the Telco-Customer-Churn.csv dataset

#https://www.kaggle.com/blastchar/telco-customer-churn

datainput = pd.read_csv(r'E:\Telecom_customers.csv')

print("Given input data :\n",datainput)

Output

Running the above code gives us the following result −

Given input data :

customerID gender SeniorCitizen ... MonthlyCharges TotalCharges Churn

0 7590-VHVEG Female 0 ... 29.85 29.85 No

1 5575-GNVDE Male 0 ... 56.95 1889.5 No

2 3668-QPYBK Male 0 ... 53.85 108.15 Yes

3 7795-CFOCW Male 0 ... 42.30 1840.75 No

4 9237-HQITU Female 0 ... 70.70 151.65 Yes

... ... ... ... ... ... ... ...

7038 6840-RESVB Male 0 ... 84.80 1990.5 No

7039 2234-XADUH Female 0 ... 103.20 7362.9 No

7040 4801-JZAZL Female 0 ... 29.60 346.45 No

7041 8361-LTMKD Male 1 ... 74.40 306.6 Yes

7042 3186-AJIEK Male 0 ... 105.65 6844.5 No

[7043 rows x 21 columns]

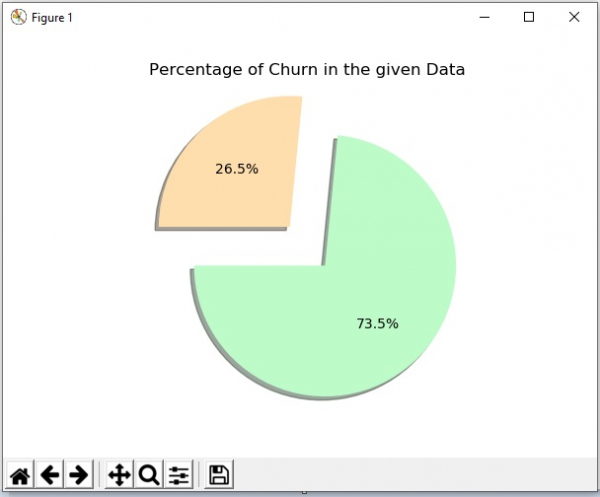
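Before modelling, it can help to check the column types and how balanced the Churn values are. The sketch below is a minimal illustration that runs without the Kaggle file: `sample` is a hypothetical four-row DataFrame with made-up values, not rows from the real data set. It uses `DataFrame.dtypes`, `Series.value_counts(normalize=True)` and `pd.to_numeric(..., errors="coerce")`, which turns text that is not a number (here a blank string) into NaN.

```python
import pandas as pd

# Hypothetical miniature stand-in for the churn CSV (made-up rows),
# so the sketch runs without downloading the Kaggle file.
sample = pd.DataFrame({
    "customerID": ["0001-A", "0002-B", "0003-C", "0004-D"],
    "gender": ["Female", "Male", "Male", "Female"],
    "tenure": [1, 34, 2, 45],
    "MonthlyCharges": [29.85, 56.95, 53.85, 42.30],
    "TotalCharges": ["29.85", "1889.5", " ", "1840.75"],
    "Churn": ["No", "No", "Yes", "No"],
})

# Column types: TotalCharges is stored as text here because of the blank entry.
print(sample.dtypes)

# Share of each Churn value as fractions that sum to 1.
churn_share = sample["Churn"].value_counts(normalize=True)
print(churn_share)

# Convert TotalCharges to numbers; strings that cannot be parsed become NaN.
sample["TotalCharges"] = pd.to_numeric(sample["TotalCharges"], errors="coerce")
print(sample["TotalCharges"].isna().sum())
```

On the real file, the same three checks show whether any column needs cleaning before it can be used as a numeric feature.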

Study Existing Pattern

Next we study the data set to find the existing patterns of churn. We also drop columns from the data frame that do not influence churn. For example, the customer ID has no bearing on whether a customer leaves or not, so we drop such columns using the drop() or pop() method; the program below also drops the TotalCharges column. Then we plot a pie chart showing the percentage of churn in the given data set.

Example 2

import pandas as pd

import matplotlib.pyplot as plt

from matplotlib import rcParams

#Loading the Telco-Customer-Churn.csv dataset

#https://www.kaggle.com/blastchar/telco-customer-churn

datainput = pd.read_csv(r'E:\Telecom_customers.csv')

print("Given input data :\n",datainput)

#Dropping columns

datainput.drop(['customerID'], axis=1, inplace=True)

datainput.pop('TotalCharges')

datainput['OnlineBackup'].unique()

data = datainput['Churn'].value_counts(sort = True)

chroma = ["#BDFCC9","#FFDEAD"]

rcParams['figure.figsize'] = 9,9

explode = [0.2,0.2]

plt.pie(data, labels=data.index, explode=explode, colors=chroma, autopct='%1.1f%%', shadow=True, startangle=180)

plt.title('Percentage of Churn in the given Data')

plt.show()

Output

Running the above code displays a pie chart showing the percentage of customers with the Churn values No and Yes in the data set.
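The two column-removal methods behave differently: drop() with inplace=True modifies the data frame and returns None, while pop() removes a single column and returns it as a Series. A minimal sketch on a hypothetical five-row DataFrame (made-up values) shows both, and also prints the percentages that the pie chart draws, computed with value_counts(normalize=True).

```python
import pandas as pd

# Hypothetical miniature data set with made-up values.
df = pd.DataFrame({
    "customerID": ["0001-A", "0002-B", "0003-C", "0004-D", "0005-E"],
    "TotalCharges": [29.85, 1889.5, 108.15, 1840.75, 151.65],
    "Churn": ["No", "No", "Yes", "No", "Yes"],
})

# drop() with inplace=True removes the listed columns from df itself.
df.drop(["customerID"], axis=1, inplace=True)

# pop() removes one column from df and hands it back as a Series.
total_charges = df.pop("TotalCharges")

# The numbers behind the pie chart: percentage of each Churn value.
churn_pct = df["Churn"].value_counts(normalize=True) * 100
print(churn_pct.round(1))
```

Printing the percentages alongside the chart makes the class balance easy to quote exactly.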

Data Preprocessing

To make the data ready for ML algorithms, we label-encode the categorical fields, converting their text values to numeric codes. For example, the values in the gender column change from Female and Male to 0 and 1. This lets the algorithms use these fields in the calculations that evaluate their impact on the churn value. We use the LabelEncoder class from sklearn.preprocessing, which assigns the codes 0, 1, 2 and so on to the distinct values of a column in sorted order.

Example 3

import pandas as pd

from sklearn import preprocessing

#Loading the Telco-Customer-Churn.csv dataset

datainput = pd.read_csv(r'E:\Telecom_customers.csv')

label_encoder = preprocessing.LabelEncoder()

datainput['gender'] = label_encoder.fit_transform(datainput['gender'])

datainput['Partner'] = label_encoder.fit_transform(datainput['Partner'])

datainput['Dependents'] = label_encoder.fit_transform(datainput['Dependents'])

datainput['PhoneService'] = label_encoder.fit_transform(datainput['PhoneService'])

datainput['MultipleLines'] = label_encoder.fit_transform(datainput['MultipleLines'])

datainput['InternetService'] = label_encoder.fit_transform(datainput['InternetService'])

datainput['OnlineSecurity'] = label_encoder.fit_transform(datainput['OnlineSecurity'])

datainput['OnlineBackup'] = label_encoder.fit_transform(datainput['OnlineBackup'])

datainput['DeviceProtection'] = label_encoder.fit_transform(datainput['DeviceProtection'])

datainput['TechSupport'] = label_encoder.fit_transform(datainput['TechSupport'])

datainput['StreamingTV'] = label_encoder.fit_transform(datainput['StreamingTV'])

datainput['StreamingMovies'] = label_encoder.fit_transform(datainput['StreamingMovies'])

datainput['Contract'] = label_encoder.fit_transform(datainput['Contract'])

datainput['PaperlessBilling'] = label_encoder.fit_transform(datainput['PaperlessBilling'])

datainput['PaymentMethod'] = label_encoder.fit_transform(datainput['PaymentMethod'])

datainput['Churn'] = label_encoder.fit_transform(datainput['Churn'])

print("input data after label encoder :\n",datainput)

#separating features(X) and label(y)

datainput["Churn"] = datainput["Churn"].astype(int)

y = datainput["Churn"].values

X = datainput.drop(labels = ["Churn"],axis = 1)

print("\nseparated X and y :")

print("y -",y)

print("X -",X)

Output

Running the above code gives us the following result −

input data after label encoder :

customerID gender SeniorCitizen ... MonthlyCharges TotalCharges Churn

0 7590-VHVEG 0 0 ... 29.85 29.85 0

1 5575-GNVDE 1 0 ... 56.95 1889.5 0

2 3668-QPYBK 1 0 ... 53.85 108.15 1

3 7795-CFOCW 1 0 ... 42.30 1840.75 0

4 9237-HQITU 0 0 ... 70.70 151.65 1

... ... ... ... ... ... ... ...

7038 6840-RESVB 1 0 ... 84.80 1990.5 0

7039 2234-XADUH 0 0 ... 103.20 7362.9 0

7040 4801-JZAZL 0 0 ... 29.60 346.45 0

7041 8361-LTMKD 1 1 ... 74.40 306.6 1

7042 3186-AJIEK 1 0 ... 105.65 6844.5 0

[7043 rows x 21 columns]

separated X and y :

y - [0 0 1 ... 0 1 0]

X - customerID gender ... MonthlyCharges TotalCharges

0 7590-VHVEG 0 ... 29.85 29.85

1 5575-GNVDE 1 ... 56.95 1889.5

2 3668-QPYBK 1 ... 53.85 108.15

3 7795-CFOCW 1 ... 42.30 1840.75

4 9237-HQITU 0 ... 70.70 151.65

... ... ... ... ... ...

7038 6840-RESVB 1 ... 84.80 1990.5

7039 2234-XADUH 0 ... 103.20 7362.9

7040 4801-JZAZL 0 ... 29.60 346.45

7041 8361-LTMKD 1 ... 74.40 306.6

7042 3186-AJIEK 1 ... 105.65 6844.5

[7043 rows x 20 columns]
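The repeated fit_transform lines in Example 3 can be written as a loop over a list of column names. A minimal sketch, using a hypothetical four-row DataFrame and a hand-picked list `categorical_columns` (both assumptions for illustration). scikit-learn documents LabelEncoder as intended for target labels, and for a field with more than two values, such as Contract, the codes 0, 1, 2 may be read by a linear model as an order. The block therefore also shows one-hot encoding with `pd.get_dummies`, a different technique from the one used in this article.

```python
import pandas as pd
from sklearn import preprocessing

# Hypothetical miniature data set with made-up values; column names follow the Telco file.
raw = pd.DataFrame({
    "gender": ["Female", "Male", "Male", "Female"],
    "Contract": ["Month-to-month", "One year", "Month-to-month", "Two year"],
    "tenure": [1, 34, 2, 45],
    "Churn": ["No", "No", "Yes", "No"],
})

# Columns holding text values that need numeric codes (assumed list).
categorical_columns = ["gender", "Contract", "Churn"]

encoded = raw.copy()
label_encoder = preprocessing.LabelEncoder()
for column in categorical_columns:
    # fit_transform() learns the sorted distinct values and replaces them with 0, 1, 2, ...
    encoded[column] = label_encoder.fit_transform(encoded[column])
print(encoded)

# Alternative technique (not the article's): one-hot encoding gives each Contract
# value its own 0/1 column instead of a single column with codes 0, 1, 2.
contract_onehot = pd.get_dummies(raw["Contract"], prefix="Contract", dtype=int)
print(contract_onehot)
```

The loop produces the same codes as writing one line per column, and adding a new categorical field only means extending the list.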

Training and Testing the Data

Now we split the data set into two parts: one for training and the other for testing. The test_size parameter sets the fraction of the data set that is held back for testing only; test_size=0.2 keeps 20% of the rows for testing. Evaluating the model on rows it did not see during training gives us confidence in the model we are creating. Then we apply the Logistic Regression algorithm and obtain the predicted values for the test set.

Example 4

import pandas as pd

import warnings

warnings.filterwarnings("ignore")

from sklearn.linear_model import LogisticRegression

#Loading the Telco-Customer-Churn.csv dataset with pandas

datainput = pd.read_csv(r'E:\Telecom_customers.csv')

datainput.drop(['customerID'], axis=1, inplace=True)

datainput.pop('TotalCharges')

datainput['OnlineBackup'].unique()

#LabelEncoder()

from sklearn import preprocessing

label_encoder = preprocessing.LabelEncoder()

datainput['gender'] = label_encoder.fit_transform(datainput['gender'])

datainput['Partner'] = label_encoder.fit_transform(datainput['Partner'])

datainput['Dependents'] = label_encoder.fit_transform(datainput['Dependents'])

datainput['PhoneService'] = label_encoder.fit_transform(datainput['PhoneService'])

datainput['MultipleLines'] = label_encoder.fit_transform(datainput['MultipleLines'])

datainput['InternetService'] = label_encoder.fit_transform(datainput['InternetService'])

datainput['OnlineSecurity'] = label_encoder.fit_transform(datainput['OnlineSecurity'])

datainput['OnlineBackup'] = label_encoder.fit_transform(datainput['OnlineBackup'])

datainput['DeviceProtection'] = label_encoder.fit_transform(datainput['DeviceProtection'])

datainput['TechSupport'] = label_encoder.fit_transform(datainput['TechSupport'])

datainput['StreamingTV'] = label_encoder.fit_transform(datainput['StreamingTV'])

datainput['StreamingMovies'] = label_encoder.fit_transform(datainput['StreamingMovies'])

datainput['Contract'] = label_encoder.fit_transform(datainput['Contract'])

datainput['PaperlessBilling'] = label_encoder.fit_transform(datainput['PaperlessBilling'])

datainput['PaymentMethod'] = label_encoder.fit_transform(datainput['PaymentMethod'])

datainput['Churn'] = label_encoder.fit_transform(datainput['Churn'])

#print("input data after label encoder :\n",datainput)

#separating features(X) and label(y)

datainput["Churn"] = datainput["Churn"].astype(int)

Y = datainput["Churn"].values

X = datainput.drop(labels = ["Churn"],axis = 1)

#train_test_split method

from sklearn.model_selection import train_test_split

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.2)

#LogisticRegression

classifier=LogisticRegression()

classifier.fit(X_train,Y_train)

Y_pred=classifier.predict(X_test)

print("\npredicted values :\n",Y_pred)

Output

Running the above code gives us the following result −

predicted values :

[0 0 1 ... 0 1 0]
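The split in Example 4 is random, so the predicted values and the accuracy reported later change from run to run. A minimal sketch on hypothetical toy data (100 random rows, 25 of them labelled 1) shows two train_test_split options that the article does not use: random_state fixes the shuffle so results repeat, and stratify=y keeps the share of label 1 the same in both parts. It also shows predict_proba(), which returns each test row's estimated probability of class 1 rather than only a 0/1 label. The setting max_iter=1000 is an assumption that gives the solver more iterations than its default of 100.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(1)

# Hypothetical toy data: 100 rows, 3 numeric features, 25 rows labelled 1.
X = rng.normal(size=(100, 3))
y = np.array([1] * 25 + [0] * 75)
X[y == 1, 0] += 2.0  # shift feature 0 for label-1 rows so it carries signal

# 20% test split; random_state makes it repeatable, stratify=y keeps the 25% ratio.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

classifier = LogisticRegression(max_iter=1000)
classifier.fit(X_train, y_train)

# predict() gives hard 0/1 labels; predict_proba()[:, 1] gives P(label = 1) per row.
y_pred = classifier.predict(X_test)
churn_prob = classifier.predict_proba(X_test)[:, 1]
print(y_pred)
print(churn_prob.round(2))
```

Sorting customers by the class-1 probability is one way to rank who is most likely to churn instead of only flagging them.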

Finding Evaluation Parameters

Next we evaluate the model further by computing evaluation metrics. We use accuracy and the confusion matrix to judge how well the model performs. Accuracy is the percentage of test rows that are predicted correctly, and a higher value suggests a better fit. The confusion matrix counts true negatives, false positives, false negatives and true positives; in scikit-learn, rows are the actual classes and columns are the predicted classes, so for the labels 0 and 1 the matrix is [[TN, FP], [FN, TP]]. A higher count of true values compared with false values indicates a better model. Because most customers in this data set do not churn, accuracy alone can look good even when many churners are missed, which is why the confusion matrix is worth examining too.

Example 5

import pandas as pd

import warnings

warnings.filterwarnings("ignore")

from sklearn.linear_model import LogisticRegression

from sklearn import metrics

from sklearn.metrics import confusion_matrix

#Loading the Telco-Customer-Churn.csv dataset with pandas

datainput = pd.read_csv(r'E:\Telecom_customers.csv')

datainput.drop(['customerID'], axis=1, inplace=True)

datainput.pop('TotalCharges')

datainput['OnlineBackup'].unique()

#LabelEncoder()

from sklearn import preprocessing

label_encoder = preprocessing.LabelEncoder()

datainput['gender'] = label_encoder.fit_transform(datainput['gender'])

datainput['Partner'] = label_encoder.fit_transform(datainput['Partner'])

datainput['Dependents'] = label_encoder.fit_transform(datainput['Dependents'])

datainput['PhoneService'] = label_encoder.fit_transform(datainput['PhoneService'])

datainput['MultipleLines'] = label_encoder.fit_transform(datainput['MultipleLines'])

datainput['InternetService'] = label_encoder.fit_transform(datainput['InternetService'])

datainput['OnlineSecurity'] = label_encoder.fit_transform(datainput['OnlineSecurity'])

datainput['OnlineBackup'] = label_encoder.fit_transform(datainput['OnlineBackup'])

datainput['DeviceProtection'] = label_encoder.fit_transform(datainput['DeviceProtection'])

datainput['TechSupport'] = label_encoder.fit_transform(datainput['TechSupport'])

datainput['StreamingTV'] = label_encoder.fit_transform(datainput['StreamingTV'])

datainput['StreamingMovies'] = label_encoder.fit_transform(datainput['StreamingMovies'])

datainput['Contract'] = label_encoder.fit_transform(datainput['Contract'])

datainput['PaperlessBilling'] = label_encoder.fit_transform(datainput['PaperlessBilling'])

datainput['PaymentMethod'] = label_encoder.fit_transform(datainput['PaymentMethod'])

datainput['Churn'] = label_encoder.fit_transform(datainput['Churn'])

#print("input data after label encoder :\n",datainput)

#separating features(X) and label(y)

datainput["Churn"] = datainput["Churn"].astype(int)

Y = datainput["Churn"].values

X = datainput.drop(labels = ["Churn"],axis = 1)

#train_test_split method

from sklearn.model_selection import train_test_split

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.2)

#LogisticRegression

classifier=LogisticRegression()

classifier.fit(X_train,Y_train)

Y_pred=classifier.predict(X_test)

#Accuracy

LR = metrics.accuracy_score(Y_test, Y_pred) * 100

print("\nThe accuracy score using the LR is -> ",LR)

#confusion matrix

cm=confusion_matrix(Y_test,Y_pred)

print("\nconfusion matrix : \n",cm)

Output

Running the above code gives us the following result −

The accuracy score using the LR is -> 80.8374733853797

confusion matrix :

[[928 109]

[161 211]]
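The confusion matrix also yields metrics that focus on the churn class. As a worked example, the sketch below takes the matrix printed above (your numbers will differ, because the split is random) and computes accuracy, precision and recall by hand. sklearn.metrics provides precision_score and recall_score for the same quantities when you have the label arrays.

```python
import numpy as np

# Confusion matrix from the output above: rows = actual, columns = predicted.
cm = np.array([[928, 109],
               [161, 211]])

# For labels 0 (stayed) and 1 (churned), scikit-learn lays the cells out as TN, FP, FN, TP.
tn, fp, fn, tp = cm.ravel()

accuracy = (tp + tn) / cm.sum()
precision = tp / (tp + fp)  # of customers predicted to churn, the share who did
recall = tp / (tp + fn)     # of customers who churned, the share the model caught
print(f"accuracy={accuracy:.4f} precision={precision:.4f} recall={recall:.4f}")
```

The accuracy reproduces the reported 80.84%, but recall is only 211 / 372, about 0.567, so the model catches roughly 57% of the customers who actually churned.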

Weight of Variables

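Next we judge how each field, or variable, affects the churn value. This helps us target the variables that have the greatest impact on churn and address them to prevent customers from leaving. For this we read the coefficients (coef_) of the trained classifier, which are the weights of the variables. A positive weight means that a higher value of the variable increases the predicted probability of churn, and a negative weight means that it decreases it. Because the features are not scaled, the weights of variables measured in different units (such as tenure and MonthlyCharges) are not directly comparable.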

Example 6

import pandas as pd

import warnings

warnings.filterwarnings("ignore")

from sklearn.linear_model import LogisticRegression

#Loading the dataset with pandas

datainput = pd.read_csv(r'E:\Telecom_customers.csv')

datainput.drop(['customerID'], axis=1, inplace=True)

datainput.pop('TotalCharges')

datainput['OnlineBackup'].unique()

#LabelEncoder()

from sklearn import preprocessing

label_encoder = preprocessing.LabelEncoder()

datainput['gender'] = label_encoder.fit_transform(datainput['gender'])

datainput['Partner'] = label_encoder.fit_transform(datainput['Partner'])

datainput['Dependents'] = label_encoder.fit_transform(datainput['Dependents'])

datainput['PhoneService'] = label_encoder.fit_transform(datainput['PhoneService'])

datainput['MultipleLines'] = label_encoder.fit_transform(datainput['MultipleLines'])

datainput['InternetService'] = label_encoder.fit_transform(datainput['InternetService'])

datainput['OnlineSecurity'] = label_encoder.fit_transform(datainput['OnlineSecurity'])

datainput['OnlineBackup'] = label_encoder.fit_transform(datainput['OnlineBackup'])

datainput['DeviceProtection'] = label_encoder.fit_transform(datainput['DeviceProtection'])

datainput['TechSupport'] = label_encoder.fit_transform(datainput['TechSupport'])

datainput['StreamingTV'] = label_encoder.fit_transform(datainput['StreamingTV'])

datainput['StreamingMovies'] = label_encoder.fit_transform(datainput['StreamingMovies'])

datainput['Contract'] = label_encoder.fit_transform(datainput['Contract'])

datainput['PaperlessBilling'] = label_encoder.fit_transform(datainput['PaperlessBilling'])

datainput['PaymentMethod'] = label_encoder.fit_transform(datainput['PaymentMethod'])

datainput['Churn'] = label_encoder.fit_transform(datainput['Churn'])

#print("input data after label encoder :\n",datainput)

#separating features(X) and label(y)

datainput["Churn"] = datainput["Churn"].astype(int)

Y = datainput["Churn"].values

X = datainput.drop(labels = ["Churn"],axis = 1)

#train_test_split method

from sklearn.model_selection import train_test_split

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.2)

#LogisticRegression

classifier=LogisticRegression()

classifier.fit(X_train,Y_train)

Y_pred=classifier.predict(X_test)

#weights of all the variables

wt = pd.Series(classifier.coef_[0], index=X.columns.values)

print("\nweight of all the variables :")

print(wt.sort_values(ascending=False))

Output

Running the above code gives us the following result −

weight of all the variables :

PaperlessBilling 0.389379

SeniorCitizen 0.246504

InternetService 0.209283

Partner 0.067855

StreamingMovies 0.054309

MultipleLines 0.042330

PaymentMethod 0.039134

MonthlyCharges 0.027180

StreamingTV -0.008606

gender -0.029547

tenure -0.034668

DeviceProtection -0.052690

OnlineBackup -0.143625

Dependents -0.209667

OnlineSecurity -0.245952

TechSupport -0.254740

Contract -0.729557

PhoneService -0.950555

dtype: float64
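Because Example 6 fits the model on unscaled columns, the size of a weight partly reflects the unit of its variable. One common remedy, shown here as a sketch rather than the article's method, is to standardize the features with StandardScaler inside a pipeline before fitting, so each coefficient becomes the change in log-odds of class 1 per standard deviation of that variable. The data are hypothetical: `signal` drives the label and `big_scale` is unrelated noise measured in much larger units.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 500

# Hypothetical data: "signal" drives the label, "big_scale" is unrelated noise
# in much larger units.
X = pd.DataFrame({
    "signal": rng.normal(size=n),
    "big_scale": rng.normal(scale=100.0, size=n),
})
y = (X["signal"] + rng.normal(scale=0.5, size=n) > 0).astype(int)

# Standardize every column to mean 0 and standard deviation 1, then fit.
model = make_pipeline(StandardScaler(), LogisticRegression())
model.fit(X, y)

# Coefficients of the fitted logistic regression step, labelled by column name.
coefs = pd.Series(model.named_steps["logisticregression"].coef_[0], index=X.columns)
print(coefs.sort_values(ascending=False))

# exp(coefficient): factor by which the odds of class 1 change per 1-std increase.
print(np.exp(coefs))
```

With scaled inputs, ranking the weights compares variables on an equal footing; the signs keep the same meaning as in Example 6.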