How to solve the basic problems of scalable computers in computer architecture?

There are two basic problems to be solved in any scalable computer system −

- Tolerate and hide the latency of remote loads.

- Tolerate and hide idling caused by synchronization between parallel processors.

Remote loads are unavoidable in scalable parallel systems that use some form of distributed memory. Accessing local memory usually requires only one clock cycle, while access to a remote memory cell can take two orders of magnitude longer. If a processor issuing a remote load had to wait for the operation to complete without doing any useful work in the meantime, the remote load would significantly slow down the computation.
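To get a feel for the size of this effect, the following Python sketch computes the average cycles per instruction for a processor that stalls on every load. The 1-cycle local and 100-cycle remote costs follow the figures quoted above; the load rate of 25% and the remote share of 20% are illustrative assumptions, not measurements.

```python
def average_cycles_per_instruction(load_fraction, remote_fraction,
                                   local_cycles=1, remote_cycles=100):
    """Average cost of one instruction when the processor blocks on each load.

    load_fraction   -- share of instructions that are loads (assumed value)
    remote_fraction -- share of loads that go to remote memory (assumed value)
    """
    non_load = (1.0 - load_fraction) * 1  # non-load instructions: 1 cycle assumed
    local = load_fraction * (1.0 - remote_fraction) * local_cycles
    remote = load_fraction * remote_fraction * remote_cycles
    return non_load + local + remote


# Hypothetical workload: 25% of instructions are loads.
all_local = average_cycles_per_instruction(0.25, 0.0)
some_remote = average_cycles_per_instruction(0.25, 0.2)
slowdown = some_remote / all_local

print(f"all loads local : {all_local:.2f} cycles per instruction")
print(f"20% loads remote: {some_remote:.2f} cycles per instruction")
print(f"slowdown        : {slowdown:.2f}x")
```

Under these assumptions, even a modest share of remote loads dominates the execution time, which is why the latency has to be hidden rather than simply accepted.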

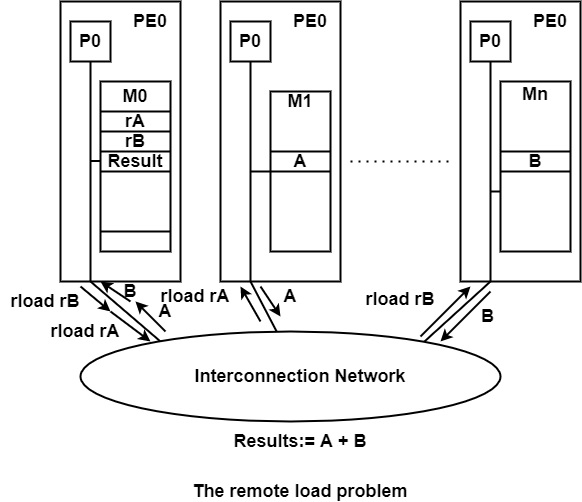

Because the rate of load instructions is high in most programs, the latency problem would eliminate all the potential benefits of parallel activities. A typical case is shown in the figure where P0 has to load two values A and B from two remote memory blocks M1 and Mn to evaluate the expression A + B.

The pointers to A and B, rA and rB, are stored in the local memory of P0. A and B are accessed by the rload rA and rload rB instructions, which have to travel through the interconnection network to fetch A and B.

The situation is even worse if the values of A and B are not yet available in M1 and Mn because they are to be produced by other processes that run later. In this case, where idling occurs due to synchronization between parallel processes, the original process on P0 has to wait for an unpredictable time, resulting in unpredictable latency.

To solve these problems, several hardware/software solutions have been proposed and applied in various parallel computers −

- Application of cache memory

- Prefetching

- Introduction of threads and a fast context switching mechanism among threads
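The third approach, threads with fast context switching, can be illustrated with a small cycle-count model. This is only a sketch under stated assumptions: the function `busy_fraction`, its parameters, and the scheduling policy (run the thread whose data arrives first) are hypothetical and do not describe any particular machine.

```python
def busy_fraction(num_threads, run_cycles, remote_latency,
                  switch_cycles, loads_per_thread):
    """Fraction of processor cycles spent on useful work.

    Each thread repeatedly computes for run_cycles and then issues a
    remote load that takes remote_latency cycles. Instead of waiting,
    the processor switches to another thread at a cost of switch_cycles.
    """
    ready_at = [0] * num_threads            # cycle when each thread's data arrives
    remaining = [loads_per_thread] * num_threads
    clock = 0
    useful = 0
    current = None
    while any(remaining):
        candidates = [t for t in range(num_threads) if remaining[t] > 0]
        # Pick the unfinished thread whose pending load completes first.
        t = min(candidates, key=lambda i: ready_at[i])
        if ready_at[t] > clock:
            clock = ready_at[t]             # nothing runnable: processor idles
        if current is not None and t != current:
            clock += switch_cycles          # pay for the context switch
        current = t
        clock += run_cycles                 # useful computation
        useful += run_cycles
        ready_at[t] = clock + remote_latency
        remaining[t] -= 1
    return useful / clock


# Assumed numbers: 10 cycles of work per load, 100-cycle remote latency,
# 2-cycle context switch.
single = busy_fraction(1, 10, 100, 2, 5)
several = busy_fraction(4, 10, 100, 2, 5)
many = busy_fraction(12, 10, 100, 2, 5)
print(f"1 thread: {single:.2f}, 4 threads: {several:.2f}, 12 threads: {many:.2f}")
```

In this model, utilization rises with the number of threads until the other threads' work fully covers the remote latency. After that point the context switch cost becomes the limiting factor, which is why the switch has to be fast.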

The use of cache memory greatly reduces the time spent on remote load operations if most of them can be performed on the local cache. Suppose that A is placed in the same cache block as C and D which are objects in the expression following the one that contains A −

- Result := A + B;

- Result2 := C - D;

Under such circumstances, caching A will also bring C and D into the cache memory of P0. The remote loads of C and D are then replaced by local cache operations, which significantly accelerates program execution.
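The effect can be reproduced with a toy cache model that fetches whole blocks. This is a minimal sketch: the class `BlockCache`, the block size of 4, and the addresses assigned to A, B, C and D are assumptions chosen so that A, C and D share one block, as in the example above.

```python
class BlockCache:
    """Toy cache that fetches a whole block on a miss and never evicts."""

    def __init__(self, block_size):
        self.block_size = block_size
        self.blocks = set()       # block numbers currently held in the cache
        self.remote_loads = 0     # misses served over the interconnection network
        self.hits = 0             # loads served from the local cache

    def load(self, address):
        block = address // self.block_size
        if block in self.blocks:
            self.hits += 1
        else:
            self.remote_loads += 1    # the whole block is brought in
            self.blocks.add(block)


# Hypothetical layout: A, C and D fall into block 0, B lives elsewhere.
ADDRESS = {"A": 0, "C": 1, "D": 2, "B": 64}

cache = BlockCache(block_size=4)
for name in ["A", "B", "C", "D"]:     # Result := A + B; Result2 := C - D
    cache.load(ADDRESS[name])

print("remote loads:", cache.remote_loads)
print("cache hits  :", cache.hits)
```

Four operands are read, but only A and B cause remote loads; C and D are found in the block that was fetched for A.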

The prefetching technique relies on a similar principle. The main idea is to bring data into the local memory or cache before it is needed. A prefetch operation is a direct non-blocking request to fetch information before the actual memory operation is issued.
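A simple timing model shows why issuing the request early helps. The sketch below assumes that the processor has some independent work to do before it needs the value. The function `stall_cycles` and the cycle counts are hypothetical and ignore effects such as network contention and cache eviction.

```python
def stall_cycles(independent_work, remote_latency, prefetch):
    """Cycles the processor waits when it finally needs the remote value.

    independent_work -- cycles of work that do not depend on the value
    remote_latency   -- cycles a remote fetch takes
    prefetch         -- True if a non-blocking request is issued before the work
    """
    if prefetch:
        # The fetch overlaps with the independent work; only the part of the
        # latency that the work does not cover is still visible.
        return max(0, remote_latency - independent_work)
    # Without prefetching, the load is issued only when the value is needed,
    # so the full latency is exposed.
    return remote_latency


REMOTE_LATENCY = 100  # assumed cycle count

print(stall_cycles(40, REMOTE_LATENCY, prefetch=False))
print(stall_cycles(40, REMOTE_LATENCY, prefetch=True))
print(stall_cycles(150, REMOTE_LATENCY, prefetch=True))
```

In this model the stall disappears only when the prefetch is issued at least one full remote latency before the value is used; a later prefetch hides only part of the latency.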