Data Structure

Data Structure Networking

Networking RDBMS

RDBMS Operating System

Operating System Java

Java MS Excel

MS Excel iOS

iOS HTML

HTML CSS

CSS Android

Android Python

Python C Programming

C Programming C++

C++ C#

C# MongoDB

MongoDB MySQL

MySQL Javascript

Javascript PHP

PHP

- Selected Reading

- UPSC IAS Exams Notes

- Developer's Best Practices

- Questions and Answers

- Effective Resume Writing

- HR Interview Questions

- Computer Glossary

- Who is Who

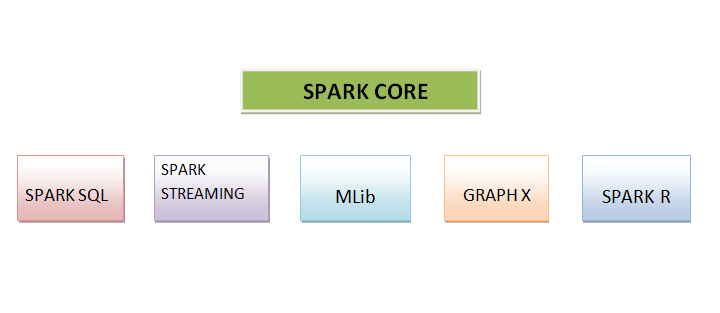

Components of Apache Spark

Apache Spark is a cluster computing system. It provides high-level APIs in Python, Scala, Java, and R, which make it easy to write parallel jobs. It offers general-purpose, fast data processing. Spark is written in Scala and is faster than disk-based engines such as Hadoop MapReduce. It is used to process large datasets and is one of the most active Apache projects. Its key feature is in-memory cluster computing, which increases data processing speed. Its main features are Multiple Language Support, Platform Independence, High Speed, Modern Analytics, and General-Purpose processing.

Now that we know some important features of Apache Spark, let us dive deeper into its components.

How does Apache Spark work?

Hadoop MapReduce is a programming paradigm that uses a distributed, parallel approach to process large amounts of data. Developers can write massively parallel operators without having to worry about fault tolerance. However, MapReduce runs a job as a sequence of multiple steps: at every step it reads data from HDFS, performs operations, and writes the result back to HDFS. This repeated disk I/O makes MapReduce jobs slow.
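To make the cost of those repeated reads and writes concrete, here is a minimal single-machine sketch of a MapReduce-style word count in plain Python. It is not Hadoop's API: a local temporary directory stands in for HDFS, and the helper names (`write_json`, `read_json`, `word_count_mapreduce`) are hypothetical. Each stage writes its output to disk and the next stage reads it back, which is the pattern described above.

```python
import json
import os
import tempfile
from collections import defaultdict


def write_json(path, data):
    """Persist an intermediate result (stands in for a write to HDFS)."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(data, f)


def read_json(path):
    """Load an intermediate result back (stands in for a read from HDFS)."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def word_count_mapreduce(lines, workdir):
    # Step 1 (map): emit a [word, 1] pair for every word, then write to disk.
    pairs = [[word, 1] for line in lines for word in line.split()]
    write_json(os.path.join(workdir, "map_out.json"), pairs)

    # Step 2 (shuffle): read the map output back, group values by word, write again.
    groups = defaultdict(list)
    for word, one in read_json(os.path.join(workdir, "map_out.json")):
        groups[word].append(one)
    write_json(os.path.join(workdir, "shuffle_out.json"), groups)

    # Step 3 (reduce): read the grouped data back and sum each word's values.
    grouped = read_json(os.path.join(workdir, "shuffle_out.json"))
    counts = {word: sum(ones) for word, ones in grouped.items()}
    write_json(os.path.join(workdir, "reduce_out.json"), counts)
    return counts


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(word_count_mapreduce(["spark is fast", "spark is general"], tmp))
```

Even in this tiny job, the data makes three trips to disk and two trips back; on a cluster, each of those trips is a network and disk round-trip.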

Apache Spark was developed to overcome these limitations of MapReduce. With Spark, reading data into memory, performing operations, and writing out the results can be done in a single step, because intermediate results stay in memory instead of being written back to disk. This leads to faster execution.
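For contrast, the same toy word count can keep every intermediate result in memory. This is a plain-Python analogy, not Spark code: the generator loosely plays the role of Spark's lazily evaluated chain of transformations, and `word_count_in_memory` is a hypothetical name.

```python
from collections import Counter


def word_count_in_memory(lines):
    # Tokenizing, grouping, and counting all operate on in-memory objects;
    # nothing is written out between the steps.
    words = (word for line in lines for word in line.split())  # lazy, evaluated on demand
    return dict(Counter(words))  # one pass over the words produces the final counts
```

For comparison, the equivalent PySpark RDD chain would look roughly like `sc.textFile(path).flatMap(lambda line: line.split()).map(lambda w: (w, 1)).reduceByKey(lambda a, b: a + b)`, where `sc` is an existing `SparkContext` and `path` is a placeholder input location.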

Components of Apache Spark

Apache Spark consists of six components, described below:

SPARK CORE

Spark Core is the foundation of the platform. It is responsible for memory management, job scheduling, job distribution, fault recovery, and job monitoring, as well as interaction with storage systems. It is accessible through APIs for Python, Java, Scala, and R. These APIs hide the complexity of distributed processing behind high-level operators.
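Two of Spark Core's jobs, distributing work and recovering from failures, can be illustrated with a toy scheduler. The sketch below is an assumption-laden analogy, not Spark's scheduler: threads stand in for executors, a list slice stands in for a partition, and `partition`, `run_job`, and the `fail_once` parameter are hypothetical names. A failed partition is simply recomputed from its original input, which loosely mirrors how Spark recomputes lost partitions from their lineage.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(data, num_partitions):
    """Split a list into roughly equal slices, one slice per task."""
    size = max(1, -(-len(data) // num_partitions))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def run_job(data, task, num_partitions=4, max_retries=2, fail_once=()):
    """Run `task` on every partition in parallel and sum the partial results.

    Partition indexes listed in `fail_once` fail on their first attempt to
    simulate a lost worker; the failed partition is then recomputed from its
    original input. Returns (combined_result, attempts_per_partition).
    """
    parts = partition(data, num_partitions)
    attempts = {i: 0 for i in range(len(parts))}

    def attempt(i):
        while True:
            attempts[i] += 1
            try:
                if i in fail_once and attempts[i] == 1:
                    raise RuntimeError(f"partition {i} lost")
                return task(parts[i])
            except RuntimeError:
                if attempts[i] > max_retries:
                    raise  # give up after max_retries retries
                # Otherwise loop and recompute the partition from parts[i].

    # Threads stand in for executors running on different machines.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_results = list(pool.map(attempt, range(len(parts))))
    return sum(partial_results), attempts
```

For example, `run_job(list(range(1, 101)), sum, fail_once=(2,))` still returns a total of 5050, with partition 2 attempted twice. Recomputing from the source works here because the task is deterministic; Spark relies on the same property of its transformations.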

SPARK SQL

Spark SQL is a distributed query engine that offers low-latency, interactive queries that run faster than MapReduce. It scales to thousands of nodes and includes a cost-based optimizer and code generation for fast queries. It comes with built-in support for a number of data sources, and through the Spark Packages ecosystem it can access well-known stores such as Amazon S3, Amazon Redshift, Couchbase, and many more.
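A cost-based optimizer compares alternative plans using estimated statistics and picks the cheapest one. The sketch below shows that idea for join ordering only. It uses a made-up cost model (every join keeps a fixed fraction, `selectivity`, of the cross product, and a plan's cost is the total size of its intermediate results), and the function names are hypothetical. Spark SQL's actual optimizer, Catalyst, uses richer table and column statistics; in recent versions its cost-based mode is controlled by the `spark.sql.cbo.enabled` setting.

```python
from itertools import permutations


def estimate_cost(order, rows, selectivity):
    """Sum the estimated sizes of intermediate results for a left-deep join order.

    Toy cost model (an assumption, not Spark's): joining a result of n rows
    with a table of m rows produces n * m * selectivity rows.
    """
    size = rows[order[0]]
    cost = 0.0
    for table in order[1:]:
        size = size * rows[table] * selectivity
        cost += size
    return cost


def choose_join_order(rows, selectivity=0.001):
    """Return the left-deep join order with the lowest estimated cost."""
    return min(
        permutations(rows),
        key=lambda order: estimate_cost(order, rows, selectivity),
    )
```

With `rows = {"orders": 1_000_000, "customers": 10_000, "regions": 10}`, the cheapest plan joins the two small tables first and brings in `orders` last, because that keeps the first intermediate result small.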

SPARK STREAMING

Spark Streaming is a real-time solution for streaming analytics that makes use of Spark Core's fast scheduling capability. It supports many well-known sources, such as Twitter, Flume, and HDFS, some of which are available through the Spark Packages ecosystem. It can apply a variety of algorithms to the incoming data. It uses micro-batching for real-time streaming: the incoming stream is divided into small batches, and each batch is processed by the Spark engine.
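Micro-batching can be sketched in a few lines: group timestamped events into fixed-length intervals, then run the same small batch job on each interval. This is a plain-Python illustration, not the Spark Streaming API; the `(timestamp_ms, value)` event format and the function names are assumptions, and unlike a real streaming engine this sketch skips intervals that received no data.

```python
from collections import Counter


def micro_batches(events, batch_interval_ms):
    """Group (timestamp_ms, value) events into fixed-length time intervals.

    Yields (batch_start_ms, values) for each interval that received data,
    in time order. Empty intervals are skipped in this sketch.
    """
    batches = {}
    for ts, value in sorted(events):
        start = (ts // batch_interval_ms) * batch_interval_ms
        batches.setdefault(start, []).append(value)
    for start in sorted(batches):
        yield start, batches[start]


def process_stream(events, batch_interval_ms=1000):
    """Apply the same small batch job (a count per value) to every micro-batch."""
    return [
        (start, dict(Counter(values)))
        for start, values in micro_batches(events, batch_interval_ms)
    ]
```

In PySpark's DStream API, the equivalent knob is the batch interval (in seconds) passed as `batchDuration` when creating a `StreamingContext`.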

MLlib

MLlib is Spark's library of machine learning algorithms and an important part of the platform. It enables machine learning on large-scale data, so machine learning tasks can be carried out quickly. It was designed for fast, interactive computation that runs in memory, and it contains implementations of various algorithms, such as classification, regression, and clustering.
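Many machine learning algorithms are iterative and scan the same data on every iteration, which is why in-memory computation matters so much for them. As an illustration, here is a single-machine k-means (Lloyd's algorithm) on one-dimensional points in plain Python. MLlib provides its own distributed k-means; this sketch is not its API, and `kmeans_1d` is a hypothetical name.

```python
def kmeans_1d(points, centers, iterations=10):
    """Lloyd's k-means on 1-D points, starting from the given initial centers.

    Every iteration re-reads the full dataset, so keeping it in memory
    (instead of re-reading it from disk) directly speeds up each pass.
    """
    centers = list(centers)
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            # Assign each point to its nearest current center.
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Move each center to the mean of its points (keep it if it has none).
        new_centers = [
            sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)
        ]
        if new_centers == centers:
            break  # converged: assignments no longer change the centers
        centers = new_centers
    return centers
```

For example, `kmeans_1d([1.0, 2.0, 3.0, 10.0, 11.0, 12.0], [0.0, 5.0])` settles on centers `[2.0, 11.0]` after three passes over the data.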

GRAPHX

GraphX is a distributed graph processing framework built on Spark. It comes with a variety of graph algorithms and a flexible API. Developers use it to work with graph data and for exploratory analysis, among other tasks, and it helps users build applications on top of graph data.
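PageRank is one of the graph algorithms that ships with GraphX. GraphX's API is Scala-based, so the sketch below only illustrates the algorithm itself in plain Python on a small edge list; `pagerank` and its parameters are hypothetical names, and the damping factor of 0.85 is the conventional choice rather than anything taken from GraphX.

```python
def pagerank(edges, damping=0.85, iterations=50):
    """Iterative PageRank over a directed edge list of (src, dst) pairs.

    Nodes with no outgoing edges spread their rank evenly over all nodes,
    so the ranks always sum to 1.
    """
    nodes = sorted({n for edge in edges for n in edge})
    out_links = {n: [] for n in nodes}
    for src, dst in edges:
        out_links[src].append(dst)

    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Every node keeps a small base rank from random jumps.
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node in nodes:
            targets = out_links[node] or nodes  # dangling node: spread to everyone
            share = damping * rank[node] / len(targets)
            for t in targets:
                new_rank[t] += share
        rank = new_rank
    return rank
```

On the graph `a -> b -> c -> a` with an extra edge `d -> c`, node `c` ends up with the highest rank because it is the only node with two incoming links, while `d`, which nothing links to, keeps only the base rank.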

SPARKR

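R is a programming language commonly used by data scientists because of its simplicity and its ability to run sophisticated algorithms. Its biggest drawback is that it is limited to the computing power of a single node, which makes it unsuitable for processing massive amounts of data. SparkR is an R package that provides a lightweight frontend for using Apache Spark from R, so R users can run their analyses on a Spark cluster.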

Benefits of Apache Spark

Apache Spark comes with many capabilities, some of which are listed below:

Fast: It can run fast queries against large datasets.

User-friendly: It provides APIs in many programming languages, such as Java, Python, Scala, and R.

Multiple Workloads: It seamlessly supports multiple workloads, such as interactive queries, real-time analytics, machine learning, and graph processing.

Platform-Independent: It can run on any platform without much hassle.

Conclusion

Apache Spark is a cluster computing system. It is platform-independent and is used to process huge datasets. It provides high-level APIs in Python, Java, Scala, and R. It consists of six main components, namely Spark Core, Spark SQL, Spark Streaming, MLlib, GraphX, and SparkR. These components can be used separately or all together. Spark can run multiple workloads and can run fast queries against huge amounts of data. It makes it easy to write parallel jobs, and its simple, high-level APIs make it practical to run sophisticated algorithms at scale.