Predictive Analytics - Statistical Methods & Machine Learning Techniques

Predictive modelling is the foundation of predictive analytics. Predictive analytics and machine learning are closely linked because most predictive models are built with machine learning algorithms. These models are retrained over time to adapt to new data and to produce the predictions the business requires.

There are two main types of prediction models. Classification models predict class membership, while regression models predict numeric values. Each model is built from an algorithm that performs data mining and statistical analysis to identify trends and patterns in the data. Predictive analytics tools include built-in algorithms that can be used to generate predictive models. Algorithms that assign data items to classes are known as 'classifiers'.

Some of the most commonly used statistical methods applied in predictive analytics are as follows −

1. Regression Analysis

Regression is a statistical technique that estimates the relationship between a dependent variable and one or more independent variables. It is effective for identifying trends in large datasets and for measuring how strongly inputs are related to an outcome. It performs best on continuous data with a known distribution. Regression is frequently used to determine how one or more independent variables influence a dependent variable, such as how a price increase affects product sales.

The main categories of regression analysis are as follows −

Linear Regression

Linear regression models the relationship between a dependent variable and one or more independent variables by fitting a linear equation to the data. It is widely used to predict continuous outcomes, such as sales or prices.

A linear regression equation is represented as follows −

Y = a + bX

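Here, X is the independent variable plotted along the x-axis and Y is the dependent variable plotted along the y-axis. The constant a is the intercept (the value of Y when X is 0), and b is the slope (the change in Y for a one-unit change in X).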
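As a minimal sketch of how the line Y = a + bX can be fitted, the example below uses ordinary least squares in plain Python. The function name `fit_line` and the price and sales figures are hypothetical and chosen only for illustration.

```python
def fit_line(xs, ys):
    """Return (a, b) for the least-squares line Y = a + bX."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: covariance of X and Y divided by the variance of X.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    # Intercept: the fitted line passes through the point of means.
    a = mean_y - b * mean_x
    return a, b


# Hypothetical data: product price and units sold at that price.
prices = [10, 12, 14, 16, 18]
units_sold = [100, 90, 80, 70, 60]

a, b = fit_line(prices, units_sold)
predicted_units = a + b * 20  # predicted sales at a price of 20
print(a, b, predicted_units)
```

With this made-up data the slope is negative, which matches the intuition that a price increase lowers sales.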

Lasso and Ridge Regression

These are linear regression models that add a penalty on coefficient size (regularization) to prevent overfitting. Ridge shrinks the coefficients of less important variables towards zero, while lasso can shrink them to exactly zero. They are most commonly used in scenarios that involve many predictors, for example, predicting house values from multiple variables.
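The shrinking effect of the penalty can be illustrated with a small sketch. It assumes the simplest possible case: ridge regression with one predictor and an unpenalized intercept, where the slope has the closed form Sxy / (Sxx + lambda). The function name `ridge_slope` and the data are hypothetical, and lasso is not covered because it has no comparable one-line formula in general.

```python
def ridge_slope(xs, ys, lam):
    """Ridge slope for one predictor with an unpenalized intercept.

    Minimises sum((y - a - b*x)^2) + lam * b^2, which gives
    b = Sxy / (Sxx + lam) once the data are centred.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / (sxx + lam)


# Hypothetical data lying exactly on the line y = 2x.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 6, 8, 10]

for lam in (0, 10, 30):
    # A larger penalty pulls the slope further towards zero.
    print(lam, ridge_slope(xs, ys, lam))
```

With a penalty of 0 the result equals the ordinary least-squares slope, and increasing the penalty moves the coefficient steadily closer to zero.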

Logistic Regression

Logistic regression is another category of regression analysis. It is used when the dependent variable is categorical, often with binary categories such as success/failure or yes/no. It estimates the probability that an event occurs.

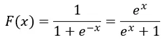

A logistic function is commonly employed in statistical models to model a binary dependent variable. The logistic function is also known as the sigmoid function, which is defined as −

S(x) = 1 / (1 + e^(-x))

This function lets the logistic regression model squeeze any real-valued input into the range (0, 1), so the output can be read as a probability. Logistic regression is primarily used for binary classification tasks, although it can also be extended to multiclass classification.
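The squeezing behaviour can be seen in a short sketch of the standard sigmoid, S(x) = 1 / (1 + e^(-x)). The helper `predict_probability` and its intercept and slope values are hypothetical, not fitted to any real data.

```python
import math


def sigmoid(x):
    """Standard logistic (sigmoid) function; the result lies strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def predict_probability(x, intercept, slope):
    """Probability of the positive class for a one-feature logistic model."""
    return sigmoid(intercept + slope * x)


for value in (-10, -2, 0, 2, 10):
    print(value, sigmoid(value))

# Hypothetical coefficients: probability of "yes" for a feature value of 3.
print(predict_probability(3, intercept=-1.5, slope=0.5))
```

A common convention, assumed here rather than taken from the text, is to classify an observation as the positive class when the probability is at least 0.5.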

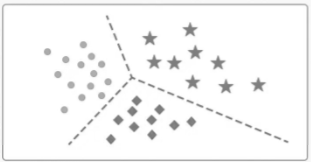

2. Classification Models

Classification models are a very popular type of statistical model in predictive analytics. They identify or categorize an observation based on its features, using patterns learned from historical data. A classification model works with a training dataset in which every data item has been labelled. The classification algorithm learns the relationships between the data and the labels and then categorizes new data. Decision trees, random forests, and text analytics are some of the most common classification techniques.

Classification models are widely used across industries because they are easy to retrain with new data. Banks frequently use classification models to detect fraudulent transactions. The system can evaluate millions of historical transactions to predict potentially fraudulent activity and notify users when their account behaviour appears suspicious.

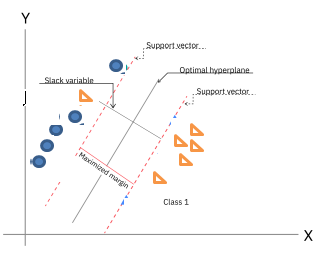

Support Vector Machines (SVM)

SVM is a classification technique that uses a hyperplane to separate different classes of data. It works well in high-dimensional spaces and can be used for both classification and regression problems. For example − image classification based on pixel data.

- Hyperplane − Many lines or decision boundaries could separate the classes in n-dimensional space, so we must determine which one is most appropriate for classifying data points. The best boundary, the one with the largest margin to the nearest points of each class, is referred to as the SVM hyperplane.

- Support Vectors − Support Vectors are data points or vectors that are closest to the hyperplane and have an effect on its position. Since these vectors support the hyperplane, they are referred to as support vectors.
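The two terms above can be made concrete with a small sketch. It does not train an SVM: the four points are hypothetical, and the hyperplane (the vertical line x = 1) is written in by hand because it happens to be the maximum-margin boundary for this toy data. The code only shows how points are classified by the side of the hyperplane they fall on and how the closest points are identified as support vectors.

```python
import math

# Hypothetical 2-D data: (point, label) with labels +1 and -1.
points = [
    ((2.0, 0.0), 1),
    ((3.0, 1.0), 1),
    ((0.0, 0.0), -1),
    ((-1.0, 1.0), -1),
]

# Hyperplane w.x + b = 0, stated by hand (not learned): the line x = 1.
w = (1.0, 0.0)
b = -1.0


def decision_value(p):
    """Signed score: positive on one side of the hyperplane, negative on the other."""
    return w[0] * p[0] + w[1] * p[1] + b


def classify(p):
    return 1 if decision_value(p) >= 0 else -1


def distance_to_hyperplane(p):
    return abs(decision_value(p)) / math.hypot(w[0], w[1])


distances = [distance_to_hyperplane(p) for p, _ in points]
margin = min(distances)
# Support vectors are the points that lie closest to the hyperplane.
support_vectors = [p for (p, _), d in zip(points, distances) if math.isclose(d, margin)]

print(margin, support_vectors)
```

In practice a library would search for w and b; the sketch only illustrates what the resulting boundary and its support vectors mean.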

Bayesian Methods

In statistics, naive Bayes classifiers are probabilistic classifiers that use Bayes' Theorem to classify data. The theorem gives the probability of a hypothesis, given observed facts and prior information. The naive Bayes classifier assumes that all features in the input data are independent of one another, which is rarely the case in practice. Despite this simplistic assumption, the naive Bayes classifier is used extensively because of its efficiency and strong performance in many real-world applications.

Bayesian inference uses Bayes' theorem to update the probability of a hypothesis when new data becomes available. Its most common applications are in probabilistic reasoning and classification. Example: predicting email spam based on characteristics such as the presence of specified terms.
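The update described above can be worked through numerically. The sketch below applies Bayes' theorem to the spam example; the function name `posterior` and all of the probabilities are hypothetical values chosen for illustration.

```python
def posterior(prior, likelihood, likelihood_if_not):
    """P(H | E) from P(H), P(E | H) and P(E | not H), using Bayes' theorem."""
    evidence = likelihood * prior + likelihood_if_not * (1 - prior)
    return likelihood * prior / evidence


# Hypothetical figures: 20% of all email is spam, the word "free"
# appears in 60% of spam messages and in 5% of legitimate messages.
p_spam_given_free = posterior(prior=0.2, likelihood=0.6, likelihood_if_not=0.05)
print(p_spam_given_free)
```

Under these assumed figures, seeing the word raises the probability of spam from the prior of 0.2 to about 0.75.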

Naive Bayes

Naive Bayes is a classification technique that uses Bayes' Theorem and assumes independence between features. It is most frequently employed in text classification and recommendation systems. For example − spam email classification.

Principal Component Analysis (PCA)

PCA is a dimensionality reduction approach that converts data into a set of uncorrelated variables known as principal components. It is not a classifier itself, but it is commonly applied before modelling to reduce the number of features while retaining most of the variability in the data. For example, reducing the complexity of large datasets before predictive modelling.

3. Clustering Models

Clustering models organize data based on comparable features. A clustering model employs a data matrix that associates each data item with its relevant features. Using this matrix, the algorithm clusters items with similar attributes, identifying patterns in the data.

Clustering models can be used by organizations to group customers and develop more tailored marketing strategies. For example, a restaurant may group their customers by location and only send flyers to those who reside within a specified driving distance of their newest location.

K-Means and K-Nearest Neighbors

Clustering algorithms such as k-Means divide data points into clusters based on the similarity of their characteristics. k-Means is most commonly used for customer segmentation. For example, customers with similar behaviour patterns can be grouped together to help predict their future actions.

K-Nearest Neighbors (k-NN), despite the similar name, is not a clustering algorithm. It is a non-parametric supervised method that classifies an object by the majority class of its nearest labelled neighbours. It is most commonly employed in classification, although it can also be applied to regression. For example, a new client can be assigned to an existing group based on the purchase behaviour of the most similar known clients.
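A minimal sketch of the k-NN majority vote is shown below. The function name `knn_predict`, the customer features (orders per year and yearly spend) and the labels are all hypothetical. Euclidean distance is assumed as the similarity measure, and the features are left unscaled to keep the example short.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Return the majority label among the k training points nearest to query.

    train is a list of (features, label) pairs.
    """
    neighbours = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Hypothetical labelled customers: (orders per year, yearly spend).
customers = [
    ((2, 20), "occasional"),
    ((3, 25), "occasional"),
    ((1, 15), "occasional"),
    ((10, 200), "frequent"),
    ((12, 220), "frequent"),
    ((11, 180), "frequent"),
]

print(knn_predict(customers, (9, 190)))
print(knn_predict(customers, (2, 18)))
```

Because k-NN relies on labelled examples, it needs a training set with known groups, which is what distinguishes it from clustering.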

Decision Trees

Decision trees are models that assign data to categories based on a series of tests on different variables. Overall, a decision tree resembles a flowchart, with each internal node representing a "decision" on a feature, each branch representing a result of that decision, and each leaf representing a class label. They are most commonly used for classification and regression problems.

The model is best suited to situations where it is important to understand how a decision is reached, because the path from the root to a leaf can be read as a sequence of choices. Decision trees are often simple to grasp and can perform well when a dataset has many missing values. Example: Predicting customer churn based on usage patterns.
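How a tree chooses the "decision" at a node can be sketched with a single split. The example below assumes Gini impurity as the splitting criterion, which is one common choice among several, and searches one feature for the best threshold. The function names and the churn data are hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels (0.0 means a pure node)."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_split(values, labels):
    """Find the threshold on one feature that minimises weighted Gini impurity."""
    best_threshold, best_score = None, float("inf")
    for threshold in sorted(set(values)):
        left = [l for v, l in zip(values, labels) if v <= threshold]
        right = [l for v, l in zip(values, labels) if v > threshold]
        if not left or not right:
            continue  # a split must send data to both branches
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_threshold, best_score = threshold, score
    return best_threshold, best_score


# Hypothetical usage data: monthly logins and whether the customer churned (1) or not (0).
logins = [1, 2, 3, 4, 10, 12, 15, 20]
churned = [1, 1, 1, 1, 0, 0, 0, 0]

threshold, impurity = best_split(logins, churned)
print(threshold, impurity)
```

A full tree would repeat this search on each branch until the leaves are pure enough or a depth limit is reached.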

Random Forest

A random forest is an ensemble method that extends decision trees: it trains many trees and aggregates their outputs to increase predictive accuracy and decrease overfitting. It is most commonly used for classification and regression problems. Example: Predicting a disease diagnosis based on multiple patient factors.

Gradient Boosting

Gradient boosting is an ensemble method that builds models, usually decision trees, in sequence, with each new model correcting the errors of the preceding ones (e.g., XGBoost). It is most commonly used for structured/tabular data in classification and regression. Example: Predicting customer lifetime value.
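The sequential error-correcting idea can be sketched for regression with squared error, where each round fits a one-split tree (a "stump") to the residuals of the current predictions. This is a simplified illustration, not how XGBoost is implemented, and the function names, learning rate and customer data are hypothetical.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression tree to the residuals under squared error."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the max so the right side is never empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Return a prediction function built from n_rounds sequential stumps."""
    base = sum(ys) / len(ys)  # start from the mean prediction
    stumps = []
    predictions = [base] * len(xs)
    for _ in range(n_rounds):
        # Each new stump is trained on the errors left by the model so far.
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump(x) for p, x in zip(predictions, xs)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)


def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: months as a customer and lifetime value.
months = [1, 2, 3, 4, 5, 6]
value = [10, 12, 15, 30, 32, 35]

one_round = gradient_boost(months, value, n_rounds=1)
boosted = gradient_boost(months, value, n_rounds=20)
print(mse(one_round, months, value), mse(boosted, months, value))
```

The training error shrinks as rounds are added, which is also why boosted models are usually checked on held-out data to avoid overfitting.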

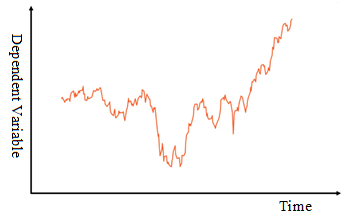

4. Time-series Models

Time series models work with data points collected over time. Many real-world datasets can be represented as time series, and time is one of the most commonly used independent variables in predictive analytics. Time series analysis methods such as ARIMA (Auto-Regressive Integrated Moving Average) and exponential smoothing are used to model data across time. They are most commonly used to anticipate future values based on historical trends. For example − stock price prediction and demand prediction.

A typical time series model might use the previous year's data to forecast a metric for the coming weeks. Advanced business analytics tools such as Power BI and Tableau enable organizations to predict and analyze different scenarios without wasting time or resources. Because time is such a common variable, businesses use time series data for many different purposes. Key applications include seasonality analysis, which predicts how assets are influenced by specific times of the year, and trend analysis, which identifies asset movements over time. Practical examples include projecting revenue for the next quarter and predicting the number of visitors to a store.
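Of the methods mentioned in this section, simple exponential smoothing is the easiest to sketch. Each smoothed value is a weighted average of the latest observation and the previous smoothed value, and the final smoothed value can serve as a one-step-ahead forecast. The function name, the smoothing factor `alpha` and the visitor counts are hypothetical.

```python
def exponential_smoothing(series, alpha):
    """Simple exponential smoothing.

    alpha near 1 follows recent values closely; alpha near 0 smooths heavily.
    """
    smoothed = [series[0]]  # initialise with the first observation
    for value in series[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed


# Hypothetical weekly store visitor counts.
weekly_visitors = [100, 110, 120, 130]

smoothed = exponential_smoothing(weekly_visitors, alpha=0.5)
forecast = smoothed[-1]  # one-step-ahead forecast for next week
print(smoothed, forecast)
```

This simple form lags behind a steady trend, so variants that model trend and seasonality are generally preferred when those patterns are present.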

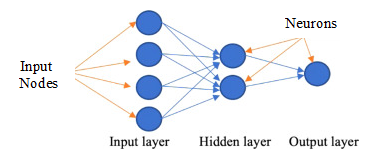

5. Neural Networks

Neural networks are machine learning techniques that play a vital role in predictive analytics by finding complex relationships. Essentially, they are pattern recognition algorithms. Neural networks are computational models inspired by the human brain, made up of layers of interconnected nodes ("neurons"). Deep learning is a subclass of neural networks that uses many layers, and it is mostly used for complicated tasks such as image classification and natural language processing. Example: predicting product recommendations in e-commerce.

Neural networks are ideally suited to determining nonlinear correlations in datasets, particularly when there is no known mathematical technique for analyzing the data. Neural networks can be used to verify the output of decision trees and regression models.
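A tiny example shows why layers of neurons can capture nonlinear relationships. The sketch below is a two-layer network that computes XOR, a pattern that no single straight-line boundary can separate. The weights are set by hand purely for illustration; a real network would learn them from data and would normally use smooth activation functions rather than the step function assumed here.

```python
def step(z):
    """Threshold activation: the neuron fires (1) only when its input is positive."""
    return 1 if z > 0 else 0


def tiny_network(x1, x2):
    """Two hidden neurons and one output neuron with hand-set weights."""
    h_or = step(x1 + x2 - 0.5)   # fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)  # fires only if both inputs are 1
    # Output fires when the OR neuron is on and the AND neuron is off, i.e. XOR.
    return step(h_or - h_and - 0.5)


outputs = {(a, b): tiny_network(a, b) for a in (0, 1) for b in (0, 1)}
print(outputs)
```

The hidden layer turns the inputs into new features (OR and AND), and the output neuron combines them, which is the basic mechanism behind modelling nonlinear relationships.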