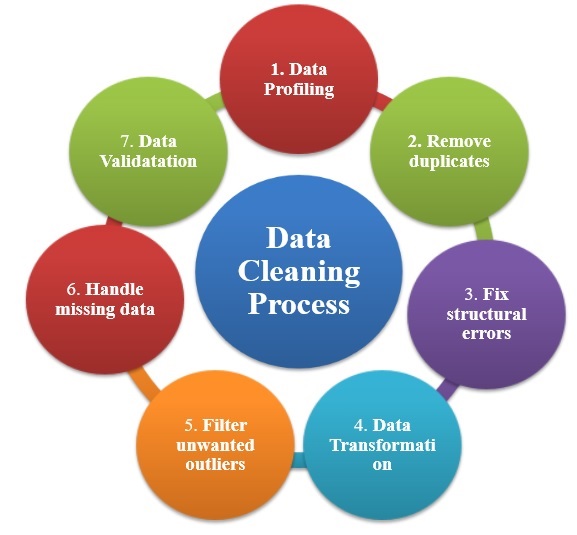

Business Analytics - Data Cleaning Process

Data cleaning, also known as data cleansing or data scrubbing, is an important process in data management that involves detecting errors and inconsistencies in data and correcting them to improve overall data quality. The purpose is to make sure that the data is accurate, complete, and reliable for analysis.

This article describes the data cleaning process in detail and walks through the steps it involves.

Step 1: Data Profiling

Data profiling examines the structure, content, and quality of the data, for example by reviewing whether the data type of each column is appropriate.
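As a rough illustration of reviewing column types, the sketch below counts which kinds of values appear in each column of a small dataset. The sample records, the column names, and the helpers `infer_type` and `profile` are hypothetical, and plain Python is used only to keep the example dependency-free.

```python
from collections import Counter

# Hypothetical sample records; the column names are illustrative only.
records = [
    {"customer_id": "101", "age": "34", "signup_date": "2023-01-15"},
    {"customer_id": "102", "age": "n/a", "signup_date": "2023-02-30"},
    {"customer_id": "103", "age": "", "signup_date": "2023-03-10"},
]

def infer_type(value):
    """Classify a raw string as 'missing', 'int', 'float', or 'text'."""
    if value is None or value.strip() == "":
        return "missing"
    for caster, name in ((int, "int"), (float, "float")):
        try:
            caster(value)
            return name
        except ValueError:
            continue
    return "text"

def profile(rows):
    """Count the inferred value types seen in each column."""
    summary = {}
    for row in rows:
        for column, value in row.items():
            summary.setdefault(column, Counter())[infer_type(value)] += 1
    return summary

column_profile = profile(records)
for column, counts in column_profile.items():
    print(column, dict(counts))
```

A column that mixes several inferred types, such as `age` here, is a candidate for closer inspection in the later steps.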

Step 2: Remove duplicates and Irrelevant Data

Remove duplicate or irrelevant records from the dataset. Duplicate records add redundancy, which unnecessarily increases the size of the dataset and can distort analytical results, for example by counting the same observation more than once.
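A minimal sketch of this step, assuming records are held as dictionaries: exact duplicates are dropped while keeping the first occurrence, and irrelevant rows are filtered with a caller-supplied rule. The `orders` data and the rule that rows with status `"test"` are irrelevant are assumptions made for illustration.

```python
# Hypothetical order records; field names are assumptions for illustration.
orders = [
    {"order_id": 1, "customer": "Asha", "status": "shipped"},
    {"order_id": 2, "customer": "Ben", "status": "test"},
    {"order_id": 1, "customer": "Asha", "status": "shipped"},  # exact duplicate
    {"order_id": 3, "customer": "Chen", "status": "pending"},
]

def drop_duplicates(rows):
    """Keep the first occurrence of each identical record, preserving order."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(sorted(row.items()))  # hashable fingerprint of the record
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique

def drop_irrelevant(rows, is_relevant):
    """Keep only the rows for which the supplied rule returns True."""
    return [row for row in rows if is_relevant(row)]

deduplicated = drop_duplicates(orders)
cleaned_orders = drop_irrelevant(deduplicated, lambda row: row["status"] != "test")
print(len(orders), len(deduplicated), len(cleaned_orders))
```

What counts as irrelevant depends on the question being analysed, so the rule is passed in as a function instead of being hard-coded.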

Step 3: Fix structural errors

Structural errors arise from the layout and format of a dataset, such as inconsistent naming conventions, typos, or mixed date formats. These inconsistencies can lead to mislabelled categories or classes.

For example, "N/A" and "Not Applicable" may both appear in the same column, but they should be treated as the same category. Similarly, some dates may be in the format MM/DD/YYYY while others are in DD/MM/YYYY. Standardizing these date formats provides uniformity and avoids errors in analysis.
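The two fixes in this example could be sketched as follows. The mapping `CANONICAL_LABELS` and both helper functions are hypothetical. Note that a string such as 03/04/2024 is ambiguous on its own, so the sketch assumes the format used by each data source is already known.

```python
from datetime import datetime

# Assumed mapping of label variants to one canonical label.
CANONICAL_LABELS = {"n/a": "N/A", "na": "N/A", "not applicable": "N/A"}

def normalize_label(label):
    """Trim whitespace and map known variants to a single category."""
    cleaned = label.strip()
    return CANONICAL_LABELS.get(cleaned.lower(), cleaned)

def to_iso_date(text, source_format):
    """Convert a date string in a known source format to YYYY-MM-DD."""
    return datetime.strptime(text.strip(), source_format).date().isoformat()

labels = [normalize_label(v) for v in ["N/A", "Not Applicable", " n/a ", "Retail"]]
us_date = to_iso_date("03/04/2024", "%m/%d/%Y")  # source known to use MM/DD/YYYY
eu_date = to_iso_date("03/04/2024", "%d/%m/%Y")  # source known to use DD/MM/YYYY
print(labels, us_date, eu_date)
```

Converting everything to one unambiguous format (ISO 8601 here) means later steps never have to guess which convention a value follows.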

Step 4: Data Transformation

Transform data into a format or structure suitable for analysis. This includes aggregating data, pivoting, and deriving new variables.
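The three transformations named here can be sketched on a small table. The `sales` rows and their field names are made up for illustration; in practice a data-frame library would typically do this work, but plain Python keeps the sketch self-contained.

```python
from collections import defaultdict

# Hypothetical sales rows; the field names are assumptions.
sales = [
    {"region": "North", "quarter": "Q1", "units": 10, "unit_price": 2.5},
    {"region": "North", "quarter": "Q2", "units": 4, "unit_price": 2.5},
    {"region": "South", "quarter": "Q1", "units": 6, "unit_price": 3.0},
    {"region": "North", "quarter": "Q1", "units": 2, "unit_price": 2.5},
]

# Deriving a new variable: revenue = units * unit_price.
for row in sales:
    row["revenue"] = row["units"] * row["unit_price"]

# Aggregation: total revenue per region.
revenue_by_region = defaultdict(float)
for row in sales:
    revenue_by_region[row["region"]] += row["revenue"]

# Pivoting: regions as rows, quarters as columns, summed units as cells.
pivot = defaultdict(lambda: defaultdict(int))
for row in sales:
    pivot[row["region"]][row["quarter"]] += row["units"]

print(dict(revenue_by_region))
print({region: dict(columns) for region, columns in pivot.items()})
```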

Step 5: Filter unwanted outliers

An outlier is a data point that deviates significantly from the other observations in a dataset. An outlier may reflect genuine variability in the measurements, or it may indicate an experimental error, in which case it is sometimes removed from the dataset.
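One common way to detect such points, shown here only as a sketch, is the interquartile range (IQR) rule: values further than 1.5 × IQR below the first quartile or above the third quartile are set aside. The article does not prescribe a method, so the IQR rule, the multiplier `k`, and the `delivery_days` data are all assumptions of this example.

```python
import statistics

def split_outliers(values, k=1.5):
    """Separate values into (kept, flagged) using the IQR rule."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    kept = [v for v in values if lower <= v <= upper]
    flagged = [v for v in values if v < lower or v > upper]
    return kept, flagged

# Hypothetical delivery times in days; 95 is the suspicious value.
delivery_days = [10, 12, 11, 13, 12, 11, 95]
kept_days, flagged_days = split_outliers(delivery_days)
print(kept_days, flagged_days)
```

The function returns the flagged values instead of silently discarding them, so that each one can be reviewed before deciding whether it is an error or a genuine observation.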

Step 6: Handle missing data

Missing values make a dataset difficult to analyse and cannot simply be ignored, because some algorithms do not accept datasets that contain missing values. Missing data can be handled in the following ways −

- Drop − Drop entire records that have missing values. This approach loses information, so take care before removing records.

- Imputation − Fill missing values with estimates based on the other observations, such as the column average. This method can affect data integrity because the imputed values are assumptions, not actual observations.

- Flagging − Mark missing values for special treatment in later analysis.

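- Navigate null values − Adjust how the data is used so that the analysis handles null values effectively, for example by choosing methods that tolerate them.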
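The first three options in the list above can be sketched side by side. The `rows` data and the three helper functions are hypothetical, `None` is assumed to mark a missing value, and the mean is used as the imputed value.

```python
import statistics

# Hypothetical records; None marks a missing value.
rows = [
    {"name": "Asha", "income": 52000},
    {"name": "Ben", "income": None},
    {"name": "Chen", "income": 48000},
]

def drop_missing(records, column):
    """Drop: remove every record whose value in `column` is missing."""
    return [r for r in records if r[column] is not None]

def impute_mean(records, column):
    """Imputation: replace missing values with the mean of observed ones."""
    observed = [r[column] for r in records if r[column] is not None]
    fill = statistics.mean(observed)
    return [{**r, column: fill if r[column] is None else r[column]} for r in records]

def flag_missing(records, column):
    """Flagging: add a boolean column marking where the value was missing."""
    return [{**r, f"{column}_missing": r[column] is None} for r in records]

dropped_rows = drop_missing(rows, "income")
imputed_rows = impute_mean(rows, "income")
flagged_rows = flag_missing(rows, "income")
print(len(dropped_rows), imputed_rows[1]["income"], flagged_rows[1]["income_missing"])
```

Each helper returns new records and leaves `rows` unchanged, which makes it easy to compare the effect of the different strategies on the same data.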

Step 7: Validate your data

This phase of the data cleaning process validates the values in a dataset by answering the following questions −

- Is the data valuable?

- Is it in the appropriate formats?

- Is it error-free, and does it fulfil organisational needs to provide the desired results?
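Format and error checks like these can be partly automated. The sketch below applies one rule per column and reports every failure; the `RULES` table, the column names, and the sample `customers` records are assumptions chosen for illustration, and real rules would come from the organisation's own requirements.

```python
from datetime import datetime

def is_iso_date(text):
    """Return True if text is a real calendar date in YYYY-MM-DD format."""
    try:
        datetime.strptime(text, "%Y-%m-%d")
        return True
    except (TypeError, ValueError):
        return False

# Assumed validation rules, one per column.
RULES = {
    "customer_id": lambda v: isinstance(v, int) and v > 0,
    "email": lambda v: isinstance(v, str) and "@" in v,
    "signup_date": is_iso_date,
}

def validate(records, rules):
    """Return (row_index, column) pairs for every failed rule."""
    failures = []
    for index, record in enumerate(records):
        for column, rule in rules.items():
            if not rule(record.get(column)):
                failures.append((index, column))
    return failures

# Hypothetical records; the second one breaks all three rules.
customers = [
    {"customer_id": 1, "email": "asha@example.com", "signup_date": "2024-01-05"},
    {"customer_id": -4, "email": "ben.example.com", "signup_date": "2024-02-30"},
]
problems = validate(customers, RULES)
print(problems)
```

Whether the data is valuable for the organisation's purposes remains a judgement call that checks of this kind cannot settle on their own.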

Effective data cleaning is essential to ensuring the validity and reliability of subsequent data analysis or machine learning models.