What is Style Generative Adversarial Networks (StyleGAN)?

Introduction

Artificial intelligence has become an integral part of numerous industries, and the field of computer-generated imagery is no exception. One remarkable innovation in this domain is Style Generative Adversarial Networks (StyleGAN). Pushing the boundaries of what was previously achievable in generating realistic images, StyleGAN opens a world of creativity and possibilities. In this article, we will explore the fascinating concept behind StyleGAN and its impact on computer graphics.

Style Generative Adversarial Networks (StyleGAN)

StyleGAN builds on the generative adversarial network (GAN), which pits two neural networks against each other: a generator and a discriminator. The generator network aims to create synthetic data samples that resemble real data instances within a given dataset. Meanwhile, the discriminator's role is to identify whether an image presented to it belongs to the real dataset or was produced by the generator. This back-and-forth interplay teaches the generator to keep improving its output until it becomes perceptually indistinguishable from authentic examples.
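The adversarial interplay described above can be sketched as two loss functions, one per network. This is a minimal illustration using the common non-saturating logistic formulation, not StyleGAN's exact training code; the logit values are made up, and a positive logit is assumed to mean "looks real".

```python
import math

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def discriminator_loss(real_logits, fake_logits):
    """Penalize low scores on real images and high scores on generated ones."""
    # -log(sigmoid(r)) == softplus(-r); -log(1 - sigmoid(f)) == softplus(f)
    terms = [softplus(-r) for r in real_logits] + [softplus(f) for f in fake_logits]
    return sum(terms) / len(terms)

def generator_loss(fake_logits):
    """Non-saturating loss: the generator is rewarded when fakes score as real."""
    return sum(softplus(-f) for f in fake_logits) / len(fake_logits)

# Hypothetical discriminator outputs (raw logits; positive means "looks real").
confident_d = discriminator_loss(real_logits=[4.0, 3.5], fake_logits=[-4.0, -3.0])
fooled_d = discriminator_loss(real_logits=[0.1, -0.2], fake_logits=[0.3, 0.0])
print(confident_d < fooled_d)  # prints True: separating real from fake lowers the loss
```

The two losses pull in opposite directions on the same fake logits, which is what drives both networks to improve.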

StyleGAN was developed by researchers at NVIDIA in 2018 as an evolution of the traditional GAN architecture. It generates high-quality synthetic images with unprecedented control over specific attributes such as pose, hair color, and facial features. What sets StyleGAN apart from earlier GANs is its ability to blend styles learned from the training set while preserving the fine-grained details unique to each generated image. It does this with adaptive instance normalization (AdaIN) layers, which adjust the mean and standard deviation of the feature maps at different levels of the generator network, a technique borrowed from style transfer.
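To make the adaptive instance normalization step concrete, here is a minimal sketch for a single feature-map channel. It assumes the channel is given as a flat list of activations and that the style supplies one scale and one bias for that channel; the function names and toy values are illustrative only.

```python
import math

def adain(channel, style_scale, style_bias, eps=1e-8):
    """Apply AdaIN to one feature-map channel given as a flat list of activations."""
    mean = sum(channel) / len(channel)
    variance = sum((v - mean) ** 2 for v in channel) / len(channel)
    std = math.sqrt(variance + eps)
    # Normalize away the channel's own statistics, then impose the style's.
    return [style_scale * (v - mean) / std + style_bias for v in channel]

def channel_stats(channel):
    """Return (mean, standard deviation) of a channel."""
    mean = sum(channel) / len(channel)
    std = math.sqrt(sum((v - mean) ** 2 for v in channel) / len(channel))
    return mean, std

activations = [1.0, 2.0, 3.0, 4.0]   # toy values for a single channel
styled = adain(activations, style_scale=2.0, style_bias=3.0)
print(channel_stats(styled))          # approximately (3.0, 2.0)
```

After the operation the channel carries the mean and standard deviation dictated by the style, regardless of what its statistics were before.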

Training Process

During training on large collections of images, each generated image is driven by a randomly sampled latent vector. Over many iterations, StyleGAN gradually learns to disentangle distinct aspects of an image, such as age range or dominant colors. It is then capable of generating realistic output by controlling these learned features.

The Magic of Control

StyleGAN's true power lies in its ability to allow users meaningful control over the synthesized images' characteristics. By manipulating a set of latent variables, one can determine various style parameters such as age, hair length, smile intensity, and even attributes that do not exist organically (like eye color not found in humans). Artists and designers find this feature supremely valuable, enabling them to let their creativity soar with endless possibilities.

Applications and Implications

The versatility of StyleGAN extends far beyond artistry, into fashion design, interior decorating, and the entertainment industry. Fashion brands can use it for virtual garment try-ons or for customization recommendations based on individual preferences. Architects could benefit by exploring different visual representations during the design phase, adjusting architectural styles or materials dynamically through generative models like StyleGAN.

Concerns about authenticity arise as well, since synthetic images generated with StyleGAN can make it difficult to tell real from fake. Ethical considerations and responsible usage should therefore always be at the forefront when employing AI technologies.

Block Diagram

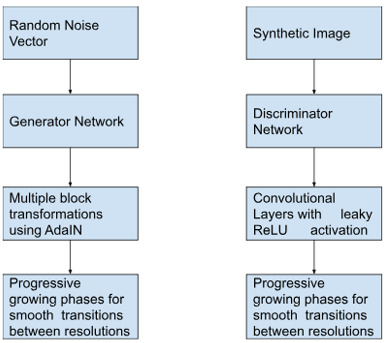

At its core, StyleGAN incorporates two key components: a generator network and a discriminator network.

- Generator Network

  The generator takes random noise vectors as inputs and generates synthetic images from scratch based on learned patterns. However, instead of mapping these vectors directly to entire images as traditional GAN approaches do, StyleGAN passes them through a sequence of blocks that use adaptive instance normalization (AdaIN). Within these blocks, different styles can be manipulated independently at each stage or resolution level.

- Discriminator Network

  The discriminator attempts to distinguish real images from those produced by the generator network. It uses convolutional layers combined with leaky ReLU activation functions, which help it discern intricate details.
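For readers unfamiliar with the leaky ReLU activation mentioned for the discriminator, a one-function sketch follows. The slope of 0.2 for negative inputs is a commonly used value and is an assumption here, not something stated in this article.

```python
def leaky_relu(x, negative_slope=0.2):
    """Pass positive values through; scale negative values instead of zeroing them."""
    return x if x >= 0 else negative_slope * x

def relu(x):
    """Plain ReLU, shown only for contrast."""
    return max(x, 0.0)

for value in (-1.0, 0.0, 2.0):
    print(value, relu(value), leaky_relu(value))
```

Unlike plain ReLU, the leaky variant keeps a small nonzero response for negative inputs, so those units still pass a gradient during training.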

Both networks are trained through a series of progressive growing phases: training starts at a low resolution, and higher-resolution layers are added step by step, with smooth transitions between resolutions. This technique contributes to the impressive results, preserving minute details while avoiding artifacts that might arise when scaling up lower-resolution features.
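The smooth transition between resolutions can be sketched as a weighted blend between an upsampled low-resolution image and the output of the newly added high-resolution layers. This is a simplified illustration on nested lists of single-channel pixels; the function names and the blending weight `alpha` are assumptions made for this example.

```python
def upsample_nearest_2x(image):
    """Double width and height by repeating each pixel (nearest-neighbor)."""
    out = []
    for row in image:
        wide = [pixel for pixel in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def fade_in(low_res, high_res, alpha):
    """Blend the upsampled old output with the new layer's output.

    alpha = 0.0 uses only the old low-resolution path,
    alpha = 1.0 uses only the new high-resolution path.
    """
    upsampled = upsample_nearest_2x(low_res)
    return [
        [(1.0 - alpha) * u + alpha * h for u, h in zip(up_row, high_row)]
        for up_row, high_row in zip(upsampled, high_res)
    ]

low = [[1.0]]                        # toy 1x1 image from the existing layers
high = [[0.0, 0.0], [0.0, 0.0]]      # toy 2x2 image from the newly added layers
print(fade_in(low, high, alpha=0.25))  # [[0.75, 0.75], [0.75, 0.75]]
```

During training, `alpha` would be raised gradually from 0 to 1 so the new layers take over without a sudden jump in the output.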

Real-World Example: Portrait Generation

To better understand how this technology works in practice, let's look at portrait generation with the StyleGAN architecture as a real-world example.

Utilizing facial datasets, StyleGAN can generate lifelike portraits with striking accuracy and variety, showcasing a remarkable level of control over several facial attributes.

For instance, one can add fixed direction vectors to a latent code to manipulate specific traits, such as age progression or gender transformation, while maintaining realistic features.
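Editing a trait with a fixed vector amounts to shifting a latent code along a direction. The sketch below shows only that arithmetic; the four-dimensional latent code and the "age" direction are hypothetical values, and in practice such a direction would have to be discovered from a trained model.

```python
def edit_latent(latent, direction, strength):
    """Move a latent code along a fixed direction; strength sets how far."""
    if len(latent) != len(direction):
        raise ValueError("latent and direction must have the same length")
    return [w + strength * d for w, d in zip(latent, direction)]

latent_code = [0.5, -1.0, 0.0, 2.0]     # hypothetical latent code for one portrait
age_direction = [1.0, 0.0, 0.0, -0.5]   # hypothetical direction tied to apparent age

older = edit_latent(latent_code, age_direction, strength=2.0)
younger = edit_latent(latent_code, age_direction, strength=-2.0)
print(older)    # [2.5, -1.0, 0.0, 1.0]
print(younger)  # [-1.5, -1.0, 0.0, 3.0]
```

Feeding the shifted code to the generator would then yield the same portrait with the trait changed; a negative strength moves the trait the opposite way.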

Moreover, the disentangled structure of StyleGAN enables modification at different levels of detail by independently altering the styles fed to different layers of the generator. This means that users can modify aspects such as hair color or style, or eye shape or color, without affecting other attributes, even down to fine-grained details like freckles or wrinkles.
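One way to picture modification at different levels is style mixing: early (coarse) generator layers take their style from one latent code, and later (fine) layers take theirs from another. The sketch below only builds the per-layer assignment; the count of 18 style inputs and the crossover at layer 4 are illustrative assumptions, and the string labels stand in for real latent vectors.

```python
def mix_styles(style_a, style_b, num_layers, crossover):
    """Assign style_a to layers before the crossover and style_b from it onward."""
    if not 0 <= crossover <= num_layers:
        raise ValueError("crossover must lie between 0 and num_layers")
    return [style_a if layer < crossover else style_b for layer in range(num_layers)]

# Labels stand in for latent vectors of two different portraits.
per_layer = mix_styles("portrait_A", "portrait_B", num_layers=18, crossover=4)
print(per_layer[:6])
# ['portrait_A', 'portrait_A', 'portrait_A', 'portrait_A', 'portrait_B', 'portrait_B']
```

With this assignment, the coarse layers would take large-scale traits such as pose and face shape from portrait A, while the remaining layers take finer traits such as color and texture from portrait B.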

Conclusion

As AI-driven creative applications continue to advance, Style Generative Adversarial Networks are changing how artificial intelligence approaches artistic expression. From raising the standard of computer graphics to unlocking new possibilities across multiple domains and industries, StyleGAN helps turn vivid imagination into reality.