What is DBSCAN Clustering in R Programming?

Introduction

Clustering analysis, a fundamental technique in machine learning and data mining, identifies patterns by grouping similar data points together. Among the various clustering algorithms, Density-Based Spatial Clustering of Applications with Noise (DBSCAN) stands out as a powerful tool that can automatically discover clusters of arbitrary shape. In this article, we will explore the concepts behind DBSCAN and demonstrate its implementation in R through a short code example.

DBSCAN Clustering

DBSCAN is particularly valuable when dealing with datasets that contain noise or irregularly shaped clusters. Unlike traditional clustering techniques such as K-means or hierarchical clustering, DBSCAN builds clusters from density-reachable points: a cluster grows outward from any point that has enough neighbors within a fixed radius, rather than being defined by distances to a centroid or by a number of clusters chosen in advance.

Key Parameters of DBSCAN

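Epsilon (ε): The largest distance between two points for them to be considered neighbors, and therefore candidates for the same cluster.

MinPts: The smallest number of points required within ε distance of a point for it to be classified as a core point.

These two parameters divide the data into three types of points. Core points have at least MinPts points within ε distance.

Border Points: Data points located within the ε radius of a core point that do not have enough neighbors to be core points themselves.

Noise Points: Outliers that are neither core points nor within ε of any core point, so they belong to no cluster.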
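The definitions above can be checked by hand on a very small dataset. The following is a minimal base R sketch, not a full DBSCAN implementation: the toy points, the values `eps = 0.25` and `min_pts = 4`, and the variable names are all made up for illustration. It also assumes the common convention that a point counts as its own neighbor.

```r
# Toy 2-D data (hypothetical): a tight group of five points,
# one point at the edge of the group, and one isolated point
pts <- rbind(
  c(0.00, 0.00), c(0.10, 0.00), c(0.00, 0.10), c(0.10, 0.10), c(0.05, 0.05),
  c(0.30, 0.10),  # close to the group, but with few neighbors
  c(5.00, 5.00)   # far from everything
)
eps <- 0.25      # assumed neighborhood radius
min_pts <- 4     # assumed minimum neighborhood size

# Pairwise Euclidean distances as a full matrix
d <- as.matrix(dist(pts))

# Count neighbors within eps (assumption: each point counts itself)
n_neighbors <- rowSums(d <= eps)

# Core points have at least min_pts neighbors
is_core <- n_neighbors >= min_pts

# Border points are not core but lie within eps of at least one core point
near_core <- apply(d[, is_core, drop = FALSE] <= eps, 1, any)
is_border <- !is_core & near_core

# Everything else is noise
type <- ifelse(is_core, "core", ifelse(is_border, "border", "noise"))
print(data.frame(x = pts[, 1], y = pts[, 2], n_neighbors, type))
```

In this toy data the five grouped points come out as core points, the sixth point as a border point, and the isolated point as noise.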

Advantages of DBSCAN Clustering

Ability to handle noise: Unlike clustering algorithms such as K-means or hierarchical clustering, DBSCAN handles noisy data by labeling isolated points as noise rather than forcing them into a cluster.

Flexibility in cluster shape detection: Traditional methods often assume spherical or convex clusters. DBSCAN, however, can identify clusters with complex, non-convex shapes without prior assumptions about cluster geometry.

Automatic determination of number of clusters: Unlike approaches such as K-means, where the number of clusters must be specified beforehand, DBSCAN does not require prior knowledge of the number of clusters; that number follows from the density structure of the data and the chosen parameters.

Interpretable parameters: DBSCAN has only two parameters (epsilon and MinPts), and both have intuitive interpretations, which makes them easier to reason about than the parameters of many other clustering algorithms.

Scalability with large datasets: When combined with spatial indexing structures such as k-d trees or R-trees, the neighborhood queries at the heart of DBSCAN become efficient, so the algorithm can scale well to large, low-dimensional datasets.
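To make the points about cluster shape, noise, and the number of clusters concrete, here is a minimal base R sketch of the DBSCAN idea applied to two concentric rings plus one outlier. The helper name `simple_dbscan`, the synthetic data, and the parameter values are assumptions made for this illustration. It is a simplified version for small datasets that uses a full distance matrix, not the implementation used by any R package.

```r
# Minimal DBSCAN sketch (hypothetical helper, for illustration only)
simple_dbscan <- function(x, eps, min_pts) {
  d <- as.matrix(dist(x))
  n <- nrow(d)
  # Neighborhood of each point (assumption: includes the point itself)
  neighbors <- lapply(seq_len(n), function(i) which(d[i, ] <= eps))
  is_core <- lengths(neighbors) >= min_pts
  cluster <- integer(n)   # 0 means unassigned; points left at 0 are noise
  current <- 0L
  for (i in seq_len(n)) {
    # Start a new cluster only from an unassigned core point
    if (cluster[i] != 0L || !is_core[i]) next
    current <- current + 1L
    cluster[i] <- current
    queue <- neighbors[[i]]
    while (length(queue) > 0) {
      j <- queue[1]
      queue <- queue[-1]
      if (cluster[j] != 0L) next
      cluster[j] <- current
      # Only core points extend the cluster; border points are absorbed
      if (is_core[j]) queue <- c(queue, neighbors[[j]])
    }
  }
  cluster
}

# Synthetic data: two concentric rings (non-convex clusters) and one outlier
theta <- seq(0, 2 * pi, length.out = 101)[-101]   # 100 evenly spaced angles
inner <- cbind(1 * cos(theta), 1 * sin(theta))
outer <- cbind(3 * cos(theta), 3 * sin(theta))
x <- rbind(inner, outer, c(10, 10))

cl <- simple_dbscan(x, eps = 0.5, min_pts = 4)
print(table(cl))   # label 0 is noise
```

With these settings each ring forms its own cluster and the outlier keeps label 0, even though the number of clusters was never specified.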

Disadvantages of using DBSCAN

Parameter sensitivity: Choosing appropriate values for epsilon (ε) and MinPts is crucial but challenging, since these parameters depend heavily on dataset characteristics such as the underlying density distribution. A value of ε that is too large may merge distinct clusters, while one that is too small may produce many small, insignificant clusters or label most points as noise.

Difficulty with datasets of varying densities: Because a single ε and MinPts apply to the whole dataset, traditional DBSCAN struggles when density varies significantly across regions. Although there are modified versions like Improved DBSCAN that try to address this issue, they may come at the cost of increased computational complexity.

Difficulty in handling high-dimensional data: Like many clustering algorithms, DBSCAN faces challenges when applied to high-dimensional datasets due to the "curse of dimensionality." In such cases, feature selection or dimensionality reduction techniques might be necessary before applying DBSCAN.
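One widely used heuristic for the parameter sensitivity problem is the k-distance plot: compute each point's distance to its k-th nearest neighbor, sort those distances, and look for a "knee" where they start to rise sharply. The sketch below uses base R only on the standardized iris measurements. Setting k to MinPts - 1 is a common convention rather than a rule, and the dashed line at 0.5 is drawn only as a reference value.

```r
# Standardize the four numeric iris columns
iris_std <- scale(iris[, 1:4])

min_pts <- 5
k <- min_pts - 1   # assumption: neighbors are counted excluding the point itself

# Full pairwise Euclidean distance matrix
d <- as.matrix(dist(iris_std))

# For each row, sort the distances; position 1 is the zero self-distance,
# so position k + 1 holds the distance to the k-th nearest neighbor
kth_dist <- apply(d, 1, function(row) sort(row)[k + 1])

# Sorted k-distances: a sharp bend suggests a candidate value for eps
plot(sort(kth_dist), type = "l",
     xlab = "Points sorted by k-NN distance",
     ylab = paste0(k, "-NN distance"),
     main = "k-distance plot for choosing eps")
abline(h = 0.5, lty = 2)   # reference line at eps = 0.5
```

Reading the knee is still a judgment call, so it is worth trying a few values of ε around it and comparing the resulting clusters.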

R programming to implement DBSCAN Clustering

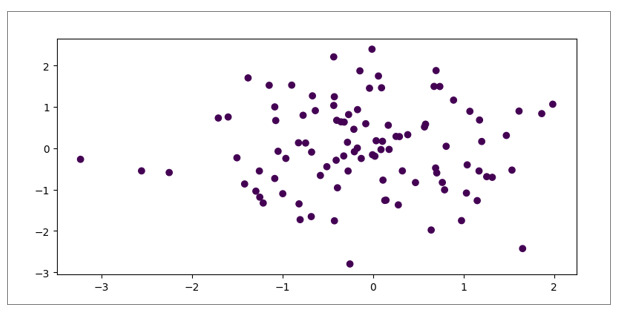

In this example, DBSCAN clustering is based on the Euclidean distance between observations. The code below performs DBSCAN clustering on the iris dataset and then plots the resulting clusters as a scatter plot.

Algorithm

Step 1 :To start with, we need to install and load the fpc package and load the sample dataset.

Step 2 :Before clustering, data preprocessing is necessary. We may need to standardize variables or handle missing values if present.

Step 3 :Calculate the distance (dissimilarity) between observations using a selected metric such as Euclidean distance or Manhattan distance.

Step 4 :Create the DBSCAN clusters. Now that the distance matrix is ready, we can perform DBSCAN clustering using the `dbscan()` function from the fpc package.

Step 5 :The resulting object "db_cl" stores the cluster assignments required for the subsequent steps.

Step 6 :Plot a scatter plot to visualize the clusters in R.

Example

# Install the fpc package if it is not already installed

# install.packages("fpc")

# Load the fpc package

library(fpc)

# Load the iris dataset

data(iris)

# Standardize the four numeric columns (drop the Species column)

iris_std <- scale(iris[-5])

# Calculate the distance matrix using Euclidean distance

distance_mat <- dist(iris_std, method = "euclidean")

# Create DBSCAN clusters; method = "dist" tells dbscan() that the input is a distance matrix

db_cl <- dbscan(distance_mat, eps = 0.5, MinPts = 5, method = "dist")

# Plot the resulting clusters on the standardized data

plot(db_cl, iris_std, main = "DBSCAN clusters on the iris dataset")
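Beyond the plot, the cluster assignments can be inspected directly. The sketch below assumes the fpc package is installed and repeats the clustering so that it runs on its own. It relies on the `cluster` component of the object returned by `dbscan()`, in which label 0 marks noise points.

```r
# Assumes the fpc package is installed
library(fpc)

# Repeat the clustering from the example so this block is self-contained
iris_std <- scale(iris[-5])
db_cl <- dbscan(dist(iris_std), eps = 0.5, MinPts = 5, method = "dist")

# Number of points per cluster (label 0 = noise)
print(table(db_cl$cluster))

# Compare the clusters with the known species labels
print(table(cluster = db_cl$cluster, species = iris$Species))
```

The cross-tabulation shows how closely the density-based clusters follow the three species, and how many observations were set aside as noise.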

Output

Conclusion

DBSCAN offers an excellent alternative for uncovering hidden patterns in complex datasets without specifying the number of clusters in advance or assuming specific shapes for clusters. With its ability to identify noise and outliers automatically, DBSCAN proves valuable across various domains, provided that ε and MinPts are chosen to suit the density of the data.