The Optimal Number of Epochs to Train a Neural Network in Keras

Introduction

Training a neural network involves finding the right balance between underfitting and overfitting. In this article, we'll learn the concept of an epoch and look at how to decide the number of epochs when training with Keras, a well-known deep-learning library. By understanding the trade-off between underfitting and overfitting, using methods such as early stopping and cross-validation, and examining learning curves, we can decide on a suitable number of epochs.

Understanding Epochs

An epoch refers to one complete pass of the entire training dataset through a neural network. During each epoch, the network learns from the training data and updates its internal parameters, such as weights and biases, to improve its performance. The purpose of training a neural network is to minimize the loss function, which measures the discrepancy between the network's predictions and the actual targets.
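As a rough illustration of what one epoch means in practice, the sketch below counts the weight updates performed in one epoch and over a full run. It assumes mini-batch training in which the final batch may be smaller than the others. The function name `updates_per_epoch` and the example sizes are hypothetical and chosen only for illustration.

```python
import math

def updates_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of mini-batches (and hence weight updates) in one epoch."""
    if num_samples <= 0 or batch_size <= 0:
        raise ValueError("num_samples and batch_size must be positive")
    # The last batch may be partial, so round up.
    return math.ceil(num_samples / batch_size)

steps = updates_per_epoch(1000, 32)  # 1000 samples, batches of 32
total = steps * 100                  # updates over 100 epochs
print(steps, total)
```

Seen this way, choosing the number of epochs is choosing how many times the optimizer revisits the same data, which is why too few passes can underfit and too many can overfit.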

The Trade-Off: Underfitting and Overfitting

Before diving into the optimal number of epochs, let's understand the trade-off between underfitting and overfitting.

Underfitting: This happens when the model is unable to capture the underlying patterns in the training data. It results in high training error and poor generalization to unseen data. Underfitting can happen when the model is too simple or when it hasn't been trained for enough epochs.

Overfitting: On the other hand, overfitting happens when the model becomes too specialized to the training data and fails to generalize well to new data. Overfitting is characterized by low training error but high validation error. It happens when the model is too complex or when it has been trained for too many epochs.

Implementation Code for Optimal Epochs

Algorithm

Step 1: Import the essential libraries, i.e. tensorflow and keras.

Step 2: Define the input data and the neural network model.

Step 3: Compile the model with a suitable loss function, optimizer, and metrics.

Step 4: Set up the early stopping criterion using a callback that monitors the validation loss.

Step 5: Train the model with the early stopping callback so that training stops when the validation loss no longer improves.

Step 6: Retrieve the optimal number of epochs from the point where training was stopped early, which represents the model's best point.
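The stopping rule behind steps 4 to 6 can be sketched in plain Python, independent of Keras. This is a simplified illustration of a patience rule, not the Keras implementation: the function name `early_stopping_epoch` and the validation losses are hypothetical, and any improvement, however small, is assumed to reset the patience counter.

```python
def early_stopping_epoch(val_losses, patience=5):
    """Return (best_epoch, stopped_epoch), both 1-indexed.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    # Ran out of epochs without triggering the stopping rule.
    return best_epoch, len(val_losses)

# Hypothetical validation losses: improve until epoch 4, then worsen.
losses = [0.70, 0.62, 0.58, 0.55, 0.56, 0.57, 0.59, 0.60, 0.63]
best, stopped = early_stopping_epoch(losses, patience=3)
print(best, stopped)
```

Note that the epoch at which training stops and the epoch with the best validation loss are different: the first trails the second by the patience value, so the best epoch is the one worth reporting as the optimal number of epochs.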

Example

import numpy as np

import tensorflow as tf

from keras.models import Sequential

from keras.layers import Dense

from keras.callbacks import EarlyStopping

X_train = np.random.random((1000, 10))

y_train = np.random.randint(2, size=(1000,))

X_val = np.random.random((200, 10))

y_val = np.random.randint(2, size=(200,))

num_classes = 2

model = Sequential()

model.add(Dense(64, activation='relu', input_shape=(10,)))

model.add(Dense(num_classes, activation='softmax'))

model.compile(loss='sparse_categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

early_stopping = EarlyStopping(monitor='val_loss', patience=5, verbose=1, restore_best_weights=True)

history = model.fit(X_train, y_train, validation_data=(X_val, y_val), epochs=100, batch_size=32, callbacks=[early_stopping])

optimal_epochs = int(np.argmin(history.history['val_loss'])) + 1  # 1-indexed epoch with the lowest validation loss

print("Optimal number of epochs:", optimal_epochs)

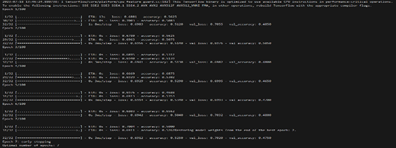

Output

Learning Curves

Learning curves are plots that show the model's performance on both the training and validation data as a function of the number of epochs. They give a visual representation of how the model is learning over time and can be valuable in deciding the optimal number of epochs.

Underfitting: In the case of underfitting, the learning curves converge slowly, and both the training and validation errors remain high even with more epochs. This indicates that the model needs more capacity or more training data to capture the underlying patterns.

Overfitting: When the model begins to overfit, the learning curves diverge, with the training error decreasing while the validation error increases. This happens when the model has memorized the training data too closely, leading to poor generalization.

Optimal Point: The optimal number of epochs lies at the point where the learning curves stabilize and the validation error is at its lowest. At this point, the model has learned the underlying patterns in the data without overfitting.
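To make the reading of learning curves concrete, here is a minimal sketch that locates the lowest point of the validation curve and measures the gap between the two curves at each epoch. The helper `summarize_curves` and the loss values are hypothetical. In a Keras run the two lists would typically come from `history.history['loss']` and `history.history['val_loss']`.

```python
def summarize_curves(train_losses, val_losses):
    """Return the 1-indexed epoch with the lowest validation loss and the
    per-epoch gap (validation loss minus training loss)."""
    if len(train_losses) != len(val_losses) or not val_losses:
        raise ValueError("curves must be non-empty and of equal length")
    best_epoch = min(range(len(val_losses)), key=val_losses.__getitem__) + 1
    gaps = [round(v - t, 4) for t, v in zip(train_losses, val_losses)]
    return best_epoch, gaps

# Hypothetical curves: training loss keeps falling, validation loss turns up.
train = [0.90, 0.70, 0.55, 0.45, 0.38, 0.32]
val = [0.92, 0.75, 0.64, 0.60, 0.63, 0.68]
best_epoch, gaps = summarize_curves(train, val)
print(best_epoch)
print(gaps)
```

A gap that keeps widening after the best epoch is the numerical counterpart of the diverging curves described above, while two curves that stay close together at a high loss point to underfitting.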

Conclusion

In conclusion, deciding the optimal number of epochs for training a neural network in Keras is a critical step in achieving good model performance. By balancing the trade-off between underfitting and overfitting, using methods such as early stopping and learning curves, and considering cross-validation, you can find a suitable number of epochs for your problem. Keep in mind that hyperparameter tuning and empirical experimentation are essential in optimizing the performance of your neural network.