Pre-trained Word Embedding Using GloVe in NLP Models

The field of Natural Language Processing (NLP) has made remarkable progress in comprehending and processing human language, leading to applications such as machine translation, sentiment analysis, and text classification. Many of these applications rely on word embeddings such as GloVe. One crucial aspect of NLP is representing words in a form that computers can work with, namely numerical vectors.

Pre-trained word embeddings have emerged as a powerful solution for capturing the meaning and relationships between words. In this article, we investigate the utilization of pre-trained word embeddings from GloVe (Global Vectors for Word Representation) and explore their application in NLP models. We will highlight how they enhance language comprehension and improve the performance of diverse NLP tasks.

What is word embedding?

Word embedding is the process of converting words into numerical vectors that capture information about their meaning and the contexts in which they appear. Because words are mapped to a continuous vector space, words with similar usage end up with similar vectors, which lets NLP models measure the similarities and relationships between words.
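To make "similar words have similar vectors" concrete, here is a minimal sketch that compares vectors with cosine similarity. The three-dimensional vectors below are invented for illustration and are not taken from any GloVe file; the names `toy_vectors` and `cosine_similarity` are hypothetical helpers.

```python
import math

# Hypothetical 3-dimensional vectors, invented for illustration only.
# Real GloVe vectors typically have 50 to 300 dimensions.
toy_vectors = {
    "king":  [0.80, 0.65, 0.10],
    "queen": [0.75, 0.70, 0.15],
    "apple": [0.10, 0.20, 0.90],
}

def cosine_similarity(u, v):
    """Cosine of the angle between u and v (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

sim_royal = cosine_similarity(toy_vectors["king"], toy_vectors["queen"])
sim_fruit = cosine_similarity(toy_vectors["king"], toy_vectors["apple"])
print(f"king vs queen: {sim_royal:.3f}")
print(f"king vs apple: {sim_fruit:.3f}")
```

With these made-up values, the "king" and "queen" vectors point in nearly the same direction, so their similarity is higher than that of "king" and "apple". The same comparison can be applied to real pre-trained vectors.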

What is GloVe?

GloVe, developed at Stanford University, stands for Global Vectors for Word Representation. It is an unsupervised learning algorithm that constructs word vectors from the global word co-occurrence statistics of a large text corpus, and the Stanford project also distributes vectors pre-trained on several such corpora. Because GloVe captures the statistical patterns of word usage and distribution, its embeddings represent the semantic relationships between words effectively.
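The "global word co-occurrence statistics" mentioned above can be pictured as a table of how often each word appears near each other word. The sketch below counts such pairs on a tiny invented corpus. It is a simplification: the function name `cooccurrence_counts` and the window size are assumptions for illustration, and the reference GloVe implementation additionally down-weights pairs that are farther apart.

```python
from collections import Counter

# Tiny invented corpus; real GloVe training uses billions of tokens.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
]

def cooccurrence_counts(sentences, window=2):
    """Count how often each (word, context) pair occurs within `window` tokens."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:  # a word is not its own context
                    counts[(word, tokens[j])] += 1
    return counts

counts = cooccurrence_counts(corpus)
print(counts[("sat", "on")])   # pair seen once in each sentence
print(counts[("cat", "dog")])  # never in the same window
```

GloVe then learns vectors whose dot products approximate the logarithm of counts like these, which is how corpus-wide statistics end up encoded in the embeddings.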

Use of Pre-trained Word Embedding Using GloVe in NLP Models

The use of pre-trained word embeddings from GloVe has brought numerous benefits to NLP models. First and foremost, these embeddings remove the need to train word representations from scratch. Training word embeddings on large corpora can be computationally expensive and time-consuming. By using pre-trained embeddings, researchers and practitioners can reuse the word statistics that GloVe has already learned from very large text collections, saving valuable time and computational resources.

Furthermore, pre-trained word embeddings like GloVe improve the generalization capabilities of NLP models. The semantic relationships captured by GloVe embeddings allow models to recognize similarities between words and transfer knowledge from one task to another. This transfer learning aspect is particularly useful when working with limited training data or when faced with domain-specific language.

How to Perform Pre-trained Word Embedding Using GloVe in NLP Models?

By following the steps given below, we can effectively utilize pre-trained GloVe word embeddings in our NLP models, enhancing language understanding, and improving performance across various NLP tasks.

Obtain the GloVe Pre-trained Word Embeddings: Start by downloading the pre-trained GloVe word embeddings from the official website or other reliable sources. These embeddings come in different dimensions and are trained on large text corpora.

Load the GloVe Embeddings: Load the downloaded GloVe embeddings into your NLP model. This can be done by reading the embeddings file, which usually contains word-to-vector mappings, into a data structure that allows efficient access.

Tokenize and Preprocess Text Data: Tokenize your text data by breaking it into individual words or subwords. Remove any irrelevant characters, punctuation, or special symbols that may interfere with the word-matching process. Additionally, consider lowercasing the words to ensure consistency.

Map Words to GloVe Embeddings: Iterate through each tokenized word and check if it exists in the loaded GloVe embeddings. If a word is present, retrieve its corresponding pre-trained vector. If a word is not found, you can assign a random vector or a vector based on similar words present in the embeddings.

Integrate Embeddings into NLP Model: Incorporate the GloVe embeddings into your NLP model. This can be done by initializing an embedding layer with the pre-trained vectors or by concatenating them with other input features. Ensure that the dimensions of the embeddings align with the model's requirements.

Fine-tune the NLP Model: Once the GloVe embeddings are integrated, fine-tune your NLP model using specific training data and target tasks. This step allows the model to adapt and optimize its performance based on the given objectives.

Evaluate and Iterate: Evaluate the performance of your NLP model using appropriate metrics and test datasets. If necessary, iterate and make adjustments to the model architecture or training process to achieve desired results.
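The loading, tokenizing, and mapping steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a definitive implementation: `FAKE_GLOVE_FILE` is an in-memory stand-in with invented values that only mimics the usual layout of a GloVe text file (a word followed by its space-separated vector components), and `load_glove`, `tokenize`, and `build_embedding_matrix` are hypothetical helper names.

```python
import io
import random
import re

# Hypothetical stand-in for a GloVe text file: one word per line,
# followed by its space-separated vector components. Values are invented.
FAKE_GLOVE_FILE = io.StringIO(
    "the 0.1 0.2 0.3\n"
    "cat 0.4 0.5 0.6\n"
    "sat 0.7 0.8 0.9\n"
)

def load_glove(handle):
    """Read word-to-vector mappings into a dict for fast lookup."""
    vectors = {}
    for line in handle:
        parts = line.rstrip().split(" ")
        vectors[parts[0]] = [float(x) for x in parts[1:]]
    return vectors

def tokenize(text):
    """Lowercase the text and keep only alphabetic runs (a deliberately simple rule)."""
    return re.findall(r"[a-z]+", text.lower())

def build_embedding_matrix(vocab, vectors, dim, seed=0):
    """Return one row per vocabulary word; OOV words get a small random vector."""
    rng = random.Random(seed)
    matrix = []
    oov = []
    for word in vocab:
        if word in vectors:
            matrix.append(vectors[word])
        else:
            oov.append(word)
            matrix.append([rng.uniform(-0.05, 0.05) for _ in range(dim)])
    return matrix, oov

glove = load_glove(FAKE_GLOVE_FILE)
dim = len(next(iter(glove.values())))
tokens = tokenize("The cat sat, quietly!")
vocab = sorted(set(tokens))  # row i of the matrix belongs to vocab[i]
embedding_matrix, oov_words = build_embedding_matrix(vocab, glove, dim)

print(tokens)
print(oov_words)
print(len(embedding_matrix), "x", dim)
```

In a deep learning framework, a matrix like this would typically be used to initialize the weights of the model's embedding layer, with row `i` holding the vector for `vocab[i]`. Random initialization is only one of the out-of-vocabulary options mentioned above.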

Example

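The following program shows how to load pre-trained GloVe embeddings with gensim and map a small set of words to their vectors. It assumes gensim 4.0 or later, which provides the no_header argument and the key_to_index attribute used here.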

```python
import numpy as np
from gensim.models import KeyedVectors

# Step 1: Load GloVe Pre-trained Word Embeddings
glove_path = 'C:/Users/Tutorialspoint/glove.6B.100d.txt'  # Update the path to your GloVe file
word_embeddings = KeyedVectors.load_word2vec_format(glove_path, binary=False, no_header=True)

# Step 2: Define Sample Dictionary
sample_dictionary = {
    'apple': None,
    'banana': None,
    'carrot': None
}

# Step 3: Map Words to GloVe Embeddings
def get_word_embedding(word):
    if word in word_embeddings.key_to_index:
        return word_embeddings[word]
    else:
        # Handle out-of-vocabulary (OOV) words
        return np.zeros(word_embeddings.vector_size)  # Return zero vector for OOV words

def map_dictionary_to_embeddings(dictionary):
    embeddings = {}
    for word in dictionary:
        embedding = get_word_embedding(word)
        embeddings[word] = embedding
    return embeddings

# Step 4: Print Mapped Embeddings
embeddings = map_dictionary_to_embeddings(sample_dictionary)
for word, embedding in embeddings.items():
    print(f'Word: {word}, Embedding: {embedding}')
```

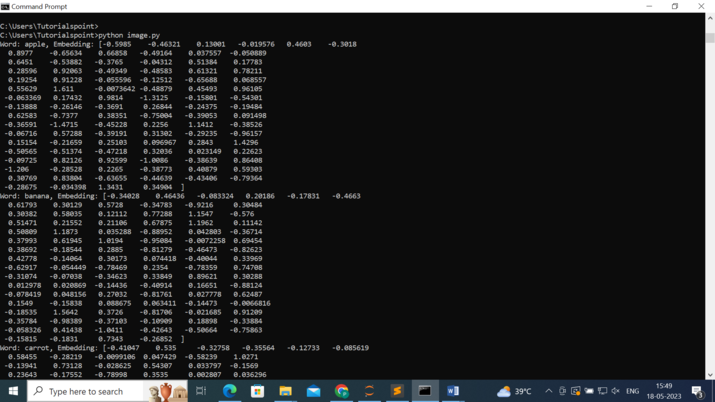

Output

Conclusion

In conclusion, pre-trained word embeddings using GloVe have proven to be a valuable asset in NLP models. By capturing semantic relationships between words, these embeddings enhance language understanding and improve the performance of various NLP tasks. The ability to transform words into numerical vectors enables computational analysis of text data.

Because they are pre-trained on large text corpora, GloVe embeddings give NLP models information about word usage that would be costly to learn from scratch. This makes them a practical starting point for a wide range of natural language processing applications, especially when task-specific training data is limited.