Parallelism in Query in DBMS

Introduction

Are you struggling with slow query execution in your Database Management System (DBMS)? Parallelism can significantly improve the speed and performance of your queries. In this article, we'll unravel the concept of parallel query execution, its two types (intraquery and interquery parallelism), their benefits, and how they can be implemented to optimize your DBMS operations.

Intrigued? Let's dive into the world of efficient data processing!

Types of Parallelism in Query Execution in DBMS

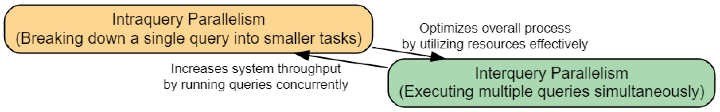

There are two types of parallelism in query execution in DBMS: intraquery parallelism and interquery parallelism.

Intraquery Parallelism

Intraquery parallelism in Database Management Systems (DBMS) is an efficient and powerful technique that breaks down a single query into multiple smaller tasks, which are then executed simultaneously.

This method significantly boosts the speed of query execution by distributing processing power across different CPUs or workers within a database system.

Let's consider a simple example: sorting a very large table on one of its columns. Instead of executing the sort as one big task, which could be time-consuming, intraquery parallelism divides it into smaller sub-tasks that run concurrently.

The results from these individual operations are then combined to produce the final output, effectively speeding up the overall process while utilizing resources optimally.
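The sorting example above can be sketched in a few lines of Python. This is only an illustration of the split, sort, and merge pattern, not how any particular DBMS implements it: the `parallel_sort` function, the `orders` table, and the worker count are all hypothetical, and threads stand in for database workers (in CPython they show the structure rather than a real CPU speedup).

```python
from concurrent.futures import ThreadPoolExecutor
import heapq

def parallel_sort(rows, key, workers=4):
    """Sort rows by sorting chunks concurrently, then merging the results."""
    if not rows:
        return []
    chunk_size = -(-len(rows) // workers)  # ceiling division
    chunks = [rows[i:i + chunk_size] for i in range(0, len(rows), chunk_size)]
    # Sub-tasks: each worker sorts one chunk independently of the others.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        sorted_chunks = list(pool.map(lambda chunk: sorted(chunk, key=key), chunks))
    # Combine step: merge the already-sorted chunks into the final output.
    return list(heapq.merge(*sorted_chunks, key=key))

# Hypothetical table of 1000 rows with a pseudo-random "amount" column.
orders = [{"id": i, "amount": (i * 37) % 101} for i in range(1000)]
result = parallel_sort(orders, key=lambda row: row["amount"])
```

The merge step is the "combine" phase described above: it is cheap because every chunk arrives already sorted.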

Interquery Parallelism

Interquery parallelism is a type of parallelism in query execution in DBMS that focuses on executing multiple queries simultaneously. A query does not have to wait for the previous one to complete before it starts.

The main goal of interquery parallelism is to increase overall system throughput. An individual query does not run faster, but it spends less time waiting behind other queries, so the response time seen by users improves.

By allowing multiple queries to run concurrently, interquery parallelism makes more efficient use of system resources. This leads to faster query execution and improved performance. For example, if one query is performing a long-running operation, such as a complex join, other queries can still be processed independently without being blocked.

Implementing interquery parallelism involves scheduling independent queries onto different processors, or onto different nodes in a distributed database environment, so that they run at the same time.

Parallel database systems are designed with this capability in mind and employ sophisticated algorithms for distributing workloads effectively.
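As a rough sketch of interquery parallelism, the snippet below submits three independent "queries" to a pool of workers so that none of them waits for another to finish. The `EMPLOYEES` table, the three query functions, and `run_queries_concurrently` are made-up names for illustration; a real DBMS would use its own scheduler, and threads here only stand in for its workers.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical in-memory table standing in for a database relation.
EMPLOYEES = [
    {"name": "Ana", "dept": "sales", "salary": 52000},
    {"name": "Ben", "dept": "sales", "salary": 48000},
    {"name": "Chen", "dept": "engineering", "salary": 75000},
    {"name": "Dee", "dept": "engineering", "salary": 81000},
    {"name": "Eli", "dept": "support", "salary": 39000},
]

def total_payroll(table):
    return sum(row["salary"] for row in table)

def headcount_by_dept(table):
    counts = {}
    for row in table:
        counts[row["dept"]] = counts.get(row["dept"], 0) + 1
    return counts

def highest_paid(table):
    return max(table, key=lambda row: row["salary"])["name"]

def run_queries_concurrently(queries, table):
    """Start every query at once; each one runs as a whole on its own worker."""
    with ThreadPoolExecutor(max_workers=len(queries)) as pool:
        futures = {name: pool.submit(query, table) for name, query in queries.items()}
        return {name: future.result() for name, future in futures.items()}

results = run_queries_concurrently(
    {"payroll": total_payroll, "headcount": headcount_by_dept, "top": highest_paid},
    EMPLOYEES,
)
```

Note that each query is executed as a single unit. Nothing inside a query is split up, which is what distinguishes this from intraquery parallelism.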

Benefits and Importance

Parallelism in query execution offers several advantages and is crucial for efficient database management systems. It leads to increased throughput and performance, allows for the optimal utilization of resources, and significantly reduces query execution times.

Increased Throughput and Performance

Parallelism in query execution plays a vital role in enhancing the throughput and performance of database management systems (DBMS). By dividing complex queries into smaller tasks that can be executed simultaneously, parallelism allows multiple processors to work on different parts of the query at the same time.

This results in faster execution times and improved overall system efficiency. With increased throughput, DBMS can handle larger workloads and process more queries concurrently, allowing for better scalability and responsiveness.

Additionally, parallelism ensures efficient utilization of resources by distributing the workload evenly across processors, maximizing their potential and reducing unnecessary idle time. Both novice users and experienced professionals can benefit from understanding how parallelism boosts throughput and performance in DBMS operations.

Efficient Utilization of Resources

Efficient utilization of resources is one of the key benefits of parallelism in query execution in DBMS. By dividing a query into smaller tasks that can be executed simultaneously, parallel processing allows for optimal use of available system resources such as CPU, memory, and disk I/O.

This means that multiple queries can be processed concurrently, reducing the overall time required to execute complex queries and increasing system throughput. Parallelism also enables better load balancing across different nodes or processors in a distributed database environment, ensuring that resources are evenly utilized and avoiding bottlenecks.

Overall, efficient resource utilization through parallelism enhances the performance and scalability of database systems, making them capable of handling large-scale data processing tasks more effectively.

Faster Query Execution

Parallelism in query execution plays a crucial role in achieving faster query execution in a database management system (DBMS). By dividing the workload of a single query into multiple smaller tasks that can be processed simultaneously, parallelism enables queries to be executed much more quickly.

This is especially beneficial for complex and resource-intensive queries that would otherwise take a long time to complete. Through parallel processing, the DBMS can distribute the workload across multiple processors or nodes, allowing for efficient utilization of resources and significantly reducing the overall response time of queries.

With faster query execution, users can obtain results more rapidly and make better-informed decisions based on up-to-date information.

Implementing parallelism in DBMS involves utilizing different techniques such as shared disk architecture, shared-memory architecture, and shared-nothing architecture. These architectures allow for concurrent processing of multiple queries by leveraging interquery parallelism and intraquery parallelism.

Interquery parallelism focuses on executing multiple independent queries simultaneously, while intraquery parallelism divides a single query into smaller parts that can be executed concurrently.

Implementation and Techniques

To achieve parallelism in DBMS, various implementation techniques can be used such as shared disk architecture, shared-memory architecture, and shared-nothing architecture.

Shared Disk Architecture

Shared disk architecture is a key implementation technique for achieving parallelism in query execution in database management systems (DBMS). In this architecture, multiple processors or nodes share a common disk storage system.

Each processor has its own private cache memory and can independently access the shared disk to process queries.

With shared disk architecture, parallelism is achieved through interquery parallelism, where multiple queries are executed simultaneously by different processors. This allows for faster query execution and improved performance as the workload is distributed among multiple processors.
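A toy model can make the shared-disk idea more concrete. In the sketch below, a temporary file plays the role of the common disk, and each `Processor` object keeps a private cache of the pages it has read. The page size, the file layout, and all class and function names are assumptions made for this example only.

```python
import os
import tempfile

PAGE_SIZE = 16  # bytes per page (arbitrary value chosen for the example)

def make_shared_disk(num_pages):
    """Create a file that stands in for the common disk storage system."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as disk:
        for n in range(num_pages):
            disk.write(f"page-{n:02d}".encode().ljust(PAGE_SIZE, b"."))
    return path

class Processor:
    """A processor with a private cache that reads pages from the shared disk."""
    def __init__(self, disk_path):
        self.disk_path = disk_path
        self.cache = {}       # private: not visible to other processors
        self.disk_reads = 0

    def read_page(self, page_no):
        if page_no not in self.cache:
            with open(self.disk_path, "rb") as disk:
                disk.seek(page_no * PAGE_SIZE)
                self.cache[page_no] = disk.read(PAGE_SIZE)
            self.disk_reads += 1
        return self.cache[page_no]

disk_path = make_shared_disk(num_pages=4)
p1, p2 = Processor(disk_path), Processor(disk_path)
same_data = p1.read_page(2) == p2.read_page(2)  # both see the same disk contents
p1.read_page(2)                                 # served from p1's private cache
os.remove(disk_path)
```

Both processors read identical data because there is only one copy on disk, while the second read by `p1` never touches the disk. This sketch leaves out writes, which in a real shared-disk system require coordination to keep the private caches consistent.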

Shared-memory Architecture

Shared-memory architecture is a type of implementation for achieving parallelism in database management systems (DBMS). In this architecture, multiple processors or threads can access the same physical memory simultaneously.

It allows for efficient data sharing and communication between different processing units, enabling them to work on different parts of a query concurrently.

In a shared-memory architecture, each processor or thread has its own cache memory, which stores frequently accessed data. This reduces the need for accessing the main memory, improving overall performance.

Additionally, it eliminates the need for complex data transfer operations between processors, simplifying the implementation and reducing latency.

By utilizing shared-memory architecture in DBMS, database queries can be executed in parallel more effectively. This leads to faster query execution times and improved throughput. It also enables better utilization of system resources by distributing workload among multiple processing units efficiently.
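The following sketch illustrates the shared-memory idea with Python threads, which all see the same list in memory. Each thread aggregates a different range of the data, and a lock protects the shared running total. The `parallel_sum` function and the worker count are hypothetical, and CPython threads show the pattern rather than a true multi-processor speedup.

```python
import threading

def parallel_sum(values, workers=4):
    """Sum a list using threads that read the same in-memory data directly."""
    total = 0
    lock = threading.Lock()

    def worker(start, stop):
        nonlocal total
        # No data is copied or sent between workers: each reads its own range.
        partial = sum(values[i] for i in range(start, stop))
        with lock:  # the shared total must be updated by one thread at a time
            total += partial

    step = max(1, -(-len(values) // workers))  # ceiling division, at least 1
    threads = [
        threading.Thread(target=worker, args=(start, min(start + step, len(values))))
        for start in range(0, len(values), step)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return total

salaries = list(range(1, 101))
total_salary = parallel_sum(salaries)
```

The lock is the price of sharing: data exchange is trivial, but concurrent updates to the same memory location have to be synchronized.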

Shared-nothing Architecture

The shared-nothing architecture is a parallel database design that allows for efficient and scalable query execution in DBMS. In this architecture, each node or processor has exclusive control over its own memory and disk storage and shares neither with the other nodes in the network. This independence is what makes high-performance parallel processing possible. Data is partitioned across the interconnected nodes, and each node is responsible for managing its own portion of the overall dataset.

Hence, during query execution, a query can be split into smaller subqueries that are routed to different nodes and processed concurrently.

Each node independently processes its assigned subquery using its own local data and returns results to be combined at the end.

This architecture offers several benefits. Firstly, it enables efficient utilization of resources as there is no need to replicate data across multiple nodes. Secondly, it allows for faster query execution as subqueries can be processed simultaneously by different nodes.

Lastly, it supports scalability, because more nodes can be added to handle increased workloads.
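The partition, process locally, and combine flow described in this section can be sketched as follows. Everything here is an assumption made for illustration: the `Node` class, the modulo partitioning on `order_id`, the sample `orders` data, and the use of threads to stand in for separate machines.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

class Node:
    """One shared-nothing node: it owns its rows and shares them with no one."""
    def __init__(self):
        self.rows = []

    def local_totals(self, min_amount):
        """Subquery: total amount per region, using only this node's local data."""
        totals = Counter()
        for row in self.rows:
            if row["amount"] >= min_amount:
                totals[row["region"]] += row["amount"]
        return totals

def partition(rows, nodes):
    """Assign each row to exactly one node (simple modulo partitioning)."""
    for row in rows:
        nodes[row["order_id"] % len(nodes)].rows.append(row)

def total_by_region(nodes, min_amount):
    """Send the subquery to every node at once, then combine partial results."""
    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        partials = list(pool.map(lambda node: node.local_totals(min_amount), nodes))
    combined = Counter()
    for partial in partials:
        combined.update(partial)  # adds the per-region sums together
    return dict(combined)

REGIONS = ["north", "south", "east"]
orders = [
    {"order_id": i, "region": REGIONS[i % 3], "amount": (i * 13) % 50}
    for i in range(300)
]
nodes = [Node() for _ in range(4)]
partition(orders, nodes)
totals = total_by_region(nodes, min_amount=10)
```

Adding capacity in this model means appending another `Node` and repartitioning the data, which mirrors the scalability benefit mentioned above.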

Conclusion

Parallelism plays a crucial role in query execution in DBMS. With its ability to increase throughput and performance, efficiently utilize resources, and expedite query execution, parallelism enhances the overall efficiency of database operations.

By implementing techniques such as shared disk architecture, shared-memory architecture, and shared-nothing architecture, organizations can harness the power of parallelism to optimize their database systems and achieve faster query processing.

The benefits of parallelism extend beyond just speed; they also provide improved concurrency control and enable effective distributed query processing. As technology continues to evolve, embracing parallelism will be essential for businesses looking to unlock the full potential of their data management systems.