What is shared disk architecture in parallel databases?

In a parallel database system, data processing performance is improved by using multiple resources in parallel. Multiple CPUs and disks work in parallel to enhance processing performance.

Operations such as data loading and query processing are performed in parallel. Centralized and client-server database systems are not powerful enough to handle applications that need this kind of fast processing.

Parallel database systems offer significant advantages for online transaction processing and decision support applications. Parallel processing divides a large task into multiple smaller tasks, and these tasks are executed concurrently on several nodes. This allows the large task to complete more quickly.
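The idea of dividing a large task into smaller tasks that run concurrently can be sketched as follows. This is only an illustration, assuming a simple sum over rows as the "query"; the function names `partition` and `parallel_sum` are hypothetical, and threads stand in for the nodes of a parallel database.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(rows, n_parts):
    """Split rows into n_parts nearly equal contiguous chunks."""
    size, extra = divmod(len(rows), n_parts)
    chunks, start = [], 0
    for i in range(n_parts):
        # The first `extra` chunks receive one additional row.
        end = start + size + (1 if i < extra else 0)
        chunks.append(rows[start:end])
        start = end
    return chunks


def parallel_sum(rows, n_workers=4):
    """Sum each partition on its own worker, then combine the partial sums."""
    chunks = partition(rows, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(sum, chunks))
    return sum(partials)


total = parallel_sum(list(range(1, 101)))
```

Each worker handles only its own partition, and the partial results are merged at the end. A real parallel DBMS applies the same split, process, and combine pattern across separate CPUs and disks.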

Architectural Models for Parallel DBMS

There are several architectural models for parallel machines, which are given below −

- Shared memory architecture.

- Shared disk architecture.

- Shared nothing architecture.

- Hierarchical architecture.

Shared Disk Architecture

Let us discuss shared disk architecture.

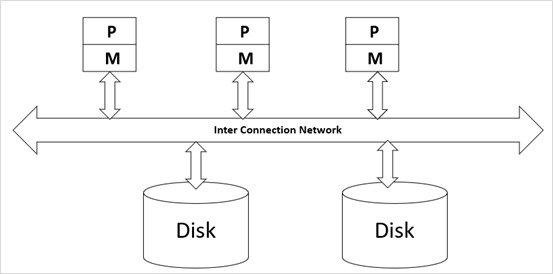

Shared disk architecture − In this architecture, each node has its own main memory, but all nodes share mass storage. In practice, each node also has multiple processors. All processors can access all disks directly via an interconnection network, and each processor has its own private memory.

Examples − IMS/VS data sharing.

In the diagram of the shared disk architecture above −

P is the processor.

M is the main memory.
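The relationship between private memory and shared storage can be modelled with a small sketch. The classes `SharedDisk` and `Node` are hypothetical names used only for illustration. The sketch deliberately leaves out the locking and cache-coherence machinery that a real shared disk system needs when several nodes update the same page.

```python
class SharedDisk:
    """Mass storage that every node can reach over the interconnect."""

    def __init__(self):
        self.pages = {}
        self.reads = 0  # counts how often the shared disk is read

    def read(self, page_id):
        self.reads += 1
        return self.pages[page_id]

    def write(self, page_id, data):
        self.pages[page_id] = data


class Node:
    """A node with its own private main memory (M) and a handle to the shared disk."""

    def __init__(self, name, disk):
        self.name = name
        self.disk = disk   # shared by all nodes
        self.memory = {}   # private to this node

    def read(self, page_id):
        # Load the page into private memory on first access.
        if page_id not in self.memory:
            self.memory[page_id] = self.disk.read(page_id)
        return self.memory[page_id]

    def write(self, page_id, data):
        # Write-through: update private memory and the shared disk.
        self.memory[page_id] = data
        self.disk.write(page_id, data)


disk = SharedDisk()
n1, n2 = Node("N1", disk), Node("N2", disk)
n1.write("p1", "row-A")
value_seen_by_n2 = n2.read("p1")
```

Node N2 never communicates with N1 directly. It sees the data only because both nodes reach the same disk, while their `memory` dictionaries stay separate.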

Advantages

The advantages of the shared disk architecture are as follows −

- Each processor has its own memory, so the memory bus is not a bottleneck.

- It offers a degree of fault tolerance: if a processor fails, the other processors can take over its tasks, because the database resides on disks that are accessible from all processors.

- It can scale to a somewhat large number of CPUs.
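The fault tolerance point can be illustrated with a minimal sketch of task takeover. The function `reassign_tasks` and the task names are hypothetical. The sketch assumes that any surviving node can run any task, which holds here only because all data lives on the shared disks.

```python
def reassign_tasks(assignments, failed_node):
    """Move the failed node's tasks to the surviving nodes, round-robin."""
    survivors = [node for node in assignments if node != failed_node]
    if not survivors:
        raise RuntimeError("no surviving nodes to take over the work")

    # Copy the surviving nodes' task lists so the input is left unchanged.
    result = {node: list(assignments[node]) for node in survivors}
    for i, task in enumerate(assignments.get(failed_node, [])):
        result[survivors[i % len(survivors)]].append(task)
    return result


assignments = {
    "N1": ["scan orders"],
    "N2": ["scan customers"],
    "N3": ["scan items", "build index"],
}
after_failure = reassign_tasks(assignments, "N3")
```

No data has to be copied between nodes during the takeover. In a shared nothing system, by contrast, the failed node's data would first have to be made available elsewhere.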

Disadvantages

The disadvantages of the shared disk architecture are as follows −

- Scalability is limited, since the interconnection to the disk subsystem becomes a bottleneck.

- CPU to CPU communication is slower, since it has to go through a communication network.

- The basic problem with the shared disk model is interference: as more CPUs are added, existing CPUs are slowed down because of increased contention for disk access and network bandwidth.
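The effect of interference on scalability can be made concrete with a toy model. This is an assumption for illustration and not a measurement of any real system: it supposes that a fixed fraction of the work (`shared_fraction`) must pass through the shared interconnect one request at a time, while the rest is divided evenly among the CPUs. This gives an Amdahl's law style speedup formula.

```python
def speedup(n_cpus, shared_fraction):
    """Speedup over one CPU when shared_fraction of the work is serialized."""
    return 1.0 / (shared_fraction + (1.0 - shared_fraction) / n_cpus)


# With 10% of the work serialized on the interconnect:
speedups = {n: round(speedup(n, 0.1), 2) for n in (1, 4, 16, 64)}
```

Under this model the speedup can never exceed `1 / shared_fraction`, regardless of how many CPUs are added. This is one way to see why the interconnection to the disks limits how far the architecture scales.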