Operating System - Deadlock Prevention

The first way to handle deadlock is to prevent it from occurring at all. This is known as Deadlock Prevention. Read this chapter to learn the methods used for deadlock prevention in operating systems.

How to Prevent Deadlock in an Operating System?

A deadlock can occur only if all four of the following necessary conditions hold simultaneously in the system −

- Mutual Exclusion

- Hold and Wait

- No Preemption

- Circular Wait

To prevent a deadlock, the operating system just needs to ensure that at least one of the above four conditions never takes place. In the next section, we will see how to prevent each of these conditions from occurring.

Prevent Mutual Exclusion

Mutual Exclusion means that at least one resource must be held in a non-shareable mode, so that only one process can use that resource at any given time. If another process requests the same resource, it must wait for the resource to be released.

To remove mutual exclusion, the operating system can make resources shareable whenever possible. For example, read-only resources such as files can be accessed by multiple processes simultaneously without causing any issues. But resources such as printers or writable memory cannot be shared by multiple processes at the same time, because two processes writing to the same resource simultaneously can cause data corruption and inconsistency.

So removing mutual exclusion is not always an option for deadlock prevention, because some resources are inherently non-shareable. But whenever possible, the operating system should make resources shareable to avoid deadlock.

Prevent Hold and Wait

Hold and Wait is a condition that occurs when a process holding at least one resource is waiting to get additional resources that are currently being held by other processes. This means that a process that already has resources may request more resources, which it cannot obtain because they are held by other processes.

To prevent hold and wait, the operating system can use one of the following two strategies −

- Declare the Needed Resources Upfront − Before a process starts executing, it must request and be allocated all the resources it will need during its execution. This is known as the "all-or-nothing" approach. If all the requested resources are not available, the process should wait until they are available.

- Release Resources Before Requesting New Ones − If at any point during its execution, a process needs additional resources, it must first release all the resources it currently holds before requesting the new ones. This ensures that a process does not hold onto resources while waiting for others.

Both of these strategies can help prevent hold and wait. However, they can also lead to decreased resource utilization and increased waiting times for processes, because resources may be allocated long before they are actually used. So, the operating system must carefully consider the trade-offs when implementing these strategies. In the end, preventing deadlock comes at some cost.

Example

Consider two processes P1 and P2 and two resource types R1 and R2.

- Process P1 - Needs R1 and R2

- Process P2 - Needs R1 and R2

Scenario 1 − Without Preventing Hold and Wait

- P1 - Allocated R1, Waiting for R2

- P2 - Allocated R2, Waiting for R1

Result: Deadlock occurs.

Scenario 2 − Preventing Hold and Wait by Declaring Resources Upfront

- P1 - Requests R1 and R2 together

- P1 - Allocated R1 and R2

- P1 - Completes execution and releases R1 and R2

- P2 - Requests R1 and R2 together

- P2 - Allocated R1 and R2

- P2 - Completes execution and releases R1 and R2

Result: No Deadlock occurs.
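The upfront-declaration strategy from Scenario 2 can be sketched in Python. This is a minimal illustration, not how any particular operating system implements it: `ResourceManager`, `acquire_all`, `release_all`, and `run_demo` are hypothetical names, and threads stand in for processes.

```python
import threading


class ResourceManager:
    """Hypothetical allocator: grants a whole request at once, or nothing."""

    def __init__(self, resources):
        self._free = set(resources)
        self._cond = threading.Condition()

    def acquire_all(self, needed):
        needed = set(needed)
        with self._cond:
            # The caller waits while holding nothing, so hold and wait
            # cannot arise: it proceeds only when every resource is free.
            self._cond.wait_for(lambda: needed <= self._free)
            self._free -= needed

    def release_all(self, held):
        with self._cond:
            self._free |= set(held)
            self._cond.notify_all()


def run_demo():
    manager = ResourceManager({"R1", "R2"})
    finished = []

    def process(name):
        # Both processes declare R1 and R2 together, as in Scenario 2.
        manager.acquire_all({"R1", "R2"})
        try:
            finished.append(name)
        finally:
            manager.release_all({"R1", "R2"})

    threads = [threading.Thread(target=process, args=(n,)) for n in ("P1", "P2")]
    for t in threads:
        t.start()
    for t in threads:
        t.join(timeout=5)
    return sorted(finished)
```

Calling `run_demo()` runs P1 and P2 one after the other in whichever order the scheduler picks. The cost mentioned above is visible here: the second process cannot use R1 or R2 at all until the first has released both.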

Prevent No Preemption

No Preemption means that resources cannot be forcibly taken away from the process holding them. A process releases a resource only voluntarily, when it no longer needs it.

To remove the no preemption condition, the operating system can allow resources to be preempted. If a process holding some resources is waiting for additional resources, the operating system can preempt the resources currently held by that process and assign them to other processes. The preempted process can be restarted later, when it can get all the resources it needs.

However, preempting resources can lead to issues such as data inconsistency and starvation. So, the operating system must carefully manage the preemption process to ensure that it does not lead to other problems.
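One way to picture this is a process that gives up what it holds whenever its next request cannot be granted immediately. The sketch below assumes two locks standing in for R1 and R2 and uses hypothetical names (`acquire_with_preemption`, `run_demo`); a real operating system would preempt from the outside rather than rely on the process to back off.

```python
import random
import threading
import time


def acquire_with_preemption(first, second, max_attempts=1000):
    """Take both locks; if the second is busy, give up the first and retry."""
    for attempt in range(1, max_attempts + 1):
        first.acquire()  # blocks only while this thread holds nothing
        if second.acquire(blocking=False):
            return attempt
        # The held resource is taken back, so others can use it.
        first.release()
        # A random pause reduces the chance that both sides retry in lockstep.
        time.sleep(random.uniform(0, 0.001))
    raise RuntimeError("gave up: possible starvation")


def run_demo():
    r1, r2 = threading.Lock(), threading.Lock()
    finished = []

    def process(name, first, second):
        acquire_with_preemption(first, second)
        try:
            finished.append(name)
        finally:
            second.release()
            first.release()

    # P1 and P2 request the locks in opposite order, which would
    # deadlock if neither were ever forced to release.
    threads = [
        threading.Thread(target=process, args=("P1", r1, r2)),
        threading.Thread(target=process, args=("P2", r2, r1)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join(timeout=5)
    return sorted(finished)
```

The retry limit reflects the starvation risk noted above: nothing in this scheme guarantees that a process which keeps losing its resources will eventually make progress.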

Prevent Circular Wait

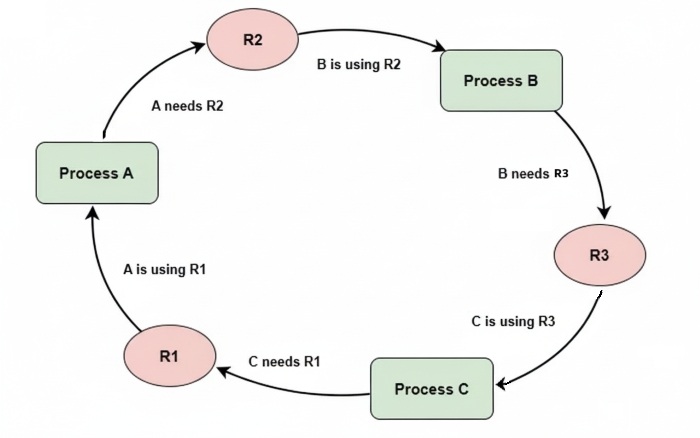

Circular Wait says that a set of processes exist such that each process in the set is waiting for a resource that another process in the set holds, thus forming a cycle of waiting. It can be visualized as in the diagram below −

To prevent circular wait, the operating system can impose a total ordering on resources. In this method, each resource type is assigned a unique number, and each process is required to request resources in increasing order of these numbers. This means that a process can only request a resource with a higher number than any resource it currently holds. A cycle would require at least one process to hold a higher-numbered resource while waiting for a lower-numbered one, which the ordering forbids, so circular wait cannot form.

Example

Consider three resource types R1, R2, and R3 with the following order −

- R1 - 1

- R2 - 2

- R3 - 3

- Process P1 - Holds R1, Can request R2 or R3

- Process P2 - Holds R2, Can request R3

- Process P3 - Holds R3, Cannot request any other resource as of now

Result: No Circular Wait can occur.
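The ordering rule from this example can be sketched as a small check performed before each request. The names `RANK`, `LOCKS`, and `OrderedHolder` are hypothetical, and locks stand in for the resource types R1, R2, and R3.

```python
import threading

# Ranks taken from the example above: R1 -> 1, R2 -> 2, R3 -> 3.
RANK = {"R1": 1, "R2": 2, "R3": 3}
LOCKS = {name: threading.Lock() for name in RANK}


class OrderedHolder:
    """Hypothetical process that may only request higher-ranked resources."""

    def __init__(self):
        self.held = []  # kept in increasing rank order

    def request(self, name):
        # Reject any request that is not above everything already held.
        if self.held and RANK[name] <= RANK[self.held[-1]]:
            raise ValueError(f"{name} violates the ordering after {self.held[-1]}")
        LOCKS[name].acquire()
        self.held.append(name)

    def release_all(self):
        # Release in reverse order of acquisition.
        while self.held:
            LOCKS[self.held.pop()].release()
```

For instance, a holder that has requested R1 and then R3 gets a `ValueError` if it asks for R2 next; it would have to release R3 first and then request R2 followed by R3.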

Conclusion

To prevent a system from running into a deadlock, you can ensure that at least one of the four necessary conditions for deadlock is never allowed to hold. This can be done by making resources shareable, requiring processes to declare all needed resources upfront, allowing preemption of resources, or imposing a total ordering on resource types. Implementing any one of these four methods can help prevent a deadlock. Operating systems choose the most suitable method by considering the trade-offs and the needs of the system.