Assumption of Linear Regression - Homoscedasticity

Introduction

Linear regression is one of the simplest and most widely used algorithms in machine learning, and it is used to model linear relationships in many kinds of problems. Because linear regression is a parametric algorithm, it makes certain assumptions about the data, which keep training and prediction simple and fast. Homoscedasticity is one of these core assumptions, and it is expected to hold whenever linear regression is applied to a dataset.

In this article, we will discuss the parametric behavior of linear regression, why its assumptions are needed, what homoscedasticity is, and why it is essential.

The Need for Assumptions

Before discussing the assumptions that linear regression makes, it is worth asking why these assumptions are needed at all.

To answer this, we first need to understand parametric models. Parametric models are machine learning algorithms that assume a fixed functional form with a set of parameters; training estimates those parameters, and predictions come from the resulting function. Because the form of the function is fixed in advance, not every dataset suits it, so we make certain assumptions about the data and build the function on top of them, which keeps the process simple and efficient.

For example, linear regression is a parametric algorithm that assumes the relationship in the data is linear and the errors are homoscedastic.

What is Homoscedasticity?

Homoscedasticity is the assumption of linear regression that the variance of the residuals is constant. In simple words, in a plot of the errors against the predictor variable, the error terms should have the same spread throughout, and that spread should not change as the value of the predictor variable changes.

The opposite of homoscedasticity is heteroscedasticity, where the variance of the error terms or residuals is not constant and the spread of the errors changes as the value of the predictor variable changes.

As we know, linear regression models the data with the equation of a line, Y = mX + c, where m (the slope) and c (the intercept) are constants, X is the independent variable, and Y is the dependent variable.

Here we have some observed values of the dependent variable Y, which are treated as the true values, and we also predict values of Y from the independent variable X, which are known as the predicted values of Y.

Here the error terms are simply the differences between the true values of Y and the predicted values of Y. Homoscedasticity means that the variance of these error terms stays constant across the values of the predictor variable X (and therefore across the predicted values of Y).
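In symbols, the definitions above can be sketched as follows. The notation is an assumption introduced here for illustration and is not part of the original text: $x_i$ and $y_i$ are the i-th observation, $\hat{y}_i$ is its predicted value, $e_i$ is its residual, and $\sigma^2$ is the common error variance.

$$\hat{y}_i = m x_i + c, \qquad e_i = y_i - \hat{y}_i$$

$$\mathrm{Var}(e_i \mid x_i) = \sigma^2 \quad \text{for every } i$$

Under heteroscedasticity the second line fails: the variance would depend on $x_i$ instead of being a single constant.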

Homoscedasticity Example

Let us work through an example to build intuition for homoscedasticity.

Suppose you want to predict a student's marks based on the number of hours the student spends studying the subject. In this case, the marks are the dependent variable, and the hours spent studying are the independent variable.

Let us also suppose that the data is linear and that we use linear regression to solve the problem. In this case, a best-fit line, the regression line, is generated, and it can be used to estimate the marks of students for unseen data as well.

Once you have built a model to predict students' marks, you would check its accuracy to validate it and make changes if needed. To validate the model, you calculate its errors as the differences between the actual marks and the predicted marks.
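The error calculation described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the `hours` and `marks` values are made up, and `fit_line` is a hypothetical helper written for this example, not part of any library.

```python
from statistics import mean

# Hypothetical data: hours studied and marks obtained (made up for illustration).
hours = [1, 2, 3, 4, 5, 6, 7, 8]
marks = [35, 42, 50, 55, 63, 68, 77, 82]

def fit_line(x, y):
    """Return slope m and intercept c of the least-squares line y = m*x + c."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    m = sxy / sxx
    c = y_bar - m * x_bar
    return m, c

m, c = fit_line(hours, marks)

# Predicted marks from the regression line, and the error for each student.
predicted = [m * h + c for h in hours]
residuals = [actual - pred for actual, pred in zip(marks, predicted)]

for h, r in zip(hours, residuals):
    print(f"hours={h}  residual={r:+.2f}")
```

Because the line is fitted with an intercept, the residuals sum to approximately zero. Homoscedasticity is about how their spread behaves, not about their average.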

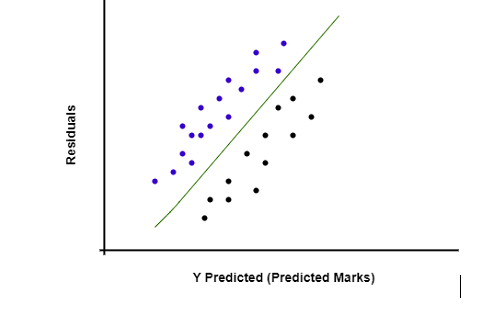

Now, to check whether the model satisfies homoscedasticity, you can draw a scatter plot of the error terms (residuals) against the predicted values of Y (the predicted marks of the students).

As the above image shows, in the scatter plot of the residuals against the predicted marks, the spread (variance) of the residuals is constant, which means the model satisfies homoscedasticity.
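The visual check can also be approximated numerically. The sketch below is an informal check, not a formal statistical test: it sorts the residuals by predicted value, splits them into a lower and an upper half, and compares the two variances. The data is simulated, and `fit_line` and `spread_ratio` are hypothetical helpers written for this example.

```python
import random
from statistics import mean, pvariance

def fit_line(x, y):
    """Least-squares slope and intercept for y = m*x + c."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    sxx = sum((a - x_bar) ** 2 for a in x)
    m = sxy / sxx
    return m, y_bar - m * x_bar

def spread_ratio(x, y):
    """Residual variance at high predictions divided by that at low predictions."""
    m, c = fit_line(x, y)
    predicted = [m * a + c for a in x]
    residuals = [b - p for b, p in zip(y, predicted)]
    # Sort residuals by predicted value, then split into lower and upper halves.
    ordered = [r for _, r in sorted(zip(predicted, residuals))]
    half = len(ordered) // 2
    return pvariance(ordered[half:]) / pvariance(ordered[:half])

rng = random.Random(0)  # fixed seed so the run is repeatable
hours = [rng.uniform(0, 10) for _ in range(2000)]

# Simulated marks: the same underlying line with two different noise patterns.
constant_noise = [5 * h + 20 + rng.gauss(0, 5) for h in hours]      # homoscedastic
growing_noise = [5 * h + 20 + rng.gauss(0, 1 + h) for h in hours]   # heteroscedastic

print("constant noise ratio:", round(spread_ratio(hours, constant_noise), 2))
print("growing noise ratio: ", round(spread_ratio(hours, growing_noise), 2))
```

A ratio close to 1 is consistent with constant spread, while a ratio well above (or below) 1 suggests that the spread changes with the predicted value. The choice of two equal halves is arbitrary and only meant to mirror what the eye does on the scatter plot.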

Importance of Homoscedasticity

Homoscedasticity is one of the assumptions of linear regression. Linear regression is a parametric model, so its assumptions need to be satisfied whenever we apply it. If this assumption is violated, the fitted model becomes less reliable: the coefficient estimates are less precise, and the usual standard errors and confidence intervals can be misleading.

Checking homoscedasticity also tells us about the spread (variance) of the error terms with respect to the predicted values; by examining the residual plot, we can identify the regions where the model makes large errors, which can affect its overall performance.
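As a small illustration of spotting where the model makes large errors, the sketch below lists the points with the largest absolute residuals. The `predicted` and `residuals` values are made up, and `largest_errors` is a hypothetical helper written for this example.

```python
# Hypothetical predicted marks and residuals (actual - predicted), made up for illustration.
predicted = [38.0, 45.0, 52.0, 59.0, 66.0, 73.0, 80.0]
residuals = [1.5, -2.0, 0.5, 9.0, -1.0, 2.5, -12.0]

def largest_errors(predicted, residuals, k=2):
    """Return the k (predicted, residual) pairs with the largest absolute residual."""
    pairs = zip(predicted, residuals)
    return sorted(pairs, key=lambda pair: abs(pair[1]), reverse=True)[:k]

worst = largest_errors(predicted, residuals)
for pred, res in worst:
    print(f"predicted={pred:.1f}  residual={res:+.1f}")
```

Points flagged this way are candidates for a closer look, for example to see whether the large errors cluster in one range of predicted values.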

Key Takeaways

Linear regression is a parametric model that makes certain assumptions about the data, and these assumptions should be satisfied.

Homoscedasticity means that the error terms of the model have equal variance across the values of the predictor variable.

In heteroscedastic data, the variance of the errors changes across the values of the predictor variable.

The residual plot gives us an idea of the regions where the model produces large errors, which can then be addressed.

A model whose residuals are homoscedastic satisfies this assumption of linear regression.

Conclusion

In this article, we discussed the homoscedasticity assumption of linear regression, along with related ideas such as parametric models, why assumptions are required, and an example. This article should help you understand homoscedasticity better and answer the interview questions commonly asked about it.