What is the design space of hardware-based cache coherence protocols?

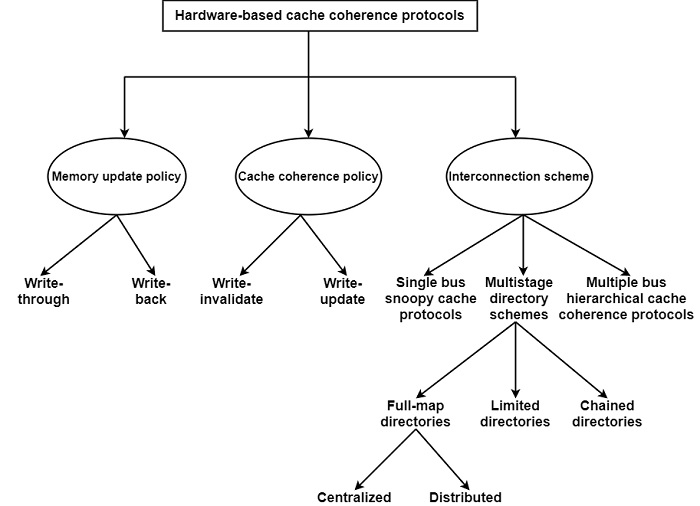

Hardware-based protocols provide general solutions to the cache coherence problem without any restriction on the cacheability of data. Hardware-based protocols can be classified as follows −

Memory update policy − Two types of memory update policy are used in multiprocessors. The write-through policy maintains consistency between the main memory and the caches; that is, when a block is updated in one of the caches, it is immediately updated in memory too. The write-back policy permits the memory to be temporarily inconsistent with the most recently updated cached block.

The application of the write-through policy leads to unnecessary traffic on the interconnection network in the case of private data and infrequently used shared data. On the other hand, it is more reliable than the write-back scheme since error detection and recovery features are available only at the main memory.

The write-back policy avoids useless interconnection traffic; however, it requires more complex cache controllers since read references to memory locations that have not yet been updated should be redirected to the appropriate cache.
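The traffic difference between the two memory update policies can be illustrated with a small simulation. This is only a minimal sketch under simplifying assumptions: a single cache, one word per block, and hypothetical names (`Memory`, `Cache`, `count_memory_writes`) invented for the illustration. It counts how many writes reach main memory when the same location is updated repeatedly.

```python
class Memory:
    """Main memory that counts how many writes reach it."""

    def __init__(self):
        self.data = {}
        self.writes = 0

    def write(self, addr, value):
        self.data[addr] = value
        self.writes += 1

    def read(self, addr):
        return self.data.get(addr, 0)


class Cache:
    """Hypothetical single cache; write_back selects the update policy."""

    def __init__(self, memory, write_back):
        self.memory = memory
        self.write_back = write_back
        self.lines = {}      # addr -> cached value
        self.dirty = set()   # addresses modified but not yet written to memory

    def read(self, addr):
        if addr not in self.lines:
            self.lines[addr] = self.memory.read(addr)
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value
        if self.write_back:
            self.dirty.add(addr)            # lazy: postpone the memory update
        else:
            self.memory.write(addr, value)  # greedy: update memory immediately

    def flush(self):
        """Write every dirty block back to memory (e.g. on replacement)."""
        for addr in sorted(self.dirty):
            self.memory.write(addr, self.lines[addr])
        self.dirty.clear()


def count_memory_writes(write_back, n_updates=10):
    memory = Memory()
    cache = Cache(memory, write_back)
    for i in range(n_updates):
        cache.write(0x10, i)
    # Is memory temporarily inconsistent with the cached block?
    stale = memory.read(0x10) != cache.read(0x10)
    cache.flush()
    return memory.writes, stale


print(count_memory_writes(write_back=False))
print(count_memory_writes(write_back=True))
```

In this sketch, write-through sends every update to memory and never leaves memory stale, while write-back sends a single write at flush time at the cost of a window in which memory holds an outdated value.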

The write-through policy is a greedy policy because it updates the memory copy immediately, while the write-back policy is a lazy one with postponed memory updates. Similarly, a greedy and a lazy cache coherence policy have been introduced for updating the cache copies of a data structure −

write-update policy (a greedy policy) − The key idea of the write-update policy is that whenever a processor updates a cached data structure, it immediately updates all the other cached copies as well.

write-invalidate policy (a lazy policy) − In the case of the write-invalidate policy, the updated cache block is not transmitted directly to the other caches; instead, a simple invalidate command is sent to all other cached copies and to the original version in the shared memory so that they become invalid.
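The two cache coherence policies can be contrasted with a toy model. The sketch below is an illustration only, with hypothetical names (`SharedBus`, `Cache`, `run`). It assumes a shared medium that reaches every cache, and, to stay short, it keeps memory current on every write instead of modelling an invalid memory copy that must be supplied by the owning cache.

```python
class SharedBus:
    """Hypothetical shared medium connecting all caches and memory."""

    def __init__(self, policy):
        assert policy in ("update", "invalidate")
        self.policy = policy
        self.caches = []
        self.memory = {}
        self.data_messages = 0        # messages that carry the new value
        self.invalidate_messages = 0  # short invalidate commands


class Cache:
    def __init__(self, bus):
        self.bus = bus
        self.lines = {}  # addr -> value for valid copies only
        bus.caches.append(self)

    def read(self, addr):
        if addr not in self.lines:  # miss: fetch from memory
            self.lines[addr] = self.bus.memory.get(addr, 0)
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value
        self.bus.memory[addr] = value  # simplification: memory kept current
        for other in self.bus.caches:
            if other is self or addr not in other.lines:
                continue
            if self.bus.policy == "update":
                other.lines[addr] = value      # greedy: refresh the copy now
                self.bus.data_messages += 1
            else:
                del other.lines[addr]          # lazy: just mark it invalid
                self.bus.invalidate_messages += 1


def run(policy):
    bus = SharedBus(policy)
    a, b = Cache(bus), Cache(bus)
    a.read(0x20)
    b.read(0x20)                 # both caches now hold a copy
    for value in (1, 2, 3):
        a.write(0x20, value)     # cache a updates the block three times
    b_has_copy = 0x20 in b.lines
    return bus.data_messages, bus.invalidate_messages, b_has_copy, b.read(0x20)


print(run("update"))
print(run("invalidate"))
```

Under these assumptions, write-update sends the new value on every write, whereas write-invalidate sends one short command on the first write and nothing afterwards, but the second cache then takes a miss the next time it reads the block.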

Hardware-based protocols can also be divided into three basic classes depending on the nature of the interconnection network used in the shared memory system. If the network efficiently supports broadcasting, the so-called snoopy cache protocol can be used to advantage.

Large interconnection networks cannot support broadcasting efficiently, and hence a mechanism is required that can forward consistency commands precisely to those caches that hold a copy of the updated data structure. For this purpose, a directory must be maintained for each block of the shared memory to keep track of the actual location of blocks in the caches. This approach is called the directory scheme.

The third approach seeks to avoid the costly directory scheme while still providing high scalability. It proposes multiple-bus networks with hierarchical cache coherence protocols that are generalized or extended versions of the single bus-based snoopy cache protocol.
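The idea of forwarding consistency commands only to the caches that hold a copy can be sketched as follows. This is a simplified illustration, not a complete protocol: the `Directory` class, its method names, and the choice of invalidation on write are assumptions made for the example, and real directories also track block states and handle write-backs.

```python
class Directory:
    """Hypothetical directory: one sharer set per shared-memory block."""

    def __init__(self, n_caches):
        self.n_caches = n_caches
        self.sharers = {}   # block number -> set of cache ids holding a copy
        self.messages = 0   # point-to-point consistency commands sent so far

    def record_read(self, block, cache_id):
        """A cache fetched the block, so remember it as a sharer."""
        self.sharers.setdefault(block, set()).add(cache_id)

    def record_write(self, block, writer_id):
        """Return the caches that must receive a consistency command."""
        targets = self.sharers.get(block, set()) - {writer_id}
        self.messages += len(targets)
        self.sharers[block] = {writer_id}  # the writer now holds the only copy
        return sorted(targets)

    def broadcast_cost(self):
        """Messages a broadcast would need: every cache except the writer."""
        return self.n_caches - 1


directory = Directory(n_caches=64)
for cache_id in (3, 17, 42):
    directory.record_read(block=7, cache_id=cache_id)

targets = directory.record_write(block=7, writer_id=3)
print(targets, directory.messages, directory.broadcast_cost())
```

In this example only the two other sharers are contacted, compared with the 63 caches a broadcast would have to reach, which is the saving the directory buys at the cost of the extra bookkeeping storage.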