Statistical Comparison of Machine Learning Algorithms

Predictive modeling and data-driven decision-making are built on machine learning algorithms. These algorithms learn patterns and correlations from data, which lets computers make predictions and surface useful information. Because many algorithms are available, it is important to understand their distinctive properties and choose the one best suited to a given problem.

Statistical comparison plays a central role in algorithm selection because it gives an objective assessment of each algorithm's performance. By contrasting algorithms with statistical measures, we can evaluate their strengths, weaknesses, and suitability for particular tasks, and quantify performance with metrics such as accuracy, precision, and recall. In this article, we compare machine learning algorithms statistically.

Understanding Statistical Comparison

Statistical comparison is the practice of objectively evaluating and contrasting the performance of different algorithms using statistical metrics. It is a key part of assessing machine learning algorithms because it lets us compare them fairly and draw sound conclusions from the results.

Key metrics and evaluation techniques

Accuracy, Precision, Recall, and F1-Score: These metrics are used mostly in classification tasks. Accuracy is the fraction of all predictions that are correct. Precision is the fraction of predicted positive cases that are actually positive. Recall, also called sensitivity, is the fraction of actual positive cases that the algorithm identifies. The F1-score is the harmonic mean of precision and recall, combining them into a single balanced measure of classification performance.
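As a minimal sketch of how these four metrics relate, the snippet below computes them from scratch for a binary task. The function name `classification_metrics` and the label lists are made up for illustration; in practice a library such as scikit-learn would normally be used.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Illustrative labels (not taken from any real dataset).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]

metrics = classification_metrics(y_true, y_pred)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Here precision (0.600) is lower than recall (0.750) because the predictions contain two false positives but only one false negative, and the F1-score falls between the two.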

Confusion matrix: A confusion matrix gives a detailed breakdown of the algorithm's classification results. It tabulates the counts of true positives, true negatives, false positives, and false negatives, which makes it easier to see how the algorithm performs on each class.
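The following sketch builds a confusion matrix for a small three-class example, with rows as true classes and columns as predicted classes. The helper `confusion_matrix` and the animal labels are illustrative assumptions, not part of any particular library.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]


# Illustrative labels only.
y_true = ["cat", "dog", "cat", "bird", "dog", "cat"]
y_pred = ["cat", "cat", "cat", "bird", "dog", "dog"]
labels = ["bird", "cat", "dog"]

matrix = confusion_matrix(y_true, y_pred, labels)
for label, row in zip(labels, matrix):
    print(f"{label:>5}: {row}")
```

The diagonal holds the correctly classified counts; off-diagonal entries show which classes are confused with one another (here, one cat predicted as dog and one dog predicted as cat).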

ROC Curves and AUC: A Receiver Operating Characteristic (ROC) curve plots the true positive rate against the false positive rate at different classification thresholds, showing the trade-off between the two. The Area Under the Curve (AUC) summarizes the algorithm's performance across all possible thresholds. Higher AUC values indicate better classification performance; an AUC of 0.5 corresponds to random guessing and 1.0 to perfect ranking.
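To illustrate how the curve and its area come about, here is a small sketch that sweeps the threshold over a set of predicted scores and integrates with the trapezoidal rule. The functions `roc_curve` and `auc` are written from scratch for this example, and the four scores are invented.

```python
def roc_curve(y_true, scores):
    """Return (fpr, tpr) lists obtained by lowering the decision threshold."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])

    fpr, tpr = [0.0], [0.0]
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last item in a group of tied scores.
        if i == len(ranked) - 1 or ranked[i + 1][0] != score:
            fpr.append(fp / neg)
            tpr.append(tp / pos)
    return fpr, tpr


def auc(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2
               for i in range(1, len(fpr)))


# Illustrative labels (1 = positive) and classifier scores.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

fpr, tpr = roc_curve(y_true, scores)
area = auc(fpr, tpr)
print(f"AUC = {area:.2f}")
```

In this toy case one negative example is scored above one positive example, so the ranking is imperfect and the AUC is 0.75 rather than 1.0.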

Cross-Validation: Cross-validation is a method for estimating how well an algorithm performs on data it was not trained on. The dataset is split into several folds, and the algorithm is repeatedly trained on all but one fold and evaluated on the held-out fold. The resulting scores help assess how well the method generalizes and help detect overfitting.
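The fold-by-fold procedure can be sketched as follows. The nearest-centroid "model" (`fit_centroids`, `predict`) is a deliberately tiny stand-in chosen for this example, and the one-dimensional data is invented; any classifier with a fit step and a predict step could be plugged in instead.

```python
import random
from statistics import mean


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle sample indices and deal them into k roughly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def fit_centroids(xs, ys):
    """Toy model: remember the mean feature value of each class."""
    return {label: mean([x for x, y in zip(xs, ys) if y == label])
            for label in set(ys)}


def predict(centroids, x):
    """Predict the class whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def cross_val_accuracy(xs, ys, k=5):
    """Train on k-1 folds, score on the held-out fold, repeat for each fold."""
    scores = []
    for fold in k_fold_indices(len(xs), k):
        held_out = set(fold)
        train = [i for i in range(len(xs)) if i not in held_out]
        model = fit_centroids([xs[i] for i in train], [ys[i] for i in train])
        correct = sum(predict(model, xs[i]) == ys[i] for i in fold)
        scores.append(correct / len(fold))
    return scores


# Illustrative, well-separated one-dimensional data.
xs = [1.0, 1.2, 0.8, 1.1, 0.9, 3.0, 3.2, 2.8, 3.1, 2.9]
ys = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

scores = cross_val_accuracy(xs, ys, k=5)
print("fold accuracies:", scores)
print("mean accuracy:", mean(scores))
```

Reporting the mean together with the spread of the per-fold scores gives a more reliable basis for comparing two algorithms than a single train/test split.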

Bias-Variance Trade-Off: One of the essential ideas in statistical comparison is the bias-variance trade-off. It concerns the balance between a model's ability to capture the underlying patterns in the data (low bias) and its sensitivity to noise or small fluctuations in the training set (high variance). Finding the right balance is essential for the algorithm to perform well on both training data and unseen data.
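One common way to make this trade-off concrete is the standard decomposition of expected squared prediction error. It assumes squared-error loss and data generated as y = f(x) + ε with zero-mean noise of variance σ², assumptions the discussion above does not state explicitly. The three terms are squared bias, variance, and irreducible noise:

```latex
\mathbb{E}\!\left[\bigl(y - \hat{f}(x)\bigr)^2\right]
  = \underbrace{\bigl(\mathbb{E}[\hat{f}(x)] - f(x)\bigr)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\!\left[\bigl(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\bigr)^2\right]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{noise}}
```

Making a model more flexible typically lowers the first term and raises the second, which is why neither the simplest nor the most complex model is usually the best choice.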

Statistical Comparison of Machine Learning Algorithms

Linear Regression

Linear regression is a fundamental regression technique for modeling the relationship between a dependent variable and one or more independent variables. It fits a straight line (or, with several predictors, a hyperplane) to the data points by minimizing the sum of squared errors. The model's goodness-of-fit can be evaluated with the coefficient of determination (R-squared), and the significance of individual coefficients with their p-values.
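For the single-predictor case, the least-squares line and R-squared can be computed in a few lines. This is a sketch on invented data; `fit_line` and `r_squared` are illustrative names, and p-values are left out because they require a t-distribution that the standard library does not provide.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares for y = slope * x + intercept."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def r_squared(y, y_hat):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_bar = mean(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


# Illustrative data that is roughly linear.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

slope, intercept = fit_line(x, y)
y_hat = [slope * xi + intercept for xi in x]
r2 = r_squared(y, y_hat)
print(f"slope={slope:.2f}, intercept={intercept:.2f}, R^2={r2:.4f}")
```

An R-squared close to 1 means the line explains nearly all of the variance in the target, as is the case for this nearly linear toy data.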

Polynomial Regression

Polynomial regression is useful when the relationship between the variables follows a curved pattern. By including polynomial terms in addition to linear terms, it can capture more complex relationships. Hypothesis tests can be used to assess the statistical significance of the polynomial terms, which helps in choosing the most suitable degree.
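The effect of adding a polynomial term can be seen by comparing the residual sum of squares (SSE) of a degree-1 and a degree-2 fit. The sketch below solves the normal equations with a small hand-written Gaussian elimination; the helpers `solve`, `fit_polynomial`, and `sse` are illustrative, and the data is an exact quadratic chosen so the result is easy to check. A formal hypothesis test on the added term (for example an F-test) would build on these SSE values but is not shown.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_polynomial(x, y, degree):
    """Least-squares coefficients [c0, c1, ...] via the normal equations."""
    size = degree + 1
    powers = [[xi ** p for p in range(size)] for xi in x]
    ata = [[sum(row[i] * row[j] for row in powers) for j in range(size)]
           for i in range(size)]
    aty = [sum(row[i] * yi for row, yi in zip(powers, y)) for i in range(size)]
    return solve(ata, aty)


def sse(x, y, coeffs):
    """Sum of squared residuals of the fitted polynomial."""
    def predict(xi):
        return sum(c * xi ** p for p, c in enumerate(coeffs))
    return sum((yi - predict(xi)) ** 2 for xi, yi in zip(x, y))


# Illustrative data: exactly y = x^2 + 1.
x = [-2, -1, 0, 1, 2]
y = [xi ** 2 + 1 for xi in x]

linear = fit_polynomial(x, y, degree=1)
quadratic = fit_polynomial(x, y, degree=2)
sse_linear = sse(x, y, linear)
sse_quadratic = sse(x, y, quadratic)
print("degree 1 SSE:", sse_linear)
print("degree 2 SSE:", sse_quadratic)
```

The straight line cannot follow the curve and leaves a large residual, while the quadratic term removes it almost entirely. On noisy real data the drop in SSE would be smaller and would need a significance test to judge.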

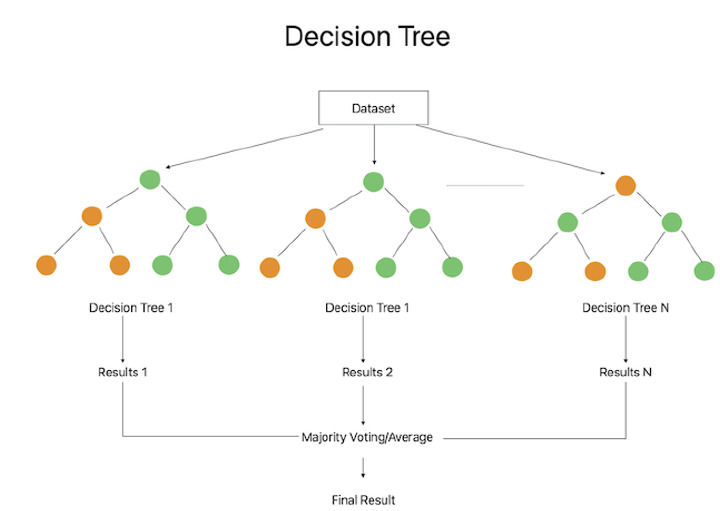

Decision Tree Regression

Decision tree regression offers a non-linear approach to regression problems by recursively partitioning the feature space into regions. Each internal node tests a feature value and branches on the result. The prediction for an input is the average of the training target values in the region (leaf) that the input falls into. Decision tree regression can be evaluated with statistical measures such as mean squared error (MSE) and R-squared.
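A full tree applies the same step recursively, so a single split (a "stump") is enough to show the idea. The sketch below picks the threshold that minimizes the summed squared error of the two regions and predicts each region's mean. The names `best_split` and `predict` and the data are made up for this example.

```python
from statistics import mean


def region_sse(values):
    """Sum of squared deviations from the region mean."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def best_split(x, y):
    """Return (cost, threshold, left_mean, right_mean) for the best single split."""
    best = None
    xs = sorted(set(x))
    # Candidate thresholds lie midway between consecutive distinct feature values.
    for lo, hi in zip(xs, xs[1:]):
        threshold = (lo + hi) / 2
        left = [yi for xi, yi in zip(x, y) if xi <= threshold]
        right = [yi for xi, yi in zip(x, y) if xi > threshold]
        cost = region_sse(left) + region_sse(right)
        if best is None or cost < best[0]:
            best = (cost, threshold, mean(left), mean(right))
    return best


def predict(xi, threshold, left_mean, right_mean):
    """Predict the mean target of the region that xi falls into."""
    return left_mean if xi <= threshold else right_mean


# Illustrative step-shaped data.
x = [1, 2, 3, 4, 5, 6]
y = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]

cost, threshold, left_mean, right_mean = best_split(x, y)
mse = cost / len(x)
print(f"split at x <= {threshold}, MSE = {mse:.4f}")
```

The split lands between x = 3 and x = 4, where the targets jump, and the remaining MSE reflects only the scatter inside each region.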

Logistic Regression

Logistic regression is a classification method that models the relationship between input features and a binary or multi-class target variable. It estimates the probability that a given instance belongs to a given class. Its classification performance can be evaluated with statistical measures such as accuracy, precision, recall, and F1-score.
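As a rough sketch of the probability estimate, the code below fits a one-feature binary logistic model by plain gradient descent on the average log-loss. The learning rate, epoch count, function name `fit_logistic`, and data are all assumptions made for this example; production libraries use more robust optimizers.

```python
import math


def sigmoid(z):
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(x, y, lr=0.5, epochs=5000):
    """Fit P(y=1 | x) = sigmoid(w * x + b) by batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(x)
    for _ in range(epochs):
        errors = [sigmoid(w * xi + b) - yi for xi, yi in zip(x, y)]
        grad_w = sum(e * xi for e, xi in zip(errors, x)) / n
        grad_b = sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Illustrative data with some class overlap around x = 2 to 2.5.
x = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
y = [0, 0, 0, 1, 0, 1, 1, 1]

w, b = fit_logistic(x, y)
p_low = sigmoid(w * 0.5 + b)
p_high = sigmoid(w * 4.0 + b)
print(f"P(y=1 | x=0.5) = {p_low:.3f}")
print(f"P(y=1 | x=4.0) = {p_high:.3f}")
```

Thresholding these probabilities (commonly at 0.5) turns them into class labels, which can then be scored with accuracy, precision, recall, and F1-score.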

Support Vector Machine

Support Vector Machine (SVM) is a powerful algorithm that finds the optimal hyperplane separating the classes, possibly in a high-dimensional space. By maximizing the margin between the classes, SVM aims for robust classification. Important statistical metrics used to assess SVM performance include accuracy, precision, recall, and F1-score. SVM can also handle non-linear relationships between features by using the kernel trick.
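The margin idea can be checked numerically for a given hyperplane. The sketch below does not train an SVM; it takes a hyperplane `w`, `b` as given (chosen by hand for these four invented points) and computes each point's functional margin, the hinge loss, and the margin width 2 / ||w||.

```python
import math


def decision_value(w, b, x):
    """Signed score w . x + b; its sign gives the predicted class."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


# Hand-picked hyperplane and toy points with labels in {+1, -1}.
w, b = (0.5, 0.5), -2.0
points = [((3, 3), 1), ((4, 3), 1), ((1, 1), -1), ((1, 0), -1)]

# Functional margin: label * score. Values >= 1 satisfy the SVM constraint.
margins = [label * decision_value(w, b, x) for x, label in points]

# Hinge loss is zero for every point on or outside the margin.
hinge = [max(0.0, 1.0 - m) for m in margins]

# Geometric width of the band between the two margin boundaries.
margin_width = 2.0 / math.hypot(*w)

# Points lying exactly on the margin are the support vectors.
support_vectors = [x for (x, _), m in zip(points, margins)
                   if abs(m - 1.0) < 1e-9]

print("functional margins:", margins)
print("total hinge loss:", sum(hinge))
print(f"margin width: {margin_width:.3f}")
print("support vectors:", support_vectors)
```

Only the two points on the margin determine the hyperplane; moving the other two farther away would leave the solution unchanged, which is one reason SVMs are considered robust.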

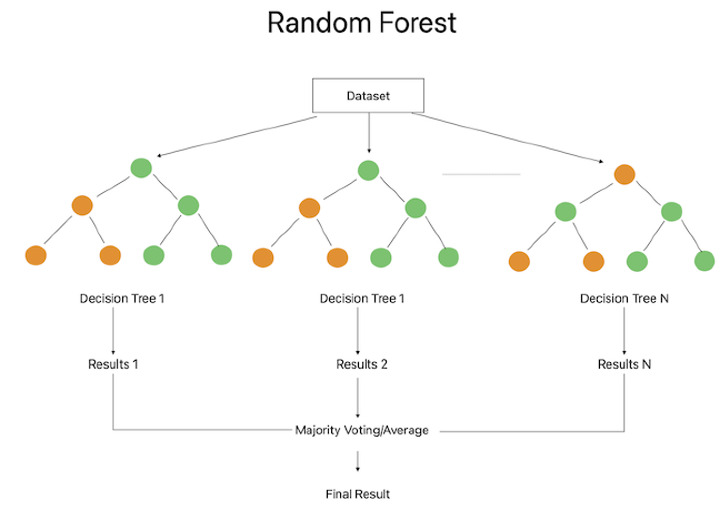

Random Forest

Random Forest is an ensemble method that combines several decision trees to make a prediction. Each tree is built from a randomly chosen subset of the features and the training samples. The performance of a Random Forest classifier can be evaluated with statistical measures such as accuracy, precision, recall, and F1-score. The algorithm also provides feature-importance estimates based on the Gini index or information gain.
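The two impurity measures behind those importance estimates can be computed directly. The sketch below evaluates a single hypothetical split; the function names and the class counts are illustrative, and a real forest would accumulate such impurity decreases over every split in every tree.

```python
import math
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels):
    """Shannon entropy of the class distribution, in bits."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def impurity_decrease(parent, left, right, impurity):
    """Parent impurity minus the size-weighted impurity of the children."""
    n = len(parent)
    weighted = (len(left) / n) * impurity(left) + (len(right) / n) * impurity(right)
    return impurity(parent) - weighted


# Hypothetical split of a balanced two-class node.
parent = ["a"] * 5 + ["b"] * 5
left = ["a"] * 4 + ["b"] * 1
right = ["a"] * 1 + ["b"] * 4

gini_gain = impurity_decrease(parent, left, right, gini)
info_gain = impurity_decrease(parent, left, right, entropy)
print(f"Gini decrease: {gini_gain:.4f}")
print(f"Information gain: {info_gain:.4f}")
```

A feature whose splits produce large decreases across many trees receives a high importance score.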

Conclusion

Choosing the best machine learning algorithm for a particular task relies heavily on statistical comparison. A thorough statistical study lets us assess the performance and characteristics of different algorithms impartially. Metrics such as accuracy, precision, recall, F1-score, and area under the ROC curve quantify an algorithm's predictive accuracy and help reveal how well it copes with different data distributions and with noise or outliers. Techniques such as cross-validation additionally show how well the algorithm generalizes to unseen data.