Multiple Processors Scheduling in Operating System

Modern computer systems frequently use multiple processors or cores to increase overall performance. To make the best use of these resources, however, the operating system must schedule processes effectively across the available processors.

Multiple processor scheduling involves deciding which processes should be assigned to which processor or core and how long they should be permitted to run. While ensuring that all processes are fairly and appropriately prioritized, the objective is to achieve efficient utilization of the available processors.

In this article, we discuss multiple processor scheduling, the approaches used, the types of scheduling algorithms, and a few use cases of multiple processor scheduling in operating systems.

Multiple Processor Scheduling

Multiple processor scheduling, also known as multiprocessor scheduling, is the design of a scheduling function for a system that has several processors. In multiprocessor scheduling, multiple CPUs share the workload (load sharing) so that several processes can execute at the same time. Multiprocessor scheduling is generally more complicated than single-processor scheduling. When the processors are identical, any available processor can run any process in the queue.

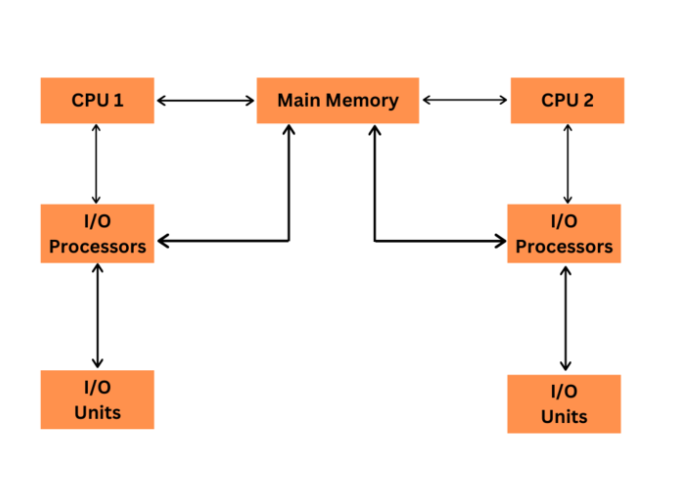

The CPUs in such a system communicate frequently and share a common bus, memory, and peripherals. For this reason, the system is described as tightly coupled. These systems are used where large amounts of data need to be processed, for example in satellite and weather-forecasting applications.

In some instances of multiple-processor scheduling, the functioning of the processors is homogeneous, or identical. Any process in the queue can run on any available processor.

Multiprocessor systems can be homogeneous (all CPUs of the same type) or heterogeneous (CPUs of different types). Special scheduling restrictions may apply, for example when a device is attached to a single CPU through a private bus, so that processes using that device must run on that CPU.

No rule or policy determines a single best scheduling method for a system with one processor, and the same is true of a system with several CPUs.

Approaches to Multiple Processor Scheduling

Two different architectures are used in multiprocessor systems:

Symmetric Multiprocessing

In a symmetric multiprocessing (SMP) system, all processors are peers and have the same access to memory and I/O resources. The CPUs are not connected in a master-slave fashion, and they all use the same memory and I/O subsystems. This means that every memory location and I/O device is accessible to every processor without restriction. The operating system distributes tasks among the processors in an SMP system, so any task can be executed by any processor.
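The idea that any processor can take any task can be sketched with a single shared ready queue. This is a minimal illustration, not how a real kernel is built: Python threads stand in for processors, and the names `run_smp` and `cpu` are hypothetical.

```python
import queue
import threading

def run_smp(tasks, num_cpus):
    """Run (name, callable) tasks from one shared ready queue on peer workers."""
    ready = queue.Queue()
    for name, work in tasks:
        ready.put((name, work))

    results = {}              # task name -> (cpu id, return value)
    lock = threading.Lock()   # protects the shared results dict

    def cpu(cpu_id):
        # Every simulated CPU runs the same loop: there is no master,
        # and any worker may pick up any task from the common queue.
        while True:
            try:
                name, work = ready.get_nowait()
            except queue.Empty:
                return
            value = work()
            with lock:
                results[name] = (cpu_id, value)

    workers = [threading.Thread(target=cpu, args=(i,)) for i in range(num_cpus)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return results

# Eight small tasks shared among four simulated CPUs.
tasks = [(f"P{i}", (lambda i=i: i * i)) for i in range(8)]
smp_results = run_smp(tasks, num_cpus=4)
```

Which simulated CPU ends up running which task varies from run to run. That is the point of the symmetric design: the tasks are not tied to a particular processor.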

Asymmetric Multiprocessing

In an asymmetric multiprocessing (AMP) architecture, one processor, known as the master processor, has complete access to all of the system's resources, including memory and I/O devices. The master processor is in charge of allocating tasks to the other processors, known as slave processors. Each slave processor is responsible for a certain set of tasks that the master processor has assigned to it. The operating system hands tasks to the master processor, which then distributes them to the slave processors.
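The master's role can be sketched as a single function that makes every assignment decision, while the other processors only receive work. The article does not say how the master chooses a target. Sending each task to the currently least-loaded processor is an assumption of this sketch, and `master_dispatch` is a hypothetical name.

```python
def master_dispatch(tasks, num_workers):
    """Assign each (name, cost) task to the least-loaded subordinate processor.

    Only this function (the "master") decides placement; the subordinate
    processors are represented purely by their task lists.
    """
    assignments = {w: [] for w in range(num_workers)}
    load = {w: 0 for w in range(num_workers)}
    for name, cost in tasks:
        # Assumed policy: pick the smallest load, break ties by lower id.
        target = min(load, key=lambda w: (load[w], w))
        assignments[target].append(name)
        load[target] += cost
    return assignments, load

tasks = [("A", 5), ("B", 3), ("C", 2), ("D", 4), ("E", 1)]
assignments, load = master_dispatch(tasks, num_workers=2)
print(assignments)  # {0: ['A', 'D'], 1: ['B', 'C', 'E']}
print(load)         # {0: 9, 1: 6}
```

Because all decisions go through one place, no locking between processors is needed in this sketch. The trade-off is that the master can become a bottleneck.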

Types of Multiprocessor Scheduling Algorithms

Operating systems utilize a range of multiprocessor scheduling algorithms. Among the most typical types are:

Round-Robin Scheduling: The round-robin scheduling algorithm allocates a time quantum to each process and runs the processes in turn on each processor. Since it gives each process an equal share of CPU time, this strategy can be useful in systems where all processes have the same priority.

Priority Scheduling: Processes are given levels of priority in this method, and those with greater priorities are scheduled to run first. This technique might be helpful in systems where some jobs, like real-time tasks, call for a higher priority.

Shortest job first (SJF) scheduling: This algorithm schedules tasks according to how long they are expected to take. The shortest job runs first, then the next shortest, and so on. This technique can be helpful in systems with many short processes, since it reduces the average waiting time.

Fair-share scheduling: In this technique, the number of processors and the priority of each process determine how much time is allotted to each. As it ensures that each process receives a fair share of processing time, this technique might be helpful in systems with a mix of long and short processes.

Earliest deadline first (EDF) scheduling: Each process in this algorithm is given a deadline, and the process with the earliest deadline is the one that will execute first. In systems with real-time activities that have stringent deadlines, this approach can be helpful.

Multilevel feedback queue (MLFQ) scheduling: Processes are assigned to one of several priority levels and can move up or down between levels based on their behavior. This strategy might be useful in systems with a mix of short and long processes.
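Priority, SJF, and EDF scheduling differ mainly in the key used to order the ready processes, which a small simulation can make concrete. The sketch below makes several simplifying assumptions: the processors are identical, all processes are ready at time 0, execution is non-preemptive, and a lower priority number means more urgent. The names `Proc`, `POLICIES`, and `schedule` are hypothetical.

```python
import heapq
from dataclasses import dataclass

@dataclass
class Proc:
    name: str
    burst: int      # CPU time the process needs
    priority: int   # lower number = more urgent (assumption of this sketch)
    deadline: int   # absolute deadline, used only by EDF

# Each policy is just a different sort key over the ready processes.
POLICIES = {
    "priority": lambda p: p.priority,
    "sjf": lambda p: p.burst,
    "edf": lambda p: p.deadline,
}

def schedule(procs, num_cpus, policy):
    """Return a list of (name, cpu, start, finish) tuples for the chosen policy."""
    key = POLICIES[policy]
    order = sorted(procs, key=lambda p: (key(p), p.name))
    # Min-heap of (time the CPU becomes free, cpu id).
    cpus = [(0, cpu_id) for cpu_id in range(num_cpus)]
    heapq.heapify(cpus)
    timeline = []
    for p in order:
        free_at, cpu_id = heapq.heappop(cpus)   # earliest available CPU
        finish = free_at + p.burst
        timeline.append((p.name, cpu_id, free_at, finish))
        heapq.heappush(cpus, (finish, cpu_id))
    return timeline

procs = [
    Proc("A", burst=6, priority=2, deadline=20),
    Proc("B", burst=2, priority=3, deadline=5),
    Proc("C", burst=4, priority=1, deadline=12),
    Proc("D", burst=1, priority=4, deadline=30),
]

for policy in POLICIES:
    print(policy, [name for name, *_ in schedule(procs, 2, policy)])
# priority ['C', 'A', 'B', 'D']
# sjf ['D', 'B', 'C', 'A']
# edf ['B', 'C', 'A', 'D']
```

The same four processes are dispatched in a different order under each policy, while the mechanism that places them on the next free CPU stays the same.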

Use Cases of Multiple Processors Scheduling in Operating System

A few use cases of multiple processor scheduling in operating systems are:

High-Performance Computing: Multiple processor scheduling is crucial in high-performance computing (HPC) environments where large-scale scientific simulations, data analysis, or complex computations are performed. Efficient scheduling of processes across multiple processors enables parallel execution, leading to faster computation times and increased overall system performance.

Server Virtualization: In virtualized environments, where multiple virtual machines (VMs) run on a single physical server with multiple processors, effective scheduling ensures fair allocation of resources to VMs. It enables optimal utilization of processing power while maintaining performance isolation and ensuring that each VM receives its allocated share of CPU time.

Real-Time Systems: Real-time systems, such as those used in aerospace, defense, and industrial automation, have strict timing requirements. Scheduling algorithms such as Earliest Deadline First (EDF) give precedence to critical tasks with the nearest deadlines, which helps the system respond in time and meet its timing constraints.

Multimedia Processing: Multimedia applications, such as video rendering or audio processing, often require significant computational power. Scheduling processes across multiple processors allows for parallel execution of multimedia tasks, enabling faster processing and smooth real-time performance.

Distributed Computing: In distributed computing systems, tasks are distributed across multiple processors or nodes for collaborative processing. Efficient scheduling algorithms ensure load balancing, fault tolerance, and effective resource utilization across the distributed infrastructure, improving overall system efficiency and scalability.

Cloud Computing: Cloud service providers employ multiple processors to serve numerous client requests simultaneously. Scheduling algorithms optimize the allocation of virtual machines and containers across the available processors, ensuring fairness, scalability, and efficient resource utilization in cloud computing environments.

Big Data Processing: Big data analytics involves processing and analyzing massive volumes of data. Multiple processor scheduling enables parallel execution of data processing tasks, such as data ingestion, transformation, and analysis, significantly reducing the time required for data processing and enabling real-time or near-real-time insights.

Scientific Simulations and Modeling: Numerical simulations and scientific modeling often require extensive computational resources. Multiple processor scheduling allows for the parallel execution of simulation tasks, reducing the time it takes to obtain results and enabling researchers to explore complex phenomena and perform more accurate simulations.

Gaming: In modern gaming systems, multiple processors are utilized to handle complex graphics rendering, physics simulations, and AI computations. Effective scheduling ensures smooth gameplay, minimizes lag, and maximizes the utilization of available processing power to deliver an immersive gaming experience.

Embedded Systems: Embedded systems with multiple processors, such as automotive systems, IoT devices, or robotics, require efficient scheduling to ensure a timely response, real-time control, and coordination of various tasks running on different processors. Scheduling algorithms prioritize critical tasks and manage resource allocation to meet system requirements.

Conclusion

Multiple processor scheduling is a key concept in operating systems and is essential for managing how processes are executed in systems with multiple processors. Due to the growing need for computing power, multiprocessor systems are becoming more widespread, and efficient scheduling techniques are needed to manage the allocation of system resources.