
Look-aside Buffer

What is a Look-aside Buffer?

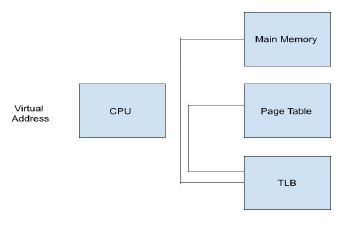

A Look-aside Buffer (LAB) is a type of cache memory that is used in computer systems to store frequently accessed data. The LAB is located between the CPU and the main memory, and it acts as a high-speed buffer to improve system performance.

The LAB works by caching the portion of main-memory data that the CPU accesses most frequently. When the CPU requests data from main memory, the LAB first checks whether the data is present in the buffer. If it is (a hit), the data is retrieved immediately and sent to the CPU, which takes far less time than fetching it from main memory. If it is not (a miss), the data is fetched from main memory and a copy is typically placed in the LAB for future accesses.
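The lookup described above can be sketched in Python. This is a minimal software model, not how hardware implements it: a dictionary stands in for main memory, the buffer is a small `OrderedDict`, and both the least-recently-used (LRU) replacement policy and the class name `LookAsideBuffer` are assumptions chosen for illustration.

```python
from collections import OrderedDict


class LookAsideBuffer:
    """Hypothetical model: a small LRU buffer consulted before a slower store."""

    def __init__(self, backing_store, capacity):
        self.backing_store = backing_store  # dict standing in for main memory
        self.capacity = capacity            # number of entries the buffer holds
        self.entries = OrderedDict()        # address -> cached value, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.entries:
            # Hit: serve from the buffer and mark the entry as most recently used.
            self.entries.move_to_end(address)
            self.hits += 1
            return self.entries[address]
        # Miss: fetch from the slower store, then keep a copy in the buffer.
        self.misses += 1
        value = self.backing_store[address]
        self.entries[address] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return value


memory = {addr: addr * 10 for addr in range(100)}
lab = LookAsideBuffer(memory, capacity=4)
for addr in [1, 2, 1, 1, 3, 2]:
    lab.read(addr)
print(lab.hits, lab.misses)  # 3 3
```

Repeated reads of addresses 1 and 2 are served from the buffer; only the first touch of each address goes to the backing store.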

Where is a Look-aside Buffer used?

A LAB is commonly used in computer systems where main memory, or the storage behind it such as a hard disk drive, is slow relative to the CPU, to improve the system's overall performance. It is also used in virtual memory systems, where it stores recently used address translations (page table entries) so that the page table does not have to be read on every memory access.

Translation Look-aside Buffer (TLB)

A Translation Look-aside Buffer (TLB) is a special type of cache that helps the processor translate virtual addresses into physical addresses quickly. It stores recently accessed page table entries, so that, given a virtual address, the processor can quickly retrieve the frame number and form the physical (real) address.

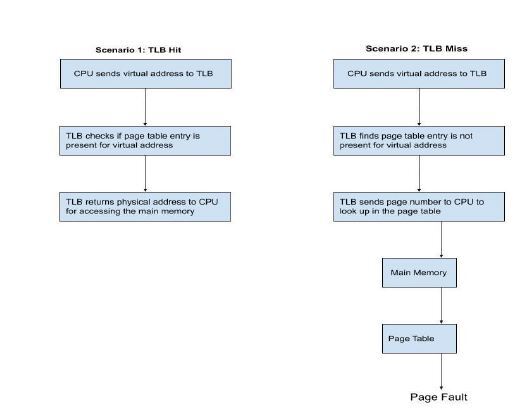

When the processor looks up a virtual address in the TLB, it either finds the page table entry (a TLB hit) or it does not (a TLB miss). On a hit, the processor retrieves the frame number and forms the physical address. On a miss, the page number is used as an index into the page table in main memory. The page table entry shows whether the required page is already in main memory; if it is not, a page fault occurs and the operating system loads the page. The TLB is then updated to include the new page entry.
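A minimal Python sketch of this translation flow follows. The 4 KiB page size, the LRU replacement in the TLB, the class name `SimpleMMU`, and the page-fault handler that simply hands out the next free frame are all hypothetical simplifications; a real MMU and operating system perform these steps in hardware and kernel code.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed page size (4 KiB)


class SimpleMMU:
    """Hypothetical model of TLB lookup, page-table walk, and page-fault handling."""

    def __init__(self, tlb_capacity):
        self.tlb = OrderedDict()   # page number -> frame number (LRU order)
        self.tlb_capacity = tlb_capacity
        self.page_table = {}       # page number -> frame number, resident pages only
        self.next_free_frame = 0
        self.tlb_hits = 0
        self.tlb_misses = 0
        self.page_faults = 0

    def _handle_page_fault(self, page):
        # Assumed handler: "load" the page into the next free frame.
        self.page_faults += 1
        frame = self.next_free_frame
        self.next_free_frame += 1
        self.page_table[page] = frame
        return frame

    def translate(self, virtual_address):
        page, offset = divmod(virtual_address, PAGE_SIZE)
        if page in self.tlb:
            # TLB hit: frame number comes straight from the TLB.
            self.tlb_hits += 1
            self.tlb.move_to_end(page)
            frame = self.tlb[page]
        else:
            # TLB miss: consult the page table, faulting if the page is not resident.
            self.tlb_misses += 1
            frame = self.page_table.get(page)
            if frame is None:
                frame = self._handle_page_fault(page)
            # Update the TLB with the new entry, evicting the LRU entry if full.
            self.tlb[page] = frame
            if len(self.tlb) > self.tlb_capacity:
                self.tlb.popitem(last=False)
        return frame * PAGE_SIZE + offset


mmu = SimpleMMU(tlb_capacity=2)
print(mmu.translate(5000))  # page 1, offset 904 -> frame 0 -> 904 (miss + page fault)
print(mmu.translate(5004))  # same page -> TLB hit -> 908
print(mmu.tlb_hits, mmu.tlb_misses, mmu.page_faults)  # 1 1 1
```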

The TLB is faster and smaller than main memory but cheaper and larger than the CPU registers. It reduces the effective memory access time (EMAT) by acting as a high-speed associative cache. The EMAT is calculated from the TLB hit ratio, the memory access time, and the TLB access time.

In Scenario 1, the TLB finds the page table entry for the virtual address in question and supplies the frame number, which is combined with the page offset to form the physical address the CPU uses to access main memory. This is a TLB hit.

In Scenario 2, the TLB does not find the page table entry for the virtual address in question, so the page number is used to look up the entry in the page table. If the page is not already in main memory, a page fault occurs and the page is loaded; the TLB is then updated with the new page entry. This is a TLB miss.

Locality of Reference

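- The TLB holds entries only for a small set of recently or frequently accessed pages.

- It relies on locality of reference: programs tend to access the same pages repeatedly over short periods, so a small TLB can satisfy most lookups.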
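To see why locality matters, the following sketch compares a sequential scan, which stays within each page for many accesses, with uniformly random addresses. It assumes 4 KiB pages and a 16-entry fully associative TLB with LRU replacement; the helper `tlb_hit_rate` is hypothetical.

```python
import random
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed page size in bytes
TLB_ENTRIES = 16  # assumed TLB capacity


def tlb_hit_rate(addresses, entries=TLB_ENTRIES):
    """Fraction of accesses whose page is already in an LRU-managed TLB."""
    tlb = OrderedDict()
    hits = 0
    for addr in addresses:
        page = addr // PAGE_SIZE
        if page in tlb:
            hits += 1
            tlb.move_to_end(page)
        else:
            tlb[page] = True
            if len(tlb) > entries:
                tlb.popitem(last=False)  # evict the least recently used page
    return hits / len(addresses)


# Sequential scan of 4-byte words: strong spatial locality.
sequential = [i * 4 for i in range(100_000)]
# Uniformly random addresses over 64 MiB: very little locality.
rng = random.Random(0)
scattered = [rng.randrange(64 * 1024 * 1024) for _ in range(100_000)]

print(f"sequential: {tlb_hit_rate(sequential):.4f}")  # 0.9990
print(f"scattered:  {tlb_hit_rate(scattered):.4f}")
```

Under these assumptions the sequential scan touches only 98 distinct pages in 100,000 accesses, so almost every lookup hits, while the random pattern spreads over 16,384 pages and almost always misses.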

OS Translation Look aside Buffer

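- The TLB uses tags and keys for mapping: each entry is tagged with a virtual page number, and the page number being looked up (the key) is compared against those tags to find the matching frame number.

- A TLB hit occurs when the desired entry is found in the TLB.

- On a TLB hit, the CPU can access the actual location in main memory directly.

- On a TLB miss, the CPU must first access the page table in main memory and then access the actual frame.

- The TLB hit rate affects the effective access time (EAT).

- EAT decreases as the TLB hit rate increases.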
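The tag comparison can be sketched as follows, assuming 32-bit virtual addresses with a 12-bit page offset (4 KiB pages). The `TLBEntry` and `lookup` names are hypothetical; real hardware compares all tags simultaneously, which the loop only models sequentially.

```python
from dataclasses import dataclass
from typing import Optional

OFFSET_BITS = 12  # assumed 4 KiB pages


@dataclass
class TLBEntry:
    tag: int            # virtual page number stored with the entry
    frame: int          # physical frame number
    valid: bool = True  # entry holds a usable translation


def lookup(entries: list[TLBEntry], virtual_address: int) -> Optional[int]:
    """Return the physical address on a TLB hit, or None on a TLB miss."""
    key = virtual_address >> OFFSET_BITS                 # virtual page number
    offset = virtual_address & ((1 << OFFSET_BITS) - 1)  # byte within the page
    for entry in entries:
        if entry.valid and entry.tag == key:
            # Hit: combine the frame number with the unchanged offset.
            return (entry.frame << OFFSET_BITS) | offset
    return None  # miss: the page table must be consulted


tlb = [TLBEntry(tag=0x12345, frame=0x00ABC), TLBEntry(tag=0x00001, frame=0x00007)]
print(hex(lookup(tlb, 0x12345678)))  # hit  -> 0xabc678
print(lookup(tlb, 0x00002010))       # miss -> None
```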

Drawbacks of Paging

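- The page table can be very large, wasting main memory.

- Without a TLB, the CPU needs more time to read a single word from main memory, because it must first read the page table entry and then the word itself (two memory accesses).

- The page table can be made smaller by increasing the page size, but larger pages cause more internal fragmentation and wasted space within pages.

- Multilevel paging increases the effective access time, because each additional level adds a memory access when translating an address.

- Storing the page table in registers can reduce access time, but registers are expensive and far too few to hold a large page table.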
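The trade-off between page size, page table size, and internal fragmentation can be quantified with a short calculation. The sketch assumes a 32-bit virtual address space, 4-byte page table entries, and the common rule of thumb that a process wastes on average half a page to internal fragmentation; the function names are illustrative.

```python
PTE_BYTES = 4      # assumed size of one page table entry
ADDRESS_BITS = 32  # assumed virtual address width
KIB = 1024


def page_table_bytes(address_bits: int, page_size: int, entry_bytes: int) -> int:
    """Size of a single-level page table with one entry per virtual page."""
    num_pages = (1 << address_bits) // page_size
    return num_pages * entry_bytes


def avg_internal_fragmentation(page_size: int) -> float:
    """Rule of thumb: on average, half of a process's last page is unused."""
    return page_size / 2


for page_size in (4 * KIB, 64 * KIB, 4 * KIB * KIB):
    table = page_table_bytes(ADDRESS_BITS, page_size, PTE_BYTES)
    waste = avg_internal_fragmentation(page_size)
    print(f"page {page_size // KIB:>5} KiB -> table {table // KIB:>5} KiB, "
          f"avg waste {waste / KIB:.0f} KiB per process")
```

Under these assumptions, moving from 4 KiB to 4 MiB pages shrinks each process's page table from 4 MiB to 4 KiB but raises the expected internal fragmentation from 2 KiB to 2 MiB.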

Access Times with TLB Hits and Misses

Effective Access Time (EAT)

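The TLB reduces the effective memory access time because it is a high-speed associative cache: on a hit, the page table in main memory does not need to be accessed at all.

EAT formula: EAT = P(t + m) + (1 - P)(t + k·m + m)

where P = TLB hit rate, t = time to access the TLB, m = time to access main memory, and k = number of page-table levels (k = 1 for single-level paging). On a hit, the cost is one TLB lookup plus one memory access; on a miss, the k page-table accesses are added.

- Increasing the TLB hit rate decreases EAT.

- Multilevel paging (k > 1) increases EAT, because every TLB miss costs k page-table accesses instead of one.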
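The formula can be evaluated directly. In this sketch the timings t = 20 ns and m = 100 ns are assumed example values, not measurements, and `effective_access_time` is an illustrative name.

```python
def effective_access_time(p: float, t: float, m: float, k: int = 1) -> float:
    """EAT = P(t + m) + (1 - P)(t + k*m + m).

    p: TLB hit rate (0..1), t: TLB access time, m: main-memory access time,
    k: number of page-table levels (k = 1 for single-level paging).
    """
    hit_time = t + m           # TLB lookup, then the data access
    miss_time = t + k * m + m  # TLB lookup, k page-table accesses, then the data access
    return p * hit_time + (1 - p) * miss_time


for p in (0.80, 0.98):
    for k in (1, 2):
        eat = effective_access_time(p, t=20, m=100, k=k)
        print(f"P={p:.2f}, k={k}: EAT = {eat:.1f} ns")
# P=0.80, k=1: EAT = 140.0 ns
# P=0.80, k=2: EAT = 160.0 ns
# P=0.98, k=1: EAT = 122.0 ns
# P=0.98, k=2: EAT = 124.0 ns
```

Raising the hit rate from 0.80 to 0.98 brings EAT close to the 120 ns hit cost, and a higher hit rate also shrinks the penalty of adding a second paging level.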

Conclusion

Paging is a memory management technique in operating systems that divides a process's logical address space into fixed-size pages and physical memory into frames of the same size. However, the page table can become very large, wasting memory, and every memory reference needs an extra access to read the page table. The Translation Look-aside Buffer (TLB) acts as a high-speed cache for frequently used page table entries, so that on a hit the page table does not need to be accessed, which reduces the effective access time. The higher the TLB hit rate, the lower the effective access time. The TLB is faster and smaller than main memory but cheaper and larger than the CPU registers. Overall, the TLB plays a crucial role in reducing memory access time and improving system performance.