How to Locate Elements using Selenium Python?

The way content is presented on the web changes frequently, so web pages and websites often need to be redesigned and their content restructured. Selenium with Python is a useful combination for extracting the desired content from web pages. Selenium is a free, open-source tool for automating web browsers, and it is widely used to test web applications across multiple platforms. Selenium test scripts can be written in a variety of programming languages, such as Java, C#, Python, JavaScript (Node.js), PHP and Perl. This article gives two examples of locating the elements of a web page with Selenium and Python. In both examples, Selenium loads a news website in Chrome and BeautifulSoup locates the required elements in the rendered page source.

Locating the elements to be extracted

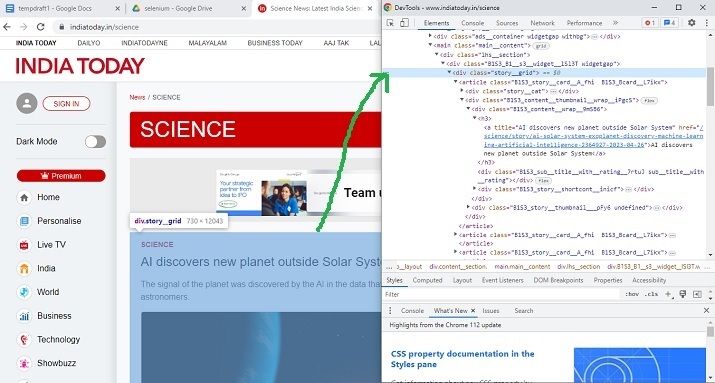

Open the website from which the content is to be extracted. Right-click on the page and choose Inspect to open the inspect window. Highlight the element or section of the page that you need and look at its HTML (the tag name and attributes such as class) in the inspect window. Use these details to locate the elements.
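The inspect window shows each element's tag name and its `class` attribute. A `class` attribute holds whitespace-separated class names, so a class-based locator matches an element when the requested class names appear among them. The sketch below illustrates that idea with Python's standard-library `html.parser` instead of BeautifulSoup or Selenium. The `ClassFinder` and `find_by_class` names and the sample HTML are hypothetical, chosen only for illustration.

```python
from html.parser import HTMLParser


class ClassFinder(HTMLParser):
    """Collect the text of every <tag> element whose class list
    contains all of the requested class names (hypothetical helper)."""

    def __init__(self, tag, classes):
        super().__init__()
        self.tag = tag
        self.wanted = set(classes.split())  # "entry-title title" -> two names
        self.depth = 0                      # > 0 while inside a matching element
        self.buffer = []
        self.results = []

    def handle_starttag(self, tag, attrs):
        if tag != self.tag:
            return
        if self.depth:
            self.depth += 1  # nested element with the same tag name
            return
        tokens = set((dict(attrs).get("class") or "").split())
        if self.wanted <= tokens:  # every requested class is present
            self.depth = 1
            self.buffer = []

    def handle_endtag(self, tag):
        if tag == self.tag and self.depth:
            self.depth -= 1
            if self.depth == 0:
                self.results.append("".join(self.buffer).strip())

    def handle_data(self, data):
        if self.depth:
            self.buffer.append(data)


def find_by_class(html, tag, classes):
    finder = ClassFinder(tag, classes)
    finder.feed(html)
    finder.close()
    return finder.results


sample = """
<div class="story__grid featured"><h2>Headline A</h2></div>
<div class="sidebar"><p>Ad</p></div>
<h4 class="entry-title title">Headline B</h4>
<h4 class="title">Not matched</h4>
"""
print(find_by_class(sample, "div", "story__grid"))       # ['Headline A']
print(find_by_class(sample, "h4", "entry-title title"))  # ['Headline B']
```

Matching by class names in any order corresponds to a CSS selector such as `h4.entry-title.title`. By contrast, BeautifulSoup's `find_all` compares a class value that contains a space against the whole attribute string, so there the order of the names matters.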

Example 1: Using Selenium with Python to Locate div Elements with a Specific Class Name

Algorithm

Step 1: Download the ChromeDriver version that matches your installed Chrome version and save it in the same folder as the Python file.

Step 2: Set START_URL = "https://www.indiatoday.in/science". Import BeautifulSoup for parsing. Use the class "story__grid" to locate the div elements.

Step 3: Specify the website URL and start the driver to load it.

Step 4: Parse the fetched page with BeautifulSoup.

Step 5: Search for the div tags with the required class.

Step 6: Extract the content, print it, and convert it into HTML by enclosing the extracted content within HTML tags.

Step 7: Write the output HTML file. Run the program, then open the output HTML file and check the result.
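Steps 6 and 7 wrap the extracted fragments in a minimal HTML page. The following is a sketch of that step as a standalone function, assuming the fragments are already strings of HTML. The names `build_page` and `write_page` are hypothetical; the stylesheet link, the marker paragraph and the output file name follow the example program.

```python
from pathlib import Path


def build_page(fragments, stylesheet="styles.css"):
    """Join extracted HTML fragments into one page (hypothetical helper)."""
    head = (
        "<html><head><meta charset='utf-8'>"
        f"<link rel='stylesheet' href='{stylesheet}'></head> <body>"
    )
    body = "\n<p> EXTRACTED CONTENT START </p>\n" + "".join(fragments)
    return head + body + "\n</body></html>"


def write_page(fragments, path="restructuredarticle.html"):
    """Write the page to disk as UTF-8 and return its text (hypothetical helper)."""
    page = build_page(fragments)
    Path(path).write_text(page, encoding="utf-8")
    return page


page = build_page(['<div class="story__grid">A</div>',
                   '<div class="story__grid">B</div>'])
print(page)
```

Keeping the page assembly separate from the scraping makes it easy to check the generated HTML without starting a browser.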

Example

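```python
import time

from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.chrome.service import Service

START_URL = "https://www.indiatoday.in/science"

# Selenium 4.10+ no longer accepts the driver path as a positional
# argument; pass it through a Service object instead.
driver = webdriver.Chrome(service=Service("./chromedriver"))
driver.get(START_URL)
time.sleep(10)  # give the page time to load its dynamic content


def scrape():
    temp_l = []
    soup = BeautifulSoup(driver.page_source, "html.parser")
    # attrs is a dict: attribute name -> required value
    for div_tag in soup.find_all("div", attrs={"class": "story__grid"}):
        temp_l.append(str(div_tag))
    print(temp_l)

    enclosing_start = ("<html><head><meta charset='utf-8'>"
                       "<link rel='stylesheet' href='styles.css'></head> <body>")
    enclosing_end = "</body></html>"

    # The with block closes the file automatically
    with open("restructuredarticle.html", "w", encoding="utf-8") as f:
        f.write(enclosing_start)
        f.write("\n<p> EXTRACTED CONTENT START </p>\n")
        for items in temp_l:
            f.write(items)
        f.write("\n" + enclosing_end)
    print("File written successfully")


scrape()
driver.quit()  # close the browser when done
```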

Output

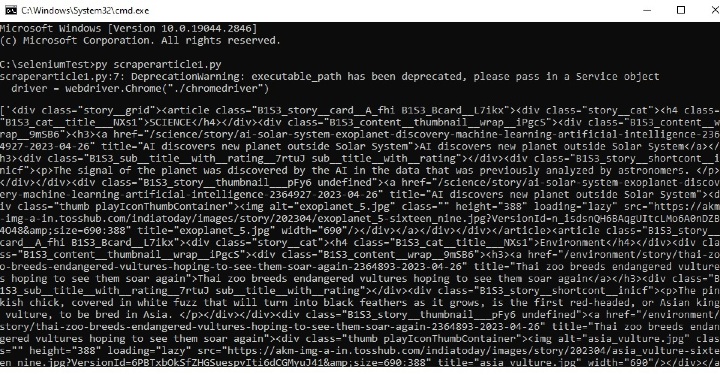

Run the Python file in the command window

Open the cmd window and run the program. First, check the output in the cmd window, then open the saved HTML file in a browser to see the extracted content.
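Both programs pause with a fixed `time.sleep(10)` before reading `driver.page_source`. Selenium also offers explicit waits (`WebDriverWait` combined with `expected_conditions`), which poll until a condition holds or a timeout expires. The sketch below shows only that polling idea, using a hypothetical `wait_until` helper in plain Python and a simulated page. In a real script, the predicate could check whether the target elements are present, for example with `driver.find_elements(By.CSS_SELECTOR, "div.story__grid")`.

```python
import time


def wait_until(predicate, timeout=10.0, interval=0.5):
    """Call predicate() until it returns a truthy value or the timeout expires.

    Returns the truthy value; raises TimeoutError otherwise.
    (Hypothetical helper illustrating an explicit wait.)
    """
    deadline = time.monotonic() + timeout
    while True:
        result = predicate()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(interval)


# Simulated page that "finishes loading" on the third check
checks = {"n": 0}


def page_ready():
    checks["n"] += 1
    return ["story"] if checks["n"] >= 3 else []


print(wait_until(page_ready, timeout=5, interval=0.01))  # ['story']
```

Unlike a fixed sleep, the loop returns as soon as the content is available and fails loudly if it never appears.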

Example 2: Using Selenium with Python to Locate h4 Elements with a Specific Class Name

Step 1: Download the ChromeDriver version that matches your installed Chrome version and save it in the same folder as the Python file.

Step 2: Set START_URL = "https://jamiatimes.in/". Import BeautifulSoup for parsing. Use the class "entry-title title" to locate the h4 elements.

Step 3: Specify the website URL and start the driver to load it.

Step 4: Parse the fetched page with BeautifulSoup.

Step 5: Search for the h4 tags with the required class.

Step 6: Extract the content, print it, and convert it into HTML by enclosing the extracted content within HTML tags.

Step 7: Write the output HTML file. Run the program, then open the output HTML file and check the result.
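In Step 2 the class value "entry-title title" is really two class names, `entry-title` and `title`. Selenium's `By.CLASS_NAME` locator expects a single class name, so elements with several classes are usually matched with a CSS selector instead. Both `driver.find_elements(By.CSS_SELECTOR, ...)` and BeautifulSoup's `soup.select(...)` accept such a selector. The hypothetical helper below builds one from a tag name and a class attribute value.

```python
def class_selector(tag, class_value):
    """Turn a tag and a class attribute value into a CSS selector.

    "entry-title title" -> "h4.entry-title.title" (hypothetical helper;
    assumes the class names need no CSS escaping).
    """
    classes = class_value.split()
    return tag + "".join("." + name for name in classes)


print(class_selector("h4", "entry-title title"))  # h4.entry-title.title
print(class_selector("div", "story__grid"))       # div.story__grid
```

A selector built this way matches the element regardless of the order in which its classes are listed, and also when it carries additional classes.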

Example

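```python
import time

from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.chrome.service import Service

START_URL = "https://jamiatimes.in/"

# Selenium 4.10+ expects the driver path wrapped in a Service object
driver = webdriver.Chrome(service=Service("./chromedriver"))
driver.get(START_URL)
time.sleep(10)  # wait for the page to finish loading


def scrape():
    temp_l = []
    soup = BeautifulSoup(driver.page_source, "html.parser")
    # A class value containing a space is compared with the whole class
    # attribute string, so the order "entry-title title" matters here
    for h4_tag in soup.find_all("h4", attrs={"class": "entry-title title"}):
        temp_l.append(str(h4_tag))

    enclosing_start = ("<html><head><meta charset='utf-8'>"
                       "<link rel='stylesheet' href='styles.css'></head> <body>")
    enclosing_end = "</body></html>"

    # The with block closes the file automatically
    with open("restructuredarticle2.html", "w", encoding="utf-8") as f:
        f.write(enclosing_start)
        f.write("\n<p> EXTRACTED CONTENT START </p>\n")
        for items in temp_l:
            f.write(items)
        f.write("\n" + enclosing_end)
    print("File written successfully")
    print(temp_l)


scrape()
driver.quit()  # close the browser when done
```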

Output

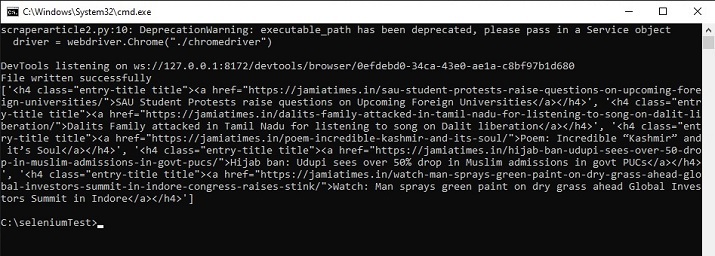

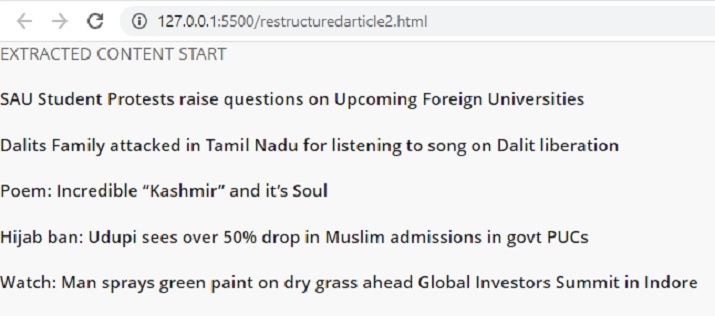

Run the Python file in the command window

Open the cmd window and run the program. First, check the output in the cmd window, then open the saved HTML file in a browser to see the extracted content.

This Python Selenium article gave two examples showing how to locate elements for scraping. In the first example, Selenium loads a news website, the div tags with the class "story__grid" are located, and their content is extracted. In the second example, the h4 tags with the class "entry-title title" are located and the required headings are extracted from another news website.