How to Implement an Autoencoder in PyTorch

An autoencoder is a neural network trained with unsupervised learning to produce an efficient representation (encoding) of its input, typically by learning to disregard signal "noise". Its output layer has the same number of dimensions as its input layer; in other words, the number of input units equals the number of output units. An autoencoder, also known as a replicator neural network, learns to copy its input to its output without using labels.

An autoencoder reconstructs each dimension of the input by passing it through the network. Copying the input with a neural network may seem trivial, but during the process the input is compressed into a smaller representation: the intermediate layers have fewer units than the input and output layers. The intermediate layers therefore hold a condensed version of the input, and the output is reconstructed from that condensed representation.

Components of Autoencoder

Encoder: the part of the model that learns to encode the input data and reduce its dimensionality.

Decoder: the part of the model that learns to rebuild the data from the encoded representation so that it is as close to the original input as possible.
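The two components can be illustrated on a toy scale before moving to images. The sketch below is only an illustration: the sizes (8 inputs, a 3-value code) and the variable names are hypothetical choices, and the network is untrained, so the reconstruction will not yet resemble the input.

```python
import torch

torch.manual_seed(0)  # make the random weights and inputs reproducible

# Hypothetical toy sizes, chosen only for illustration.
input_dim, latent_dim = 8, 3

# Encoder: compresses each 8-value input into a 3-value code.
encoder = torch.nn.Sequential(
    torch.nn.Linear(input_dim, latent_dim),
    torch.nn.ReLU(),
)
# Decoder: expands the 3-value code back to 8 values.
decoder = torch.nn.Linear(latent_dim, input_dim)

x = torch.rand(5, input_dim)  # a batch of 5 made-up inputs
code = encoder(x)             # condensed representation
x_hat = decoder(code)         # reconstruction with the same shape as x

print(x.shape, code.shape, x_hat.shape)
```

The input and the reconstruction have the same shape, while the code in the middle is smaller than both.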

Implementing Autoencoder in PyTorch

1. Installation

Torch: this Python package provides high-level tensor computation and deep neural networks built on an autograd framework.

Torchvision: this package includes a variety of datasets, model architectures, and image transformations for computer vision.

pip install torch

pip install torchvision

2. Importing the necessary modules and packages

We first import the required modules. We use the torch package (including torch.nn and torch.optim), datasets and transforms from the torchvision package, and matplotlib for plotting. In this article, we use the well-known MNIST dataset, which contains grayscale images of handwritten single digits from 0 to 9.

    import torch
    from torchvision import datasets
    from torchvision import transforms
    import matplotlib.pyplot as plt

3. Loading the dataset

In this step, we load the dataset and wrap it in a DataLoader. The ToTensor transform converts each downloaded image into a tensor, and the DataLoader stacks these tensors into shuffled batches that are ready for training.

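    # Convert each image to a tensor with values in [0, 1]
    tensor_transform = transforms.ToTensor()
    # Download the MNIST training set into ./data
    dataset = datasets.MNIST(root = "./data",
                             train = True,
                             download = True,
                             transform = tensor_transform)
    # Serve the dataset in shuffled batches of 64 images
    loader = torch.utils.data.DataLoader(dataset = dataset,
                                         batch_size = 64,
                                         shuffle = True)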
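To see what the loader hands to the training loop, it can help to inspect a single batch. The sketch below avoids the download by using random tensors as a stand-in for MNIST; the names fake_images, fake_labels, fake_dataset, and fake_loader are hypothetical, and the shapes are assumed to match what ToTensor() produces for 28x28 grayscale images.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Stand-in for MNIST (an assumption of this sketch): 256 random "images"
# with 1 channel and 28x28 pixels, values in [0, 1), plus random digit labels.
fake_images = torch.rand(256, 1, 28, 28)
fake_labels = torch.randint(0, 10, (256,))
fake_dataset = TensorDataset(fake_images, fake_labels)

fake_loader = DataLoader(fake_dataset, batch_size=64, shuffle=True)

# Pull one batch, as the training loop would.
images, labels = next(iter(fake_loader))
# Flatten each image into a 784-value vector for the Linear layers.
flat = images.reshape(-1, 28 * 28)

print(images.shape)      # batch of images: (batch, channel, height, width)
print(flat.shape)        # flattened batch: (batch, 784)
print(len(fake_loader))  # number of batches per epoch
```

With the real MNIST loader the batch shapes should be the same, but the number of batches per epoch will be much larger.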

4. Creating the Autoencoder class

The encoder gradually reduces the dimensionality of the data. It starts with a Linear layer that takes 28*28 = 784 inputs, followed by a ReLU layer, and continues with further Linear and ReLU layers until the dimensionality is reduced to 9 nodes. The decoder mirrors the encoder architecture and uses this 9-value representation to restore the original image. The decoder ends with a Sigmoid layer so that the output values lie between 0 and 1.

    class Autoenc(torch.nn.Module):
        def __init__(self):
            super().__init__()
            # Encoder: 784 -> 128 -> 64 -> 36 -> 18 -> 9
            self.encoder = torch.nn.Sequential(
                torch.nn.Linear(28 * 28, 128),
                torch.nn.ReLU(),
                torch.nn.Linear(128, 64),
                torch.nn.ReLU(),
                torch.nn.Linear(64, 36),
                torch.nn.ReLU(),
                torch.nn.Linear(36, 18),
                torch.nn.ReLU(),
                torch.nn.Linear(18, 9)
            )
            # Decoder: 9 -> 18 -> 36 -> 64 -> 128 -> 784, then Sigmoid
            self.decoder = torch.nn.Sequential(
                torch.nn.Linear(9, 18),
                torch.nn.ReLU(),
                torch.nn.Linear(18, 36),
                torch.nn.ReLU(),
                torch.nn.Linear(36, 64),
                torch.nn.ReLU(),
                torch.nn.Linear(64, 128),
                torch.nn.ReLU(),
                torch.nn.Linear(128, 28 * 28),
                torch.nn.Sigmoid()
            )
        def forward(self, x):
            encoded = self.encoder(x)
            decoded = self.decoder(encoded)
            return decoded
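A quick way to check an architecture like this is to push a random batch through it and look at the shapes. The sketch below rebuilds the same layer widths with a small helper so that it runs on its own; the helper name mlp and the variable names are hypothetical and are not part of the article's code.

```python
import torch

def mlp(sizes, final_activation=None):
    """Stack Linear layers with ReLU between them; sizes lists the layer widths."""
    layers = []
    for i in range(len(sizes) - 1):
        layers.append(torch.nn.Linear(sizes[i], sizes[i + 1]))
        if i < len(sizes) - 2:  # no ReLU after the last Linear layer
            layers.append(torch.nn.ReLU())
    if final_activation is not None:
        layers.append(final_activation)
    return torch.nn.Sequential(*layers)

widths = [28 * 28, 128, 64, 36, 18, 9]           # layer widths described above
encoder = mlp(widths)                            # 784 -> ... -> 9
decoder = mlp(widths[::-1], torch.nn.Sigmoid())  # 9 -> ... -> 784, then Sigmoid

batch = torch.rand(4, 28 * 28)  # 4 made-up flattened images
code = encoder(batch)
reconstruction = decoder(code)

# Count every trainable weight and bias in both halves.
n_params = sum(p.numel() for p in encoder.parameters())
n_params += sum(p.numel() for p in decoder.parameters())

print(code.shape, reconstruction.shape, n_params)
```

Each image is squeezed into 9 values and expanded back to 784, and the final Sigmoid keeps every reconstructed pixel between 0 and 1.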

5. Initializing the model

Model initialization means creating an instance of the model we want to use in the project, together with its loss function and optimizer. We train the model with the Adam optimizer, using a learning rate of 0.1 and a weight decay of 1e-8, and we use Mean Squared Error as the loss function. Note that 0.1 is a high learning rate for Adam, whose default is 0.001, so a smaller value may train more stably.

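    model = Autoenc()
    # Mean Squared Error between the reconstruction and the original
    loss_function = torch.nn.MSELoss()
    # weight_decay is 1e-8, as stated above (not 1e8)
    optimizer = torch.optim.Adam(model.parameters(),
                                 lr = 1e-1,
                                 weight_decay = 1e-8)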
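As a small worked example of the loss, Mean Squared Error is the average of the squared differences between the reconstruction and the original. The sketch below uses four made-up pixel values (hypothetical, chosen so the arithmetic is easy to follow) and compares a hand computation with torch.nn.MSELoss.

```python
import torch

# Made-up pixel values for one tiny "image" and its reconstruction.
original = torch.tensor([[0.0, 0.5, 1.0, 0.25]])
reconstructed = torch.tensor([[0.0, 1.0, 1.0, 0.75]])

# Differences are 0, 0.5, 0, 0.5; squares are 0, 0.25, 0, 0.25; mean is 0.125.
by_hand = ((reconstructed - original) ** 2).mean()
by_module = torch.nn.MSELoss()(reconstructed, original)

print(by_hand.item(), by_module.item())  # 0.125 0.125
```

A loss of 0 would mean that the reconstruction matches the original exactly.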

6. Result Generation

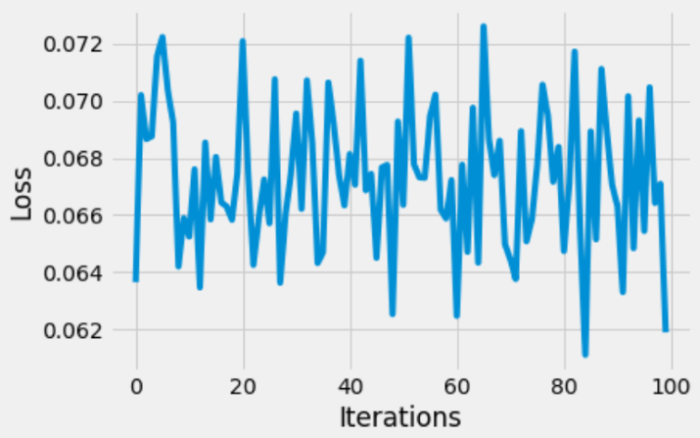

In each epoch, every batch is passed to the model to compute its output, and the final tensors are saved in an outputs list. The MSELoss function computes the loss, which is recorded so that it can be plotted.

optimizer.zero_grad() resets the gradients to zero, loss.backward() computes and stores the gradients, and optimizer.step() updates the model parameters. To plot the images, the original image and the reconstructed image are detached and converted into NumPy arrays.
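The three optimizer-related calls can be observed in isolation on a toy model. This is a minimal sketch: the single Linear layer is only a stand-in for the autoencoder, and the names toy_model, toy_optimizer, grad_norm, and weights_changed are hypothetical.

```python
import torch

torch.manual_seed(0)

toy_model = torch.nn.Linear(4, 4)  # toy stand-in for the autoencoder
toy_optimizer = torch.optim.Adam(toy_model.parameters(), lr=1e-1, weight_decay=1e-8)
loss_function = torch.nn.MSELoss()

x = torch.rand(16, 4)  # made-up batch; the target is the input itself
weights_before = toy_model.weight.detach().clone()

toy_optimizer.zero_grad()              # clear gradients left from any earlier step
loss = loss_function(toy_model(x), x)  # reconstruction error for this batch
loss.backward()                        # compute and store .grad on every parameter
grad_norm = toy_model.weight.grad.norm().item()
toy_optimizer.step()                   # update the parameters using those gradients

weights_changed = not torch.equal(weights_before, toy_model.weight.detach())
print(f"loss={loss.item():.4f} grad_norm={grad_norm:.4f} changed={weights_changed}")
```

The gradient only exists after backward(), and the weights only move after step(), which is why the order of the three calls matters.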

Example

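    epochs = 10
    outputs = []
    losses = []
    for epoch in range(epochs):
        for (image, _) in loader:
            # Flatten each 28x28 image into a 784-value vector
            image = image.reshape(-1, 28 * 28)
            reconstructed = model(image)
            loss = loss_function(reconstructed, image)
            # Reset gradients, backpropagate, and update the weights
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            # Store a plain float so the loss can be plotted
            losses.append(loss.item())
        # Keep the last batch of each epoch for later inspection
        outputs.append((epoch, image, reconstructed.detach()))
    plt.style.use('fivethirtyeight')
    plt.xlabel('Iterations')
    plt.ylabel('Loss')
    plt.plot(losses[-100:])
    plt.show()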

Output of plot

Loss function graph

7. Viewing the Input and Its Reconstruction

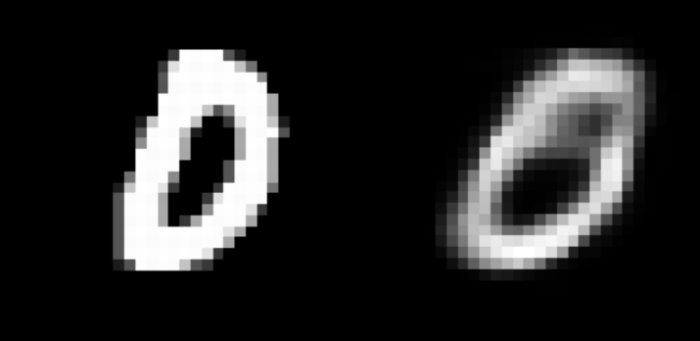

The first original input image and the first reconstructed image are plotted with plt.imshow.

Example

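    # Show the first original image (left) and its reconstruction (right)
    fig, axes = plt.subplots(1, 2)
    axes[0].imshow(image[0].reshape(28, 28).detach().numpy())
    axes[0].set_title('Original')
    # detach() is needed because the model output still tracks gradients
    axes[1].imshow(reconstructed[0].reshape(28, 28).detach().numpy())
    axes[1].set_title('Reconstructed')
    plt.show()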

Output

Model reconstructed image on the right

Conclusion

The reconstructed images look acceptable but are rather grainy. To improve the result, more layers or neurons could be added, or the autoencoder could be built on a convolutional neural network architecture. Autoencoders are useful for dimensionality reduction, and they can also be used to denoise data and to learn about the distribution of a dataset. This article covered the fundamental idea behind an autoencoder and how to implement, train, and evaluate one in PyTorch.
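As a rough illustration of the convolutional option mentioned above, the sketch below replaces the Linear layers with strided convolutions and transposed convolutions. The class name ConvAutoenc, the channel counts, and the kernel settings are hypothetical choices for illustration; they are not tuned and are not taken from the article.

```python
import torch

class ConvAutoenc(torch.nn.Module):
    """Hypothetical convolutional variant; layer sizes are illustrative only."""

    def __init__(self):
        super().__init__()
        self.encoder = torch.nn.Sequential(
            torch.nn.Conv2d(1, 8, kernel_size=3, stride=2, padding=1),  # 28x28 -> 14x14
            torch.nn.ReLU(),
            torch.nn.Conv2d(8, 4, kernel_size=3, stride=2, padding=1),  # 14x14 -> 7x7
            torch.nn.ReLU(),
        )
        self.decoder = torch.nn.Sequential(
            torch.nn.ConvTranspose2d(4, 8, kernel_size=3, stride=2,
                                     padding=1, output_padding=1),      # 7x7 -> 14x14
            torch.nn.ReLU(),
            torch.nn.ConvTranspose2d(8, 1, kernel_size=3, stride=2,
                                     padding=1, output_padding=1),      # 14x14 -> 28x28
            torch.nn.Sigmoid(),  # keep pixel values between 0 and 1
        )

    def forward(self, x):
        return self.decoder(self.encoder(x))

conv_model = ConvAutoenc()
images = torch.rand(8, 1, 28, 28)   # made-up batch; images are NOT flattened here
code = conv_model.encoder(images)   # 4 channels of 7x7 = 196 values per image
output = conv_model(images)

print(code.shape, output.shape)
```

Unlike the fully connected model, this variant takes the image batch in its original (batch, 1, 28, 28) shape, so the reshape step in the training loop would be dropped.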